Author: Ben Martin

System administrators need to keep an eye on their servers to make sure things are running smoothly. If they find a problem, they need to see when it started, so investigations can focus on what happened at that time. That means logging information at regular intervals and having a quick way to analyse this data. Here’s a look at several tools that let you monitor one or more servers from a Web interface.

Each tool has a slightly different focus, so we’ll take them all for a spin to give you an idea of which you might like to install on your machine. The language and design they use to perform statistics logging can have an impact on efficiency. For example, collectd is written in C and runs as a daemon, so it does not have to create any new processes in order to gather system information. Other collection programs might be written in Perl and be regularly spawned from cron. While your disk cache will likely contain the Perl interpreter and any Perl modules used by the collection program, the system will still need to spawn one or more new processes at every interval to gather the system information.

RRDtool

The tools we’ll look at often build on other tools themselves, such as RRDtool, which stores time series data and graphs it.

The RRDtool project focuses on making storing new time series data have a low impact on the disk subsystem. You might at first think this is no big deal. If you are just capturing a few values every 5-10 seconds, appending them to the end of a file shouldn’t even be noticeable on a server. Then you may start monitoring the CPU (load, nice values, each core — maybe as many as 16 individual values), memory (swap, cache sizes — perhaps another five), the free space on your disks (20 values?) and a collection of metrics from your UPS (perhaps the most interesting 10 values). Even without considering network traffic, you can be logging 50 values from your system every 10 seconds.
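To put rough numbers on that tally, the sketch below adds up the example counts from the paragraph above and works out how many individual samples accumulate per day at a 10-second interval. The per-category counts are the illustrative guesses from the text, and the function name is made up for this example.

```shell
#!/bin/sh
# Back-of-envelope tally using the example counts from the text.
# These counts are illustrative guesses, not measurements.

cpu=16; memory=5; disks=20; ups=10
values=$((cpu + memory + disks + ups))

# samples_per_day VALUES INTERVAL_SECONDS
# Prints how many individual samples are logged in 24 hours.
samples_per_day() {
    echo $(( $1 * 86400 / $2 ))
}

echo "values per interval: $values"
echo "samples per day at 10 s: $(samples_per_day "$values" 10)"
```

Even this modest setup produces several hundred thousand samples a day, which is why the way they reach the disk matters.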

RRDtool is designed to write these values to disk in 4KB blocks instead of as little 4- or 8-byte writes, so the system does not have to perform work every logging interval. If another tool wants all the data, it can issue a flush, ensuring that all the data RRDtool might be caching is stored to disk. RRDtool is also smart about telling the Linux kernel what caching policy to use on its files. Since data is written 4KB at a time, it doesn’t make sense to keep it around in caches, as it will be needed again only if you perform analysis, perhaps using the RRDtool graph command.
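For readers who have not used RRDtool directly, here is a minimal sketch of storing one value and graphing it. The data source name `load`, the sample value, the temporary file locations, and the `rra_rows` helper are all assumptions made for illustration. The rrdtool commands run only if rrdtool is installed.

```shell
#!/bin/sh
# Sketch: one 10-second gauge stored in an RRD and graphed for the last hour.

# rra_rows DAYS STEP_SECONDS
# Prints how many rows an archive needs to hold DAYS of data at STEP_SECONDS.
rra_rows() {
    echo $(( $1 * 86400 / $2 ))
}

rows=$(rra_rows 1 10)

if command -v rrdtool >/dev/null 2>&1; then
    dir=$(mktemp -d)
    # One data source named "load", sampled every 10 s, heartbeat 20 s.
    rrdtool create "$dir/load.rrd" --step 10 \
        DS:load:GAUGE:20:0:U \
        "RRA:AVERAGE:0.5:1:$rows"
    # N means "now"; 0.42 is a made-up sample value.
    rrdtool update "$dir/load.rrd" N:0.42
    rrdtool graph "$dir/load.png" --start -1h \
        "DEF:l=$dir/load.rrd:load:AVERAGE" \
        "LINE1:l#0000FF:load" >/dev/null
    echo "wrote $dir/load.png"
else
    echo "rrdtool not installed; archive would need $rows rows"
fi
```

Because the archive has a fixed number of rows, the file never grows after creation. Old samples are overwritten in round-robin fashion.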

As system monitoring tools write to files often, you might like to optimize where those files are stored. On conventional spinning disks, seeks are more expensive than sequentially reading many blocks, so when the Linux kernel needs to read a block it also reads some of the subsequent blocks into cache just in case the application wants them later. Because RRDtool files are written often, and usually only in small chunks the size of single disk blocks, you might do better reducing readahead for the device you are storing your RRD files on. You can minimize the block readahead value for a device to two disk blocks with the blockdev program from util-linux-ng with a command like blockdev --setra 16 /dev/sdX (the value is given in 512-byte sectors, so 16 sectors is two 4KB blocks). Turning off atime updates and using writeback mode for the filesystem and RAID will also help performance. RRDtool provides advice for tuning your system for RRD.
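The sector arithmetic behind that --setra value can be sketched as follows. The `ra_sectors` helper and the /var/lib/collectd mount point are assumptions for illustration, and /dev/sdX is the placeholder from the text. The commands are printed rather than executed, since they need root and a real device.

```shell
#!/bin/sh
# Sketch: work out the --setra argument, which blockdev expects in 512-byte sectors.

# ra_sectors BLOCKS BLOCK_BYTES
# Prints the number of 512-byte sectors covering BLOCKS blocks of BLOCK_BYTES each.
ra_sectors() {
    echo $(( $1 * $2 / 512 ))
}

dev=/dev/sdX                 # placeholder from the text; substitute your own device
mnt=/var/lib/collectd        # hypothetical mount point holding the RRD files
sectors=$(ra_sectors 2 4096)

# Printed instead of run: these need root and a real device.
echo "blockdev --getra $dev"
echo "blockdev --setra $sectors $dev"
echo "mount -o remount,noatime $mnt"
```

Checking the current value with --getra first makes it easy to restore the original setting if the change does not help.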

collectd

The collectd project is designed for repeatedly collecting information about your systems. While the tarball includes a Web interface to analyse this information, the project says this interface is a minimal example and cites other projects such as Cacti for those who are looking for a Web interface to the information gathered by collectd.

There are collectd packages for Debian Etch and Fedora 9, and a 1-Click install for openSUSE. The application is written in C and is designed to run as a daemon, making it a low-overhead application that is capable of logging information at short intervals without significant impact on the system.

When you are installing collectd packages you might like to investigate the plugin packages too. One of the major strengths of collectd is support through plugins for monitoring a wide variety of information about systems, such as databases, UPSes, general system parameters, and NFS and other server performance. Unfortunately the plugins pose a challenge to the packagers. For openSUSE you can simply install the plugins-all package. The version (4.4.x) packaged for Fedora 9 is too old to include the PostgreSQL plugin. The Network UPS Tools (NUT) plugin is not packaged for Debian, openSUSE, or Fedora.

The simplest way to resolve this for now is to build collectd from source, configuring exactly the plugins that you are interested in. Some of the plugins that are not commonly packaged at the time of writing but that you may wish to use include NUT, netlink, postgresql, and iptables. Installation of collectd follows the normal ./configure; make; sudo make install process, but your configure line will likely be very long if you specify which plugins you want compiled. The installation procedure and plugins I selected are shown in the command block below. I used the init.d startup file provided in the contrib directory and had to change a few of the paths because of the private prefix I used to keep the collectd installation under a single directory tree. Note that I also had to build a private copy of iproute2 in order to get the libnetlink library on Fedora 9.

    $ cd ./collectd-4.5.1
    $ ./configure --prefix=/usr/local/collectd \
        --with-perl-bindings=INSTALLDIRS=vendor \
        --without-libiptc --disable-ascent --disable-static \
        --enable-postgresql --enable-mysql --enable-sensors \
        --enable-email --enable-apache --enable-perl \
        --enable-unixsock --enable-ipmi --enable-cpu \
        --enable-nut --enable-xmms --enable-notify_email \
        --enable-notify_desktop --disable-ipmi \
        --with-libnetlink=/usr/local/collectd/iproute2-2.6.26
    $ make
    ...
    $ sudo make install
    $ su
    # install -m 700 contrib/fedora/init.d-collectd /etc/init.d/collectd
    # vi /etc/init.d/collectd
    ...
    CONFIG=/usr/local/collectd/etc/collectd.conf
    ...
    daemon /usr/local/collectd/sbin/collectd -C "$CONFIG"
    # chkconfig collectd on
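Since the configure line gets long quickly, one way to keep it manageable is to build the --enable flags from a short plugin list. This is only a sketch: the `enable_flags` helper is hypothetical and the plugin list is a subset of the one above. The resulting command is printed rather than run.

```shell
#!/bin/sh
# Sketch: assemble a long ./configure line from a short plugin list.

plugins="postgresql mysql sensors email apache perl unixsock cpu nut"

# enable_flags PLUGIN...
# Prints one --enable-NAME flag per argument, separated by spaces.
enable_flags() {
    flags=""
    for p in "$@"; do
        flags="$flags --enable-$p"
    done
    # Drop the leading space left by the first iteration.
    echo "${flags# }"
}

# Printed rather than executed; run the result from the unpacked source tree.
# $plugins is left unquoted on purpose so it splits into separate words.
echo "./configure --prefix=/usr/local/collectd $(enable_flags $plugins)"
```

Keeping the list in one variable also makes it easy to record which plugins a given build was configured with.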

If more of the optional collectd plugins are packaged in the future, you may be able to install your desired build of collectd without having to resort to building from source.

Before you start collectd, take a look at etc/collectd.conf and make sure you like the list of plugins that are enabled and their options. The configuration file defines a handful of global options followed by some LoadPlugin lines that nominate which plugins you want collectd to use. Configuration of each plugin is done inside a <Plugin foo>...</Plugin> scope. You should also check in your configuration file that the rrdtool plugin is enabled and that the DataDir parameter is set to a directory that exists and which you are happy to have variable data stored in.
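The two checks described above can be scripted. The sketch below assumes the configuration uses the quoted `DataDir "..."` form and the private prefix path used in this article. The function names are made up for illustration.

```shell
#!/bin/sh
# Sketch: sanity-check a collectd.conf before starting the daemon.
# Expected layout (collectd's own syntax):
#   LoadPlugin rrdtool
#   <Plugin rrdtool>
#     DataDir "/var/lib/collectd/rrd"
#   </Plugin>

# rrdtool_plugin_enabled FILE
# Succeeds if FILE has an uncommented "LoadPlugin rrdtool" line.
rrdtool_plugin_enabled() {
    grep -Eq '^[[:space:]]*LoadPlugin[[:space:]]+"?rrdtool"?[[:space:]]*$' "$1"
}

# collectd_datadir FILE
# Prints the first uncommented, quoted DataDir value without its quotes.
collectd_datadir() {
    sed -n 's/^[[:space:]]*DataDir[[:space:]][[:space:]]*"\([^"]*\)".*$/\1/p' "$1" | head -n 1
}

# check_collectd_conf FILE
# Reports whether the rrdtool plugin is loaded and DataDir exists.
check_collectd_conf() {
    rrdtool_plugin_enabled "$1" || { echo "rrdtool plugin not loaded"; return 1; }
    dir=$(collectd_datadir "$1")
    [ -d "$dir" ] || { echo "DataDir missing: $dir"; return 1; }
    echo "ok: $dir"
}

# Path from the private prefix used in this article; adjust to your install.
conf=/usr/local/collectd/etc/collectd.conf
if [ -r "$conf" ]; then
    check_collectd_conf "$conf" || echo "review $conf before starting collectd"
fi
```

Commented-out lines are ignored by both patterns, so a plugin that is listed but disabled is reported as not loaded.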

Once you have had a brief look at the plugins that are enabled and their options, you can start collectd by running service collectd start as root.

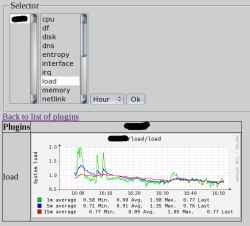

To see the information collectd gathers you have to install the Web interface or another project such as Cacti. The steps below should install the basic CGI script that is supplied with collectd. The screenshot shows the script in action.

    # yum install rrdtool-perl
    # cp contrib/collection.conf /etc/
    # vi /etc/collection.conf
    datadir: "/var/lib/collectd/rrd/"
    libdir: "/usr/local/collectd/lib/collectd/"
    # cp collection.cgi /var/www/cgi-bin/
    # chgrp apache /var/www/cgi-bin/collection.cgi

If you want to view your collectd data on a KDE desktop, check out kcollectd, a young but already useful project. You can also integrate the generated RRDtool files with Cacti, though the setup process is long-winded and has to be repeated for each graph.

With collectd the emphasis is squarely on monitoring your systems, and the provided Web interface is offered purely as an example that might be of interest. Being a daemon written in C, collectd can also run with minimal overhead on the system.

Cacti

While collectd emphasizes data collection, Cacti is oriented toward providing a nice Web front end to your system information. Whereas collectd runs as a daemon and collects its information every 10 seconds without spawning processes, Cacti runs a PHP script every five minutes to collect information. (These time intervals for the two projects are the defaults and are both user configurable.) The difference in default values gives an indication of how frequently each project thinks system information should be gathered.

Cacti is packaged for Debian Etch, Fedora 9, and openSUSE 11. I used the Fedora packages on a 64-bit Fedora 9 machine.

Once you have installed Cacti, you might get the following error when you try to visit http://localhost/cacti if your packages have not set up a database for you. The Cacti Web site has detailed instructions to help you set up your MySQL database and configure Cacti to connect.

FATAL: Cannot connect to MySQL server on 'localhost'. Please make sure you have specified a valid MySQL database name in 'include/config.php'

When you first connect to your Cacti installation in a Web browser you are presented with a wizard to complete the configuration. Cacti presents you with the paths to various tools, SNMP settings, and the PHP binary, and asks which version of rrdtool you have. Although Cacti found my rrdtool, I still had to tell it explicitly the version of rrdtool I had. While this information was easy to supply, a button on the wizard offering to execute rrdtool and figure it out from the --version string would have been a plus.

After the paths and versions are collected Cacti will ask you to log in using the default username and password. When you log in you immediately have to change the admin user’s password.

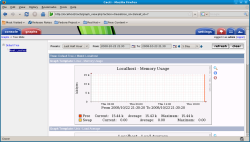

The first screen you are taken to is the console tab. In the main section of the window you are offered three options: create devices, create graphs, and view your graphs. This might lead you to believe that there are no graphs already created. If you click on the graphs tab, however, you should see that you already have a small collection of graphs: Memory Usage, Load Average, Logged in Users, and Processes. The graphs view is shown in the accompanying screenshot.

Additional information-gathering scripts available for Cacti let you expand what information Cacti can monitor. For example, they let you collect the load and input and output voltage of UPS devices.

Most of these projects’ Web interfaces allow you to view your statistics using predefined time intervals such as hour, day, and week. Cacti goes a big step further and allows you to specify the exact interval that you are interested in through the Web interface.

Cacti offers the most functional and polished Web interface among these projects. It has more predefined time intervals to choose from than the others, and it is the only one of the tools that lets you nominate an explicit start and end time for a graph.

Monitorix

Monitorix shows you system information at a glance in three graphs: a central one on the left to give overview information and two smaller graphs on the right to give related details. It includes a Perl daemon that collects the statistics for your systems and a CGI Web interface that allows you to analyse the data.

There are no Monitorix packages in the Fedora, openSUSE, or Debian repositories. The Monitorix download page offers a noarch RPM file as well as a tarball for non-Red Hat/Fedora-based distributions. I used version 1.2.1 on a 64-bit Fedora 9 machine, installed from the noarch RPM file. If you are installing on a Debian-based system, in the monitorix-1.2.1 tarball there is an install.sh file that will copy the files to the correct path for your distribution, and an un_install.sh file, should you decide to remove Monitorix. You need to install Perl bindings for RRDtool (rrdtool-perl on Fedora 9, librrds-perl on Debian, and rrdtool on openSUSE 11) in order to use Monitorix.

Once you have the files installed in the right place, either by installing the RPM file or running install.sh, you can start collecting information by running service monitorix start. You should also be able to visit http://localhost/monitorix/ and be offered a collection of graphs to choose from (or just nominate to see them all).

Monitorix doesn’t include a plugin system but has built-in support for monitoring CPU, processes, memory, context switches, temperatures, fan speeds, disk I/O, network traffic, demand on services such as POP3 and HTTP, interrupt activity, and the number of users attached to SSH and Samba. A screenshot of Monitorix displaying daily graphs is shown below. There are 10 main graph panels, even though you can see only about 1.5 here.

You can configure Monitorix by editing the /etc/monitorix.conf file, which is actually a Perl script. The MNT_LIST option allows you to specify as many as seven filesystems to monitor. The REFRESH_RATE setting sets how many seconds before a Web browser should automatically refresh its contents when viewing the Monitorix graphs. You can also use Monitorix to monitor many machines by setting MULTIHOST="Y" and listing the servers you would like to contact in the SERV_LIST setting, as shown below. Alternatively you can list entire subnets to monitor using the PC_LIST and PC_IP options, examples of which are included in the sample monitorix.conf file.

    MULTIHOST="Y"
    our @SERV_LIST=("server number one", "http://192.168.1.10",
                    "server number two", "http://192.168.1.11");

Having each graph panel made up of a main graph on the left and two smaller graphs on the right allows Monitorix to convey a fair amount of related information in a compact space. In the screenshot, the load is shown in the larger graph on the left, with the number of active processes and memory allocation in the two smaller graphs on the right. Scrolling down, one of the graph panels shows network services demand. This panel has many services shown in the main graph and POP3 and WWW as smaller graphs on the right. Unfortunately, the selection of POP3 seems to be hard-coded on line 2566 of monitorix.cgi where SERVU2 explicitly uses POP3, so if you want to monitor an IMAP mail service instead you are out of luck.

Monitorix is easy to install and get running, and its three-graphs-per-row aggregate presentation gives you a good high-level view of your system. Unfortunately some things are still hard-coded into the CGI script, so you have a somewhat limited ability to change the Web interface unless you want to start hacking on the script. The lack of packages in distribution repositories might also turn away many users.

Munin

The Munin project is fairly clearly split into the gather and analyse functionality. This lets you install just the package to gather information on many servers and have a single central server to analyse all the gathered information. Munin is also widely packaged, making setup and updates fairly simple.

Munin is written in Perl, ships with a collection of plugins, supports many versions of Unix operating systems, and has a searchable plugin site.

Munin is available in the Fedora 9 and Debian Etch repositories, and as a 1-Click install for openSUSE 11. Again, I used the version from the repository for a 64-bit Fedora 9 machine. The project provides two packages: the munin-node package includes all of the monitoring functionality, and the munin package gathers information from machines running munin-node and graphs it via a Web interface. If you have a network of machines, you probably want to install only munin-node on most of them and munin on one to perform analysis on all the collected data.

The main configuration file for munin-node, /etc/munin/munin-node.conf, lets you define where log files are kept, what user to run the monitoring daemon as, what address and port the daemon should bind to, and which hosts are allowed to connect to that address and port to download the collected data. In the default configuration only localhost is allowed to connect to a munin-node.
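To let a central Munin server poll a remote node, the node has to allow the server's address and the server has to know about the node. The sketch below prints both snippets. The addresses, the host name web1.example.com, and the helper function names are hypothetical. The `allow` value is a regular expression, which is why the dots are escaped. The file paths are the usual packaged locations, so check them against your distribution.

```shell
#!/bin/sh
# Sketch: the two config snippets that let a Munin master poll a remote node.

# allow_line IPV4
# Prints a munin-node.conf "allow" directive matching exactly that address.
allow_line() {
    printf 'allow ^%s$\n' "$(printf '%s' "$1" | sed 's/\./\\./g')"
}

# node_stanza NAME ADDRESS
# Prints a host section for the master's munin.conf.
node_stanza() {
    printf '[%s]\n    address %s\n    use_node_name yes\n' "$1" "$2"
}

# Hypothetical addresses: 192.168.1.5 is the master, 192.168.1.10 is a node.
echo "# add to /etc/munin/munin-node.conf on the node:"
allow_line 192.168.1.5
echo "# add to /etc/munin/munin.conf on the master:"
node_stanza web1.example.com 192.168.1.10
```

After editing munin-node.conf, restart the munin-node service so the new allow rule takes effect.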

You configure plugins through individual configuration files in /etc/munin/plugin-conf.d. Munin-node for Fedora is distributed with about three dozen plugins for monitoring a wide range of system and device information.

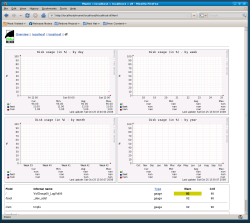

When you visit http://localhost/munin, Munin displays an overview page showing you links to all the nodes that it knows about, including links to specific features of the nodes, such as disk, network, NFS, and processes. Clicking on a node name shows you a two-column display. Each row shows a graph with the daily statistics on the left and weekly on the right. Clicking on either graph in a row takes you to a details page showing that data for the day, week, month, and year. At the bottom of the details page a short textual display gives more details about the data, including notification of irregular events. For example, in the screenshot of the details page for free disk space, you can see a warning that one of the filesystems has become quite full.

To get an idea of how well a system or service is running on a daily or weekly basis, the Munin display works well. Unfortunately, the Web interface does not allow you to drill into the data. For example, you might like to see a specific two-hour period from yesterday, but you can’t get that graph from Munin.

The plugins site for Munin is quite well done, allowing you to see an example graph for many of the plugins before downloading. A drawback to the plugins site is the search interface, which is very category-oriented. Some full text search would aid users in finding an appropriate plugin. For example, to find the NUT UPS monitoring plugin you have to select either Sensors or “ALL CATEGORIES” first; just being able to throw UPS or NUT into a text box would enable quick cherry-picking of plugins.

A major advantage of Munin is that it ships as separate packages for gathering and analysing information, so you don’t need to install a Web server on each node. The additional information at the bottom of the details page should also prod you if some statistic has a value that you should really pay attention to.

Final words

Of these four applications, Cacti offers the best Web interface. It has more predefined time intervals to choose from than the others, and it also lets you explicitly nominate the start and end time you are interested in. By contrast, in collectd, the emphasis is squarely on monitoring your systems, and the provided Web interface is offered purely as an example that might be of interest. Given that collecting and analysing data can be thought of as separate tasks, it would be wonderful if collectd and Cacti could play well together. Unfortunately, setting up Cacti to use collectd-generated files is a long, manual, error-prone process. While both Cacti and collectd are useful projects by themselves, I can’t help but think that the combination of the two would be greater than the sum of its parts. Monitorix and Munin are easy to install and offer a quick overview of a host, but Monitorix’s three-graphs-per-row aggregate presentation gives you a better high-level view of your system.

Which one might be best for you? If you spend a lot of time in data analysis or if you plan to allow non-administrators to get a glance of the system statistics, Cacti might be a good project to look into first. If you want to gather information on a system that is already under heavy load, see if collectd can be run without disrupting your system. Munin’s support for gathering information from many nodes using different application packages makes it interesting if you are monitoring a small group of similar machines. If you have a single server and want a quick overview of what is happening, either Cacti or Monitorix is worth checking out first.
