The ext4 filesystem has a number of scaling and performance issues, which Jan Kara, Linux kernel engineer at SUSE, addressed in his presentation at The Linux Foundation’s Vault storage conference last week.

Ext4 represents the latest evolution of the most-used Linux filesystem, ext3. In that regard, ext4 can be considered a deeper improvement over ext3 than ext3 was over ext2. (Ext3 was mostly about adding journaling to ext2.)

Ext4 modifies important filesystem data structures, such as inodes, and failing to pay attention to these structures can cause a variety of mostly minor problems as well as scaling challenges, Kara said. Still, these problems can affect performance, which by itself should raise their priority.

He and other ext4 developers have done some work to address these issues, including reducing contention in the handling of inodes, using a shrinker to relieve memory pressure, and more.

Dealing with inodes

Kara began with the orphan list, the list of inodes whose cleanup has not yet been completed. Much of ext4 revolves around inodes. In a filesystem, inodes take up roughly 1 percent of the total disk space, whether that space is a whole storage device or a partition on it. The inode space is used to track the files stored on the disk: each inode stores metadata about a file, directory, or other object. It does not, however, store the data itself; it only points to the blocks that hold it.

Unprocessed orphan inodes are usually cleaned up by unmounting and remounting the filesystem. This should be pretty straightforward: the filesystem simply maintains a list of inodes to track unlinked files that are still open and files with a truncate in progress. It only gets complicated after a crash, when there may be large numbers of orphaned inodes. In that case you just need to “find the orphans, finish the truncates, and remove unlinked inodes,” Kara explained.
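
To make those recovery steps concrete, here is a minimal C sketch of processing an orphan list after a crash. It is a hypothetical in-memory model, not ext4 code: `struct orphan_inode`, its fields, and `process_orphans()` are invented for illustration, and the real on-disk orphan list is a chain of inode numbers that starts in the superblock and continues through the orphan inodes themselves.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical in-memory model of one entry on the orphan list. */
struct orphan_inode {
    unsigned long ino;          /* inode number */
    unsigned int nlink;         /* 0 means the file was unlinked while open */
    unsigned long long i_size;  /* size the file should end up with */
    unsigned long long alloc;   /* bytes still allocated on disk */
    bool freed;                 /* set once the inode has been released */
    struct orphan_inode *next;  /* next orphan on the list */
};

/* Counters so a caller can see what recovery did. */
struct orphan_stats {
    unsigned int truncated;
    unsigned int deleted;
};

/*
 * Walk the orphan list after a crash:
 *  - unlinked inodes (nlink == 0) are removed entirely;
 *  - inodes with an interrupted truncate are trimmed back to i_size.
 * Every processed inode is detached, so the list ends up empty.
 */
struct orphan_stats process_orphans(struct orphan_inode **head)
{
    struct orphan_stats st = {0, 0};

    while (*head != NULL) {
        struct orphan_inode *inode = *head;

        if (inode->nlink == 0) {
            /* Unlinked but still open at crash time: free blocks and inode. */
            inode->alloc = 0;
            inode->freed = true;
            st.deleted++;
        } else if (inode->alloc > inode->i_size) {
            /* Truncate was interrupted: finish it. */
            inode->alloc = inode->i_size;
            st.truncated++;
        }
        *head = inode->next;  /* remove the inode from the orphan list */
        inode->next = NULL;
    }
    return st;
}
```

Because every processed inode is detached from the list, the loop always terminates with an empty orphan list, which is the state the filesystem needs before normal use resumes.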

The orphan list can become unwieldy, so Kara described some work he did with developer Thavatchai Makphaibulchoke. The problem is that the list handling is inherently unscalable, and fixing that fundamentally is impossible without disk format changes. “Still, you can do a few things,” he suggested:

- Remove unnecessary global lock acquisitions when an inode is already part of an orphan list (modifications of the inode itself are guarded by an inode-local lock).
- Make critical sections shorter.
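
Both suggestions can be illustrated with a small user-space sketch using POSIX threads. The `struct inode` type, the global `orphan_lock`, and `orphan_add()` are hypothetical names chosen for this example, not ext4's; the point is only the locking pattern: check the inode-local flag first and skip the global lock when the inode is already orphaned, and hold the global lock only for the list splice itself.

```c
#include <pthread.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical inode with only the fields this sketch needs. */
struct inode {
    pthread_mutex_t lock;       /* inode-local lock */
    bool on_orphan_list;        /* guarded by the inode-local lock */
    struct inode *orphan_next;  /* guarded by the global orphan_lock */
};

/* Global orphan list and its lock (one per filesystem in practice). */
static pthread_mutex_t orphan_lock = PTHREAD_MUTEX_INITIALIZER;
static struct inode *orphan_head = NULL;
static unsigned long global_lock_acquisitions = 0; /* for illustration only */

void orphan_add(struct inode *inode)
{
    pthread_mutex_lock(&inode->lock);
    if (inode->on_orphan_list) {
        /* Already orphaned: the inode-local lock is enough, so the
         * contended global lock is skipped entirely. */
        pthread_mutex_unlock(&inode->lock);
        return;
    }

    /* Any per-inode preparation would go here, outside the global
     * critical section, to keep that section as short as possible. */

    pthread_mutex_lock(&orphan_lock);
    global_lock_acquisitions++;
    inode->orphan_next = orphan_head;  /* only the list splice is global */
    orphan_head = inode;
    pthread_mutex_unlock(&orphan_lock);

    inode->on_orphan_list = true;
    pthread_mutex_unlock(&inode->lock);
}
```

The `global_lock_acquisitions` counter exists only to make the avoided lock round-trips visible; in a real filesystem the benefit would show up as less contention on the shared lock when many threads unlink or truncate files at once.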

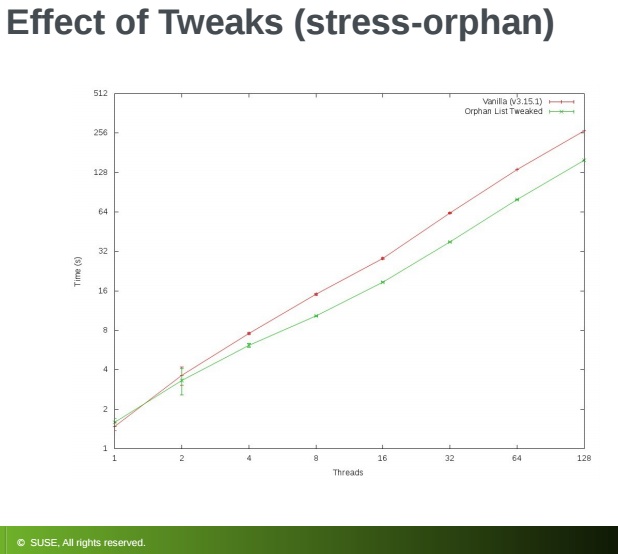

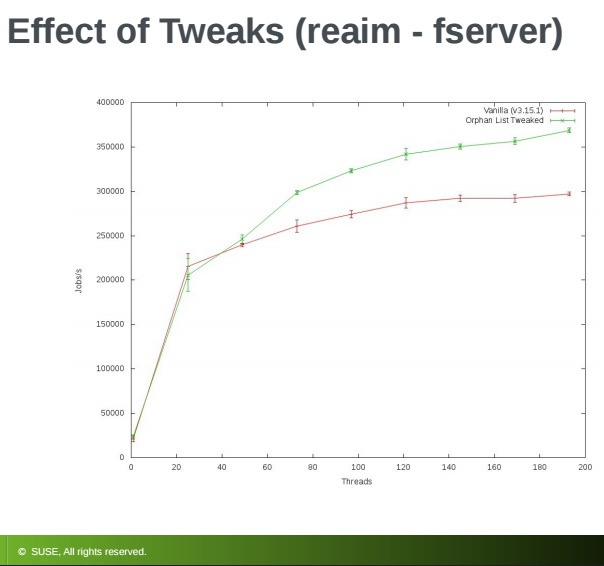

Kara made changes to the orphan list handling along the lines he recommended and measured their effect by running what he referred to as a stress-orphan micro-benchmark and a reAIM new fserver workload. The gains were clearly noticeable with the orphan stress test and even larger with the reAIM fserver test, which showed a measurable increase in jobs per second, he reported.

Kara went on to run some experiments with orphan file patches, which add a special system file that stores the inode numbers of orphan inodes. For this, “there is a real fix: improve jbd2 to reduce overhead, especially if the block is already in the correct state. You also can skip journaling a block if the block is part of a running transaction.”
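
The jbd2 improvement Kara described amounts to a fast path: if a block is already attached to the running transaction, there is no need to take journal locks or journal it again. This C sketch models that idea with hypothetical `struct journal`, `struct buffer`, and `get_write_access()` names; it is not the jbd2 implementation, which tracks far more buffer state.

```c
#include <pthread.h>
#include <stdatomic.h>

/* Hypothetical, heavily simplified journal state. */
struct journal {
    pthread_mutex_t lock;               /* serializes the slow path */
    _Atomic unsigned long running_tid;  /* ID of the running transaction */
    unsigned long slow_path;            /* times the lock was needed; guarded by lock */
};

/* Hypothetical metadata buffer; tid == 0 means "not in any transaction". */
struct buffer {
    _Atomic unsigned long tid;
};

/* Ensure buf belongs to the running transaction before it is modified. */
void get_write_access(struct journal *j, struct buffer *buf)
{
    /* Fast path: the block is already part of the running transaction,
     * so it does not need to be journaled again and no lock is taken. */
    if (atomic_load(&buf->tid) == atomic_load(&j->running_tid))
        return;

    pthread_mutex_lock(&j->lock);
    /* Re-check under the lock: another thread may have filed it already. */
    unsigned long running = atomic_load(&j->running_tid);
    if (atomic_load(&buf->tid) != running) {
        /* Slow path: attach the block to the running transaction
         * (a real journal would also record or copy the block here). */
        atomic_store(&buf->tid, running);
        j->slow_path++;
    }
    pthread_mutex_unlock(&j->lock);
}
```

The unlocked check is purely an optimization: if it misses, the slow path re-checks under the lock, so a racing thread at worst takes the lock unnecessarily.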

From there he looked at orphan slot allocation strategies. He found two choices, simple or complex:

- Simple: search for a free slot in the file sequentially from the beginning, under a spinlock.
- More complex: hash the CPU ID to a block in the orphan file (there can easily be several blocks per CPU) and start searching at that block.
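
The two slot allocation strategies can be sketched in C as follows. The orphan file is modeled as a small two-dimensional array of inode-number slots; `NR_BLOCKS`, `SLOTS_PER_BLOCK`, and the function names are hypothetical, the spinlock is a minimal C11 `atomic_flag` lock, and claiming slots with compare-and-swap in the per-CPU variant is this sketch's own assumption rather than something from the talk. A filesystem would use one strategy or the other, not both.

```c
#include <stdatomic.h>
#include <stdint.h>

#define NR_BLOCKS 8        /* hypothetical orphan file size, in blocks */
#define SLOTS_PER_BLOCK 4  /* hypothetical number of slots per block */

/* Each slot holds an orphan inode number; 0 marks a free slot. */
static _Atomic uint32_t slots[NR_BLOCKS][SLOTS_PER_BLOCK];

/* Minimal spinlock built on a C11 atomic_flag. */
static atomic_flag orphan_file_lock = ATOMIC_FLAG_INIT;

static void spin_lock(atomic_flag *l)
{
    while (atomic_flag_test_and_set_explicit(l, memory_order_acquire))
        ; /* busy-wait until the holder releases the lock */
}

static void spin_unlock(atomic_flag *l)
{
    atomic_flag_clear_explicit(l, memory_order_release);
}

/* Simple strategy: sequential search from the beginning under one spinlock.
 * Returns the flat slot index, or -1 if the orphan file is full. */
int alloc_slot_simple(uint32_t ino)
{
    int ret = -1;

    spin_lock(&orphan_file_lock);
    for (int b = 0; b < NR_BLOCKS && ret < 0; b++) {
        for (int s = 0; s < SLOTS_PER_BLOCK; s++) {
            if (atomic_load(&slots[b][s]) == 0) {
                atomic_store(&slots[b][s], ino);
                ret = b * SLOTS_PER_BLOCK + s;
                break;
            }
        }
    }
    spin_unlock(&orphan_file_lock);
    return ret;
}

/* More complex strategy: hash the CPU ID to a starting block and search
 * forward from there, wrapping around. Slots are claimed with CAS. */
int alloc_slot_percpu(unsigned int cpu, uint32_t ino)
{
    unsigned int start = cpu % NR_BLOCKS;  /* trivial hash of the CPU ID */

    for (unsigned int i = 0; i < NR_BLOCKS; i++) {
        unsigned int b = (start + i) % NR_BLOCKS;
        for (unsigned int s = 0; s < SLOTS_PER_BLOCK; s++) {
            uint32_t expected = 0;
            if (atomic_compare_exchange_strong(&slots[b][s], &expected, ino))
                return (int)(b * SLOTS_PER_BLOCK + s);
        }
    }
    return -1;
}

/* Release a slot when its inode leaves the orphan set. */
void free_slot(int idx)
{
    atomic_store(&slots[idx / SLOTS_PER_BLOCK][idx % SLOTS_PER_BLOCK], 0);
}
```

Starting the search at a CPU-specific block means concurrent allocations on different CPUs usually touch different blocks, at the cost of a slightly more involved search. As Kara found, that does not guarantee a measurable win.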

His efforts to measure the performance of the various orphan file approaches, however, did not produce a clear winner. “It was hard to tell,” he noted. In the end, “it doesn’t matter which strategy you use,” he concluded.

Using the shrinker

Although the subject was ext4 filesystem scaling, you often run into memory constraints and need to free some cached entries. That is when Kara turns to the shrinker. Memory management calls the shrinker and asks it to scan N objects and free as many of them as possible. “The objective is to find entries to reclaim without bloating each entry too much,” he said.

To do this, Kara recommends keeping, in each inode, a timestamp of when its last reclaimable extent was used. On a reclaim request, scan the RB (red-black) trees of the inodes with the oldest timestamps until the desired number (N) of entries has been reclaimed. Note that the scan may need to skip many unreclaimable extents.
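
A minimal C sketch of the timestamp-based approach follows. It is an illustration under simplifying assumptions: the per-inode RB tree is replaced by a fixed-size array of extents, and the `struct extent`, `struct inode`, and `shrink_by_age()` names are hypothetical. The inodes are sorted oldest first and scanned until `nr_to_free` extents have been dropped, skipping entries that cannot be reclaimed.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdlib.h>

#define MAX_EXTENTS 8

/* Hypothetical cached extent; only some entries may be dropped. */
struct extent {
    bool cached;       /* still held in memory */
    bool reclaimable;  /* safe to drop */
};

struct inode {
    unsigned long last_used;             /* last use of a reclaimable extent */
    size_t nr_extents;
    struct extent extents[MAX_EXTENTS];  /* stands in for the per-inode RB tree */
};

/* qsort comparator: oldest timestamp first. */
static int by_oldest(const void *a, const void *b)
{
    const struct inode *x = *(const struct inode *const *)a;
    const struct inode *y = *(const struct inode *const *)b;
    return (x->last_used > y->last_used) - (x->last_used < y->last_used);
}

/* Reclaim up to nr_to_free extents, visiting inodes oldest first. */
size_t shrink_by_age(struct inode **inodes, size_t nr_inodes, size_t nr_to_free)
{
    size_t freed = 0;

    qsort(inodes, nr_inodes, sizeof(*inodes), by_oldest);
    for (size_t i = 0; i < nr_inodes && freed < nr_to_free; i++) {
        struct inode *inode = inodes[i];
        for (size_t e = 0; e < inode->nr_extents && freed < nr_to_free; e++) {
            struct extent *ex = &inode->extents[e];
            if (!ex->cached || !ex->reclaimable)
                continue;  /* skipped, but it still costs scan time */
            ex->cached = false;
            freed++;
        }
    }
    return freed;
}
```

Note the `qsort()` call: ordering inodes by age on every reclaim request is exactly the kind of list sorting that a round-robin walk avoids.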

Kara has been working with developer Zheng Liu to improve the shrinker. This has involved walking inodes in round-robin order instead of LRU order, which avoids the need to sort a list. He also recommends adding simple aging via a REFERENCED bit on each extent.
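
A round-robin walk combined with a REFERENCED bit is essentially the classic second-chance (clock) scheme. In this hypothetical C model, `extent_touch()` sets the bit when an extent is used, and `shrink_round_robin()` resumes from a saved cursor, clears the bit on referenced extents instead of reclaiming them, and frees unreferenced reclaimable extents. None of these names come from ext4.

```c
#include <stdbool.h>
#include <stddef.h>

#define MAX_EXTENTS 8

struct extent {
    bool cached;       /* still held in memory */
    bool reclaimable;  /* safe to drop */
    bool referenced;   /* set on use, cleared by the shrinker (aging) */
};

struct inode {
    size_t nr_extents;
    struct extent extents[MAX_EXTENTS];
};

/* Mark an extent as recently used, e.g. on a cache hit. */
void extent_touch(struct extent *ex)
{
    ex->referenced = true;
}

struct rr_shrinker {
    struct inode **inodes;
    size_t nr_inodes;
    size_t cursor;  /* inode to resume from on the next call */
};

/* Free up to nr_to_free extents, walking inodes round-robin. */
size_t shrink_round_robin(struct rr_shrinker *s, size_t nr_to_free)
{
    size_t freed = 0;

    /* At most two full passes: the first may only clear REFERENCED bits. */
    for (size_t step = 0; step < 2 * s->nr_inodes && freed < nr_to_free; step++) {
        struct inode *inode = s->inodes[s->cursor];
        for (size_t e = 0; e < inode->nr_extents && freed < nr_to_free; e++) {
            struct extent *ex = &inode->extents[e];
            if (!ex->cached || !ex->reclaimable)
                continue;
            if (ex->referenced) {
                ex->referenced = false;  /* second chance */
                continue;
            }
            ex->cached = false;
            freed++;
        }
        s->cursor = (s->cursor + 1) % s->nr_inodes;
    }
    return freed;
}
```

Because the cursor persists between calls, no ordering has to be maintained, and an extent that was used since the last pass survives one more round before it can be reclaimed. Capping each call at two full passes bounds the work even when most extents are referenced.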

To test the shrinker's effect on latency, he wanted to see what it could accomplish in a 5-minute run using as many files as time allowed. Kara was able to reduce maximum latency from 63,132 µs to 100 µs. As with any test, your results will vary depending on the amount of memory, the write speed, and whether your data is cached.

Kara wrapped up with key takeaways:

- Even if you cannot fundamentally improve scalability, just reducing the length of critical sections can help.
- Doing things lockless is still faster.

Ultimately, you have to strike a balance between approaches that are sophisticated but slow and ones that are simple and fast, he concluded.