With the rapid adoption of automotive virtualization, manufacturers can now run multiple operating systems on a single computer, from highly reliable Linux software for mission-critical functions to highly customizable Android software for infotainment services. Although virtualization opens up many ways to customize the driving experience, all of these virtual machines (VMs) must still send their graphics processing requests through a single GPU.

In most vehicle architectures, the GPU is a separate co-processor with its own firmware. Each VM must therefore send its commands and data to the GPU for processing and then receive the results from the GPU’s firmware. The problem with this setup is that if one VM sends an illegal command or bad data that crashes the GPU, no other VM can use the GPU until the GPU is rebooted. Beyond being an inconvenience, this can compromise the stability and security of mission-critical vehicle software running in another VM.

Like many others in the industry, my company, GlobalLogic, struggled to find a way to share a GPU between VMs without affecting their performance, stability, and security. As part of the product development services we offer automotive manufacturers, we use a virtualization platform called Nautilus to run multiple VMs on a single-board computer. Although we had successfully sandboxed all of the automotive OSes using a Xen Type 1 hypervisor, we were still dependent on the shared GPU hardware and all of its vulnerabilities.

Then we realized that instead of having every VM share a single GPU firmware instance, we should flip the script and build an architecture in which each VM works with its own GPU firmware. For example, if VM1 needs to process a graphics request, it uploads its firmware to the GPU, executes the necessary commands, and then “logs off” from the GPU. Once VM1 is no longer using the GPU, VM2 can upload its own firmware, and so on.

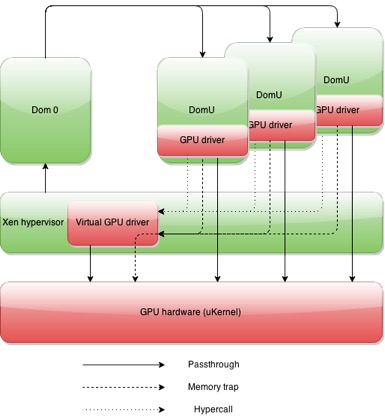

Behind the scenes, the platform stores each VM’s GPU state in a virtual GPU driver that sits on the Xen hypervisor. When VM1 requests access to the GPU, the platform resets the GPU and uploads VM1’s firmware so that the VM resumes working with the GPU from its last saved state. This reset-upload-execute-reset cycle gives each VM an isolated GPU session that cannot affect any other VM’s session. If VM1 sends an illegal command to the GPU, it crashes only its own session rather than the entire GPU. This approach greatly improves the stability and security of GPU sharing in a virtualized environment.
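To make the reset-upload-execute-reset cycle concrete, here is a minimal Python simulation. It is an illustrative sketch, not Nautilus code: `GpuMediator`, `SimulatedGpu`, `VmGpuContext`, and the rule that any command starting with `illegal` faults the GPU are all hypothetical, and a real mediator would run in the hypervisor and drive actual hardware. The sketch also assumes that a faulting session is simply discarded, so the VM rolls back to its last good saved state while other VMs are unaffected.

```python
import threading
from dataclasses import dataclass, field
from typing import Dict, List, Optional


class GpuFault(Exception):
    """Raised by the simulated GPU when it receives an illegal command."""


@dataclass
class VmGpuContext:
    """Hypothetical per-VM record kept by the virtual GPU driver."""
    vm_id: str
    firmware: bytes
    saved_state: Dict[str, object] = field(default_factory=dict)
    faults: int = 0


class SimulatedGpu:
    """Stand-in for the physical GPU: loaded firmware plus volatile state."""

    def __init__(self) -> None:
        self.firmware: Optional[bytes] = None
        self.state: Dict[str, object] = {}

    def reset(self) -> None:
        # A reset wipes both the firmware and any state left by the last VM.
        self.firmware = None
        self.state = {}

    def load(self, firmware: bytes, state: Dict[str, object]) -> None:
        # Copy the state so a faulting session cannot corrupt the saved copy.
        self.firmware = firmware
        self.state = dict(state)

    def execute(self, command: str) -> None:
        if self.firmware is None:
            raise RuntimeError("no firmware loaded")
        if command.startswith("illegal"):  # assumption: how faults are modeled
            raise GpuFault(command)
        self.state["frames"] = self.state.get("frames", 0) + 1
        self.state["last"] = command


class GpuMediator:
    """Hypothetical mediator that gives one VM at a time an isolated session."""

    def __init__(self, gpu: SimulatedGpu) -> None:
        self.gpu = gpu
        self.contexts: Dict[str, VmGpuContext] = {}
        self.lock = threading.Lock()  # only one VM may own the GPU at a time

    def register(self, vm_id: str, firmware: bytes) -> None:
        self.contexts[vm_id] = VmGpuContext(vm_id, firmware)

    def submit(self, vm_id: str, commands: List[str]) -> bool:
        ctx = self.contexts[vm_id]
        with self.lock:
            self.gpu.reset()                                # 1. reset
            self.gpu.load(ctx.firmware, ctx.saved_state)    # 2. upload fw + state
            try:
                for cmd in commands:                        # 3. execute
                    self.gpu.execute(cmd)
            except GpuFault:
                ctx.faults += 1      # only this VM's session is lost
                return False
            else:
                ctx.saved_state = dict(self.gpu.state)      # save for next time
                return True
            finally:
                self.gpu.reset()                            # 4. reset ("log off")


if __name__ == "__main__":
    mediator = GpuMediator(SimulatedGpu())
    mediator.register("vm1", b"fw-linux")
    mediator.register("vm2", b"fw-android")
    mediator.submit("vm1", ["draw cluster"])
    mediator.submit("vm2", ["draw nav", "illegal op"])  # faults only vm2's session
    mediator.submit("vm1", ["draw cluster"])
    print(mediator.contexts["vm1"].saved_state)  # {'frames': 2, 'last': 'draw cluster'}
```

Resetting on both entry and exit is a deliberate choice in this sketch: no firmware or state from one VM is left on the GPU when the next VM takes over, which is what keeps a fault in VM2 from leaking into VM1’s next session.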

Our GPU virtualization solution enables multiple domains (Xen’s term for VMs) to share the GPU hardware with no more than a 5 percent drop in overall performance. We achieve this by optimizing hardware resource usage in the native kernel module and carefully managing resources through a Xen mediator driver. With this architecture, no guest domain ever accesses the real hardware directly; everything is virtualized.

We have thoroughly tested this approach and integrated it into our Nautilus platform with fantastic results. We are currently using this architecture with live customers, and we will demonstrate the solution at the GENIVI showcase at CES 2016 for anyone interested in learning more about GPU virtualization.

As automotive manufacturers come to rely more on software to operate their vehicles and engage with drivers, the graphics capabilities of dashboard displays and in-vehicle infotainment (IVI) systems will become increasingly important. With GPU virtualization, manufacturers can optimize graphics processing performance across multiple VMs while also ensuring the stability and security of those VMs.

Alex Agizim is VP, CTO of Embedded Systems at GlobalLogic Inc.