Editor’s Note: This article is paid for by GridGain as a Platinum-level sponsor of ApacheCon North America and was written by Linux.com.

In-memory computing (IMC) is replacing hard drives as the primary storage system of choice for many enterprises where speedy processing counts. The narrowing price gap between RAM (the solid-state system memory “sticks” that plug directly into slots on the motherboard) and solid-state or rotating-media hard drives, which “talk” with the CPU via slower interconnects, is driving this trend. IMC “treats RAM as primary storage,” keeping gigabytes of data in RAM, often for months or years (usually saving copies to SSD or hard drives, of course).
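
To make “RAM as primary storage” concrete, here is a minimal Java sketch, assuming a simple string key-value workload: the authoritative copy of the data lives in an in-memory map, and disk is used only to save and restore a snapshot. The class and method names (`RamPrimaryStore`, `snapshotTo`, `restoreFrom`) are hypothetical and do not come from GridGain or Apache Ignite.

```java
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch: RAM holds the primary copy of the data;
// disk holds only a backup snapshot.
final class RamPrimaryStore {
    // The authoritative data lives in this in-memory map.
    private final HashMap<String, String> data = new HashMap<>();

    void put(String key, String value) {
        data.put(key, value);
    }

    // Reads are served straight from RAM; no disk I/O is involved.
    String get(String key) {
        return data.get(key);
    }

    // Save a copy of the in-memory data to disk (an SSD or hard drive).
    void snapshotTo(Path file) {
        try (ObjectOutputStream out = new ObjectOutputStream(Files.newOutputStream(file))) {
            out.writeObject(data);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Rebuild the in-memory store from a saved snapshot, e.g., after a restart.
    @SuppressWarnings("unchecked")
    static RamPrimaryStore restoreFrom(Path file) {
        RamPrimaryStore store = new RamPrimaryStore();
        try (ObjectInputStream in = new ObjectInputStream(Files.newInputStream(file))) {
            store.data.putAll((Map<String, String>) in.readObject());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
        return store;
    }
}
```

In this sketch, a crash loses any writes made since the last snapshot; production systems narrow that window with more frequent persistence or with replicas on other nodes.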


One of the leading software tools for enabling IMC has been the In-Memory Data Fabric from GridGain Systems, Inc. Nikita Ivanov (now CTO) and Dmitriy Setrakyan, EVP of Engineering, began developing the software in 2005 and co-founded GridGain to sell an enterprise edition that included support and additional features.

In 2014, GridGain adopted the Apache 2.0 license for its open source version and made the code available through sites including GitHub, which led to a 20x increase in downloads. In late 2014, the company announced that the Apache Software Foundation (ASF) had accepted the project into the Apache Incubator program under the name Apache Ignite, and in early 2015, GridGain announced the migration of the open source code to the ASF site.

GridGain currently plans a more complete roll-out, including talks and training sessions, at the upcoming ApacheCon North America, April 13-16, in Austin, Texas. Meanwhile, here’s a quick look at Apache Ignite and in-memory computing, what they mean for developers, users, and GridGain, and how you can try Apache Ignite now and get involved in its development.

March of the Podlings

Joining the Apache Incubator is a natural next step for the software, according to GridGain’s Ivanov.

“We wanted to bring (the open source core of) our code entirely within the Apache umbrella,” says Ivanov. “We feel this will help drive even more adoption of an open-source In-Memory Data Fabric by other Apache developers and users, including those people who have been looking to get real-time performance out of Hadoop.”

“Incubating” means that Ignite is not yet fully endorsed by the ASF but is, like penguins marching to their breeding grounds, at a stage along the ASF’s well-defined incubation process from “establishment” to “project.” Projects at this stage are known as “podlings.”

Apache Ignite mentor Konstantin Boudnik said, “The project is off to a great start. The community is working on Apache Ignite v1.0 and is aiming to include new automation and ease-of-use features to simplify deployment. Other features in the works include support for JCache (JSR-107), which is a new standard for Java in-memory object caching; Auto-Loading of SQL data; and dynamic cache creation on the fly.”
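
The “dynamic cache creation on the fly” item can be illustrated with a JDK-only sketch: named caches are created the first time something asks for them, rather than being declared up front. `SimpleCacheManager` and `getOrCreate` are hypothetical names; this is not the JCache (JSR-107) API or Apache Ignite’s API, only the idea behind the feature.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

// Hypothetical sketch of creating named in-memory caches on demand.
// Not the JCache (JSR-107) or Apache Ignite API; JDK classes only.
final class SimpleCacheManager {
    private final ConcurrentMap<String, ConcurrentMap<Object, Object>> caches =
            new ConcurrentHashMap<>();

    // Returns the cache with this name, atomically creating an empty one
    // the first time the name is requested. Callers must use consistent
    // key/value types per cache name, since generics are not checked at runtime.
    @SuppressWarnings("unchecked")
    <K, V> ConcurrentMap<K, V> getOrCreate(String name) {
        ConcurrentMap<?, ?> cache = caches.computeIfAbsent(name, n -> new ConcurrentHashMap<>());
        return (ConcurrentMap<K, V>) cache;
    }

    int cacheCount() {
        return caches.size();
    }
}
```

Using `computeIfAbsent` means two threads asking for the same new cache name at once still end up sharing a single cache instance.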

Like many open source-based companies, GridGain will continue to offer commercial versions of the software with additional features plus support.

“We are already in the process of building the next version of our commercial enterprise edition, Version 7.0, on top of Apache Ignite,” says Ivanov.

In-Memory Computing Isn’t New, But Lower Prices and Bigger Capacities Are

The motivation for in-memory computing is simple: it takes much less time for the processor to read from and write to RAM socketed on the motherboard than to read from and write to hard or solid-state drives, where data has to traverse the data bus (and, for rotating mechanical media, suffer access delays).

“Traditional computing uses disks as primary storage and memory as a primary cache for frequently-accessed data,” says Ivanov. “In-memory computing turns that upside down. Keeping data in RAM helps reduce latencies and increase application performance. This lets companies meet needs for ‘Fast Data’ — computation and transactions on large data sets in real time, in a way that traditional disk and flash storage can’t come close to matching.”

The notion of keeping primary data in RAM isn’t new. “RAM disk” software was available for early personal computers like the Commodore 64 and Apple II, and for DOS, and it’s available today for Linux (e.g., tmpfs, mounted at /dev/shm on many distributions, and RapidDisk) and for Windows 8.

What is relatively new, however, is the increasing affordability of RAM (a quick check at NewEgg.com shows 32-gigabyte sticks of server-grade RAM at $10 to $20 per GB) and of cluster and multi-processor architectures that can scale up and out to accommodate terabytes (TB) of RAM.
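
The quoted price range is easy to turn into totals. This short Java sketch multiplies capacity by the article’s $10 to $20 per GB figures (2015 prices, not current quotes), treating 1 TB as 1,024 GB; `RamCost` is a hypothetical helper name.

```java
// Back-of-the-envelope RAM cost, using the article's quoted $10-$20 per GB.
final class RamCost {
    // Total cost in dollars for a given capacity at a given per-GB price.
    static long cost(long gigabytes, long dollarsPerGb) {
        return gigabytes * dollarsPerGb;
    }

    public static void main(String[] args) {
        long stickGb = 32;      // one server-grade stick, as in the article
        long terabyteGb = 1024; // 1 TB taken as 1,024 GB
        // prints "One 32 GB stick: $320 to $640"
        System.out.println("One 32 GB stick: $" + cost(stickGb, 10) + " to $" + cost(stickGb, 20));
        // prints "1 TB of RAM: $10240 to $20480"
        System.out.println("1 TB of RAM: $" + cost(terabyteGb, 10) + " to $" + cost(terabyteGb, 20));
    }
}
```

At those prices, memory alone for a terabyte comes to roughly $10,000 to $20,500, which is useful context for the 1 TB server price Ivanov quotes.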

“You can buy a 10-blade server that has a terabyte of RAM for less than $25,000,” according to Ivanov. And, says Ivanov, while that much RAM does push up the initial price, “Because of RAM’s lower power and cooling costs, and no moving parts to break, analysts say that the TCO (Total Cost of Ownership) for using RAM instead of rotating or solid-state storage as primary storage breaks even in about three years. And that’s just looking at TCO, not including the delivered value from getting much faster processing performance.”
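
A break-even estimate like this one can be approximated with simple payback arithmetic: the extra up-front cost divided by the yearly savings. The Java sketch below computes that simple payback period, ignoring discounting and future price changes. The inputs in `main` are placeholder numbers chosen for illustration, not figures from GridGain or the analysts Ivanov mentions, and `BreakEven` is a hypothetical name.

```java
// Simple payback period: years until yearly savings cover an extra up-front cost.
// No discounting, no price changes; illustration only.
final class BreakEven {
    static double years(double extraUpfrontCost, double annualSavings) {
        if (annualSavings <= 0) {
            throw new IllegalArgumentException("annual savings must be positive to ever break even");
        }
        return extraUpfrontCost / annualSavings;
    }

    public static void main(String[] args) {
        // Placeholder inputs: $9,000 more up front, $4,000 per year saved
        // on power, cooling, and replacement parts.
        System.out.println(years(9_000, 4_000) + " years"); // prints "2.25 years"
    }
}
```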

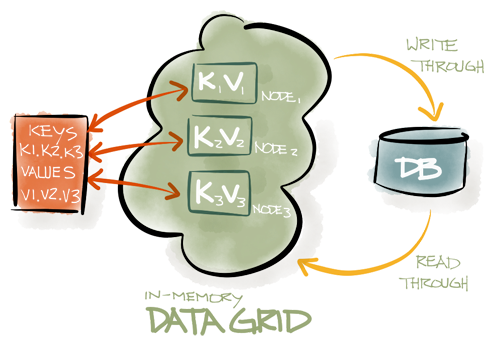

GridGain’s In-Memory Data Fabric is one of several different software solutions to allow “RAM as primary storage” architectures. “Our software slides logically and architecturally above your database and beneath your application,” says Ivanov. “The goal is to give applications high performance and high scalability compared to using disk-based storage.”
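
One common way to build a layer that sits “above your database and beneath your application” is a read-through, write-through cache. The Java sketch below assumes a hypothetical `Database` interface standing in for the disk-based store; `InMemoryLayer` is likewise a made-up name. It illustrates the general pattern only and is not GridGain’s or Apache Ignite’s actual API.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical interface standing in for the disk-based database underneath.
interface Database {
    String load(String key);
    void store(String key, String value);
}

// Generic read-through / write-through layer between application and database.
// Not GridGain's or Apache Ignite's API.
final class InMemoryLayer {
    private final Map<String, String> ram = new ConcurrentHashMap<>();
    private final Database db;

    InMemoryLayer(Database db) {
        this.db = db;
    }

    // Read-through: only a RAM miss goes to the database; later reads hit RAM.
    // A key the database doesn't have yields null and is not cached.
    String get(String key) {
        return ram.computeIfAbsent(key, db::load);
    }

    // Write-through: update the database and the in-memory copy together.
    void put(String key, String value) {
        db.store(key, value);
        ram.put(key, value);
    }
}
```

Write-through keeps both copies consistent at the cost of waiting on the database for every write; a write-behind variant would batch database writes asynchronously to cut write latency, at the risk of losing recent updates if a node fails.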

Who is using in-memory computing, or will be? Anyone looking to crunch data faster. That includes financial services and bioinformatics, but also, as Ivanov says, newer areas: “As ‘big data meets fast data,’ we are seeing new use cases like hyperlocal advertising, fraud protection, in-game purchasing for online games, and SaaS-enabling premise-based applications while maintaining SLA and multi-tenancy goals.”

According to the company, GridGain’s In-Memory Data Fabric has hundreds of deployments at major companies and organizations, including Apple, Avis, Canon, E-Therapeutics, InterContinental Hotels Group, Moody’s KMV, Sony, Stanford University, and TomTom.

And, notes Ivanov, “While enterprises probably look at using in-memory computing on systems starting at one-quarter to half a terabyte of RAM, you could be using it on a system with even just a few dozen gigabytes — it depends on how much data you want to keep in RAM.

“Recent studies, like one that Gartner did in 2014, found that over 90 percent of enterprise operational payloads for datasets (the data these organizations need to process every day) are less than 2 TB. That’s completely in the realm of what we are seeing today. The upper limit for RAM today is probably in the 10 to 20 TB range… and that’s due more to economic issues than technical constraints.”
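
Whether a dataset fits comes down to simple capacity arithmetic. Here is a hedged Java sketch that estimates how many nodes are needed to hold a dataset entirely in RAM; the parameters (number of backup copies, RAM per node, and the fraction of RAM usable for data) are assumptions for illustration, not GridGain sizing guidance, and `ClusterSizing` is a hypothetical name.

```java
// Hypothetical sizing sketch: nodes needed to keep a dataset entirely in RAM.
// backups        = extra in-memory copies kept for fault tolerance (assumption)
// usableFraction = share of each node's RAM left for data after the OS,
//                  runtime, and indexes take their cut (assumption)
final class ClusterSizing {
    static int nodesNeeded(double datasetGb, int backups, double ramPerNodeGb, double usableFraction) {
        double totalGb = datasetGb * (1 + backups);               // primary copy plus backups
        double usablePerNodeGb = ramPerNodeGb * usableFraction;   // RAM actually available for data
        return (int) Math.ceil(totalGb / usablePerNodeGb);        // round up to whole nodes
    }

    public static void main(String[] args) {
        // A 2 TB (2,048 GB) dataset, one backup copy, 256 GB nodes, 75% usable
        System.out.println(nodesNeeded(2048, 1, 256, 0.75)); // prints 22
        // "A few dozen gigabytes" (40 GB here), no backup, one 64 GB node
        System.out.println(nodesNeeded(40, 0, 64, 0.75));    // prints 1
    }
}
```

Doubling the backup count roughly doubles the memory bill, which is one reason the trade-off between RAM cost and fault tolerance shows up in every in-memory deployment plan.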

Using Apache Ignite, Getting Involved

Want to try Apache Ignite? The initial Apache 2.0-licensed source code is available from the Apache Ignite project at the ASF.

“You can run this on your laptop, a commodity cluster, or on a supercomputer,” says Ivanov.

Want to become part of the Apache Ignite community? There’s no shortage of things you can do.

Specific initial goals will be determined by public discussion among the Apache Ignite community; likely goals, suggests Ivanov, could include:

Migrate the existing Ignite code base to the ASF.

Refactor development, testing, build, and release processes to work within the ASF.

Attract developer and user interest in the new Apache Ignite project.

Road-map integration efforts with “sister” projects in the ASF ecosystem, such as Storm and Spark.

Incorporate externally developed features into the core Apache Ignite project.

“We believe these initial goals are sufficiently difficult to be considered early milestones,” noted Ivanov in GridGain’s February 2015 announcement.

Over time, says Ivanov, “We expect Apache Ignite will become for Fast Data what Hadoop is for Big Data.”