Linux and FOSS are key players in science and research, and together they power the biggest research projects in the world. Here are four projects that show four different ways Linux is used in large-scale research.

CERN Comes Full Circle

In the beginning there was CERN, and they did employ Tim Berners-Lee, who then invented the World Wide Web and gave it to the world. We already had the Internet, a text-only realm full of FidoNets and Nethacks and Usenets and CompuServe. The Web gave it a prettier face and greater accessibility.

Then along came Linus Torvalds and his famous announcement to the comp.os.minix Usenet newsgroup on 25 August 1991:

“Hello everybody out there using minix –

I’m doing a (free) operating system (just a hobby, won’t be big and professional like gnu) for 386(486) AT clones.”

It didn’t take long for the baby Linux to become useful, and for Linux, open source, and open networking protocols to help fuel the astonishing growth of the Internet/WWW. Of course there is much more to this story, such as the crucial roles played by Richard Stallman, programming language inventors, UNIX, Theo de Raadt, distribution maintainers, and hordes more.

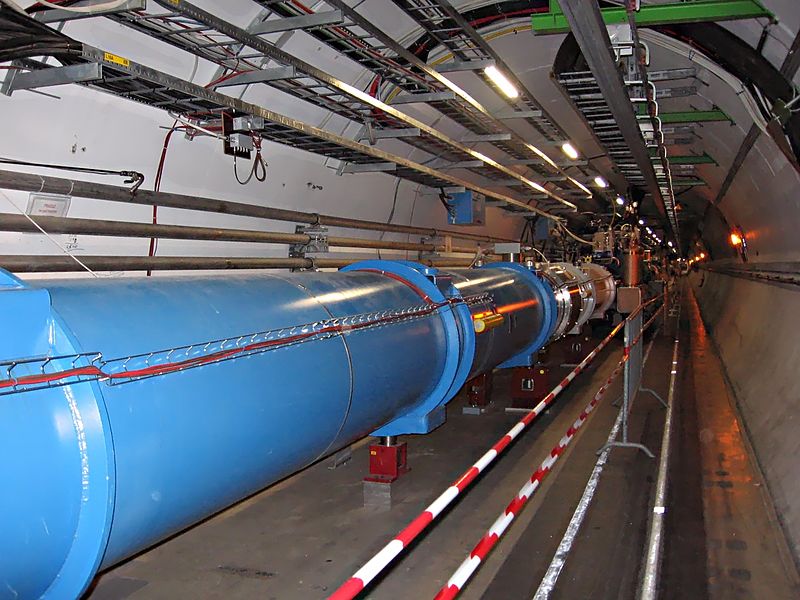

Fast-forward a few years and CERN is reaping the benefits of its role in helping to fuel all of this amazing creative energy, and relies on Linux for some of the biggest computing jobs on the planet. CERN is an early pioneer in grid computing and leads the LHC Computing Grid (LCG) project. This is a giant worldwide grid that supplies the storage and processing power for managing the data generated by the Large Hadron Collider, which is said to generate 15 petabytes of data per year. The middleware runs on Scientific Linux, and can be installed on other distros, though it’s only been tested on SL. CERN runs both Scientific Linux and their own SL spin, Scientific Linux CERN, on over 50,000 of their own servers and desktops.

{Image: Large Hadron Collider. Courtesy of Wikimedia Commons.}
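To get a feel for the 15 petabytes per year quoted above, here is a rough back-of-the-envelope sketch. It assumes decimal petabytes, a 365-day year, and data arriving at a perfectly even rate, none of which the LHC promises; the function name is purely illustrative.

```python
# Rough average data rate implied by "15 petabytes per year".
# Assumptions (not from CERN): 1 PB = 10**15 bytes, a 365-day year,
# and data spread evenly over the whole year.
SECONDS_PER_YEAR = 365 * 24 * 60 * 60

def average_rate_mb_per_s(petabytes_per_year: float) -> float:
    """Return the average rate in megabytes per second (1 MB = 10**6 bytes)."""
    bytes_per_year = petabytes_per_year * 10**15
    return bytes_per_year / SECONDS_PER_YEAR / 10**6

lhc_rate = average_rate_mb_per_s(15)
print(f"{lhc_rate:.1f} MB/s on average")
```

Under those assumptions the average works out to a bit under half a gigabyte every second, around the clock.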

We ordinary mortals can participate too, thanks to LHC@home.

CERN has always been about openness: open standards, protocols, and access to information. They have a formal open access policy and repository, and are signatories to the Berlin Declaration.

BOINC

Berkeley Open Infrastructure for Network Computing (BOINC) is a popular middleware for grid computing. (Remember when “grid” was the buzzword of the day? How quickly things change.) It was created to support SETI@Home, and of course was quickly adopted for other projects. As the BOINC Web site says, “Use the idle time on your computer (Windows, Mac, or Linux) to cure diseases, study global warming, discover pulsars, and do many other types of scientific research.” Just install and set up BOINC, then choose a project or projects to support. Some of them sound downright cosmic, like Milkyway@home and Orbit@home. FreeHAL is about artificial intelligence, and World Community Grid is “Humanitarian research on disease, natural disasters and hunger.” The core packages should already be in your distro repositories. It’s an easy-peasy way to contribute to the advancement of science.

Cloud BioLinux

There are multiple ways to deliver computing and storage resources for power-hungry data crunching, and Cloud BioLinux marshals cloud technologies for genome analysis. This gives us yet another type of cloud. We have IaaS, Infrastructure as a Service. PaaS is Platform as a Service, and SaaS is Software as a Service. Canonical gives us MaaS, Metal as a Service, and Jono Bacon forked this as Heavy Metal as a Service. (That’s a joke, and a mighty fine one.) Cloud BioLinux could be called Science as a Service, ScaaS.

Cloud BioLinux is a free community project that runs on your local machine, or with Amazon Web Services (AWS). It offers several different installation images: a VirtualBox appliance, a Eucalyptus image, command-line tools for AWS, and a FreeNX desktop for a full Cloud BioLinux desktop (Figure 2).

{Figure 2: The Cloud BioLinux desktop}

The helpful user’s manual gives some good tips for working with Amazon EC2, whose flexible pricing can bite you if you don’t understand how it works. You are charged for as long as your instance is running, and it keeps running after you log out. You have to terminate an instance to stop the meter, and terminating an instance makes it all vanish: system software and data. Another little pitfall is that you’re charged by the clock hour, so if you run an instance from 8:55 to 9:05 you get charged for two hours. Moral: EC2 pricing is well-documented and has several options, so be sure you know what you’re getting into.
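The clock-hour pitfall is easy to check with a few lines of Python. This is only a sketch of the billing rule as described above, not Amazon’s actual billing logic; the function name and the dates are made up for illustration.

```python
from datetime import datetime, timedelta

def billed_clock_hours(start: datetime, end: datetime) -> int:
    """Count the distinct clock hours touched between start and end.

    Models the rule described in the article: every clock hour in which
    the instance runs, even for a few minutes, is billed as a full hour.
    """
    if end <= start:
        return 0
    first_hour = start.replace(minute=0, second=0, microsecond=0)
    # Step back a hair so that stopping exactly on the hour
    # does not count the hour that is just beginning.
    last_instant = end - timedelta(microseconds=1)
    last_hour = last_instant.replace(minute=0, second=0, microsecond=0)
    return int((last_hour - first_hour) / timedelta(hours=1)) + 1

# Ten minutes of use that straddles 9:00 (the date is arbitrary).
short_run = billed_clock_hours(datetime(2012, 1, 1, 8, 55),
                               datetime(2012, 1, 1, 9, 5))
print(short_run)  # 2
```

Running the same ten minutes from 9:00 to 9:10 touches only one clock hour, so under this model it would be billed as a single hour.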

Texas Advanced Computing Center

The High Performance Computing (HPC) systems at the University of Texas at Austin include two of the biggest Linux clusters in the world. (The biggest are probably Google and Facebook.) One uses Sun Constellation hardware, and the other is a conglomeration of Dell PowerEdge servers. Both are blade systems.

The Sun cluster is called Ranger, a nice friendly name for a mammoth computer. It has 3,936 nodes, 62,976 cores, 123 TB memory, and delivers 579.4 teraflops. That’s 579.4 trillion floating-point operations per second. (I bet Tux Racer flies on that setup.) The operating system is CentOS Linux.

The Dell system’s name is Lonestar. It’s a little feebler than Ranger: 22,656 cores, 44 TB memory, and a mere 302 teraflops. Both have über InfiniBand networking and use the high-performance Lustre parallel distributed file system. Lustre is used on giant systems everywhere: in the biggest datacenters, supercomputers, and Internet service providers. (Lustre is another bit of engineering excellence from Sun Microsystems.)
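Totals that large are hard to picture, so here is a small sketch that breaks the quoted figures down per core. It assumes decimal units (1 TB = 1,000 GB, 1 teraflop = 1,000 gigaflops) and treats the quoted numbers as given; the dictionary layout and function name are just for illustration.

```python
# Figures as quoted in the article; node count is only given for Ranger.
clusters = {
    "Ranger": {"nodes": 3_936, "cores": 62_976, "memory_tb": 123, "teraflops": 579.4},
    "Lonestar": {"cores": 22_656, "memory_tb": 44, "teraflops": 302.0},
}

def per_core(stats: dict) -> tuple[float, float]:
    """Return (gigaflops per core, gigabytes of memory per core).

    Assumes decimal prefixes: 1 teraflop = 1000 gigaflops, 1 TB = 1000 GB.
    """
    gflops = stats["teraflops"] * 1000 / stats["cores"]
    mem_gb = stats["memory_tb"] * 1000 / stats["cores"]
    return gflops, mem_gb

for name, stats in clusters.items():
    gflops, mem_gb = per_core(stats)
    print(f"{name}: {gflops:.1f} gigaflops/core, {mem_gb:.2f} GB/core")

# Ranger's node and core counts divide evenly.
ranger_cores_per_node = clusters["Ranger"]["cores"] // clusters["Ranger"]["nodes"]
print(f"Ranger: {ranger_cores_per_node} cores per node")
```

By these numbers Lonestar has fewer cores but gets more out of each one, while both clusters carry a little under 2 GB of memory per core.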

Ninety percent of Ranger’s and Lonestar’s resources are dedicated to the Extreme Science and Engineering Discovery Environment (XSEDE). This is a consortium of 16 supercomputers and high-end visualization and data analysis resources in the US that supports research. They are connected by a 10 Gb/s network, XSEDENet.