Author: David 'cdlu' Graham

Greg Kroah-Hartman kicked off the second day of the 9th annual Ottawa Linux Symposium with a talk entitled “Linux Kernel Development – How, What, How fast, and Who?” to a solidly packed main room with an audience of more than 400 people.

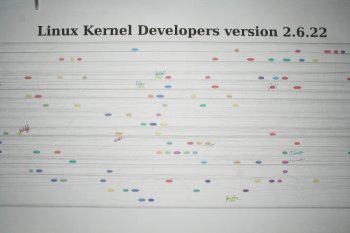

Kroah-Hartman set up a large poster along the back wall of the session room with a relational chart showing the links between developers and patch reviewers for the current development kernel, along with an invitation for all those present whose names are on the chart to sign it.

He launched into his talk with a bubble chart of the development hierarchy as it is meant to work, showing a layer of kernel developers at the bottom who submit their patches to about 600 driver and file maintainers, who in turn submit the patches to subsystem maintainers, who in turn submit them to Andrew Morton or sometimes Linus Torvalds directly.

Andrew Morton was selected to maintain the stable tree while Linus worked on the development tree, Kroah-Hartman explained. In practice, Morton merges all unstable patches into a tree called the -mm tree (named for memory management, Morton’s historical subtree), while Linus actually maintains the stable kernel tree. While they will never admit it, says Kroah-Hartman, they now work effectively the opposite of how they intended.

Patches are submitted up the previously mentioned hierarchy, and each person who reviews a patch and sends it up puts their name in the signed-off-by field of the patch. The large chart Kroah-Hartman printed and posted is made up of these signed-off-by relations, and shows that the actual relationships between the layers of developers do not operate quite the way his bubble chart suggests.
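As a rough illustration of how such a chart could be derived, the sketch below pulls the `Signed-off-by:` lines out of commit messages and counts who passes patches on to whom. It is only a sketch under stated assumptions: the function names and the example commit message are hypothetical, and nothing here is taken from the scripts Kroah-Hartman actually used.

```python
import re
from collections import Counter

# Matches a trailer such as "Signed-off-by: Jane Doe <jane@example.org>".
SIGNOFF = re.compile(r"^Signed-off-by:[ \t]*(.+?)[ \t]*<[^>]*>[ \t]*$", re.MULTILINE)


def signoff_chain(message):
    """Return the names on a commit message's Signed-off-by lines, in order."""
    return SIGNOFF.findall(message)


def signoff_edges(messages):
    """Count (earlier signer, next signer) pairs across many commit messages."""
    edges = Counter()
    for message in messages:
        chain = signoff_chain(message)
        edges.update(zip(chain, chain[1:]))
    return edges


# Hypothetical commit message; the names and addresses are placeholders.
example = """\
example: fix a typo

Signed-off-by: Dev One <dev1@example.org>
Signed-off-by: Maintainer Two <m2@example.org>
Signed-off-by: Tree Owner <owner@example.org>
"""

for (sender, receiver), count in signoff_edges([example]).items():
    print(f"{sender} -> {receiver}: {count}")
```

Each pair is one link of the kind the poster drew; the order of the sign-off lines is assumed to reflect the order in which the patch moved up the hierarchy.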

A stable release of the kernel comes out approximately every 2.5 months, Kroah-Hartman says. Over the 2.5 years since the 2.6.11 kernel, changes to the kernel have taken place at an average rate of 2.89 patches per hour, sustained for the entire 2 and a half years. Kernel 2.6.19 alone sustained a rate of change of 4 changes per hour, Kroah-Hartman showed on a graph.
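To get a feel for what that hourly rate adds up to, the quoted average can be multiplied out. This is only back-of-the-envelope arithmetic from the figures above, assuming 365-day years; the total it prints is implied by the quoted rate and is not a number given in the talk.

```python
HOURS_PER_DAY = 24
DAYS_PER_YEAR = 365          # assumption: leap days are ignored

patches_per_hour = 2.89      # average rate quoted in the talk
years = 2.5                  # window since the 2.6.11 kernel

patches_per_day = patches_per_hour * HOURS_PER_DAY
total_patches = patches_per_day * DAYS_PER_YEAR * years

print(f"about {patches_per_day:.0f} patches per day")
print(f"about {total_patches:,.0f} patches over {years} years")
```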

In kernel 2.6.21 there are 8.2 million lines of code. 2,000 lines are added per day, with 2,800 lines modified each day, every day, on average, Kroah-Hartman says. He noted that the actual number is a matter of debate, but believes his numbers to be a relatively accurate reflection of reality.

Asked how these numbers compared to Windows Vista, Kroah-Hartman says that the Vista kernel is smaller, but does not contain any drivers so the comparison is not possible to do accurately. Kroah-Hartman was also asked if each dot release was the equivalent of the 2.4 to 2.6 kernel changes. No, he responded, but it takes around six months to do an equivalent amount of change.

Returning to his presentation after a flurry of questions, Kroah-Hartman compared the various parts of the kernel and noted that the ‘arch’, or architecture, tree is the largest part of the kernel by number of files, but that the drivers section of the kernel makes up 52% of the overall size, with the comparison set being core/drivers/arch/net/fs/misc. All six sections seem to be changing at roughly the same rate, he noted.

Linux is more scalable than any other operating system ever, Kroah-Hartman boasted. Linux can run on everything from a USB stick to 75% of the world’s top supercomputers.

Kroah-Hartman went on to explore the number of developers who contribute to each release of the kernel and found that there were 475 developers contributing to the 2.6.11 kernel, over 800 in the 2.6.21 kernel and 920 in the not yet released 2.6.22 kernel so far. He noted the number could be off a bit as kernel developers seem to have a habit of misspelling their own names, although he says he did his best to correct for that.

The kernel development community is growing rapidly, Kroah-Hartman says. In the initial 2.6 kernel tree there were only 700 developers. In the last two and a half years, from 2.6.11 to 2.6.22-rc5, around 3,200 people have contributed patches to the kernel. Half of contributors have contributed one patch, a quarter have contributed two, an eighth have contributed three, and so forth, Kroah-Hartman noted as an interesting statistic.

By quantity, Kroah-Hartman says, and not addressing quality, the biggest contributors of patches to the Linux kernel are Al Viro in first place with 1,339 patches in the 2.5 year window, David S. Miller with 1,279, Adrian Bunk with 1,150, and Andrew Morton with 1,071. He listed the top 10 and indicated that a more extensive list is available in his white-paper on the topic. The top 30% of the kernel developers do around 30% of the work, he says, representing a large improvement from 2.5 years ago when the top 20% of kernel developers did approximately 80% of the work.

With statistics flying, Kroah-Hartman listed the top few developers by how many patches they had signed off on rather than written. First was Linus Torvalds at 19,890, followed by Andrew Morton at 18,622, David S. Miller at 6,097, Greg Kroah-Hartman himself at 4,046, Jeff Garzik at 3,383, and Saturday’s OLS keynote speaker James Bottomley in 9th place at 2,048. Sometimes, Kroah-Hartman admitted, it feels like all he does is read patches.

Next, he talked about the companies funding kernel development. Measured by number of patches contributed by people known to be employees of companies, and without accounting for people who changed companies during the data period, Red Hat came in second place with 11.8%, Novell in third at 9.7%, Linux Foundation in fourth at 8.1%, IBM in fifth at 7.9%, Intel in sixth at 4.3%, SGI in eighth at 2.2%, MIPS in ninth at 1.5%, and HP in the number ten spot at 1.3%.

The keen-eyed observer will note that he initially omitted first and seventh place in this list. In seventh place were people known to be amateurs working on their own, including students, at 3.9%, and in first place were people whose affiliations were unknown and who had contributed fewer than ten patches each, making up 33.2% of total kernel development in the 2.5 year period.

Kroah-Hartman made the point that if your company is not showing up in the list of contributors and you are using Linux, you must either be happy with the way things are going with Linux or you must get involved in the process. If you don’t want to do your own kernel contributions, Kroah-Hartman says, you could do what AMD is doing and subcontract his employer, Novell, or another distribution provider, or for less cost contract a private consultant to make needed contributions to the kernel for your company’s needs.

Kroah-Hartman’s talk, as his talks always are, was entertaining and lively in a way difficult to portray in a summary of his content.

The new Ext4 filesystem: current and future plans

The next talk was given by Avantika Mathur of IBM’s Linux technology centre on the topic of the Ext4 filesystem and its current and future plans. Why Ext4, Mathur asked at the outset. The current standard Linux filesystem, Ext3, has a severe limitation with its 16 terabyte filesystem size limit. Between that and some performance issues, it was decided to branch into Ext4.

Mathur asked why not XFS or an entirely new filesystem? Largely, she explained, because of the large existing Ext3 community. They would be able to maintain backward compatibility and upgrade from Ext3 to Ext4 without the lengthy backup/restore process generally required to change filesystems. The XFS codebase, she says, is larger than Ext3’s. A smaller codebase would be better.

The Ext4 filesystem, available as Ext4-dev starting in Linux kernel 2.6.19, is an Ext3 filesystem clone with a 64-bit JBD, the journaling block device layer that handles the filesystem’s journal. The goals of the Ext4 project, she explained, are to improve scalability, fsck (filesystem check) times, performance, and reliability, while retaining backward compatibility.

The filesystem now supports a max filesystem size of one exabyte. That is to say, Ext4 can hold 2^60 bytes: 48-bit block numbers times 4KB per block, or 1,152,921,504,606,846,976 bytes. Mathur predicted this should last around five to ten years, after which time the support for filesystems actually that large can be seen and a move to 64-bit filesystems can be attempted, and should be possible without having to go to Ext5 based on Ext4’s design.
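The size arithmetic is easy to check. The short sketch below multiplies the number of addressable blocks by the block size; the Ext4 figures come from the talk, while the 32-bit block number used for the Ext3 comparison is an assumption added here to show where a 16 terabyte limit would come from with the same 4KB blocks.

```python
BLOCK_SIZE = 4 * 1024            # 4KB per block, as quoted in the talk


def max_filesystem_bytes(block_number_bits, block_size=BLOCK_SIZE):
    """Largest filesystem addressable with the given block number width."""
    return (2 ** block_number_bits) * block_size


ext4_limit = max_filesystem_bytes(48)   # 48-bit block numbers (from the talk)
ext3_limit = max_filesystem_bytes(32)   # assumption: 32-bit block numbers

print(f"Ext4: {ext4_limit:,} bytes")
print(f"Ext3: {ext3_limit:,} bytes")
```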

Ext3 uses indirect block mapping, Mathur says, while Ext4 uses extents. Extents, she explained, use one address for a contiguous range of blocks. One extent can assign up to 20,000 blocks to the same file, and each inode body can hold four extents.
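The difference between the two schemes can be sketched in a few lines. The toy model below is not Ext4's on-disk format; the `Extent` class, its field names, and the `lookup` helper are all hypothetical, chosen only to show how a single extent entry can stand in for a per-block table when a file's blocks are contiguous.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class Extent:
    logical_start: int    # first block of the range, counted within the file
    physical_start: int   # first block of the range on disk
    length: int           # number of contiguous blocks covered


def lookup(extents, logical_block):
    """Map a file block to a disk block, or None if no extent covers it.

    `extents` must be sorted by logical_start and must not overlap.
    """
    starts = [extent.logical_start for extent in extents]
    index = bisect_right(starts, logical_block) - 1
    if index < 0:
        return None
    extent = extents[index]
    offset = logical_block - extent.logical_start
    if offset >= extent.length:
        return None
    return extent.physical_start + offset


# Per-block mapping (indirect style): one table entry for every block.
block_map = {n: 5000 + n for n in range(1000)}

# Extent mapping: a single entry describes the same 1,000 contiguous blocks.
extent_map = [Extent(logical_start=0, physical_start=5000, length=1000)]

print(len(block_map), "entries versus", len(extent_map))
```

Both structures answer the same question; the extent version simply stores far less when the blocks are laid out contiguously.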

Mathur went on to describe features in or soon to be in the Ext4 patch tree. Ext4 will feature persistent pre-allocation, where space is guaranteed on the disk in advance, with files being allocated space without the need to zero out the data. Using flags, Mathur explained, these extended allocations will be flagged as initialized or uninitialized. Uninitialized blocks, if read, will be returned as zeros by the filesystem driver.
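The behaviour of uninitialized ranges can be illustrated with a toy model. This is a sketch under loud assumptions: the `ToyFile` class and its methods are invented for illustration and do not reflect how the Ext4 driver is structured. It only shows the idea Mathur described, that a reserved but unwritten range reads back as zeros without the disk ever being zeroed.

```python
from dataclasses import dataclass

BLOCK_SIZE = 4096  # 4KB blocks


@dataclass
class Extent:
    start: int          # first block of the reserved range
    length: int         # number of blocks reserved
    initialized: bool   # False until real data has been written


class ToyFile:
    """Toy model only; hypothetical names, not the real driver."""

    def __init__(self, disk):
        self.disk = disk      # block number -> bytes, may hold stale data
        self.extents = []

    def preallocate(self, start, length):
        # Reserve the range without writing zeros to any block.
        self.extents.append(Extent(start, length, initialized=False))

    def read_block(self, block):
        for extent in self.extents:
            if extent.start <= block < extent.start + extent.length:
                if not extent.initialized:
                    # Never expose whatever was left on the disk.
                    return bytes(BLOCK_SIZE)
                return self.disk[block]
        raise ValueError("block is not allocated to this file")


# A disk full of stale data left behind by some earlier file.
stale_disk = {block: b"\xff" * BLOCK_SIZE for block in range(8)}
toy = ToyFile(stale_disk)
toy.preallocate(start=2, length=4)

print(toy.read_block(3) == bytes(BLOCK_SIZE))
```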

Also in Ext4, reported Mathur, is delayed allocation. Rather than allocating space at the time a file is written to buffers, it is allocated at the time the buffers are flushed to disk. As a result, files are able to be kept more contiguous on disk and short-lived or temporary files may never be allocated any physical disk space. Ext4 also sports a multiple block allocator which can allocate an entire extent at once. Also, Mathur says, an on-line Ext4 defragmenter is in the works.
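A toy simulation makes the benefit of delayed allocation easier to see. The `DelayedAllocator` class below is hypothetical and greatly simplified (it assumes each file is flushed only once and that disk space is handed out sequentially); it is meant as a sketch of the idea, not of Ext4's allocator.

```python
class DelayedAllocator:
    """Toy model: disk blocks are chosen at flush time, not at write time."""

    def __init__(self):
        self.next_free = 0   # next unused disk block (sequential allocation)
        self.pending = {}    # file name -> number of buffered blocks
        self.on_disk = {}    # file name -> (first disk block, length)

    def write(self, name, blocks):
        # Only buffer the data; no disk blocks are picked yet.
        self.pending[name] = self.pending.get(name, 0) + blocks

    def delete(self, name):
        self.pending.pop(name, None)
        self.on_disk.pop(name, None)

    def flush(self):
        # Each file now gets one contiguous run sized for all its buffered data.
        for name, blocks in self.pending.items():
            self.on_disk[name] = (self.next_free, blocks)
            self.next_free += blocks
        self.pending.clear()


allocator = DelayedAllocator()
allocator.write("log", 3)
allocator.write("tmp", 2)    # short-lived file
allocator.write("log", 5)    # interleaved with the temporary file's write
allocator.delete("tmp")      # removed before any flush happens
allocator.flush()

print(allocator.on_disk)
```

In this run the temporary file never receives disk space, and the log file's two interleaved writes end up in a single contiguous range.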

The speed of filesystem checks is a concern with one-exabyte filesystems, noted Mathur. Using current fsck, it would take 119 years to check the entire filesystem. The version of fsck for Ext4 will not check unallocated inodes, Mathur says, among other improvements. She showed a performance chart showing significant performance improvements in e4fsck over its predecessors.

Ext4 has a number of scalability improvements over Ext3, Mathur says, raising the maximum file size from two terabytes to 16 terabytes, with the file size limit being left there as a performance versus size tradeoff. Ext4 has 256 byte inode entries, up from 128 in Ext3, and Ext4 introduces nanosecond timestamps instead of the second timestamps in Ext3. Mathur explained that this is because with the speed of operations now, files can be modified repeatedly in a single second. Also, she says, Ext4 introduces 64-bit inode version numbers for the benefit of NFS, which makes use of them.

Mathur wrapped up her presentation with a thanks to the 19 people contributing to the Ext4 project.

The evening of this second day of OLS saw the second of two corporate receptions, with IBM putting on a spread and giving away prizes while talking about its Power6 processors in one of the conference rooms.

The Power6 processor, demonstrated briefly by Anton Blanchard, contains 16 4.7GHz cores. He demonstrated a benchmarking tool that compiles the Linux kernel 10 times for effect. It accomplished this in just under 20 seconds.

Of particular note, Blanchard reported that IBM uses Linux to test and debug the Power6 chips. Following this, the hosts of the reception gave away several PS/3s, some Freescale motherboards, and some other smaller bits of swag as door prizes. Thus ended the second day of four days of OLS 2007.
