In the first three installments of this series, we learned what Kubernetes is, why it’s a good choice for your datacenter, and how it descended from the secret Google Borg project. Now we’re going to look at what makes up a Kubernetes cluster.

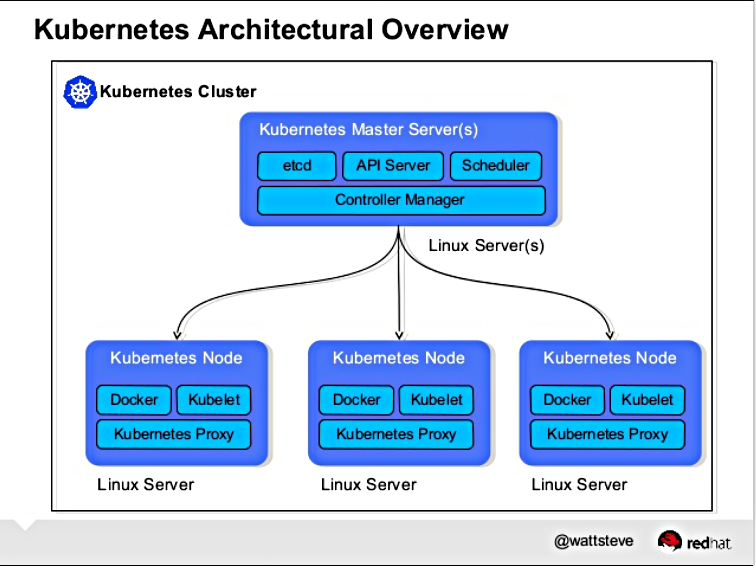

A Kubernetes cluster is made up of a master node and a set of worker nodes. In production, these components are distributed across multiple nodes. For testing, all of them can run on a single node (physical or virtual) using minikube.

Kubernetes has six main components that form a functioning cluster:

- API server

- Scheduler

- Controller manager

- kubelet

- kube-proxy

- etcd

Each of these components can run as a standard Linux process or as a Docker container.

The Master Node

The master node runs the API server, the scheduler, and the controller manager. For example, on one of the Kubernetes master nodes that we started on a CoreOS instance, we see the following systemd units:

```
core@master ~ $ systemctl -a | grep kube
kube-apiserver.service            loaded active running Kubernetes API Server
kube-controller-manager.service   loaded active running Kubernetes Controller Manager
kube-scheduler.service            loaded active running Kubernetes Scheduler
```
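If you want to script a health check of the master instead of eyeballing this listing, the listing is easy to parse. The sketch below is a minimal illustration. The helpers `parse_units` and `all_running` are hypothetical names, and the parser assumes the plain "unit, load, active, sub, description" column layout shown above. Real `systemctl` output can also include a header, a legend, and a leading marker on failed units, and this sketch does not handle any of those.

```python
from typing import Dict, Iterable

# Sample listing, as captured on the master node (columns aligned for readability).
SAMPLE = """\
kube-apiserver.service            loaded active running Kubernetes API Server
kube-controller-manager.service   loaded active running Kubernetes Controller Manager
kube-scheduler.service            loaded active running Kubernetes Scheduler
"""


def parse_units(listing: str) -> Dict[str, dict]:
    """Parse systemctl unit lines into {unit: {load, active, sub, description}}."""
    units = {}
    for line in listing.splitlines():
        # Split on runs of whitespace; the description (field 5) keeps its spaces.
        fields = line.split(maxsplit=4)
        if len(fields) < 4:
            continue  # skip blank or truncated lines
        unit, load, active, sub = fields[:4]
        description = fields[4] if len(fields) == 5 else ""
        units[unit] = {"load": load, "active": active, "sub": sub,
                       "description": description}
    return units


def all_running(units: Dict[str, dict], expected: Iterable[str]) -> bool:
    """True if every expected unit is present and in the active/running state."""
    return all(
        name in units
        and units[name]["active"] == "active"
        and units[name]["sub"] == "running"
        for name in expected
    )


MASTER_UNITS = ["kube-apiserver.service",
                "kube-controller-manager.service",
                "kube-scheduler.service"]

units = parse_units(SAMPLE)
print(all_running(units, MASTER_UNITS))  # True
```

The same check works on a worker node if you pass it the worker's unit names instead of `MASTER_UNITS`.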

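The API server exposes a highly configurable REST interface to all of the Kubernetes resources. It is the front end of the cluster: the other components, as well as clients such as kubectl, read and update cluster state through it.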
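Because the interface is RESTful, every resource is addressed by a predictable URL path. Core resources such as pods, services, and nodes live under `/api/v1`. Resources in named API groups live under `/apis/<group>/<version>`. Namespaced resources add a `namespaces/<namespace>` segment. The helper below is a hypothetical illustration of that naming scheme, not part of any Kubernetes client library.

```python
from typing import Optional


def resource_path(resource: str, *, group: str = "", version: str = "v1",
                  namespace: Optional[str] = None,
                  name: Optional[str] = None) -> str:
    """Build a Kubernetes API path (hypothetical helper, for illustration).

    The core group (pods, services, nodes, ...) is served under /api/<version>;
    every other API group is served under /apis/<group>/<version>.
    """
    prefix = f"/apis/{group}/{version}" if group else f"/api/{version}"
    parts = [prefix]
    if namespace is not None:
        parts.append(f"namespaces/{namespace}")  # namespaced resources only
    parts.append(resource)
    if name is not None:
        parts.append(name)  # a single object rather than the whole collection
    return "/".join(parts)


print(resource_path("pods", namespace="default"))
# /api/v1/namespaces/default/pods
print(resource_path("nodes", name="node-1"))
# /api/v1/nodes/node-1
print(resource_path("jobs", group="batch", namespace="default", name="backup"))
# /apis/batch/v1/namespaces/default/jobs/backup
```

Standard HTTP verbs then act on these paths. A GET reads a resource or collection, a POST to a collection creates an object, PUT or PATCH updates one, and DELETE removes it.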

The scheduler’s main responsibility is to place containers, grouped into pods, onto nodes in the cluster according to various policies, metrics, and resource requirements. It is also configurable via command-line flags.
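The scheduler of this era works in two phases. First it filters out nodes that cannot run the pod (its "predicates"). Then it ranks the remaining nodes (its "priorities") and picks the best one. The sketch below is a heavily simplified model of that idea under stated assumptions. It considers only CPU and memory requests, and its scoring rule is loosely modeled on a "least requested" priority that favors nodes left with the most free capacity. The `Node`, `PodRequest`, and `schedule` names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_capacity: int       # millicores
    mem_capacity: int       # MiB
    cpu_requested: int = 0  # already promised to pods on this node
    mem_requested: int = 0


@dataclass
class PodRequest:
    name: str
    cpu: int  # millicores
    mem: int  # MiB


def fits(node: Node, pod: PodRequest) -> bool:
    """Filter phase: does the node have enough unrequested CPU and memory?"""
    return (node.cpu_requested + pod.cpu <= node.cpu_capacity and
            node.mem_requested + pod.mem <= node.mem_capacity)


def score(node: Node, pod: PodRequest) -> float:
    """Ranking phase: average free fraction of CPU and memory after placement."""
    cpu_free = (node.cpu_capacity - node.cpu_requested - pod.cpu) / node.cpu_capacity
    mem_free = (node.mem_capacity - node.mem_requested - pod.mem) / node.mem_capacity
    return (cpu_free + mem_free) / 2


def schedule(pod: PodRequest, nodes: List[Node]) -> Optional[str]:
    """Return the chosen node's name, or None if no node can fit the pod."""
    candidates = [n for n in nodes if fits(n, pod)]
    if not candidates:
        return None
    best = max(candidates, key=lambda n: score(n, pod))
    # Record the placement so later decisions see the reduced free capacity.
    best.cpu_requested += pod.cpu
    best.mem_requested += pod.mem
    return best.name


nodes = [
    Node("node-1", cpu_capacity=2000, mem_capacity=4096,
         cpu_requested=1500, mem_requested=1024),
    Node("node-2", cpu_capacity=4000, mem_capacity=8192,
         cpu_requested=1000, mem_requested=2048),
]
chosen = schedule(PodRequest("web", cpu=500, mem=512), nodes)
print(chosen)  # node-2
```

Both nodes pass the filter here. However, placing the pod on `node-1` would leave it with no free CPU, so `node-2` scores higher (about 0.66 versus about 0.31).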

Finally, the controller manager is responsible for reconciling the actual state of the cluster with the desired state, as specified via the API. In effect, it runs control loops that observe the current state of the cluster, compare it with the desired state, and act to close the gap.
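The core of such a control loop is small: observe, diff against the desired state, act, and repeat. The sketch below shows that pattern on hypothetical replica counts per application. The `diff` and `control_loop` names are illustrative. A real controller would create or delete pods through the API server and re-read the cluster state on every pass. Here, "acting" simply edits the `observed` dictionary, and the dictionary itself stands in for the observation.

```python
from typing import Dict


def diff(desired: Dict[str, int], observed: Dict[str, int]) -> Dict[str, int]:
    """Per-app change needed: positive means start replicas, negative means stop."""
    return {
        app: desired.get(app, 0) - observed.get(app, 0)
        for app in sorted(set(desired) | set(observed))
        if desired.get(app, 0) != observed.get(app, 0)
    }


def control_loop(desired: Dict[str, int], observed: Dict[str, int],
                 max_iterations: int = 10) -> Dict[str, int]:
    """Repeatedly compare and act until observed matches desired."""
    for _ in range(max_iterations):
        changes = diff(desired, observed)
        if not changes:
            break  # converged: nothing to do until either state changes
        for app, delta in changes.items():
            # Stand-in for creating/deleting pods via the API server.
            observed[app] = observed.get(app, 0) + delta
            if observed[app] == 0:
                del observed[app]
    return observed


desired = {"web": 3, "worker": 2}
observed = {"web": 1, "legacy": 1}
print(diff(desired, observed))  # {'legacy': -1, 'web': 2, 'worker': 2}
result = control_loop(desired, observed)
print(result)  # {'web': 3, 'worker': 2}
```

Structuring the logic as "compute the gap, then close it" rather than as a one-shot command is what lets the loop recover on its own. For example, if a container dies later, the next pass sees the gap and starts a replacement.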

The master components also support a highly available, multi-master setup: the schedulers and controller managers elect a leader among themselves, while the API servers can be fronted by a load balancer.
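Leader election for the schedulers and controller managers (enabled with the `--leader-elect` flag) is lease based. Whoever holds an unexpired lease is the leader and must keep renewing it. If the leader stops renewing, another instance takes over once the lease expires. The single-process sketch below models only that rule. The `Lease` and `try_acquire` names and the 15-second duration are assumptions for illustration. Kubernetes itself stores the lease record in the cluster and relies on the API server to make competing updates atomic, which this sketch does not model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Lease:
    holder: Optional[str] = None
    expires_at: float = 0.0  # seconds, on whatever clock `now` uses


def try_acquire(lease: Lease, candidate: str, now: float,
                duration: float = 15.0) -> bool:
    """Acquire or renew the lease; return True if `candidate` is the leader."""
    free = lease.holder is None or now >= lease.expires_at
    if free or lease.holder == candidate:
        lease.holder = candidate
        lease.expires_at = now + duration
        return True
    return False  # someone else holds an unexpired lease


lease = Lease()
results = [
    try_acquire(lease, "scheduler-a", now=0.0),   # lease was free
    try_acquire(lease, "scheduler-b", now=5.0),   # a still holds it
    try_acquire(lease, "scheduler-a", now=10.0),  # a renews until t=25
    try_acquire(lease, "scheduler-b", now=30.0),  # a stopped renewing; b takes over
]
print(results)       # [True, False, True, True]
print(lease.holder)  # scheduler-b
```

Only the instance that gets `True` does any scheduling work. The others keep calling `try_acquire` periodically so they can take over if the leader disappears.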

Worker Nodes

All the worker nodes run the kubelet, kube-proxy, and the Docker engine.

The kubelet interacts with the underlying Docker engine to bring up containers as needed. The kube-proxy is in charge of network connectivity to the containers: it forwards traffic addressed to a Kubernetes service to the containers that back that service.
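kube-proxy watches services and their endpoints through the API server. It forwards traffic sent to a service's virtual IP to one of the backing containers. Its original userspace mode picked backends round-robin. The iptables mode, the default since Kubernetes 1.2, instead installs rules that pick a backend at random. The sketch below models only the round-robin selection step. The `RoundRobinProxy` class and all IP addresses are hypothetical.

```python
from typing import Dict, List, Tuple

Endpoint = Tuple[str, int]  # (container IP, port)


class RoundRobinProxy:
    """Toy model: map a service address to its backends and rotate among them."""

    def __init__(self) -> None:
        self._endpoints: Dict[Tuple[str, int], List[Endpoint]] = {}
        self._next: Dict[Tuple[str, int], int] = {}

    def update_service(self, service_ip: str, port: int,
                       endpoints: List[Endpoint]) -> None:
        """Replace a service's backend list, e.g. after containers start or stop."""
        key = (service_ip, port)
        self._endpoints[key] = list(endpoints)
        self._next[key] = 0

    def route(self, service_ip: str, port: int) -> Endpoint:
        """Return the backend that should receive the next connection."""
        key = (service_ip, port)
        backends = self._endpoints.get(key)
        if not backends:
            raise LookupError(f"no endpoints for {service_ip}:{port}")
        i = self._next[key]
        self._next[key] = (i + 1) % len(backends)  # advance the rotation
        return backends[i]


proxy = RoundRobinProxy()
proxy.update_service("10.0.0.10", 80,
                     [("172.17.0.2", 8080), ("172.17.0.3", 8080)])
picks = [proxy.route("10.0.0.10", 80) for _ in range(3)]
print(picks)
# [('172.17.0.2', 8080), ('172.17.0.3', 8080), ('172.17.0.2', 8080)]
```

Clients only ever see the stable service address. The set of backends behind it can change as containers come and go, and the proxy absorbs that churn.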

```
core@node-1 ~ $ systemctl -a | grep kube
kube-kubelet.service   loaded active running Kubernetes Kubelet
kube-proxy.service     loaded active running Kubernetes Proxy
core@node-1 ~ $ systemctl -a | grep docker
docker.service         loaded active running Docker Application Container Engine
docker.socket          loaded active running Docker Socket for the API
```

As a side note, you can also run rkt, CoreOS’s alternative to the Docker engine. It is likely that Kubernetes will support additional container runtimes in the future.

Next week we’ll learn about networking and about maintaining a persistence layer with etcd.
