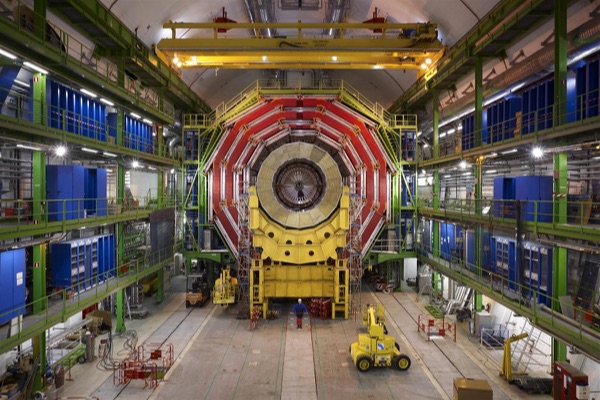

The high energy physics team at the California Institute of Technology (Caltech) is part of a vast global network of researchers performing experiments with the Large Hadron Collider (LHC), the world’s biggest machine, at CERN in Switzerland and France. Their goal is to make new discoveries about how our universe evolves, and they’re using Linux and open source software to do it. The research includes searches for the Higgs boson, extra dimensions, supersymmetry, and particles that could make up dark matter.

LHC experiments output an enormous amount of data – over 200 petabytes – that is then shared with the global research community for review and analysis. That data is dispersed throughout a network of 13 Tier 1 sites, 160 Tier 2 sites, and 300+ Tier 3 sites, and it crosses a range of service provider and geographic boundaries with different bandwidths and capabilities.

An international team of high energy physicists, computer scientists, and network engineers has been exploring Software-Defined Networking (SDN) as a means of sharing the LHC’s data output quickly and efficiently with the global research community. The project is led by Caltech, SPRACE Sao Paulo, and University of Michigan, with teams from FIU and Vanderbilt.

We met with the Caltech team to understand how they’re using the OpenDaylight open source SDN platform and the OpenFlow protocol to create a highly intelligent software-defined network. Their work has implications not only for the LHC, but also for any enterprise, telecommunications provider, or service provider facing ever-increasing data volumes.