Take a look inside one Linux sysadmin’s path to achieving his goals.

Read More at Enable Sysadmin

My journey into Linux system administration

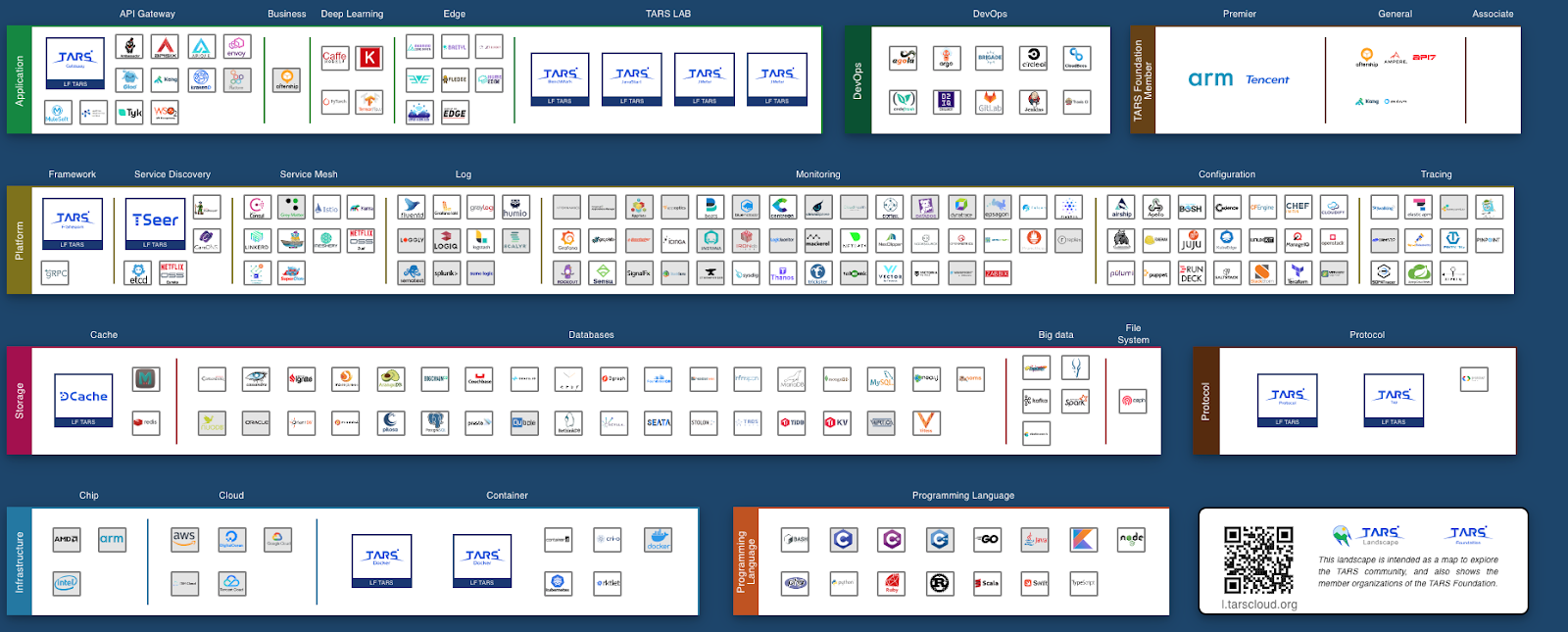

The TARS Foundation Celebrates its First Anniversary

The TARS Foundation, an open source microservices foundation under the Linux Foundation, celebrated its first anniversary on March 10, 2021. As we all know, 2020 was a strange year, and we are all adjusting to the new normal. Meanwhile, despite being unable to meet in person, the TARS Foundation community is connected, sharing and working together virtually toward our goals.

This year, four new projects have joined the TARS Foundation, expanding our technical community. The TARS Foundation launched TARS Landscape in July 2020, presenting an ideal and complete microservice ecosystem, which is the vision that the TARS open source community works to achieve. Furthermore, we welcome more open source projects to join the TARS community and go through our incubation process.

In September 2020, The Linux Foundation and TARS Foundation released a new, free training course, Building Microservice Platforms with TARS, on the edX platform. This course is designed for engineers working in microservices and for enterprise managers interested in exploring internal technical architectures that support digital transformation in traditional industries. The course explains the functions, characteristics, and structure of the TARS microservices framework while demonstrating how to deploy and maintain services in different programming languages in the TARS Framework. Anyone interested in software architecture will also benefit from this course.

If you are interested in TARS training resources, please check out Building Microservice Platforms with TARS on edX.

Thanking our Members and Contributors

For more updates from TARS Foundation, please read our Annual Report 2020.

We would like to thank all our projects and project contributors. Thank you for your trust in the TARS Foundation. Without you and the value you bring to our entire community, our foundation would not exist.

We also want to thank our Governing Board, Technical Oversight Committee, Outreach Committee, and Community Advisor members! Every member has demonstrated their dedication and tireless efforts to ensure that the TARS Foundation is building a complete governance structure to push out a more comprehensive range of programs and make real progress. With the guidance of these passionate and wise leaders from our governing bodies, the TARS Foundation is confident it will become a neutral home for additional projects that solve critical problems surrounding microservices.

Thank you to all our members, Arm, Tencent, AfterShip, Ampere, API7, Kong, Zenlayer, and Nanjing University, for investing in the future of open source microservices. The TARS Foundation welcomes more companies and organizations to join our mission by becoming members.

Thank you to our end users! The TARS Foundation End User Community Plan was released to allow more companies to get involved with the TARS community. The purpose of the plan is to enable an open and free platform for communication and discussion about microservices technology and collaboration opportunities. Currently, the TARS Foundation has eight end-user companies, and we welcome more companies to join us as End Users.

What is next?

The TARS Foundation will continue to add more members and end-user companies in the next year while growing our shared resource pool for the benefit of our community. We will also look to include and incubate more projects, aiding our open source microservices ecosystem to empower any industry to turn ideas into applications at scale quickly. As part of our plan for next year, we aim to hold recurring meetup events worldwide and large-scale summits, creating a space for global developers to learn and exchange their ideas about microservices.

Words from our partners

Kevin Ryan, Senior Director, Arm

“Through our collaboration with the TARS Foundation and Tencent, we’ve leveraged a significant opportunity to build and develop the microservices ecosystem,” said Kevin Ryan, senior director of Ecosystem, Automotive and IoT Line of Business, Arm. “We look forward to future growth across the TARS community as contributions, members, and momentum continue to accelerate.”

Mark Shan, Open Source Alliance Chair, Tencent

As TARS Foundation turns one year old, Tencent will continue to collaborate with partners and build an open and free microservices ecosystem in open source. By consistently upgrading microservices technology and cultivating the TARS community, we look forward to creating more innovations and making social progress through technology.

Teddy Chan, CEO & Co-Founder, AfterShip

Best wishes to the TARS Foundation for turning one year old and continuing its positive influence on microservices. AfterShip will fully support the future development of the Foundation!

Mauri Whalen, VP of Software Engineering, Ampere

Ampere has been partnering with the TARS Foundation to drive innovation for microservices. Ampere understands the importance of this technology and is committed to providing Ampere/Arm64 Platform support and a performance testing framework for building the open microservices community. We are excited the TARS Foundation has reached its first birthday milestone. Their project is driving needed innovation for modern cloud workloads.

Ming Wen, Co-founder, API7

Congratulations on the first anniversary of the TARS Foundation! With the wave of enterprise digital transformation, microservices have become the infrastructure for connecting critical traffic. The TARS Foundation has gathered several well-known open source projects related to microservices, including the APISIX-based open source microservice gateway provided by api7.ai. We believe that under the TARS Foundation’s efforts, microservices and the TARS Foundation will play an increasingly important role in digital transformation.

Marco Palladino, CTO and Co-Founder, Kong

In this new era driven by digital transformation 2.0, organizations around the world are transforming their applications to microservices to grow their customer base faster, enter new markets, and ship products faster. None of this would be possible without agile, distributed, and decoupled architectures that drive innovation, efficiency, and reliability in our digital strategy: in one word, microservices. Kong supports the TARS Foundation to accelerate microservices adoption in both open source ecosystems and the enterprise landscape, and to provide a modern connectivity fabric for all our services, across every cloud and platform.

Jim Xu, Principal Engineer & Architect, Zenlayer

Microservices are the next big thing in the cloud as they enable fast development, scaling, and time-to-market of enterprise applications. TARS Foundation leads in building a strong ecosystem for open-source microservices, from the edge to the cloud. As a leading-edge cloud service provider, Zenlayer is committed to enabling microservices in multi-cloud and hybrid cloud scenarios in collaboration with the TARS Foundation community. As the TARS Foundation enters its second year, Zenlayer will continue to innovate in infrastructure, platforms, and labs to empower microservice implementation for enterprises of all kinds.

He Zhang, Professor, Nanjing University

We fully support the development of microservices and the mission to co-build a Cloud-native ecosystem. Embracing open source and community contribution, we believe the TARS Foundation is creating a future with endless possibilities ahead.

About the TARS Foundation

The TARS Foundation is a nonprofit, open source microservice foundation under the Linux Foundation umbrella to support the rapid growth of contributions and membership for a community focused on building an open microservices platform. It focuses on open source technology that helps businesses to embrace the microservices architecture as they innovate into new areas and scale their applications. For more information, please visit tarscloud.org.

How to use Ansible to send an email using Gmail

Here’s a brief introductory article that describes how to configure Gmail with Ansible.

Sarthak Jain

Thu, 3/11/2021 at 4:51pm

Photo by Laura Stanley from Pexels

A lot of people use Gmail daily to send and receive mail. The estimated number of global users in 2020 was 1.8 billion. Gmail accepts outgoing mail over the SMTP protocol on port 587. In this article, I demonstrate how to configure your SMTP server settings and send mail automatically from Ansible, using ansible-vault to secure passwords.
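To make the SMTP exchange on port 587 concrete, here is a minimal Python sketch of what any mail-sending tool has to do underneath. It is not the Ansible playbook this article goes on to describe; it only illustrates the protocol steps using the standard library. The function names are hypothetical, and the addresses and credentials you pass in are placeholders you would supply yourself.

```python
import smtplib
from email.message import EmailMessage

SMTP_HOST = "smtp.gmail.com"  # Gmail's SMTP host
SMTP_PORT = 587               # submission port named in the article (used with STARTTLS)


def build_message(sender: str, recipient: str, subject: str, body: str) -> EmailMessage:
    """Assemble a plain-text email; no network access happens here."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


def send_message(msg: EmailMessage, username: str, password: str) -> None:
    """Send msg over an encrypted SMTP session (needs network access and valid credentials)."""
    with smtplib.SMTP(SMTP_HOST, SMTP_PORT) as server:
        server.starttls()                 # upgrade to TLS before authenticating
        server.login(username, password)  # the secret you would keep in an encrypted file
        server.send_message(msg)
```

Only `build_message` can be exercised offline; `send_message` needs a real account, which is why the password belongs in an encrypted store rather than in the script itself.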

Encrypt your password file

The ansible-vault command creates an encrypted file where you can store your confidential details.

Read More at Enable Sysadmin

Review of Four Hyperledger Libraries- Aries, Quilt, Ursa, and Transact

By Matt Zand

Recap

In our two previous articles, first we covered “Review of Five popular Hyperledger DLTs- Fabric, Besu, Sawtooth, Iroha and Indy” where we discussed the following five Hyperledger Distributed Ledger Technologies (DLTs):

- Hyperledger Indy

- Hyperledger Fabric

- Hyperledger Iroha

- Hyperledger Sawtooth

- Hyperledger Besu

Then, we moved on to our second article (Review of three Hyperledger Tools- Caliper, Cello and Avalon) where we surveyed the following three Hyperledger tools:

- Hyperledger Caliper

- Hyperledger Cello

- Hyperledger Avalon

So in this follow-up article, we review four (as listed below) Hyperledger libraries that work very well with other Hyperledger DLTs. As of this writing, all of these libraries are at the incubation stage except for Hyperledger Aries, which has graduated to active.

- Hyperledger Aries

- Hyperledger Quilt

- Hyperledger Ursa

- Hyperledger Transact

Hyperledger Aries

Identity has been adopted by the industry as one of the most promising use cases of DLTs. Solutions and initiatives around creating, storing, and transmitting verifiable digital credentials will result in a reusable, shared, interoperable tool kit. In response to such growing demand, Hyperledger has come up with three projects (Hyperledger Indy, Hyperledger Iroha and Hyperledger Aries) that are specifically focused on identity management.

Hyperledger Aries is infrastructure for blockchain-rooted, peer-to-peer interactions. It includes a shared cryptographic wallet (the secure storage tech, not a UI) for blockchain clients as well as a communications protocol for allowing off-ledger interactions between those clients. This project consumes the cryptographic support provided by Hyperledger Ursa to provide secure secret management and decentralized key management functionality.

According to Hyperledger Aries’ documentation, Aries includes the following features:

- An encrypted messaging system for off-ledger interactions using multiple transport protocols between clients.

- A blockchain interface layer, also called a resolver. It is used for creating and signing blockchain transactions.

- A cryptographic wallet to enable secure storage of cryptographic secrets and other information that is used for building blockchain clients.

- An implementation of ZKP-capable W3C verifiable credentials with the help of the ZKP primitives that are found in Hyperledger Ursa.

- A mechanism to build API-like use cases and higher-level protocols based on secure messaging functionality.

- An implementation of the specifications of the Decentralized Key Management System (DKMS) that are currently being incubated in Hyperledger Indy.

- Initially, the generic interface of Hyperledger Aries will support the Hyperledger Indy resolver. But the interface is flexible in the sense that anyone can build a pluggable method using DID method resolvers such as Ethereum and Hyperledger Fabric, or any other DID method resolver they wish to use. These resolvers would support the resolving of transactions and other data on other ledgers.

- Hyperledger Aries will additionally allow functionality and features outside the scope of the Hyperledger Indy ledger to be fully planned and supported. Owing to these capabilities, the community can now build core message families to facilitate interoperable interactions across a wide range of use cases that involve blockchain-based identity.

For more detailed discussion on its implementation, visit the link provided in the References section.

Hyperledger Quilt

The widespread adoption of blockchain technology by global businesses has coincided with the emergence of tons of isolated and disconnected networks or ledgers. While users can easily conduct transactions within their own network or ledger, they experience technical difficulty (and in some cases impracticality) when transacting with parties residing on different networks or ledgers. At best, the process of cross-ledger (or cross-network) transactions is slow, expensive, or manual. However, with the advent and adoption of Interledger Protocol (ILP), money and other forms of value can be routed, packetized, and delivered over ledgers and payment networks.

Hyperledger Quilt is a tool for interoperability between ledger systems and is written in Java by implementing the ILP for atomic swaps. While the Interledger is a protocol for making transactions across ledgers, ILP is a payment protocol designed to transfer value across non-distributed and distributed ledgers. The standards and specifications of Interledger protocol are governed by the open-source community under the World Wide Web Consortium umbrella. Quilt is an enterprise-grade implementation of the ILP, and provides libraries and reference implementations for the core Interledger components used for payment networks. With the launch of Quilt, the JavaScript (Interledger.js) implementation of Interledger was maintained by the JS Foundation.

According to the Quilt documentation, as a result of ILP implementation, Quilt offers the following features:

- A framework to design higher-level use-case specific protocols.

- A set of rules to enable interoperability with basic escrow semantics.

- A standard for data packet format and a ledger-independent address format to enable connectors to route payments.
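The last point, connectors routing payments by address, can be pictured with a small Python sketch. It is a toy model and not Quilt's Java API: the `Packet` and `Connector` classes, the dot-separated addresses, and the peer names are all hypothetical, and the routing rule shown (longest matching address prefix) is an assumption chosen for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Packet:
    """Toy stand-in for an Interledger packet: destination address plus amount."""
    destination: str  # dot-separated, ledger-independent address (hypothetical)
    amount: int


class Connector:
    """Toy connector that forwards packets by longest matching address prefix."""

    def __init__(self) -> None:
        self._routes: Dict[str, str] = {}

    def add_route(self, prefix: str, next_hop: str) -> None:
        self._routes[prefix] = next_hop

    def next_hop(self, packet: Packet) -> Optional[str]:
        segments = packet.destination.split(".")
        # Try the full address first, then progressively shorter prefixes.
        for end in range(len(segments), 0, -1):
            prefix = ".".join(segments[:end])
            if prefix in self._routes:
                return self._routes[prefix]
        return None  # no route known for this address


connector = Connector()
connector.add_route("g", "default-peer")
connector.add_route("g.bank-a", "peer-bank-a")
connector.add_route("g.bank-a.eu", "peer-bank-a-eu")
```

Because the address says nothing about which ledger sits behind it, the connector can pick a next hop without knowing how the destination ledger works.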

For more detailed discussion on its implementation, visit the link provided in the References section.

Hyperledger Ursa

Hyperledger Ursa is a shared cryptographic library that enables people (and projects) to avoid duplicating other cryptographic work and hopefully increase security in the process. The library is an opt-in repository for Hyperledger projects (and, potentially others) to place and use crypto.

Inside Project Ursa, a complete library of modular signatures and symmetric-key primitives is at the disposal of developers to swap in and out different cryptographic schemes through configuration and without having to modify their code. On top of its base library, Ursa also includes newer cryptography, including pairing-based, threshold, and aggregate signatures. Furthermore, the zero-knowledge primitives including SNARKs are also supported by Ursa.
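The idea of swapping schemes through configuration rather than code changes can be sketched in a few lines of Python. This is not Ursa's API: the registry, the function names, and the configuration key are hypothetical, and HMAC from the Python standard library stands in for the signature schemes a real cryptographic library would provide.

```python
import hashlib
import hmac
from typing import Callable, Dict

# Registry mapping a scheme name to a function (key, message) -> tag.
# HMAC is only a stand-in here; it is a message authentication code, not a signature.
SCHEMES: Dict[str, Callable[[bytes, bytes], bytes]] = {
    "hmac-sha256": lambda key, msg: hmac.new(key, msg, hashlib.sha256).digest(),
    "hmac-sha512": lambda key, msg: hmac.new(key, msg, hashlib.sha512).digest(),
}


def authenticate(config: Dict[str, str], key: bytes, message: bytes) -> bytes:
    """Compute a tag with whichever scheme the configuration names."""
    scheme = SCHEMES[config["scheme"]]
    return scheme(key, message)


def verify(config: Dict[str, str], key: bytes, message: bytes, tag: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(authenticate(config, key, message), tag)
```

Calling code only ever touches `authenticate` and `verify`; changing `config["scheme"]` from `"hmac-sha256"` to `"hmac-sha512"` switches the primitive without touching the callers.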

According to Ursa’s documentation, Ursa offers the following benefits:

- Preventing duplication of solving similar security requirements across different blockchains.

- Simplifying the security audits of cryptographic operations since the code is consolidated into a single location. This reduces maintenance efforts of these libraries while improving the security footprint for developers with beginner knowledge of distributed ledger projects.

- Reviewing all cryptographic code in a single place will reduce the likelihood of dangerous security bugs.

- Boosting cross-platform interoperability when multiple platforms, which require cryptographic verification, are using the same security protocols on both platforms.

- Enhancing the architecture via modularity of common components will pave the way for future modular distributed ledger technology platforms using common components.

- Accelerating the time to market for new projects as long as an existing security paradigm can be plugged-in without a project needing to build it themselves.

For more detailed discussion on its implementation, visit the link provided in the References section.

Hyperledger Transact

Hyperledger Transact, in a nutshell, makes writing distributed ledger software easier by providing a shared software library that handles the execution of smart contracts, including all aspects of scheduling, transaction dispatch, and state management. Utilizing Transact, smart contracts can be executed irrespective of DLTs being used. Specifically, Transact achieves that by offering an extensible approach to implementing new smart contract languages called “smart contract engines.” As such, each smart contract engine implements a virtual machine or interpreter that processes smart contracts.

At its core, Transact is solely a transaction processing system for state transitions. That is, state data is normally stored in a key-value or an SQL database. Considering an initial state and a transaction, Transact executes the transaction to produce a new state. These state transitions are deemed “pure” because only the initial state and the transaction are used as input (in contrast to other systems such as Ethereum, where state and block information are mixed to produce the new state). Therefore, Transact is agnostic about DLT framework features other than transaction execution and state.
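A “pure” state transition is easy to show in miniature. The Python sketch below is an illustration of the concept only, not Transact's API: the transaction format (an operation, a key, and a value) and the function name are hypothetical. The point is that the new state depends on nothing except the initial state and the transaction.

```python
from typing import Dict, Tuple

State = Dict[str, int]
Transaction = Tuple[str, str, int]  # (operation, key, value); a hypothetical format


def apply_transaction(state: State, txn: Transaction) -> State:
    """Return the new state computed from (state, txn) alone; the input is not mutated."""
    op, key, value = txn
    new_state = dict(state)  # work on a copy so the initial state stays intact
    if op == "set":
        new_state[key] = value
    elif op == "add":
        new_state[key] = new_state.get(key, 0) + value
    elif op == "delete":
        new_state.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {op}")
    return new_state


initial = {"alice": 10}
after = apply_transaction(initial, ("add", "alice", 5))
```

Because no block height, timestamp, or other ambient data enters the function, replaying the same transaction against the same state always yields the same result.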

According to Hyperledger Transact’s documentation, Transact comes with the following components:

- State. The Transact state implementation provides get, set, and delete operations against a database. For the Merkle-Radix tree state implementation, the tree structure is implemented on top of LMDB or an in-memory database.

- Context manager. In Transact, state reads and writes are scoped (sandboxed) to a specific “context” that contains a reference to a state ID (such as a Merkle-Radix state root hash) and one or more previous contexts. The context manager implements the context lifecycle and services the calls that read, write, and delete data from state.

- Scheduler. This component controls the order of transactions to be executed. Concrete implementations include a serial scheduler and a parallel scheduler. Parallel transaction execution is an important innovation for increasing network throughput.

- Executor. The Transact executor obtains transactions from the scheduler and executes them against a specific context. Execution is handled by sending the transaction to specific execution adapters (such as ZMQ or a static in-process adapter) which, in turn, send the transaction to a specific smart contract.

- Smart Contract Engines. These components provide the virtual machine implementations and interpreters that run the smart contracts. Examples of engines include WebAssembly, Ethereum Virtual Machine, Sawtooth Transactions Processors, and Fabric Chaincode.
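How these components fit together can be sketched with a toy pipeline in Python. None of the names below come from the Transact library: the `Context`, `SerialScheduler`, and `execute` definitions, the transaction format, and the `deposit` handler (standing in for a smart contract) are all hypothetical, and only the serial scheduling case is shown.

```python
from collections import deque
from typing import Callable, Deque, Dict, Iterator, Tuple

Transaction = Tuple[str, str, int]  # (contract name, key, amount); hypothetical format


class Context:
    """Scoped view of state: writes are buffered until the context is committed."""

    def __init__(self, base: Dict[str, int]) -> None:
        self._base = base
        self._writes: Dict[str, int] = {}

    def get(self, key: str) -> int:
        return self._writes.get(key, self._base.get(key, 0))

    def set(self, key: str, value: int) -> None:
        self._writes[key] = value

    def commit(self) -> Dict[str, int]:
        return {**self._base, **self._writes}


Handler = Callable[[Context, str, int], None]


class SerialScheduler:
    """Hands out transactions one at a time, in arrival order."""

    def __init__(self) -> None:
        self._queue: Deque[Transaction] = deque()

    def add(self, txn: Transaction) -> None:
        self._queue.append(txn)

    def __iter__(self) -> Iterator[Transaction]:
        while self._queue:
            yield self._queue.popleft()


def execute(scheduler: SerialScheduler, handlers: Dict[str, Handler],
            state: Dict[str, int]) -> Dict[str, int]:
    """Run each scheduled transaction in its own context and commit the result."""
    for contract, key, amount in scheduler:
        context = Context(state)
        handlers[contract](context, key, amount)  # dispatch to the matching contract
        state = context.commit()
    return state


def deposit(context: Context, key: str, amount: int) -> None:
    """Toy smart contract: add amount to the balance stored under key."""
    context.set(key, context.get(key) + amount)


scheduler = SerialScheduler()
scheduler.add(("deposit", "alice", 5))
scheduler.add(("deposit", "alice", 7))
final_state = execute(scheduler, {"deposit": deposit}, {"alice": 1})
```

A parallel scheduler would differ mainly in deciding which transactions touch disjoint keys and can therefore run at the same time; the context boundary is what makes that analysis possible.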

For more detailed discussion on its implementation, visit the link provided in the References section.

Summary

In this article, we reviewed four Hyperledger libraries that are great resources for managing Hyperledger DLTs. We started by explaining Hyperledger Aries, which is infrastructure for blockchain-rooted, peer-to-peer interactions and includes a shared cryptographic wallet for blockchain clients as well as a communications protocol for allowing off-ledger interactions between those clients. Then, we learned that Hyperledger Quilt is the interoperability tool between ledger systems and is written in Java by implementing the ILP for atomic swaps. While the Interledger is a protocol for making transactions across ledgers, ILP is a payment protocol designed to transfer value across non-distributed and distributed ledgers. We also discussed that Hyperledger Ursa is a shared cryptographic library that would enable people (and projects) to avoid duplicating other cryptographic work and hopefully increase security in the process. The library would be an opt-in repository for Hyperledger projects (and, potentially others) to place and use crypto. We concluded our article by reviewing Hyperledger Transact by which smart contracts can be executed irrespective of DLTs being used. Specifically, Transact achieves that by offering an extensible approach to implementing new smart contract languages called “smart contract engines.”

References

For more references on all Hyperledger projects, libraries and tools, visit the below documentation links:

- Hyperledger Indy Project

- Hyperledger Fabric Project

- Hyperledger Aries Library

- Hyperledger Iroha Project

- Hyperledger Sawtooth Project

- Hyperledger Besu Project

- Hyperledger Quilt Library

- Hyperledger Ursa Library

- Hyperledger Transact Library

- Hyperledger Cactus Project

- Hyperledger Caliper Tool

- Hyperledger Cello Tool

- Hyperledger Explorer Tool

- Hyperledger Grid (Domain Specific)

- Hyperledger Burrow Project

- Hyperledger Avalon Tool

Resources

- Free Training Courses from The Linux Foundation & Hyperledger

- Blockchain: Understanding Its Uses and Implications (LFS170)

- Introduction to Hyperledger Blockchain Technologies (LFS171)

- Introduction to Hyperledger Sovereign Identity Blockchain Solutions: Indy, Aries & Ursa (LFS172)

- Becoming a Hyperledger Aries Developer (LFS173)

- Hyperledger Sawtooth for Application Developers (LFS174)

- eLearning Courses from The Linux Foundation & Hyperledger

- Certification Exams from The Linux Foundation & Hyperledger

- Hands-On Smart Contract Development with Hyperledger Fabric V2 Book by Matt Zand and others.

- Essential Hyperledger Sawtooth Features for Enterprise Blockchain Developers

- Blockchain Developer Guide- How to Install Hyperledger Fabric on AWS

- Blockchain Developer Guide- How to Install and work with Hyperledger Sawtooth

- Intro to Blockchain Cybersecurity

- Intro to Hyperledger Sawtooth for System Admins

- Blockchain Developer Guide- How to Install Hyperledger Iroha on AWS

- Blockchain Developer Guide- How to Install Hyperledger Indy and Indy CLI on AWS

- Blockchain Developer Guide- How to Configure Hyperledger Sawtooth Validator and REST API on AWS

- Intro blockchain development with Hyperledger Fabric

- How to build DApps with Hyperledger Fabric

- Blockchain Developer Guide- How to Build Transaction Processor as a Service and Python Egg for Hyperledger Sawtooth

- Blockchain Developer Guide- How to Create Cryptocurrency Using Hyperledger Iroha CLI

- Blockchain Developer Guide- How to Explore Hyperledger Indy Command Line Interface

- Blockchain Developer Guide- Comprehensive Blockchain Hyperledger Developer Guide from Beginner to Advance Level

- Blockchain Management in Hyperledger for System Admins

- Hyperledger Fabric for Developers

About Author

Matt Zand is a serial entrepreneur and the founder of three tech startups: DC Web Makers, Coding Bootcamps and High School Technology Services. He is a lead author of the book Hands-on Smart Contract Development with Hyperledger Fabric, published by O’Reilly Media. He has written more than 100 technical articles and tutorials on blockchain development for Hyperledger, Ethereum and Corda R3 platforms at sites such as IBM, SAP, Alibaba Cloud, Hyperledger, The Linux Foundation, and more. As a public speaker, he has presented webinars at many Hyperledger communities across the USA and Europe. At DC Web Makers, he leads a team of blockchain experts for consulting and deploying enterprise decentralized applications. As chief architect, he has designed and developed blockchain courses and training programs for Coding Bootcamps. He has a master’s degree in business management from the University of Maryland. Prior to blockchain development and consulting, he worked as a senior web and mobile app developer and consultant, angel investor, and business advisor for a few startup companies. You can connect with him on LinkedIn.

The post Review of Four Hyperledger Libraries- Aries, Quilt, Ursa, and Transact appeared first on Linux Foundation – Training.

How open source communities are driving 5G’s future, even within a large government like the US

In mid-February, the Linux Foundation announced it had signed a collaboration agreement with the Defense Advanced Research Projects Agency (DARPA), enabling US Government suppliers to collaborate on a common open source platform that will enable the adoption of 5G wireless and edge technologies by the government. Governments face similar issues to enterprise end-users — if all their suppliers deliver incompatible solutions, the integration burden escalates exponentially.

The first collaboration, Open Programmable Secure 5G (OPS-5G), currently in the formative stages, will be used to create open source software and systems enabling end-to-end 5G and follow-on mobile networks.

The road to open source influencing 5G: The First, Second, and Third Waves of Open Source

If we examine the history of open source, it is informative to observe it from the perspective of evolutionary waves. Many open-source projects began as single technical projects, with specific objectives, such as building an operating system kernel or an application. This isolated, single project approach can be viewed as the first wave of open source.

We can view the second wave of open source as creating platforms seeking to address a broad horizontal solution, such as a cloud or networking stack or a machine learning and data platform.

The third wave of open source collaboration goes beyond isolated projects and integrates them for a common platform for a specific industry vertical. Additionally, the third wave often focuses on reducing fragmentation — you commonly will see a conformance program or a specification or standard that anyone in the industry can cite in procurement contracts.

Industry conformance becomes important as specific solutions are taken to market and as cross-industry solutions are built, especially now that we have technologies requiring cross-industry interaction, such as end-to-end 5G, the edge, or even cloud-native applications and environments that span any industry vertical.

The third wave of open source also seeks to provide comprehensive end-to-end solutions for enterprises and verticals, large institutional organizations, and government agencies. In this case, the community of government suppliers will be building an open source 5G stack used in enterprise networking applications. The end-to-end open source integration and collaboration supported by commercial investment with innovative products, services, and solutions accelerate the technology adoption and transformation.

Why DARPA chose to partner with the Linux Foundation

DARPA at the US Department of Defense has tens of thousands of contractors supplying networking solutions for government facilities and remote locations. However, it doesn’t want dozens, hundreds, or thousands of unique and incompatible hardware and software solutions originating from its large contractor and supplier ecosystem. Instead, it desires a portable and open access standard that provides transparency, so that advanced software tools and systems can be applied to a common code base that various groups in the government could build on. The goal is to have a common framework that decouples hardware and software requirements and enables adoption by more groups within the government.

Naturally, as a large end-user, the government wants its suppliers to focus on delivering secure solutions. A common framework can ideally decrease the security complexity versus having disparate, fragmented systems.

The Linux Foundation is also the home of nearly all the important open source projects in the 5G and networking space. Out of the $54B of the Linux Foundation community software projects that have been valued using the COCOMO2 model, the open source projects assisting with building a 5G stack are estimated to be worth about $25B in shared technology investment. The LF Networking projects have been valued at $7.4B just by themselves.

The support programs at Linux Foundation provide the key foundations for a shared community innovations pool. These programs include IP structure and legal frameworks, an open and transparent development process, neutral governance, conformance, and DevOps infrastructure for end-to-end project lifecycle and code management. Therefore, it is uniquely suited to be the home for a community-driven effort to define an open source 5G end-to-end architecture, create and run the open source projects that embody that architecture, and support its integration for scaling-out and accelerating adoption.

The foundations of a complete open source 5G stack

The Linux Foundation worked in the telecommunications industry early on in its existence, starting with the Carrier Grade Linux initiatives to identify requirements and build features that enable the Linux kernel to address telco requirements. In 2013, The Linux Foundation’s open source networking platform started with bespoke projects such as OpenDaylight, the software-defined networking controller. OPNFV (now Anuket), the network function virtualization stack, was introduced in 2014-2015, followed by the first release of Tungsten Fabric, the automated software-defined networking stack. FD.io, the secure networking data plane, was announced in 2016, a sister project of the Data Plane Development Kit (DPDK) released into open source in 2010.

At the time, the telecom/network and wireless carrier industry sought to commoditize and accelerate innovation across a specific piece of the stack as software-defined networking became part of their digital transformation. Since the introduction of these projects at LFN, the industry has seen heavy adoption and significant community contribution by the largest telecom carriers and service providers worldwide. This history is chronicled in detail in our whitepaper, Software-Defined Vertical Industries: Transformation Through Open Source.

The work that the member companies will focus on will require robust frameworks for ensuring changes to these projects are contributed back upstream into the source projects. Upstreaming, which is a key benefit to open source collaboration, allows the contributions specific to this 5G effort to roll back into their originating projects, thus improving the software for every end-user and effort that uses them.

The Linux Foundation networking stack continues to evolve and expand into additional projects due to an increased desire to innovate and commoditize across key technology areas through shared investments among its members. In February of 2021, Facebook contributed the Magma project, which transcends platform infrastructure such as the others listed above. Instead, it is a network function application that is core to 5G network operations.

The E2E 5G Super Blueprint is being developed by the LFN Demo working group. This is an open collaboration and we encourage you to join us. Learn more here

Building through organic growth and cross-pollination of the open source networking and cloud community

Tier 2 operators, rural operators, and governments worldwide want to reap the benefits of economic innovation as well as potential cost-savings from 5G. How is this accomplished?

With this joint announcement and its DARPA supplier community collaboration, the Linux Foundation’s existing projects can help serve the requirements of other large end-users. Open source communities are advancing and innovating some of the most important and exciting technologies of our time. It’s always interesting to have an opportunity to apply the results of these communities to new use cases.

The Linux Foundation understands the critical dynamic of cross-pollination between community-driven open source projects needed to help make an ecosystem successful. Its proven governance model has demonstrated the ability to maintain and mature open source projects over time and make them all work together in one single, cohesive ecosystem.

As a broad set of contributors work on components of an open source stack for 5G, there will be cross-community interactions. For example, that means that Project EVE, the cloud-native edge computing platform, will potentially be working with Project Zephyr, the scalable real-time operating system (RTOS) kernel, so that EVE can potentially orchestrate Zephyr devices. It’s all based on contributors’ self-interests and motivations to contribute functionality that enables these projects to work together. Similarly, ONAP, the network automation/orchestration platform, is tightly integrated with Akraino so that it has architectural deployment templates built around network edge clouds and multi-edge clouds.

An open source platform has implications not just for new business opportunities for government suppliers but also for other institutions. The projects within an open source platform have open interfaces that can be integrated and used with other software so that other large end-users, like the World Bank, can have validated and tested architectural blueprints with which they can deploy effective 5G solutions in the marketplace in many host countries, providing them a turnkey stack. This will enable them to encourage providers through competition or challenges native to their in-country commercial ecosystem to implement those networks.

This is a true solutions-oriented open source 5G stack for enterprises, governments, and the world.

The post How open source communities are driving 5G’s future, even within a large government like the US appeared first on Linux Foundation.

New open source project helps musicians jam together even when they’re not together

Today, the Linux Foundation announced that it would be adding Rend-o-matic to the list of Call for Code open source projects that it hosts. The Rend-o-matic technology was originally developed as part of the Choirless project during a Call for Code challenge as a way to enable musicians to jam together regardless of where they are. Initially developed to help musicians socially distance because of COVID-19, the application has many other benefits, including bringing together musicians from different parts of the world and allowing for multiple versions of a piece of music featuring various artist collaborations. The artificial intelligence powering Choirless ensures that the consolidated recording stays accurately synchronized even through long compositions, and this is just one of the pieces of software being released under the new Rend-o-matic project.

Created by a team of musically-inclined IBM developers, the Rend-o-matic project features a web-based interface that allows artists to record their individual segments via a laptop or phone. The individual segments are processed using acoustic analysis and AI to identify common patterns across multiple segments which are then automatically synced and output as a single track. Each musician can record on their own time in their own place with each new version of the song available as a fresh MP3 track. In order to scale the compute needed by the AI, the application uses IBM Cloud Functions in a serverless environment that can effortlessly scale up or down to meet demand without the need for additional infrastructure updates. Rend-o-matic is itself built upon open source technology, using Apache OpenWhisk, Apache CouchDB, Cloud Foundry, Docker, Python, Node.js, and FFmpeg.
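To make the synchronization step concrete, here is a minimal sketch of one common way to line up two recordings of the same passage: slide one against the other and keep the shift with the highest cross-correlation. This is an illustration only, not the Rend-o-matic or Choirless algorithm, which the announcement does not describe in detail. The function names (`best_offset`, `align`, `mix`) and the plain-list sample format are assumptions made for the example.

```python
def best_offset(reference, take, max_shift):
    """Return the shift (in samples) that best aligns `take` with `reference`.

    A positive result means `take` starts late and should be advanced
    by that many samples. Brute-force cross-correlation, for clarity.
    """
    best_shift, best_score = 0, float("-inf")
    for shift in range(-max_shift, max_shift + 1):
        score = 0.0
        for i, sample in enumerate(reference):
            j = i + shift
            if 0 <= j < len(take):
                score += sample * take[j]
        if score > best_score:
            best_shift, best_score = shift, score
    return best_shift


def align(take, shift):
    """Drop or pad leading samples so `take` lines up with the reference."""
    if shift >= 0:
        return take[shift:]
    return [0.0] * (-shift) + take


def mix(tracks):
    """Average aligned tracks sample by sample into a single track."""
    length = max(len(track) for track in tracks)
    return [
        sum(track[i] for track in tracks if i < len(track)) / len(tracks)
        for i in range(length)
    ]
```

A real pipeline would work on decoded audio (for example, frames extracted with FFmpeg) and would use a faster correlation method, but the idea of estimating an offset per part and then mixing the aligned parts is the same.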

Since its creation, Choirless has been incubated and improved as a Call for Code project, with an enhanced algorithm, increased availability, real-time audio-level visualizations, and more. The solution has been released for testing, and as of January, users of the hosted Choirless service built upon the Rend-o-matic project – including school choirs, professional musicians, and bands – have recorded 2,740 individual parts forming 745 distinct performances.

Call for Code invites developers and problem-solvers around the world to build and contribute to sustainable, open source technology projects that address social and humanitarian issues while ensuring the top solutions are deployed to make a demonstrable difference. Learn more about Call for Code. You can learn more about Rend-o-matic, sample the technology, and contribute back to the project at https://choirless.github.io/

The post New open source project helps musicians jam together even when they’re not together appeared first on Linux Foundation.

LF Edge’s State of the Edge 2021 Report Predicts Global Edge Computing Infrastructure Market to be Worth Up to $800 Billion by 2028

- COVID-19 highlighted that expertise in legacy data centers could be obsolete in the next few years as the pandemic forced the development of new tools enabled by edge computing for remote monitoring, provisioning, repair and management.

- Open source hardware and software projects are driving innovation at the edge by accelerating the adoption and deployment of applications for cloud-native, containerized and distributed applications.

- The LF Edge taxonomy, which offers terminology standardization with a balanced view of the edge landscape and is based on inherent technical and logistical trade-offs spanning the edge-to-cloud continuum, is gaining widespread industry adoption.

- Seven out of 10 areas of edge computing experienced growth in 2020 with a number of new use cases that are driven by 5G.

SAN FRANCISCO – March 10, 2021 – State of the Edge, a project under the LF Edge umbrella organization that established an open, interoperable framework for edge independent of hardware, silicon, cloud, or operating system, today announced the release of the 4th annual State of the Edge 2021 Report. The market and ecosystem report for edge computing shares insight and predictions on how the COVID-19 pandemic disrupted the status quo, how new types of critical infrastructure have emerged to service the next-level requirements, and open source collaboration as the only way to efficiently scale Edge Infrastructure.

Tolaga Research, which led the market forecasting research for this report, predicts that between 2019 and 2028, cumulative capital expenditures of up to $800 billion USD will be spent on new and replacement IT server equipment and edge computing facilities. These expenditures will be relatively evenly split between equipment for the device and infrastructure edges.

“Our 2021 analysis shows demand for edge infrastructure accelerating in a post COVID-19 world,” said Matt Trifiro, co-chair of State of the Edge and CMO of edge infrastructure company Vapor IO. “We’ve been observing this trend unfold in real-time as companies re-prioritize their digital transformation efforts to account for a more distributed workforce and a heightened need for automation. The new digital norms created in response to the pandemic will be permanent. This will intensify the deployment of new technologies like wireless 5G and autonomous vehicles, but will also impact nearly every sector of the economy, from industrial manufacturing to healthcare.”

The pandemic is accelerating digital transformation and service adoption.

Government lockdowns, social distancing and fragile supply chains had both consumers and enterprises using digital solutions last year that will permanently change the use cases across the spectrum. Expertise in legacy data centers could be obsolete in the next few years as the pandemic has forced the development of tools for remote monitoring, provisioning, repair and management, which will reduce the cost of edge computing. Some of the areas experiencing growth in the Global Infrastructure Edge Power are automotive, smart grid and enterprise technology. As businesses began spending more on edge computing, specific use cases increased including:

- Manufacturing increased from 3.9 to 6.2 percent, as companies bolster their supply chain and inventory management capabilities and capitalize on automation technologies and autonomous systems.

- Healthcare, which increased from 6.8 to 8.6 percent, was buoyed by increased expectations for remote healthcare, digital data management and assisted living.

- Smart cities increased from 5.0 to 6.1 percent in anticipation of increased expenditures in digital infrastructure in the areas such as surveillance, public safety, city services and autonomous systems.

“In our individual lock-down environments, each of us is an edge node of the Internet and all our computing is, mostly, edge computing,” said Wenjing Chu, senior director of Open Source and Research at Futurewei Technologies, Inc. and LF Edge Governing Board member. “The edge is the center of everything.”

Open Source is driving innovation at the edge by accelerating the adoption and deployment of edge applications.

Open Source has always been the foundation of innovation and this became more prevalent during the pandemic as individuals continued to turn to these communities for normalcy and collaboration. LF Edge, which hosts nine projects including State of the Edge, is an important driver of standards for the telecommunications, cloud and IoT edge. Each project collaborates individually and together to create an open infrastructure that creates an ecosystem of support. LF Edge’s projects (Akraino Edge Stack, Baetyl, EdgeX Foundry, Fledge, Home Edge, Open Horizon, Project EVE, and Secure Device Onboard) support emerging edge applications across areas such as non-traditional video and connected things that require lower latency, and faster processing and mobility.

“State of the Edge is shaping the future of all facets of edge computing and the ecosystem that surrounds it,” said Arpit Joshipura, General Manager of Networking, IoT and Edge. “The insights in the report reflect the entire LF Edge community and our mission to unify edge computing and support a more robust solution at the IoT, Enterprise, Cloud and Telco edge. We look forward to sharing the ongoing work of State of the Edge that amplifies innovations across the entire landscape.”

Other report highlights and methodology

For the report, researchers modeled the growth of edge infrastructure from the bottom up, starting with the sector-by-sector use cases likely to drive demand. The forecast considers 43 use cases spanning 11 verticals in calculating the growth, including those represented by smart grids, telecom, manufacturing, retail, healthcare, automotive and mobile consumer services. The vendor-neutral report was edited by Charlie Ashton, Senior Director of Business Development at Napatech, with contributions from Phil Marshall, Chief Research Officer at Tolaga Research; Phil Shih, Founder and Managing Director of Structure Research; technology journalists Mary Branscombe and Simon Bisson; and Fay Arjomandi, Founder and CEO of mimik. Other highlights from the State of the Edge 2021 Report include:

- Off-the-shelf services and applications are emerging that accelerate and de-risk the rapid deployment of edge in these segments. The variety of emerging use cases is in turn driving a diversity in edge-focused processor platforms, which now include Arm-based solutions, SmartNICs with FPGA-based workload acceleration and GPUs.

- Edge facilities will also create new types of interconnection. Similar to how data centers became meeting points for networks, the micro data centers at wireless towers and cable headends that will power edge computing often sit at the crossroads of terrestrial connectivity paths. These locations will become centers of gravity for local interconnection and edge exchange, creating new and newly efficient paths for data.

- 5G, next-generation SD-WAN and SASE have been standardized. They are well suited to address the multitude of edge computing use cases that are being adopted and are contemplated for the future. As digital services proliferate and drive demand for edge computing, the diversity of network performance requirements will continue to increase.

“The State of the Edge report is an important industry and community resource. This year’s report features the analysis of diverse experts, mirroring the collaborative approach that we see thriving in the edge computing ecosystem,” said Jacob Smith, co-chair of State of the Edge and Vice President of Bare Metal at Equinix. “The 2020 findings underscore the tremendous acceleration of digital transformation efforts in response to the pandemic, and the critical interplay of hardware, software and networks for servicing use cases at the edge.”

Download the report here.

State of the Edge Co-Chairs Matt Trifiro and Jacob Smith, VP Bare Metal Strategy & Marketing of Equinix, will present highlights from the report in a keynote presentation at Open Networking & Edge Executive Forum, a virtual conference on March 10-12. Register here ($50 US) to watch the live presentation on March 12 at 7 am PT or access the video on-demand.

Trifiro and Smith will also host an LF Edge webinar to showcase the key findings on March 18 at 8 am PT. Register here.

About The Linux Foundation

Founded in 2000, the Linux Foundation is supported by more than 1,000 members and is the world’s leading home for collaboration on open source software, open standards, open data, and open hardware. Linux Foundation’s projects are critical to the world’s infrastructure including Linux, Kubernetes, Node.js, and more. The Linux Foundation’s methodology focuses on leveraging best practices and addressing the needs of contributors, users and solution providers to create sustainable models for open collaboration. For more information, please visit us at linuxfoundation.org.

# # #

The Linux Foundation has registered trademarks and uses trademarks. For a list of trademarks of The Linux Foundation, please see our trademark usage page: https://www.linuxfoundation.org/trademark-usage. Linux is a registered trademark of Linus Torvalds.

Media Contact:

Maemalynn Meanor

The post LF Edge’s State of the Edge 2021 Report Predicts Global Edge Computing Infrastructure Market to be Worth Up to $800 Billion by 2028 appeared first on Linux Foundation.

Building a container by hand using namespaces: The mount namespace

Check out some theory and practice around the mount namespace

Read More at Enable Sysadmin

Industry-Wide Initiative to Support Open Source Security Gains New Commitments

Open Source Security Foundation adds new members, Citi, Comcast, DevSamurai, HPE, Mirantis and Snyk

SAN FRANCISCO, Calif., March 9, 2021 – OpenSSF, a cross-industry collaboration to secure the open source ecosystem, today announced new membership commitments to advance open source security education and best practices. New members include Citi, Comcast, DevSamurai, Hewlett Packard Enterprise (HPE), Mirantis, and Snyk.

Open source software (OSS) has become pervasive in data centers, consumer devices and services, representing its value among technologists and businesses alike. Because of its development process, open source has a chain of contributors and dependencies before it ultimately reaches its end users. It is important that those responsible for their user or organization’s security are able to understand and verify the security of this dependency supply chain.

“Open source software is embedded in the world’s technology infrastructure and warrants our dedication to ensuring its security,” said Kay Williams, Governing Board Chair, OpenSSF, and Supply Chain Security Lead, Azure Office of the CTO, Microsoft. “We welcome the latest OpenSSF new members and applaud their commitment to advancing supply chain security for open source software and its technology and business ecosystem.”

The OpenSSF is a cross-industry collaboration that brings together technology leaders to improve the security of OSS. Its vision is to create a future where participants in the open source ecosystem use and share high quality software, with security handled proactively, by default, and as a matter of course. Its working groups include Securing Critical Projects, Security Tooling, Identifying Security Threats, Vulnerability Disclosures, Digital Identity Attestation, and Best Practices.

OpenSSF has more than 35 members and associate members contributing to working groups, technical initiatives and the governing board, and helping to advance open source security best practices. For more information on founding and new members, please visit: https://openssf.org/about/members/

Membership is not required to participate in the OpenSSF. For more information and to learn how to get involved, including information about participating in working groups and advisory forums, please visit https://openssf.org/getinvolved.

New Member Comments

Citi

“Working with the open source community is a key component in our security strategy, and we look forward to supporting the OpenSSF in its commitment to collaboration,” said Jonathan Meadows, Citi’s Managing Director for Cloud Security Engineering.

Comcast

“Open source software is a valuable resource in our ongoing work to create and continuously evolve great products and experiences for our customers, and we know how important it is to build security at every stage of development. We’re honored to be part of this effort and look forward to collaborating,” said Nithya Ruff, head of Comcast Open Source Program Office.

DevSamurai

“We are living in an interesting era, in which new IT technologies are changing all aspects of our lives every day. Benefits come with risks, that can’t be truer with open source software. Being a part of OpenSSF we expect to learn from and contribute to the community, together we strengthen security and eliminate risks throughout the software supply chain,” said Tam Nguyen, head of DevSecOps at DevSamurai.

Mirantis

“As open source practitioners from our very founding, Mirantis has demonstrated its commitment to the values of transparency and collaboration in the open source community,” said Chase Pettet, lead product security architect, Mirantis. “As members of the OpenSSF, we recognize the need for cross-industry security stakeholders to strengthen each other. Our customers will continue to rely on open source for their safety and assurance, and we will continue to support the development of secure open solutions.”

Snyk

“As the number of digital transformation projects has exploded the world over, the mission of the Open Source Security Foundation has never been more critical than it is today,” said Geva Solomonovich, CTO, Global Alliances, Snyk. “Snyk is thrilled to become an official Foundation member, and we look forward to working with the entire community to together push the industry to make all digital environments safer.”

About the Open Source Security Foundation (OpenSSF)

Hosted by the Linux Foundation, the OpenSSF (launched in August 2020) is a cross-industry organization that brings together the industry’s most important open source security initiatives and the individuals and companies that support them. It combines the Linux Foundation’s Core Infrastructure Initiative (CII), founded in response to the 2014 Heartbleed bug, and the Open Source Security Coalition, founded by the GitHub Security Lab to build a community to support open source security for decades to come. The OpenSSF is committed to collaboration and working both upstream and with existing communities to advance open source security for all.

Media Contact

Jennifer Cloer

for the Linux Foundation

503-867-2304

jennifer@storychangesculture.com

The post Industry-Wide Initiative to Support Open Source Security Gains New Commitments appeared first on Linux Foundation.

Linux Foundation Announces Free sigstore Signing Service to Confirm Origin and Authenticity of Software

Red Hat, Google and Purdue University lead efforts to ensure software maintainers, distributors and consumers have full confidence in their code, artifacts and tooling

SAN FRANCISCO, Calif., March 9, 2021 – The Linux Foundation, the nonprofit organization enabling mass innovation through open source, today announced the sigstore project. sigstore improves the security of the software supply chain by enabling the easy adoption of cryptographic software signing backed by transparency log technologies.

sigstore will empower software developers to securely sign software artifacts such as release files, container images and binaries. Signing materials are then stored in a tamper-proof public log. The service will be free to use for all developers and software providers, with the sigstore code and operation tooling developed by the sigstore community. Founding members include Red Hat, Google and Purdue University.
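The idea of recording signing materials in a log that resists tampering can be illustrated with a small hash chain. The sketch below is not sigstore's implementation or API; the class `AppendOnlyLog`, its record fields, and the helper `artifact_digest` are hypothetical names chosen for this example. Each entry commits to the hash of the previous entry, so altering any earlier record changes every hash after it and is detected on verification.

```python
import hashlib
import json


def artifact_digest(data: bytes) -> str:
    """SHA-256 digest of an artifact's bytes, as a hex string."""
    return hashlib.sha256(data).hexdigest()


class AppendOnlyLog:
    """Toy tamper-evident log: each entry stores the previous entry's hash."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _hash(record):
        # Hash only the content fields, in a stable key order.
        payload = json.dumps(
            {key: record[key] for key in ("artifact", "digest", "prev")},
            sort_keys=True,
        ).encode()
        return hashlib.sha256(payload).hexdigest()

    def append(self, artifact_name, digest):
        prev = self.entries[-1]["entry_hash"] if self.entries else self.GENESIS
        record = {"artifact": artifact_name, "digest": digest, "prev": prev}
        record["entry_hash"] = self._hash(record)
        self.entries.append(record)
        return record

    def verify(self):
        """Recompute the chain; return False if any entry was altered."""
        prev = self.GENESIS
        for record in self.entries:
            if record["prev"] != prev or record["entry_hash"] != self._hash(record):
                return False
            prev = record["entry_hash"]
        return True
```

A production transparency log would also need signatures over the entries and a way for independent parties to audit the log, neither of which this sketch attempts.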

“sigstore enables all open source communities to sign their software and combines provenance, integrity and discoverability to create a transparent and auditable software supply chain,” said Luke Hinds, Security Engineering Lead, Red Hat office of the CTO. “By hosting this collaboration at the Linux Foundation, we can accelerate our work in sigstore and support the ongoing adoption and impact of open source software and development.”

Understanding and confirming the origin and authenticity of software relies on an often disparate set of approaches and data formats. The solutions that do exist often rely on digests that are stored on insecure systems that are susceptible to tampering and can lead to various attacks such as swapping out of digests or users falling prey to targeted attacks.
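The weakness of a digest published next to the artifact can be shown in a few lines. This is a generic sketch using Python's standard `hashlib` and `hmac` modules; the function name `matches_published_digest` and the sample byte strings are made up for the example.

```python
import hashlib
import hmac


def matches_published_digest(artifact: bytes, published_hex: str) -> bool:
    """Check an artifact against a published SHA-256 hex digest."""
    actual = hashlib.sha256(artifact).hexdigest()
    # compare_digest performs a constant-time comparison of the two strings.
    return hmac.compare_digest(actual, published_hex.lower())


genuine = b"release contents"
tampered = b"release contents with a backdoor"

# Digest as the maintainer published it.
published = hashlib.sha256(genuine).hexdigest()

# An attacker who controls the same server can replace the digest as well.
swapped = hashlib.sha256(tampered).hexdigest()
```

Checking `tampered` against `published` fails, as intended, but checking `tampered` against `swapped` succeeds. A bare digest only proves that the download matches whatever the server currently says, which is why the storage location of the digest matters.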

“Securing a software deployment ought to start with making sure we’re running the software we think we are. Sigstore represents a great opportunity to bring more confidence and transparency to the open source software supply chain,” said Josh Aas, executive director, ISRG | Let’s Encrypt.

Very few open source projects cryptographically sign software release artifacts. This is largely due to the challenges software maintainers face with key management, key compromise and revocation, and the distribution of public keys and artifact digests. In turn, users are left to seek out which keys to trust and to learn the steps needed to validate signing. Further problems exist in how digests and public keys are distributed: they are often stored on websites susceptible to hacks or in a README file in a public git repository. sigstore seeks to solve these issues by using short-lived ephemeral keys with a trust root leveraged from open and auditable public transparency logs.

“I am very excited about the prospects of a system like sigstore. The software ecosystem is in dire need of something like it to report the state of the supply chain. I envision that, with sigstore answering all the questions about software sources and ownership, we can start asking the questions regarding software destinations, consumers, compliance (legal and otherwise), to identify criminal networks and secure critical software infrastructure. This will set a new tone in the software supply chain security conversation,” said Santiago Torres-Arias, Assistant Professor of Electrical and Computer Engineering, Purdue University / in-toto project founder.

“sigstore is poised to advance the state of the art in open source development,” said Mike Dolan, senior vice president and general manager of Projects at the Linux Foundation. “We are happy to host and contribute to work that enables software maintainers and consumers alike to more easily manage their open source software and security.”

“sigstore aims to make all releases of open source software verifiable, and easy for users to actually verify them. I’m hoping we can make this easy as exiting vim,” said Dan Lorenc, Google Open Source Security Team. “Watching this take shape in the open has been fun. It’s great to see sigstore in a stable home.”

For more information and to contribute, please visit: https://sigstore.dev

Media Contact

Jennifer Cloer

for Linux Foundation

503-867-2304

jennifer@storychangesculture.com

The post Linux Foundation Announces Free sigstore Signing Service to Confirm Origin and Authenticity of Software appeared first on Linux Foundation.