The Linux Foundation and several major RISC-V development firms have launched an LF-hosted CHIPS Alliance with a mission “to host and curate high-quality open source code relevant to the design of silicon devices.” The founding members — Esperanto Technologies, Google, SiFive, and Western Digital — are all involved in RISC-V projects.

On the same day that the CHIPS Alliance was announced, Intel and other companies, including Google, launched a Compute Express Link (CXL) consortium that will open source and develop Intel’s CXL interconnect. CXL shares many traits and goals with the OmniXtend protocol that Western Digital is contributing to CHIPS (see farther below).

The CHIPS Alliance aims to “foster a collaborative environment that will enable accelerated creation and deployment of more efficient and flexible chip designs for use in mobile, computing, consumer electronics, and Internet of Things (IoT) applications.” This “independent entity” will enable “companies and individuals to collaborate and contribute resources to make open source CPU chip and system-on-a-chip (SoC) design more accessible to the market,” says the project.

This announcement follows a collaboration between the RISC-V Foundation and the Linux Foundation, formed last November to accelerate development for the open source RISC-V ISA, starting with RISC-V starter guides for Linux and Zephyr. The CHIPS Alliance is more focused on developing open source VLSI chip design building blocks for semiconductor vendors.

The CHIPS Alliance will follow Linux Foundation-style governance practices and include the usual Board of Directors, Technical Steering Committee, and community contributors “who will work collectively to manage the project.” Initial plans call for establishing a curation process aimed at providing the chip community with access to high-quality, enterprise-grade hardware.

A testimonial quote by Zvonimir Bandic, senior director of next-generation platforms architecture at Western Digital, offers a few clues about the project’s plans: “The CHIPS Alliance will provide access to an open source silicon solution that can democratize key memory and storage interfaces and enable revolutionary new data-centric architectures. It paves the way for a new generation of compute devices and intelligent accelerators that are close to the memory and can transform how data is moved, shared, and consumed across a wide range of applications.”

Both the AI-focused Esperanto and SiFive, which has led the charge on Linux-driven RISC-V devices with its Freedom U540 SoC and upcoming U74 and U74-MC designs, are exclusively focused on RISC-V. Western Digital, which is contributing its RISC-V based SweRV core to the project, has pledged to produce 1 billion of SiFive’s RISC-V cores. All but Esperanto have committed to contribute specific technology to the project (see farther below).

Notably missing from the CHIPS founders list is Microchip, whose Microsemi unit announced a Linux-friendly PolarFire SoC, based in part on SiFive’s U54-MC cores. The PolarFire SoC is billed as the world’s first RISC-V FPGA SoC.

Although not included as a founding member, the RISC-V Foundation appears to be behind the CHIPS Alliance, as is evident from this quote from Martin Fink, interim CEO of the RISC-V Foundation and VP and CTO of Western Digital: “With the creation of the CHIPS Alliance, we are expecting to fast-track silicon innovation through the open source community.”

With the exploding popularity of RISC-V, the RISC-V Foundation may have decided it has too much on its plate right now to tackle the projects the CHIPS Alliance is planning. For example, the Foundation is attempting to crack down on the growing fragmentation of RISC-V designs. A recent article in Semiconductor Engineering reports on the issue and on the Foundation’s RISC-V Compliance Task Group.

Although the official CHIPS Alliance mission statements do not mention RISC-V, the initiative appears to be an extension of the RISC-V ecosystem. So far, there have been few open-ISA alternatives to RISC-V. In December, however, Wave Computing announced plans to follow in RISC-V’s path by offering its MIPS ISA as open source code without royalties or proprietary licensing. As noted in a Bit-Tech.net report on the CHIPS Alliance, there are also various open source chip projects that cover somewhat similar ground, including the FOSSi (Free and Open Source Silicon) Foundation, LibreCores, and OpenCores.

Contributions from Google, SiFive, and Western Digital

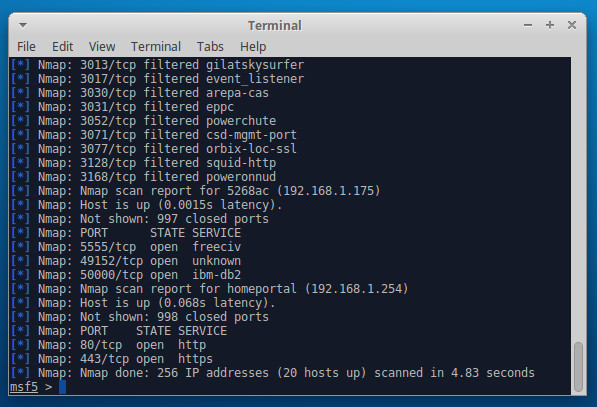

Google plans to contribute to the CHIPS Alliance a Universal Verification Methodology (UVM)-based instruction stream generator environment for RISC-V cores. The configurable UVM environment will provide “highly stressful instruction sequences that can verify architectural and micro-architectural corner-cases of designs,” says the CHIPS Alliance.
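To give a feel for what a random instruction stream generator does, here is a minimal Python sketch. It is not Google’s contribution (UVM is a SystemVerilog methodology, and the real environment is far more elaborate); it only illustrates the general technique under our own assumptions: a small hypothetical subset of RV32I arithmetic instructions, immediates deliberately biased toward boundary values, and a tunable `hazard_bias` parameter (our invention) that makes instructions read the previous instruction’s destination register to provoke read-after-write hazards in a pipeline.

```python
import random

# Hypothetical subset of RV32I register-register and register-immediate ops
# (an illustrative assumption, not the instruction mix of any real generator).
R_TYPE_OPS = ["add", "sub", "and", "or", "xor", "sll", "srl", "sra", "slt", "sltu"]
I_TYPE_OPS = ["addi", "andi", "ori", "xori", "slti", "sltiu"]
REGS = [f"x{i}" for i in range(1, 32)]  # x0 excluded as a destination register


def random_imm12(rng):
    """Pick a signed 12-bit immediate, favoring corner values that often expose bugs."""
    corners = [-2048, -1, 0, 1, 2047]
    if rng.random() < 0.3:
        return rng.choice(corners)
    return rng.randint(-2048, 2047)


def gen_stream(n, seed=0, hazard_bias=0.5):
    """Return n assembly lines; the same seed always reproduces the same stream."""
    rng = random.Random(seed)
    stream = []
    last_rd = None
    for _ in range(n):
        rd = rng.choice(REGS)
        # With probability hazard_bias, read the previous destination register
        # to create a read-after-write dependency between adjacent instructions.
        if last_rd is not None and rng.random() < hazard_bias:
            rs1 = last_rd
        else:
            rs1 = rng.choice(REGS + ["x0"])
        if rng.random() < 0.5:
            op = rng.choice(R_TYPE_OPS)
            rs2 = rng.choice(REGS + ["x0"])
            stream.append(f"{op} {rd}, {rs1}, {rs2}")
        else:
            op = rng.choice(I_TYPE_OPS)
            stream.append(f"{op} {rd}, {rs1}, {random_imm12(rng)}")
        last_rd = rd
    return stream


if __name__ == "__main__":
    for line in gen_stream(8, seed=42):
        print(line)
```

Seeding matters in practice: when a generated sequence makes a design under test diverge from a reference model, the same seed regenerates the failing stream for debugging.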

SiFive will contribute and continue to improve its RocketChip (or Rocket-Chip) SoC generator, including the initial version of the TileLink coherent interconnect fabric. SiFive will also continue to contribute to the SCALA-based Chisel open-source hardware construction language and the FIRRTL “intermediate representation specification and transformation toolkit” for writing circuit-level transformations. SiFive will also continue to contribute to and maintain the Diplomacy SoC parameter negotiation framework.
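The idea behind parameter negotiation can be illustrated with a toy model. The Python sketch below is not Diplomacy, which is a Scala library used with Chisel and resolves parameters across a whole graph of nodes; it assumes a simple linear chain (for example CPU, crossbar, memory) and two hypothetical parameters, a supported transfer-size range and a maximum bus width, and resolves them to the values every node on the path can accept, failing at elaboration time if the nodes are incompatible.

```python
from dataclasses import dataclass
from functools import reduce
from typing import Optional


@dataclass(frozen=True)
class Params:
    # Hypothetical negotiable parameters: an inclusive range of transfer
    # sizes in bytes, plus the widest data bus (in bytes) a node supports.
    min_size: int
    max_size: int
    max_width: int


def agree(a: Params, b: Params) -> Optional[Params]:
    """Return parameters acceptable to both nodes, or None if they cannot connect."""
    lo = max(a.min_size, b.min_size)
    hi = min(a.max_size, b.max_size)
    if lo > hi:
        return None  # no overlapping transfer size
    return Params(lo, hi, min(a.max_width, b.max_width))


def negotiate_path(path):
    """Resolve parameters along a chain of (name, Params) nodes."""
    def step(acc, node):
        name, params = node
        agreed = agree(acc, params)
        if agreed is None:
            raise ValueError(f"parameter mismatch at {name}")
        return agreed

    _, first_params = path[0]
    return reduce(step, path[1:], first_params)
```

For example, a CPU supporting transfers of 1 to 64 bytes on a 16-byte bus, a crossbar limited to 1 to 32 bytes on an 8-byte bus, and a memory accepting 4 to 64 bytes on an 8-byte bus resolve to `Params(min_size=4, max_size=32, max_width=8)`. Doing this automatically is what lets a generator such as RocketChip be reconfigured without hand-editing every interface.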

As noted, Western Digital will contribute its 9-stage, dual-issue, 32-bit SweRV Core, which recently appeared on GitHub. It will also contribute a SweRV testbench and a SweRV instruction set simulator. Additional contributions will include a specification and early implementations of the OmniXtend cache coherence protocol.

Intel launches CXL interconnect consortium

Western Digital’s OmniXtend is similar to the high-speed Compute Express Link (CXL) CPU interconnect that Intel is open sourcing. On Monday, Intel, Alibaba, Cisco, Dell EMC, Facebook, Google, Hewlett Packard Enterprise, Huawei, and Microsoft announced a CXL consortium to help develop the PCIe Gen 5-based CXL into an industry standard. Intel intends to incorporate CXL into its processors starting in 2021 to link the CPU with memory and various accelerator chips.

The CXL group competes with the Cache Coherent Interconnect for Accelerators (CCIX) consortium, founded in 2016 by AMD, Arm, IBM, and Xilinx. CCIX similarly adds cache coherency atop a PCIe foundation to improve interconnect performance. By contrast, OmniXtend is based on Ethernet PHY technology. While the CXL and CCIX groups are focused only on interconnects, the CHIPS Alliance has a far more ambitious agenda, according to an interesting EETimes story on the CHIPS Alliance, CXL, and CCIX.