This week AT&T will release detailed specs to the Open Compute Project for building white box cell site gateway routers for 5G. Over the next few years, more than 60,000 white box routers built by a variety of manufacturers will be deployed as 5G routers in AT&T’s network.

In its Oct. 1 announcement, AT&T said it will load the routers with its Debian Linux-based Vyatta Network Operating System (NOS) stack. Vyatta NOS forms the basis for AT&T's open source dNOS platform, which in turn is the basis for a new Linux Foundation open source NOS project called DANOS, which similarly stands for Disaggregated Network Operating System (see below).

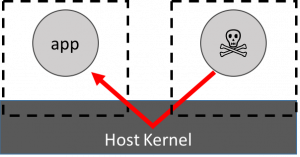

AT&T's white box blueprint "decouples hardware from software," so any organization can build its own compliant systems running other software. This will give the cellular gateway industry flexibility as well as the security of building on an interoperable, long-lifecycle platform. The white box spec appears to be OS-agnostic. However, routers typically run Linux-based NOS stacks, and that does not appear to be changing with 5G.

The release of specs to the Open Compute Project — an organization that helps standardize open white box designs — departs from the traditional practice of contracting a few vendors to build proprietary solutions for cellular routers. AT&T’s next-gen router blueprint will enable any hardware manufacturer willing to build to spec to compete for the orders. By attracting more manufacturers, AT&T aims to reduce costs, spur innovation, and more quickly meet the “surging data demands” for 5G.

“We now carry more than 222 petabytes of data on an average business day,” stated Chris Rice, SVP, Network Cloud and Infrastructure at AT&T. “The old hardware model simply can’t keep up, and we need to get faster and more efficient.”

The reference design blueprint is said to be flexible enough to enable manufacturers to offer custom platforms for different use cases. In addition to offering faster mobile services, AT&T’s 5G services will enable new applications in “autonomous cars, drones, augmented reality and virtual reality systems, smart factories, and more,” says AT&T.

5G technology will not only provide a major boost in bandwidth for mobile customers, but should also enable wireless services to better compete with cable providers' wired broadband Internet services for the home. This week, AT&T rival Verizon opened pre-orders for its consumer 5G home internet service, targeted for launch in 2019.

At publication time, neither AT&T nor the Open Compute Project had yet published the white box specs, but AT&T offered a few details:

- Supports a wide range of client-side speeds, including "100M/1G needed for legacy Baseband Unit systems and next generation 5G Baseband Unit systems operating at 10G/25G and backhaul speeds up to 100G"
- Supports industrial temperature ranges (-40 to 65°C)
- Integrates the Broadcom Qumran-AX switching chip with deep buffers to support advanced features and QoS
- Integrates a baseboard management controller (BMC) for platform health status monitoring and recovery
- Includes a "powerful CPU for network operating software"
- Provides timing circuitry that supports a variety of I/O

Vyatta NOS to dNOS to DANOS

Vyatta launched the Debian-based, OpenVPN-compliant Vyatta Community Edition over a decade ago. The distribution, which later added features like Quagga support and a standardized management console, was available in both a subscription-based version and the open source Vyatta Core version.

When Brocade acquired Vyatta in 2012, it discontinued the open source version. However, independent developers forked Vyatta Core to create the open source VyOS platform. Last year, Brocade sold its proprietary Vyatta assets to AT&T, which developed them into Vyatta NOS.

AT&T will initially load the proprietary, “production-hardened” Vyatta NOS on the white box routers it purchases. However, the goal appears to be to eventually replace this with AT&T’s dNOS stack under the emerging DANOS framework.

Robert Bays, assistant VP of Vyatta Development at AT&T Labs, stated: “Consistent with our previous announcements to create the DANOS open source project, hosted by the Linux Foundation, we are now sorting out which components of the open cell site gateway router NOS we will be contributing to open source.”

dNOS/DANOS aims to be the world’s first open source, carrier-grade operating system for wide area networks. The software is designed to interoperate with the widely endorsed ONAP (Open Network Automation Platform), a Linux Foundation project for standardizing open source cloud networking software. In AT&T’s dNOS announcement in January, which preceded the DANOS project launch in March, the company stated: “Just as the ONAP platform has become the open network operating system for the network cloud, the dNOS project aims to be the open operating system for white box.”

The DANOS project is also aligned with Linux Foundation projects like FRRouting, OpenSwitch, and the AT&T-derived Akraino Edge Stack. The Akraino project aims to standardize open source edge computing software for base stations and will also support telecom, enterprise networking, and IoT edge platforms.

Different Akraino blueprints will target technologies and standards such as DANOS, Ceph, Kata Containers, Kubernetes, StarlingX, OpenStack, Acumos AI, and EdgeX Foundry. In a few years, we will likely see DANOS-based white box gateway routers running Akraino software to enable 5G applications ranging from autonomous car communications to augmented reality.
