Ethereum is a blockchain protocol that includes a programming language which allows applications, called contracts, to run within the blockchain. First described in a white paper by its creator, Vitalik Buterin, in late 2013, Ethereum was created as a platform for building decentralized applications that can do more than make simple coin transfers.

How does it work?

Ethereum is a blockchain. In general, a blockchain is a chain of data structures (blocks) that contain information such as account IDs, balances, and transaction histories. Blockchains are distributed across a network of computers, which are often referred to as nodes.

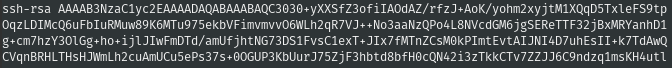

Cryptography is a major part of blockchain technology. Public-key algorithms like RSA and ECDSA generate pairs of public and private keys that are mathematically coupled (Ethereum itself uses ECDSA). Public keys, or the addresses derived from them, together with private keys, allow people to make transactions across the network without revealing personal information such as name, address, or date of birth. Keys and addresses are usually written as long strings of hexadecimal characters; an Ethereum address, for example, is derived from a hash of the public key.

Example of an RSA-generated public key

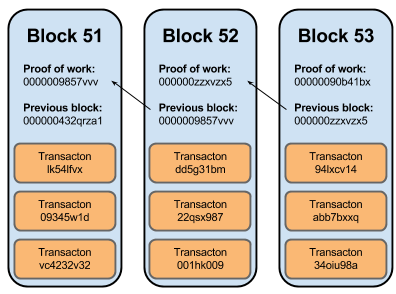
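To make "mathematically coupled" concrete, here is a toy sketch using textbook RSA with tiny primes. The numbers and the helper names `sign` and `verify` are illustrative assumptions: real keys are thousands of bits long, and Ethereum itself signs with ECDSA rather than RSA. The point is only that what the private key signs, the matching public key can check.

```python
# Textbook RSA with tiny primes: an illustration only, never secure.
p, q = 61, 53               # two secret primes
n = p * q                   # modulus, shared by both keys (3233)
phi = (p - 1) * (q - 1)     # Euler's totient of n (3120)
e = 17                      # public exponent
d = pow(e, -1, phi)         # private exponent: modular inverse of e (Python 3.8+)

public_key = (e, n)
private_key = (d, n)

def sign(message: int, key: tuple[int, int]) -> int:
    """Sign with the private key: s = m^d mod n."""
    exp, mod = key
    return pow(message, exp, mod)

def verify(message: int, signature: int, key: tuple[int, int]) -> bool:
    """Check with the public key: s^e mod n must equal m."""
    exp, mod = key
    return pow(signature, exp, mod) == message

msg = 65  # messages must be integers smaller than n
sig = sign(msg, private_key)
print("modulus in hex:", hex(n))                      # 0xca1
print("valid:", verify(msg, sig, public_key))         # True
print("tampered:", verify(msg + 1, sig, public_key))  # False
```

Changing the message by even one unit makes verification fail, which is what lets the network trust a transaction without knowing who sent it.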

Blockchains have a public ledger that keeps track of all transactions that have occurred since the first block, called the “genesis” block. At minimum, a block includes its own hash, the hash of the previous block, and some data. Nodes across the network work to verify transactions and add them to the public ledger. For a transaction to be considered legitimate, there must be consensus.

Consensus means that the transaction is considered valid by the majority of the nodes in the network. There are four main algorithms used to achieve consensus in a distributed blockchain network: Byzantine fault tolerance, proof-of-work, proof-of-stake, and delegated proof-of-stake. Chris explains them well in his post.
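The simplified "majority of nodes" rule above can be sketched as a vote count. This is an assumption-laden toy: the node names are made up, and real consensus algorithms use other rules (Byzantine fault tolerant protocols, for instance, require agreement from more than two-thirds of nodes).

```python
from collections import Counter

def reach_consensus(votes: dict[str, bool]) -> bool:
    """Accept a transaction only if a strict majority of nodes say it is valid."""
    tally = Counter(votes.values())
    return tally[True] > len(votes) / 2

# Hypothetical votes from five nodes on a single transaction.
votes = {"node-a": True, "node-b": True, "node-c": False, "node-d": True, "node-e": False}
print(reach_consensus(votes))  # True: 3 of 5 nodes agree
```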

Attempting to make even the slightest alteration to the data in a block will change the hash of the block and will therefore be noticed by the entire network. This makes blockchains immutable and append-only. A transaction can only be added at the end of the chain, and once a transaction is added to a block there can be no changes made to it.

Source: andrefortuna.org
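Why tampering is noticed can be shown with a minimal hash chain. This sketch assumes SHA-256 (Ethereum actually uses Keccak-256) and a made-up block layout in which each block stores its predecessor's hash; a block's own hash is recomputed from its contents rather than stored.

```python
import hashlib
import json

def block_hash(block: dict) -> str:
    """Hash a block's contents deterministically (SHA-256 as a stand-in)."""
    payload = json.dumps(block, sort_keys=True).encode()
    return hashlib.sha256(payload).hexdigest()

def make_chain(transactions: list[str]) -> list[dict]:
    """Build a chain in which every block records the previous block's hash."""
    chain = [{"index": 0, "data": "genesis", "prev_hash": "0" * 64}]
    for i, tx in enumerate(transactions, start=1):
        chain.append({"index": i, "data": tx, "prev_hash": block_hash(chain[-1])})
    return chain

def is_valid(chain: list[dict]) -> bool:
    """Each stored prev_hash must match the recomputed hash of the block before it."""
    return all(
        chain[i]["prev_hash"] == block_hash(chain[i - 1]) for i in range(1, len(chain))
    )

chain = make_chain(["Alice pays Bob 5", "Bob pays Carol 2"])
print(is_valid(chain))  # True
chain[1]["data"] = "Alice pays Bob 500"  # alter an earlier block
print(is_valid(chain))  # False: block 2's prev_hash no longer matches
```

Because every later block depends on the hash of the one before it, rewriting history would mean recomputing every following block, and the rest of the network would still hold the original hashes.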

Users of Ethereum control an account that has a cryptographic private key with a corresponding Ethereum address. If Alice, for example, wants to send Bob 1,000 ETH (ETH, or Ether, is Ethereum’s currency), she needs Bob’s Ethereum address so she knows where to send it, and she signs the transaction with her own private key. Bob doesn’t have to do anything to receive the 1,000 ETH, but he will need the private key that corresponds to his address in order to spend it.

Ethereum has two types of accounts: accounts that users control (also called externally owned accounts) and contracts (or “smart contracts”). User-controlled accounts, like Alice’s and Bob’s, are used primarily for ETH transfers. Just about every blockchain system has this type of account. What makes Ethereum special is the second type of account: a contract.

Contract accounts are controlled by a piece of code (an application) that is run inside the blockchain itself.

“What do you mean, inside the blockchain?”

EVM

Ethereum has a virtual machine, the Ethereum Virtual Machine (EVM), where contracts are executed. The EVM includes a stack (~processor), temporary memory (~RAM), storage space for permanent memory (~disk/database), environment variables (~system information, e.g., the block timestamp), logs, and sub-calls (a contract can call another contract).
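The "stack" part of the EVM can be illustrated with a tiny interpreter. The opcode values for STOP, ADD, MUL, and PUSH1 match the real EVM; everything else is a simplifying assumption: there is no gas, memory, storage, or error handling beyond unknown opcodes.

```python
# Real EVM opcode values for a tiny subset of instructions.
STOP, ADD, MUL, PUSH1 = 0x00, 0x01, 0x02, 0x60
WORD = 2**256  # EVM stack items are 256-bit words, so arithmetic wraps modulo 2**256

def run(bytecode: bytes) -> list[int]:
    """Execute a small subset of EVM bytecode and return the final stack."""
    stack: list[int] = []
    pc = 0  # program counter
    while pc < len(bytecode):
        op = bytecode[pc]
        if op == STOP:
            break
        elif op == PUSH1:
            stack.append(bytecode[pc + 1])  # push the next byte as a value
            pc += 1                         # skip over that data byte
        elif op == ADD:
            a, b = stack.pop(), stack.pop()
            stack.append((a + b) % WORD)
        elif op == MUL:
            a, b = stack.pop(), stack.pop()
            stack.append((a * b) % WORD)
        else:
            raise ValueError(f"unsupported opcode {op:#04x}")
        pc += 1
    return stack

# (2 + 3) * 4 as bytecode: PUSH1 2, PUSH1 3, ADD, PUSH1 4, MUL, STOP
code = bytes([PUSH1, 2, PUSH1, 3, ADD, PUSH1, 4, MUL, STOP])
print(run(code))  # [20]
```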

An example contract might look like this:

```
if (something happens):
    send 1,000 ETH to Bob (addr: 22982be234)
else if (something else happens):
    send 1,000 ETH to Alice (addr: bbe4203fe)
else:
    don't send any ETH
```

If a user sends 1,000 ETH to this account (the contract), then the code in this account is the only thing that has power to transfer that ETH. It’s kind of like an escrow. The sender no longer has control over the 1,000 ETH. The digital assets are now under the control of a computer program and will be moved depending on the conditional logic of the contract.
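The escrow behavior can be simulated in plain Python. This is only a sketch: `EscrowContract`, `deposit`, and `settle` are hypothetical names, the two conditions are passed in as booleans, and the shortened addresses are the placeholders from the example above. A real contract would be written in a language such as Solidity and compiled to EVM bytecode.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

@dataclass
class EscrowContract:
    """Toy escrow: once funded, only this object's logic moves the ETH."""
    bob_addr: str
    alice_addr: str
    balance: int = 0  # ETH currently held by the contract

    def deposit(self, amount: int) -> None:
        # After depositing, the sender no longer controls these funds.
        self.balance += amount

    def settle(self, something_happens: bool,
               something_else_happens: bool) -> Optional[Tuple[str, int]]:
        """Apply the if / else-if / else rule; return (recipient, amount) or None."""
        if something_happens:
            recipient = self.bob_addr
        elif something_else_happens:
            recipient = self.alice_addr
        else:
            return None  # don't send any ETH
        if self.balance < 1000:
            raise ValueError("contract does not hold 1,000 ETH")
        self.balance -= 1000
        return recipient, 1000

escrow = EscrowContract(bob_addr="22982be234", alice_addr="bbe4203fe")
escrow.deposit(1000)
print(escrow.settle(something_happens=False, something_else_happens=True))
# ('bbe4203fe', 1000)
print(escrow.balance)  # 0
```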

Is it free?

No. Contracts are executed within the blockchain, and therefore by the Ethereum network. They take up storage space and require computational power, so Ethereum uses a unit called gas to measure how much each operation costs the network. Users choose the gas price (in ETH per unit of gas) they are willing to pay, and the fees they pay go to the miners.
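The fee arithmetic is simply gas used multiplied by gas price. The sketch below assumes the simple fee model described here and ignores the base-fee and priority-fee split that EIP-1559 later introduced. The 21,000 gas cost of a plain ETH transfer is the protocol's figure; the 20 gwei price is an illustrative assumption.

```python
from decimal import Decimal

WEI_PER_GWEI = 10**9   # 1 gwei = 10^9 wei
WEI_PER_ETH = 10**18   # 1 ETH = 10^18 wei

def tx_fee_wei(gas_used: int, gas_price_gwei: int) -> int:
    """Fee = gas used x gas price, in wei (the smallest unit of ETH)."""
    return gas_used * gas_price_gwei * WEI_PER_GWEI

def wei_to_eth(wei: int) -> Decimal:
    """Convert wei to ETH exactly, without floating-point rounding."""
    return Decimal(wei) / Decimal(WEI_PER_ETH)

# A plain ETH transfer costs 21,000 gas; 20 gwei is an illustrative gas price.
fee = tx_fee_wei(gas_used=21_000, gas_price_gwei=20)
print(fee)              # 420000000000000
print(wei_to_eth(fee))  # 0.00042
```

Contract calls use more gas than a plain transfer because every EVM operation and every byte of storage adds to `gas_used`.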

Miners

Miners are people using computers to perform the computations required to validate transactions across the network and add new blocks to the chain.

Mining works like this: when a block of transactions is ready to be added to the chain, miners use computer processing power to hash the block repeatedly with different input values until they find a hash below a specific target. When a miner finds such a hash, she is rewarded with ETH and broadcasts the new block across the network. The other nodes verify the hash, and if there is consensus, the block is added to the chain.
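A toy proof-of-work loop shows why finding the hash is expensive while checking it is cheap. This is a sketch under stated assumptions: it uses SHA-256 and a string block format of our own, whereas Ethereum's proof-of-work used a different algorithm (Ethash); `mine` and `check_work` are hypothetical names.

```python
import hashlib

def mine(block_data: str, difficulty_bits: int) -> tuple[int, str]:
    """Try successive nonces until the hash, read as a number, falls below the target."""
    target = 2 ** (256 - difficulty_bits)  # more difficulty bits means a smaller target
    nonce = 0
    while True:
        digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
        if int(digest, 16) < target:
            return nonce, digest
        nonce += 1

def check_work(block_data: str, nonce: int, difficulty_bits: int) -> bool:
    """Other nodes verify the miner's result with a single hash."""
    digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
    return int(digest, 16) < 2 ** (256 - difficulty_bits)

data = "block 42: Alice pays Bob 5 ETH"
nonce, digest = mine(data, difficulty_bits=16)  # roughly 65,000 attempts on average
print(nonce, digest)
print(check_work(data, nonce, 16))  # True
```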

What’s inside a block?

Within an Ethereum block are two things: the state and the history. The state is a mapping of addresses to account objects. Each account object includes:

- ETH balance

- nonce **

- the contract’s code, stored as EVM bytecode (if the account is a contract)

- contract storage (database)

** A nonce is a counter of the transactions an account has sent. Each new transaction must carry the next nonce value, which prevents the same transaction from being repeated over and over and perhaps taking more ETH from the sender than intended.
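The account fields above and the nonce check can be sketched together. Assumptions: the `Account` class and `apply_transfer` function are hypothetical, the addresses are made-up shortened strings, and signatures and gas are left out.

```python
from dataclasses import dataclass, field

@dataclass
class Account:
    balance: int = 0    # ETH balance
    nonce: int = 0      # how many transactions this account has sent
    code: bytes = b""   # contract bytecode; empty for user-controlled accounts
    storage: dict = field(default_factory=dict)  # contract storage (key -> value)

# The state: a mapping from (made-up, shortened) addresses to account objects.
state = {"0xa11ce": Account(balance=10), "0xb0b": Account()}

def apply_transfer(state: dict, sender: str, to: str, value: int, tx_nonce: int) -> None:
    """Apply a transfer only if it carries the sender's next expected nonce."""
    acct = state[sender]
    if tx_nonce != acct.nonce:
        raise ValueError(f"bad nonce {tx_nonce}, expected {acct.nonce}")
    if acct.balance < value:
        raise ValueError("insufficient balance")
    acct.balance -= value
    state.setdefault(to, Account()).balance += value
    acct.nonce += 1  # this nonce can never be used again

apply_transfer(state, "0xa11ce", "0xb0b", value=3, tx_nonce=0)
try:
    apply_transfer(state, "0xa11ce", "0xb0b", value=3, tx_nonce=0)  # replayed transaction
except ValueError as err:
    print("rejected:", err)  # rejected: bad nonce 0, expected 1
print(state["0xa11ce"].balance, state["0xb0b"].balance)  # 7 3
```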

Blocks also store history: records of previous transactions and receipts.

The state and history are stored on each node (each member of the Ethereum network). Having every node hold the full history of Ethereum transactions and contract code is great for security and immutability, but it makes scaling hard: a blockchain cannot process more transactions than a single node can. As a result, Ethereum can handle only about 7–15 transactions per second. One solution that has long been on Ethereum’s roadmap is sharding: a technique that essentially breaks the chain up into smaller pieces while aiming to keep the same level of security.
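The general idea of sharding, splitting accounts so that each node stores only part of the state, can be illustrated with hash-based partitioning. This is a generic sketch, not Ethereum's actual sharding design; the shard count and the helper names are hypothetical.

```python
import hashlib
from collections import defaultdict

NUM_SHARDS = 4  # hypothetical shard count, chosen only for illustration

def shard_for(address: str) -> int:
    """Deterministically map an address to a shard by hashing it."""
    digest = hashlib.sha256(address.encode()).digest()
    return int.from_bytes(digest[:8], "big") % NUM_SHARDS

def partition(addresses: list[str]) -> dict[int, list[str]]:
    """Group accounts by shard; a node serving one shard stores only that group."""
    shards: dict[int, list[str]] = defaultdict(list)
    for addr in addresses:
        shards[shard_for(addr)].append(addr)
    return dict(shards)

# Ten made-up 20-byte addresses written in hex.
addresses = [f"0x{i:040x}" for i in range(10)]
for shard_id, members in sorted(partition(addresses).items()):
    print(f"shard {shard_id}: {len(members)} accounts")
```

Because the mapping is deterministic, every node agrees on which shard owns an account; the hard part in a real design is handling transactions that touch accounts on different shards.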

Transactions

Every transaction, except one that creates a new contract, specifies a TO: address. If the TO: is a user-controlled account and the transaction contains ETH, it is a transfer of ETH from account A to account B. If the TO: is a contract, the contract’s code gets executed. Executing a contract can trigger further transactions, including calls from one contract to another; these are usually called internal transactions (or message calls).
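How the TO: address decides what happens, and how one contract can trigger another, can be sketched with contracts modeled as Python functions. The `Network` class and the `splitter` and `vault` contracts are hypothetical; signatures, gas, and EVM bytecode are all left out.

```python
from typing import Callable, Dict, List

class Network:
    """Toy network: balances, contract code, and a log of every transfer."""

    def __init__(self) -> None:
        self.balances: Dict[str, int] = {}
        self.contracts: Dict[str, Callable[["Network", int], None]] = {}
        self.call_log: List[str] = []

    def send(self, sender: str, to: str, value: int) -> None:
        """Transfer ETH, then run the destination's code if it is a contract."""
        if self.balances.get(sender, 0) < value:
            raise ValueError("insufficient balance")
        self.balances[sender] = self.balances.get(sender, 0) - value
        self.balances[to] = self.balances.get(to, 0) + value
        self.call_log.append(f"{sender} -> {to}: {value}")
        if to in self.contracts:
            self.contracts[to](self, value)

def splitter(net: Network, value: int) -> None:
    """Hypothetical contract: forwards half of what it receives to 'vault'."""
    net.send("splitter", "vault", value // 2)  # an internal transaction

def vault(net: Network, value: int) -> None:
    """Hypothetical contract that just keeps whatever it receives."""

net = Network()
net.balances["alice"] = 100
net.contracts.update({"splitter": splitter, "vault": vault})

net.send("alice", "bob", 10)       # TO is a user account: plain transfer
net.send("alice", "splitter", 40)  # TO is a contract: its code runs
print(net.call_log)
# ['alice -> bob: 10', 'alice -> splitter: 40', 'splitter -> vault: 20']
```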

But contracts don’t always have to be about transferring ETH. Anyone can create an application with any rules by defining it as a contract.

Who is using Ethereum?

Ethereum is currently being used mostly by cryptocurrency traders and investors, but there is a growing community of developers that are building dapps (decentralized applications) on the Ethereum Network.

There are thousands of Ethereum-based projects being developed as we speak. Some of the most popular dapps are games (e.g., CryptoKitties and CryptoTulips).

How is Ethereum different from Bitcoin?

Bitcoin is a blockchain technology in which users hold private keys, managed by a wallet that generates the bitcoin addresses other people send bitcoins to. It’s all about the coins: a way to exchange money in a cryptographically secured, decentralized environment.

Ethereum not only lets users exchange money like bitcoin does, but it also has programming languages that let people build applications (contracts) that are executed within the blockchain.

Bitcoin uses proof of work to achieve consensus across the network. Ethereum originally used proof of work as well (the mining described above), but it switched to proof of stake in September 2022.

Ethereum’s creator is publicly known (Vitalik Buterin). Bitcoin’s creator is anonymous and goes by the alias Satoshi Nakamoto.

Other blockchains that do contracts

There are other blockchain projects that allow the creation of contracts. Here is a brief description of what they are and how they differ from Ethereum:

Neo: faster transaction speeds, inability to fork, lower energy use, two tokens (NEO and GAS), and a stated goal of quantum resistance.

Icon: uses loopchain to connect blockchain-based communities around the world.

Nem: contract code is stored outside of the blockchain, resulting in a lighter and faster network.

Ethereum Classic: a continuation of the original Ethereum blockchain, the chain that did not adopt the 2016 hard fork that followed the DAO hack.

Conclusion

Ethereum is a rapidly growing blockchain protocol that allows people to not only transfer assets to each other, but to create decentralized applications that run securely on a distributed network of computers.

This article was produced in partnership with Holberton School and originally appeared on Medium.