Learn how to write for an international audience in this article from our archives.

Writing in English for an international audience does not necessarily put native English speakers in a better position. On the contrary, they tend to forget that the document’s language might not be the first language of the audience. Let’s have a look at the following simple sentence as an example: “Encrypt the password using the ‘foo bar’ command.”

Grammatically, the sentence is correct. Given that “-ing” forms (gerunds and participles) are frequently used in the English language, most native speakers would probably not hesitate to phrase a sentence like this. However, on closer inspection, the sentence is ambiguous: The word “using” may refer either to the object (“the password”) or to the verb (“encrypt”). Thus, the sentence can be interpreted in two different ways:

- Encrypt the password that uses the ‘foo bar’ command.

- Encrypt the password by using the ‘foo bar’ command.

As long as you have prior knowledge of the topic (password encryption or the ‘foo bar’ command), you can resolve this ambiguity and correctly decide that the second reading is the intended meaning of this sentence. But what if you lack in-depth knowledge of the topic? What if you are not an expert but a translator with only general knowledge of the subject? Or what if you are a non-native speaker of English who is unfamiliar with advanced grammatical forms?

Know Your Audience

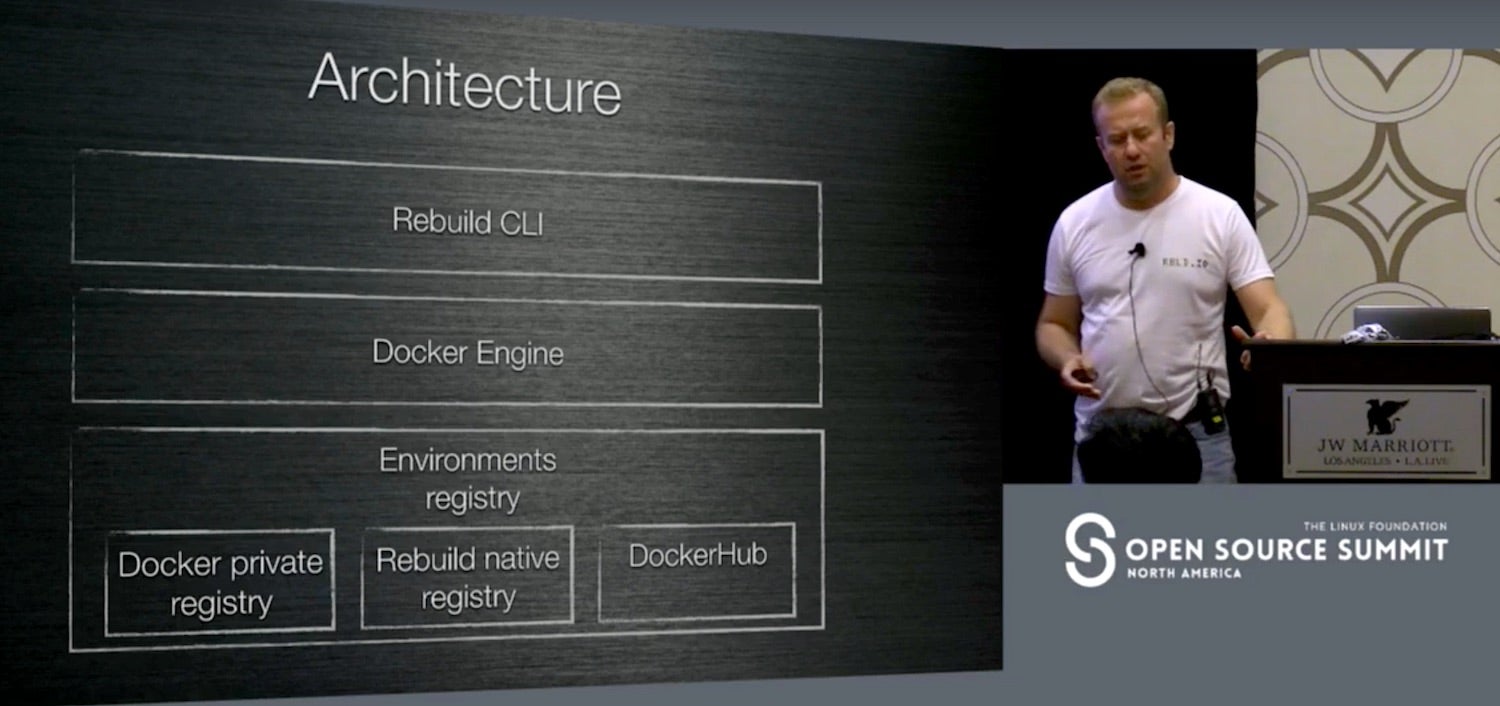

Even native English speakers may need some training to write clear and straightforward technical documentation. Raising awareness of usability and potential problems is the first step. This article, based on my talk at Open Source Summit EU, offers several practical techniques. Most of them apply not only to technical documentation but also to everyday written communication, such as emails or reports.

1. Change perspective. Step into your audience’s shoes. Step one is to know your intended audience. If you are a developer writing for end users, view the product from their perspective. The persona technique can help to focus on the target audience and to provide the right level of detail for your readers.

2. Follow the KISS principle. Keep it short and simple. The principle can be applied at several levels, such as words, grammar, or sentences. Here are some examples:

Words: Uncommon and long words slow down reading and might be obstacles for non-native speakers. Use simpler alternatives:

“utilize” → “use”

“indicate” → “show”, “tell”, “say”

“prerequisite” → “requirement”

Grammar: Use the simplest tense that is appropriate. For example, use present tense when mentioning the result of an action: “Click OK. The Printer Options dialog appears.”

Sentences: As a rule of thumb, present one idea in one sentence. However, restricting sentence length to a certain number of words is not useful in my opinion. Short sentences are not automatically easy to understand (especially if they are a cluster of nouns). Sometimes, trimming down sentences to a certain word count can introduce ambiguities, which can, in turn, make sentences even more difficult to understand.

3. Beware of ambiguities. As authors, we often do not notice ambiguity in a sentence. Having your texts reviewed by others can help identify such problems. If that’s not an option, try to look at each sentence from different perspectives: Does the sentence also work for readers without in-depth knowledge of the topic? Does it work for readers with limited language skills? Is the grammatical relationship between all sentence parts clear? If the sentence does not meet these requirements, rephrase it to resolve the ambiguity.

4. Be consistent. This applies to choice of words, spelling, and punctuation as well as phrases and structure. For lists, use parallel grammatical construction. For example:

Why white space is important:

- It focuses attention.

- It visually separates sections.

- It splits content into chunks.

5. Remove redundant content. Keep only information that is relevant for your target audience. On a sentence level, avoid fillers (“basically”, “easily”) and unnecessary modifiers:

“already existing” → “existing”

“completely new” → “new”
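Some of these checks can be partly automated. The following Python sketch is an illustration only, not a tool referenced in this article: it scans a text for the long words, redundant modifiers, and fillers used as examples above. The names `SIMPLER`, `FILLERS`, and `find_issues` are hypothetical, and the word lists contain only this article's examples, so a real checker would need a much larger list.

```python
import re

# Replacements taken from the examples in this article (assumption:
# a real style checker would use a far longer, curated list).
SIMPLER = {
    "utilize": "use",
    "indicate": "show",
    "prerequisite": "requirement",
    "already existing": "existing",
    "completely new": "new",
}

# Filler words named in the article.
FILLERS = ("basically", "easily")


def find_issues(text):
    """Return (found_text, advice) pairs in order of appearance."""
    hits = []
    for phrase, simpler in SIMPLER.items():
        pattern = rf"\b{re.escape(phrase)}\b"
        for match in re.finditer(pattern, text, re.IGNORECASE):
            hits.append((match.start(), match.group(0), f"use '{simpler}'"))
    for filler in FILLERS:
        pattern = rf"\b{re.escape(filler)}\b"
        for match in re.finditer(pattern, text, re.IGNORECASE):
            hits.append((match.start(), match.group(0), "remove filler"))
    hits.sort()  # order by position in the text
    return [(found, advice) for _, found, advice in hits]


if __name__ == "__main__":
    sample = "Basically, utilize the already existing file."
    for found, advice in find_issues(sample):
        print(f"{found}: {advice}")
```

The sketch matches whole words only, so inflected forms such as “utilizes” are not flagged. It also cannot judge context: a flagged word may be the right choice in a given sentence, so treat the output as a prompt for review, not as a rule.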

As you might have guessed by now, writing is rewriting. Good writing requires effort and practice. But even if you write only occasionally, you can significantly improve your texts by focusing on the target audience and by using basic writing techniques. The better the readability of a text, the easier it is to process, even for an audience with varying language skills. When it comes to localization especially, good quality of the source text is important: Garbage in, garbage out. If the original text has deficiencies, it will take longer to translate the text, resulting in higher costs. In the worst case, the flaws will be multiplied during translation and need to be corrected in various languages.

Driven by an interest in both language and technology, Tanja has been working as a technical writer in mechanical engineering, medical technology, and IT for many years. She joined SUSE in 2005 and contributes to a wide range of product and project documentation, including High Availability and Cloud topics.