Helm can make deploying and maintaining Kubernetes-based applications easier, said Amy Chen in her talk at KubeCon + CloudNativeCon. Chen, a Systems Software Engineer at Heptio, began by dissecting the structure of a typical Kubernetes setup. She explained that she often describes Docker containers as “baby computers”: they are easy to move around, but they still need a “mommy” computer to run on. Containers do, however, carry with them all the environmental dependencies of a given application.

Basic Units

On the next level up is the pod, the basic unit of Kubernetes. Pods group tightly coupled containers together, along with the resources they share, such as storage volumes and a network address. By default they can only be reached from inside the cluster, and they are assigned IP addresses from the cluster’s internal network, not your LAN. These IPs are not stable: when a pod is terminated and a replacement is spun up, the new pod usually gets a different address. This makes pod addresses unreliable, an issue that Kubernetes services solve (see below).

A deployment groups replicated pods together. This is where the Kubernetes concepts of actual state and desired state come into play: the deployment controller is in charge of moving the actual state of the cluster toward the desired state. If, for example, you ask for three pods and one crashes, Kubernetes will spin up a replacement to restore the desired state.
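The desired-state idea can be made concrete with a minimal Python sketch of a reconciliation loop. This is a toy model under simplifying assumptions (pods are just names, and `new_pod` and `reconcile` are hypothetical helpers), not how the real Deployment or ReplicaSet controllers are implemented.

```python
# Toy model of Kubernetes-style reconciliation. NOT real controller code:
# it only illustrates "keep moving the actual state toward the desired state".
import itertools

_ids = itertools.count(1)


def new_pod() -> str:
    """Hypothetical helper: return a fresh, unique pod name."""
    return f"web-{next(_ids)}"


def reconcile(desired_replicas: int, running: list[str]) -> list[str]:
    """Return the pod list after one reconciliation pass."""
    pods = list(running)
    # Too few pods (e.g. one crashed): start replacements.
    while len(pods) < desired_replicas:
        pods.append(new_pod())
    # Too many pods (e.g. the user scaled down): stop the extras.
    while len(pods) > desired_replicas:
        pods.pop()
    return pods


pods = reconcile(3, [])            # initial rollout: three pods
crashed = pods[1]
pods.remove(crashed)               # simulate one pod crashing
pods = reconcile(3, pods)          # the loop notices and replaces it
print(len(pods), crashed in pods)  # → 3 False
```

The replacement pod gets a new name, which mirrors the point above that a replacement is a new pod rather than the old one restarted.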

A service gives a group of pods a single, stable way to be reached. Although a service has nothing to do with the states described above, it does solve the addressing problem: because pods can be terminated and replaced, and the replacements may come up with different IPs, you cannot rely on a pod’s IP to communicate with it. Instead, a service defines a dependable endpoint, with a fixed name and virtual IP, that other parts of the application can use to reach whichever pods currently belong to it. Finally, Ingress routes traffic from the outside world to the internal services.
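In Kubernetes, a service finds its pods through a label selector rather than a fixed list of IPs. The toy Python model below sketches that idea; the `Pod` and `Service` classes, the `app` label, and the IP addresses are hypothetical stand-ins, not Kubernetes code. Clients use the service’s stable name, while the set of pod IPs behind it is recomputed from labels whenever pods come and go.

```python
# Toy model: a Service selects pods by label, so its name stays stable even
# though the IPs of the pods behind it change. Not real Kubernetes code.
from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict[str, str]


@dataclass
class Service:
    name: str                 # stable name clients use, e.g. "web"
    selector: dict[str, str]  # labels a pod must carry to receive traffic

    def endpoints(self, pods: list[Pod]) -> list[str]:
        """IPs of every pod whose labels match all selector entries."""
        return [
            p.ip
            for p in pods
            if all(p.labels.get(k) == v for k, v in self.selector.items())
        ]


svc = Service(name="web", selector={"app": "web"})
pods = [
    Pod("web-1", "10.1.0.5", {"app": "web"}),
    Pod("db-1", "10.1.0.9", {"app": "db"}),
]
print(svc.endpoints(pods))  # → ['10.1.0.5']

# web-1 dies and its replacement comes up with a different IP.
pods[0] = Pod("web-2", "10.1.0.17", {"app": "web"})
print(svc.endpoints(pods))  # → ['10.1.0.17']
```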

All of these pieces can be managed to some extent with Kubernetes’ own kubectl tool, but that approach has drawbacks. For one, kubectl forces you to run several complicated commands, which must be executed in a certain order. Also, kubectl makes no provision for version-controlling your setup. So if you change something and then want to go back to your initial setup, with kubectl you have to tear everything down and build it all up again, re-entering your original settings.

Chart your course

That’s where Helm can help, says Chen. Helm uses charts: packages of text configuration files that together describe a group of Kubernetes manifests. A chart contains a series of templates, yaml documents that define the deployment, service, and ingress. With those in place, running helm install brings up your service.
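On disk, a chart is simply a directory with a fixed layout: a Chart.yaml metadata file, a values.yaml file, and a templates/ directory. The Python sketch below creates a bare skeleton of that layout in a temporary directory; the chart name `my-app`, the values, and the empty template bodies are placeholder assumptions, and in practice `helm create` generates a fuller version for you.

```python
# Sketch: lay out the skeleton of a Helm chart on disk. The chart name
# "my-app" and all file contents are placeholders for illustration only.
import tempfile
from pathlib import Path

CHART_FILES = {
    # Chart metadata. apiVersion v2 is the Helm 3 chart format
    # (Helm 2, current at the time of the talk, used v1).
    "Chart.yaml": "apiVersion: v2\nname: my-app\nversion: 0.1.0\n",
    # Default configuration values consumed by the templates.
    "values.yaml": "replicaCount: 3\n",
    # One template per Kubernetes resource (bodies left empty here).
    "templates/deployment.yaml": "",
    "templates/service.yaml": "",
    "templates/ingress.yaml": "",
}


def scaffold(root: Path) -> Path:
    """Write the placeholder chart files under root/my-app and return that path."""
    chart = root / "my-app"
    for rel, text in CHART_FILES.items():
        path = chart / rel
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(text)
    return chart


with tempfile.TemporaryDirectory() as tmp:
    chart = scaffold(Path(tmp))
    files = sorted(p.relative_to(chart).as_posix() for p in chart.rglob("*") if p.is_file())
    print(files)
```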

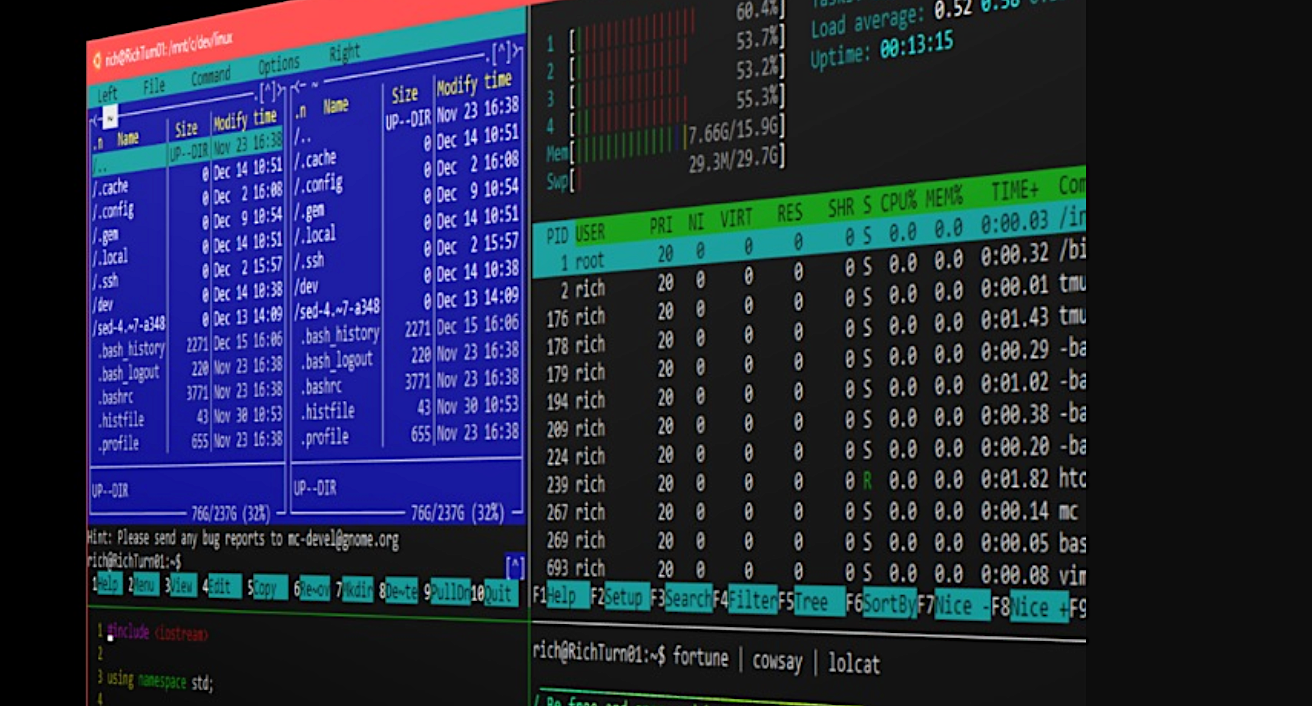

In the demo portion of her talk, Chen showed how Helm makes it easier to get all the moving parts working. The yaml files used by Helm are easy for humans to read: just by looking at each section, you can understand what it does.

In the deployment.yaml file, for example, a line that says replicas: 3 tells Kubernetes to run three replicas of your pod; the containerPort: 80 line declares that the container listens on port 80; and so on. The service.yaml and ingress.yaml files are equally easy to understand.
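Kubernetes manifests are just nested data, so the structure of such a deployment file can be shown without YAML. The Python sketch below builds a minimal `apps/v1` Deployment as a dict to show where the replicas and containerPort settings sit; the name `web` and the image `nginx:1.25` are placeholder assumptions, not taken from Chen’s demo, and a real chart would express this as a yaml template.

```python
# Sketch: the structure of a minimal Deployment manifest, built as a Python
# dict. The name and image are placeholders for illustration.
import json


def deployment(name: str, image: str, replicas: int, port: int) -> dict:
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,                 # the "replicas: 3" line
            "selector": {"matchLabels": labels},  # which pods this Deployment manages
            "template": {                         # pod template used for every replica
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        # the "containerPort: 80" line: the port the container listens on
                        "ports": [{"containerPort": port}],
                    }]
                },
            },
        },
    }


manifest = deployment("web", "nginx:1.25", replicas=3, port=80)
print(json.dumps(manifest, indent=2))
```

Note that the selector’s labels must match the pod template’s labels; that is how the Deployment knows which pods it owns.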

Once your setup is configured, you can run helm install to start the application; Helm then reports back on the release so you can check that everything went as expected.

Helm also lets you use configuration variables. You can create a values.yaml file containing the values that will be substituted into the deployment.yaml, service.yaml and ingress.yaml templates. This means you don’t have to edit each template by hand every time you want to change something, which makes modifications easier and less error-prone.
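The substitution step can be illustrated with a deliberately tiny renderer. Helm itself uses Go’s template engine, where a template refers to a value as, for example, `{{ .Values.replicaCount }}`; the regex-based Python sketch below handles only that simple placeholder form and is an analogy, not Helm’s implementation. The keys `replicaCount` and `service.port` and the template fragment are assumptions chosen for illustration.

```python
# Toy illustration of values substitution. Helm uses Go templates; this
# regex renderer only understands simple "{{ .Values.key }}" placeholders.
import re

PLACEHOLDER = re.compile(r"\{\{\s*\.Values\.([A-Za-z0-9_.]+)\s*\}\}")


def lookup(values: dict, dotted: str):
    """Resolve a dotted path like 'service.port' in nested dicts."""
    node = values
    for part in dotted.split("."):
        node = node[part]
    return node


def render(template: str, values: dict) -> str:
    """Replace each placeholder with the matching entry from values."""
    return PLACEHOLDER.sub(lambda m: str(lookup(values, m.group(1))), template)


# Hypothetical fragment of a deployment.yaml template.
deployment_tpl = """\
spec:
  replicas: {{ .Values.replicaCount }}
  template:
    spec:
      containers:
        - name: web
          ports:
            - containerPort: {{ .Values.service.port }}
"""

# Stand-in for the parsed contents of a values.yaml file.
values = {"replicaCount": 3, "service": {"port": 80}}
rendered = render(deployment_tpl, values)
print(rendered)
```

Changing `replicaCount` in the values dict, the analogue of editing values.yaml, changes the rendered manifest without touching the template itself.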

Helm also lets you “upgrade” (which, in this context, means modify) a running setup live with the helm upgrade command. If, for any reason, the upgrade does not behave the way you want, you can use helm list to see your releases and helm history to see a release’s earlier revisions, and then use helm rollback to return to the revision that worked best.
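The revision bookkeeping behind upgrade and rollback can be sketched in a few lines of Python. This is a simplified model, not Helm’s storage format: `Release`, `deploy` and `rollback` are hypothetical names and configurations are plain dicts. It does reflect one real detail of Helm’s behavior, namely that a rollback is recorded as a new revision rather than erasing the later ones.

```python
# Toy model of a release's revision history: every install, upgrade and
# rollback appends a new numbered revision. Not Helm's actual internals.
from dataclasses import dataclass, field


@dataclass
class Release:
    name: str
    history: list[dict] = field(default_factory=list)  # index 0 = revision 1

    def deploy(self, config: dict) -> int:
        """Record a new revision (install or upgrade) and return its number."""
        self.history.append(dict(config))
        return len(self.history)

    def rollback(self, revision: int) -> int:
        """Redeploy an earlier revision's config as a brand-new revision."""
        return self.deploy(self.history[revision - 1])

    @property
    def current(self) -> dict:
        return self.history[-1]


rel = Release("my-app")          # hypothetical release name
rel.deploy({"replicaCount": 3})  # revision 1: install
rel.deploy({"replicaCount": 0})  # revision 2: a bad upgrade
rev = rel.rollback(1)            # revision 3: a copy of revision 1
print(rev, rel.current)          # → 3 {'replicaCount': 3}
```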

In conclusion, says Chen, Helm is not only useful for beginners thanks to its simplicity, but it also provides extra features that make running and maintaining Kubernetes-based applications more efficient than using kubectl alone.

Watch the entire presentation below:

Learn more about Kubernetes at KubeCon + CloudNativeCon Europe, coming up May 2-4 in Copenhagen, Denmark.