“The blockchain is an incorruptible digital ledger of economic transactions that can be programmed to record not just financial transactions but virtually everything of value.” (Don & Alex Tapscott, authors of Blockchain Revolution, 2016)

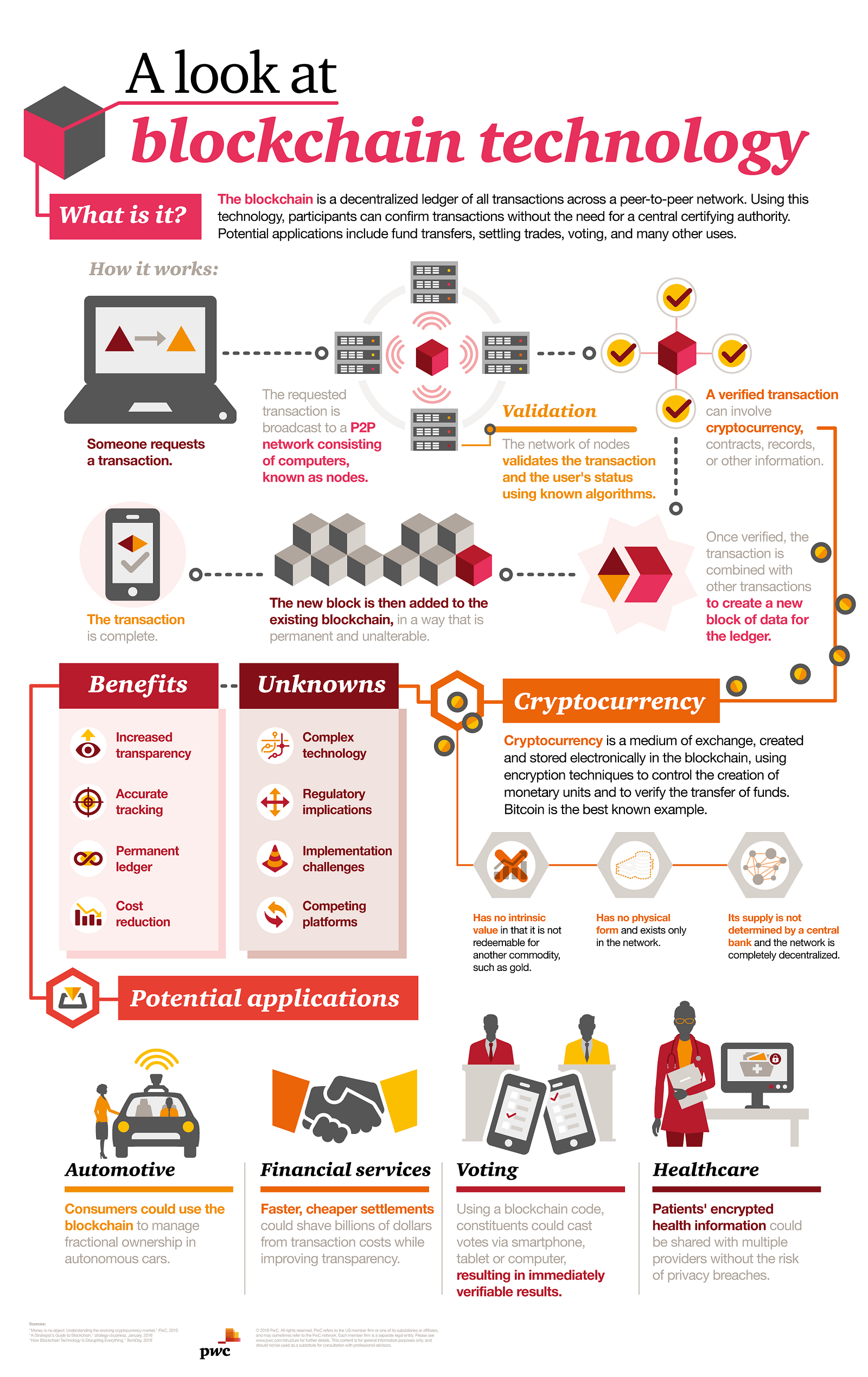

Blockchain is the digital and decentralized ledger that records all transactions. (See the “Blockchain Simplified” video for more information.) Every time someone buys digital coins on a decentralized exchange, sells coins, transfers coins, or buys a good or service with virtual coins, a ledger records that transaction, often in an encrypted fashion, to protect it from cybercriminals. These transactions are also recorded and processed without a third-party provider, which is usually a bank.

A distributed database

Picture a spreadsheet that is duplicated thousands of times across a network of computers. Then imagine that this network is designed to regularly update this spreadsheet and you have a basic understanding of the blockchain.

Information held on a blockchain exists as a shared — and continually reconciled — database. This is a way of using the network that has obvious benefits. The blockchain database isn’t stored in any single location, meaning the records it keeps are truly public and easily verifiable. No centralized version of this information exists for a hacker to corrupt. Hosted by millions of computers simultaneously, its data is accessible to anyone on the Internet.
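The "easily verifiable" property can be illustrated with a small sketch. It assumes that each block stores a SHA-256 hash of its own contents plus the hash of the previous block, which is a simplification of how real blockchains link their records. The function names and the sample transactions are invented for illustration.

```python
import hashlib
import json


def block_hash(index, transactions, prev_hash):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "transactions": transactions, "prev_hash": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain, transactions):
    """Append a block that points at the hash of the current last block."""
    prev_hash = chain[-1]["hash"] if chain else "0" * 64
    index = len(chain)
    chain.append(
        {
            "index": index,
            "transactions": transactions,
            "prev_hash": prev_hash,
            "hash": block_hash(index, transactions, prev_hash),
        }
    )


def is_valid(chain):
    """Recompute every hash and check that each block links to its predecessor."""
    prev_hash = "0" * 64
    for block in chain:
        if block["prev_hash"] != prev_hash:
            return False
        expected = block_hash(block["index"], block["transactions"], prev_hash)
        if block["hash"] != expected:
            return False
        prev_hash = block["hash"]
    return True


ledger = []
append_block(ledger, ["alice pays bob 5"])
append_block(ledger, ["bob pays carol 2"])

# Each node keeps its own copy; here one copy is altered after the fact.
honest_copy = json.loads(json.dumps(ledger))
tampered_copy = json.loads(json.dumps(ledger))
tampered_copy[0]["transactions"] = ["alice pays mallory 500"]

print(is_valid(honest_copy), is_valid(tampered_copy))
```

Any node can rerun `is_valid` on its own copy, so an edited record stands out without a central authority having to vouch for the data.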

The main reason we even have this cryptocurrency and blockchain revolution is the perceived shortcomings of the traditional banking system. What shortcomings, you ask? For example, when transferring money to overseas markets, a payment could be delayed for days while a bank verifies it. Many would argue that financial institutions shouldn’t tie up cross-border payments and funds for such an extensive amount of time.

Likewise, banks almost always serve as an intermediary of currency transactions, thus taking their cut in the process. Blockchain developers want the ability to process payments without a need for this middleman.

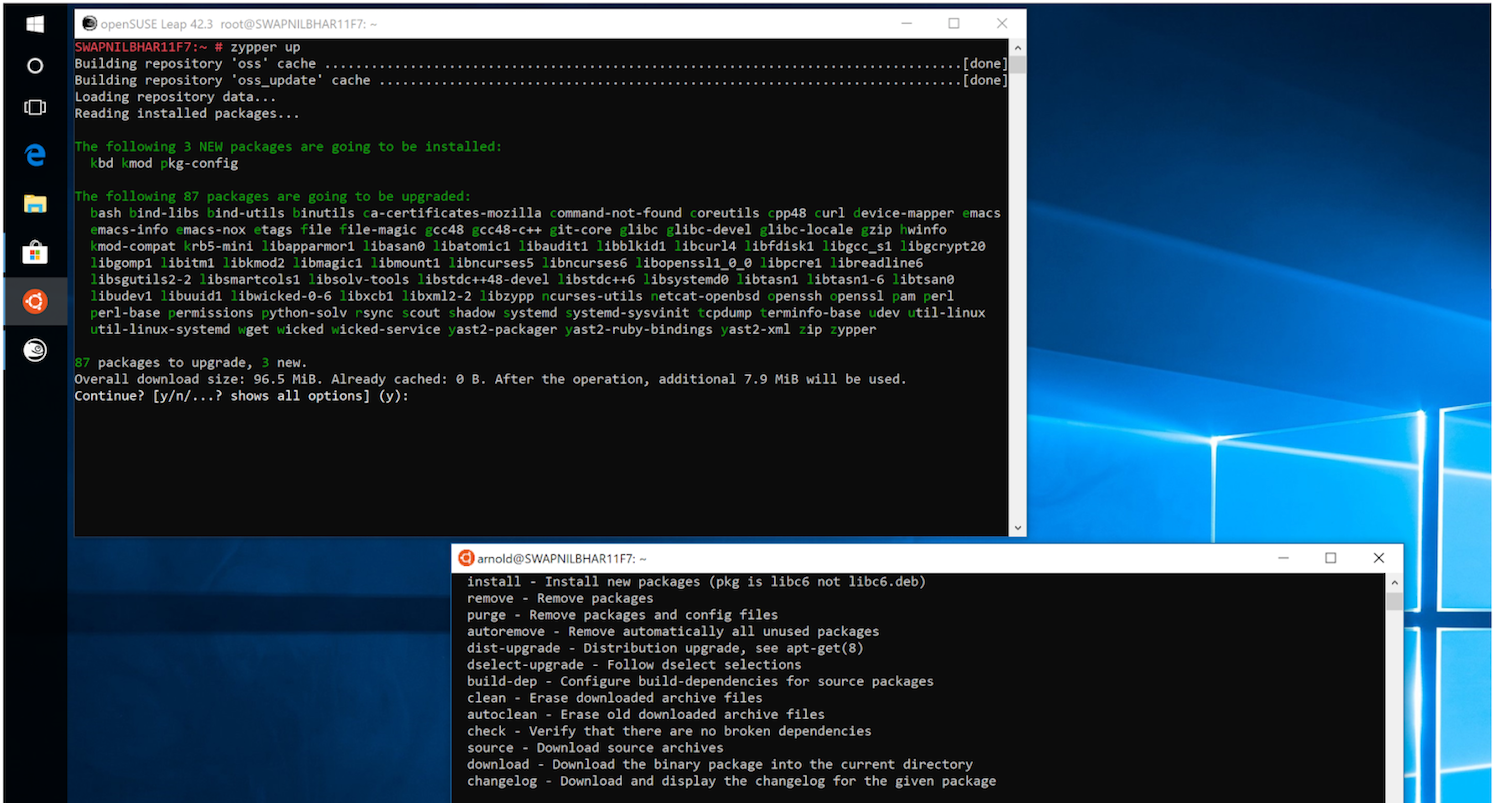

A network of so-called computing “nodes” makes up the blockchain.

A node is a computer connected to the blockchain network using a client that validates and relays transactions. Each node gets a copy of the blockchain, which is downloaded automatically upon joining the network.

Together they create a powerful second-level network, a wholly different vision for how the internet can function.

Every node is an “administrator” of the blockchain, and joins the network voluntarily (in this sense, the network is decentralized). However, each one has an incentive for participating in the network: the chance of winning Bitcoins.

Nodes are said to be “mining” Bitcoin, but the term is something of a misnomer. In fact, each one is competing to win Bitcoins by solving computational puzzles. Bitcoin was the raison d’être of the blockchain as it was originally conceived. It’s now recognized to be only the first of many potential applications of the technology.
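A toy version of such a computational puzzle might look like the sketch below. It assumes the puzzle is "find a number (a nonce) that, combined with the block data, produces a SHA-256 hash starting with a given number of zeros". Bitcoin's real puzzle is more involved (it hashes a structured block header twice and compares against a numeric target), so the functions and the difficulty value here are illustrative only.

```python
import hashlib


def puzzle_digest(block_data, nonce):
    """Hash the block data combined with a candidate nonce."""
    return hashlib.sha256(f"{block_data}{nonce}".encode("utf-8")).hexdigest()


def mine(block_data, difficulty):
    """Try nonces in order until the digest starts with `difficulty` zero hex digits."""
    target_prefix = "0" * difficulty
    nonce = 0
    while True:
        digest = puzzle_digest(block_data, nonce)
        if digest.startswith(target_prefix):
            return nonce, digest
        nonce += 1


def verify(block_data, nonce, difficulty):
    """Checking a solution takes one hash, however long the search took."""
    return puzzle_digest(block_data, nonce).startswith("0" * difficulty)


nonce, digest = mine("alice pays bob 5", difficulty=4)
print(nonce, digest)
```

The asymmetry is the point: finding the nonce takes many attempts, while any other node can confirm the answer with a single call to `verify`.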

There are an estimated 700 Bitcoin-like cryptocurrencies (exchangeable value tokens) already available. As well, a range of other potential adaptations of the original blockchain concept are currently active, or in development.

What are the applications of blockchain?

The nature of blockchain technology has got imaginations running wild, because the idea can now be applied to any need for a trustworthy record. It is also putting the full power of cryptography in the hands of individuals, stopping digital relationships from requiring a transaction authority for what are considered ‘pull transactions’.

For sure, there is also a lot of hype. This hype is perhaps the result of how easy it is to dream up a high-level use case for the application of blockchain technology. It has been described as ‘magic beans’ by several of the industry’s brightest minds.

There is more on how to test whether blockchain technology is appropriate for a use case in “Why Use a Blockchain?” For now, let’s look at the development of blockchain technology for how it could be useful.

As a system of record

Digital identity

Cryptographic keys in the hands of individuals allow for new ownership rights and a basis to form interesting digital relationships. Because identity here is not based on accounts and the permissions associated with them, because each transaction is a push transaction, and because ownership of private keys is ownership of the digital asset, this provides a new and secure way to manage identity in the digital world, one that avoids exposing users to sharing too much vulnerable personal information.

Tokenization

Tokens are used to bind the physical and digital worlds. These digital tokens are useful for supply chain management, intellectual property, and anti-counterfeiting and fraud detection.

Inter-organizational data management

Blockchain technology represents a revolution in how information is gathered and collected. It is less about maintaining a database, more about managing a system of record.

For governments

Governments have an interest in all three components of blockchain technology. Firstly, there are the ownership rights surrounding cryptographic key possession, revocation, generation, replacement, or loss. They also have an interest in who can act as part of a blockchain network. And they have an interest in blockchain protocols as they authorize transactions, as governments often regulate transaction authorization through compliance regimes (e.g. stock market regulators authorize the format of market exchange trades).

For this reason, regulatory compliance is seen as a business opportunity by many blockchain developers.

For financial institutions

For audit trails

Using the client-server infrastructure, banks and other large institutions that help individuals form digital relationships over the internet are forced to secure the account information they hold on users against hackers.

Blockchain technology offers a means to automatically create a record of who has accessed information or records, and to set controls on permissions required to see information.
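As a rough sketch of that idea, the class below records every read attempt, allowed or not, and chains each log entry to the previous one with a hash so that later edits to the log would be detectable. The class name, the record identifiers and the users are all hypothetical, and a real deployment would replicate the log across nodes rather than keep it in one Python object.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class AccessLog:
    permissions: dict  # record id -> set of users allowed to read it
    entries: list = field(default_factory=list)

    def read(self, user, record_id):
        """Check permission and log the attempt, whether or not it succeeds."""
        allowed = user in self.permissions.get(record_id, set())
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        line = f"{prev_hash}|{user}|{record_id}|{allowed}"
        self.entries.append(
            {
                "user": user,
                "record": record_id,
                "allowed": allowed,
                "hash": hashlib.sha256(line.encode("utf-8")).hexdigest(),
            }
        )
        return allowed


log = AccessLog(permissions={"chart-17": {"dr_lee"}})
log.read("dr_lee", "chart-17")   # permitted, and recorded
log.read("mallory", "chart-17")  # refused, and still recorded
```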

This also has important implications for health records.

As a platform

For smart contracting

Blockchains are where digital relationships are being formed and secured. In short, this version of smart contracts seeks to use information and documents stored in blockchains to support complex legal agreements.

Other startups are working on sidechains — bespoke blockchains plugged into larger public blockchains. These ‘federated blockchains’ are able to overcome problems like the block size debate plaguing bitcoin. It is thought these groups will be able to create blockchains that authorize super-specific types of transactions.

Ethereum takes the platform idea further. A new type of smart contracting was first introduced in Vitalik Buterin’s white paper, “A Next Generation Smart Contract and Decentralized Application Platform”. This vision is about applying business logic on a blockchain, so that transactions of any complexity can be coded, then authorized (or denied) by the network running the code.

As such, Ethereum’s primary purpose is to be a platform for smart contract code, comprising programs controlling blockchain assets, executed by a blockchain protocol, and in this case running on the Ethereum network.

For automated governance

Bitcoin itself is an example of automated governance, or a DAO (decentralized autonomous organization). It, and other projects, remain experiments in governance, and much research is missing on this subject.

For markets

Another way to think of cryptocurrency is as a digital bearer bond. This simply means establishing a digitally unique identity for keys to control code that can express particular ownership rights (e.g. it can be owned or can own other things). These tokens mean that ownership of code can come to represent a stock, a physical item or any other asset.

For automating regulatory compliance

Beyond just being a trusted repository of information, blockchain technology could enable regulatory compliance in code form — in other words, how blocks are made valid could be a translation of government legal prose into digital code. This means that banks could automate regulatory reporting or transaction authorization.
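To make "compliance in code form" concrete, here is a minimal sketch in which a block is only valid if every transfer in it passes a rule check. The rule itself (transfers at or above a threshold must carry a report identifier) and the threshold figure are invented for illustration and do not correspond to any actual regulation.

```python
from dataclasses import dataclass
from typing import Optional

REPORTING_THRESHOLD = 10_000  # hypothetical figure, not taken from any real rule


@dataclass
class Transfer:
    sender: str
    recipient: str
    amount: int
    report_id: Optional[str] = None  # reference to a filed report, if any


def complies(transfer):
    """One rule written as code: large transfers need a report attached."""
    if transfer.amount <= 0:
        return False
    if transfer.amount >= REPORTING_THRESHOLD and transfer.report_id is None:
        return False
    return True


def block_is_valid(transfers):
    """A block is accepted only if every transfer in it passes the rule."""
    return all(complies(t) for t in transfers)


small = Transfer("alice", "bob", 500)
large_reported = Transfer("alice", "bob", 25_000, report_id="R-001")
large_unreported = Transfer("alice", "bob", 25_000)

print(block_is_valid([small, large_reported]))    # True
print(block_is_valid([small, large_unreported]))  # False
```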

What is Ethereum?

Ethereum is the second-largest blockchain. It’s much smaller than bitcoin — its cryptocurrency token, ether, has a market cap of $42 billion, compared to bitcoin’s quarter of a trillion dollars — but Ethereum can integrate smart contracts onto its blockchain. “So if I upload a program, let’s say a bet, I escrow some money into it, you escrow some money into it, and then a third party lets us know whether the Chicago Bulls beat the New York Knicks or vice versa, resolving our bet,” explains Joe Lubin, one of Ethereum’s founders.
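The bet Lubin describes can be mimicked in a few lines of ordinary Python. This is only a simulation of the logic, not on-chain contract code: the class, the `oracle` role (the third party who reports the result) and the stake amounts are assumptions made for the example.

```python
class Bet:
    """Toy stand-in for an escrow contract between two bettors."""

    def __init__(self, oracle, stake):
        self.oracle = oracle   # the only party allowed to report the result
        self.stake = stake     # amount each bettor must escrow
        self.picks = {}        # bettor -> team they backed
        self.settled = False

    def join(self, bettor, team, deposit):
        if self.settled or len(self.picks) >= 2:
            raise RuntimeError("bet is closed")
        if deposit != self.stake:
            raise ValueError("deposit must equal the stake")
        if bettor in self.picks or team in self.picks.values():
            raise ValueError("each bettor must back a different team")
        self.picks[bettor] = team

    def resolve(self, caller, winning_team):
        """Pay the whole pot to whoever backed the winning team."""
        if caller != self.oracle:
            raise PermissionError("only the oracle can report the result")
        if self.settled or len(self.picks) != 2:
            raise RuntimeError("bet is not ready to settle")
        winners = [b for b, team in self.picks.items() if team == winning_team]
        if not winners:
            raise ValueError("winning team was not part of the bet")
        self.settled = True
        return winners[0], 2 * self.stake


bet = Bet(oracle="scorekeeper", stake=10)
bet.join("you", "Bulls", 10)
bet.join("me", "Knicks", 10)
payout = bet.resolve("scorekeeper", "Bulls")
print(payout)  # ('you', 20)
```

Neither bettor can release the funds; only the agreed third party's report does, and the payout rule is fixed before anyone deposits.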

Ethereum isn’t meant to be just a cryptocurrency like bitcoin, according to Lubin, but a full enterprise platform onto which programmers can build applications for any number of things. Despite that, one ether went from being worth $8 in January to being worth $434 in December as investors began to sense the enormous sums of money to be made.

Like Bitcoin, Ethereum is a distributed public blockchain network. Although there are some significant technical differences between the two, the most important distinction to note is that Bitcoin and Ethereum differ substantially in purpose and capability. Bitcoin offers one particular application of blockchain technology, a peer to peer electronic cash system that enables online Bitcoin payments. While the Bitcoin blockchain is used to track ownership of digital currency (bitcoins), the Ethereum blockchain focuses on running the programming code of any decentralized application.

In the Ethereum blockchain, instead of mining for bitcoin, miners work to earn Ether, a type of crypto token that fuels the network. Beyond a tradeable cryptocurrency, Ether is also used by application developers to pay for transaction fees and services on the Ethereum network.
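As a simplified worked example of those transaction fees: on Ethereum a fee is computed as the amount of "gas" a transaction consumes multiplied by a price per unit of gas, with prices usually quoted in gwei (one billionth of an ether). A plain ether transfer uses 21,000 gas. The gas price of 20 gwei below is a made-up figure, and the sketch ignores later refinements to the fee market.

```python
from decimal import Decimal

WEI_PER_GWEI = 10**9
WEI_PER_ETHER = 10**18
SIMPLE_TRANSFER_GAS = 21_000  # gas consumed by a plain ether transfer


def fee_in_wei(gas_used, gas_price_gwei):
    """Fee = gas used x price per unit of gas, expressed in wei."""
    return gas_used * gas_price_gwei * WEI_PER_GWEI


def wei_to_ether(wei):
    """Convert wei to ether without floating-point rounding."""
    return Decimal(wei) / Decimal(WEI_PER_ETHER)


fee = fee_in_wei(SIMPLE_TRANSFER_GAS, gas_price_gwei=20)  # 20 gwei is hypothetical
print(wei_to_ether(fee))  # 0.00042
```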

Bitcoin can be described as digital money. Bitcoin has been around for eight years and is used to transfer money from one person to another. It is commonly used as a store of value and has been a critical way for the public to understand the concept of a decentralized digital currency.

Ethereum is different from Bitcoin in that it allows for smart contracts, which can be described as highly programmable digital money. Imagine automatically sending money from one person to another, but only when a certain set of conditions is met. For example, an individual wants to purchase a home from another person. Traditionally there are multiple third parties involved in the exchange, including lawyers and escrow agents, which makes the process unnecessarily slow and expensive. With Ethereum, a piece of code could automatically transfer the home ownership to the buyer and the funds to the seller after a deal is agreed upon, without needing a third party to execute on their behalf.

Application of Ethereum

In addition to being a great investment coin, Ethereum is a platform that enables what has come to be known as “web 3.0”. “Web 2.0” (the internet as we know it) is based on centralized servers; to have access to the internet and most of the services therein, we have to rely on third-party servers. These servers charge us fees and collect our data (often against our will).

With Ethereum, there are no centralized servers. Instead, Ethereum runs on blockchain technology, a kind of distributed ledger technology that is upheld by a network of thousands of different computers, called “nodes.” In exchange for performing the duties that secure the network and verify the transactions that take place on it, the nodes receive rewards in the form of ETH tokens.

Ethereum was the first cryptocurrency that was built to function primarily as a settlement layer. This means that it was designed as a platform for other things to be developed on. In contrast, Bitcoin was designed initially to transact as a form of “digital cash” (though, over time, Bitcoin has become more of a settlement layer itself).

What are smart contracts and how does Ethereum work?

Smart contracts, the concept Ethereum builds on, were famously explained by Nick Szabo as being similar to a vending machine.

When you use a vending machine, you put money in, press a button, and receive your candy. It’s all automated; there is no third-party that needs to come and make sure that you inserted your coin or unlock the door to give the candy to you.

Smart contracts operate similarly, except they usually have nothing to do with candy; rather, they can be applied to tons of different scenarios across different industries. Smart contracts take the “middle man” out of a variety of legal and financial services.

For example, Hermione is buying a home to move into immediately and needs to set up a mortgage contract with the seller. In today’s world, she must go to a bank or use another third-party service to draw up the contract. The third party charges fees and the process takes a while, costing time and money that Hermione doesn’t have.

Using a smart contract, a legal mortgage contract could be set up without involving any third party. The loan’s data would be stored on the blockchain, and anytime that the borrower missed a payment, the keys to the loan’s collateral would be automatically revoked.
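The mortgage rule in that paragraph can be sketched as a small state machine. This is plain Python standing in for contract logic, with hypothetical names and amounts; it only models the rule "a missed payment revokes the key to the collateral" and leaves out everything a real loan would need, such as interest, grace periods and dispute handling.

```python
class MortgageContract:
    """Toy model: the borrower keeps the collateral key while payments are made."""

    def __init__(self, borrower, monthly_payment):
        self.borrower = borrower
        self.monthly_payment = monthly_payment
        self.paid_months = set()
        self.collateral_key_active = True

    def pay(self, payer, month, amount):
        """Record a payment if it comes from the borrower and covers the instalment."""
        if payer != self.borrower or amount < self.monthly_payment:
            return False
        self.paid_months.add(month)
        return True

    def close_month(self, month):
        """Run at the end of each month: a missed payment revokes the key."""
        if month not in self.paid_months:
            self.collateral_key_active = False
        return self.collateral_key_active


loan = MortgageContract(borrower="hermione", monthly_payment=1_200)
loan.pay("hermione", month=1, amount=1_200)
after_month_1 = loan.close_month(1)  # payment made, key stays active
after_month_2 = loan.close_month(2)  # no payment recorded, key is revoked
print(after_month_1, after_month_2)
```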

Using Smart Contracts to Build Dapps

Ethereum can be (and is) used as a platform for developing “dapps,” another name for “decentralized apps.” There are a variety of benefits of using dapps as opposed to traditional applications, including:

- Dapps are decentralized: they are not run by any single entity. This means that the third parties that are an inherent part of the structure of traditional applications do not exist. You know, the third parties that exist to charge fees or to collect your personal data?

- Ethereum-based dapps know the pseudonymous identity of each user. In other words, dapp users will never have to provide personal information to register or use dapps; in fact, users will not have to register at all. Instead, the dapp will operate using your pseudonymous identity within the Ethereum network.

- All payments are processed within the Ethereum network. There is no need to integrate PayPal, Stripe, or any other third-party means of payment into Ethereum-based dapps. Payments will be securely sent and received on the Ethereum network.

- The blockchain holds all the data. While some extraneous data may be held outside of the blockchain, everything that needs to be kept secure will be stored forever (immutably) within the blockchain. Additionally, any logs created within dapps are held on the blockchain, making public data easily searchable.

- The front- and back-end code is open source. You can independently verify that the dapp you’re using is secure and that there is no malicious code.

Ethereum vs. other smart contract alternatives

Ethereum has a long road ahead if it wants to achieve its ambition of becoming the world’s “decentralized computer.” Even Vitalik Buterin, the creator of Ethereum, doubts its current ability to scale, saying, “Scalability [currently] sucks; the blockchain design fundamentally relies on bottlenecks where individual nodes must process every single transaction in the entire network.”

He’s correct. The Ethereum blockchain keeps getting bigger, and exhibits an increasingly large footprint for the hardware of miners and users alike. Additionally, its relatively outdated algorithmic programming makes inefficient use of the chain’s processing power, and returns a dismal number of transactions per second. This is a problem for businesses who rely on Ethereum smart contracts and impacts its future applicability and price. Fortunately, there are other smart contract platforms built on blockchain that are working to evolve the concept further.
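The bottleneck Buterin describes comes down to simple arithmetic, sketched below under the assumption that every node processes every transaction. The per-node capacity of 15 transactions per second is a placeholder value, not a measured figure.

```python
def total_work(num_nodes, transactions):
    """Every node processes every transaction, so total work scales with node count."""
    return num_nodes * transactions


def network_throughput(num_nodes, per_node_tps):
    """Adding nodes adds copies of the same work, not extra capacity."""
    if num_nodes < 1:
        raise ValueError("a network needs at least one node")
    return per_node_tps


PER_NODE_TPS = 15  # hypothetical capacity of a single node

print(network_throughput(10, PER_NODE_TPS))      # 15
print(network_throughput(10_000, PER_NODE_TPS))  # 15
print(total_work(10_000, 1_000))                 # 10000000
```

Growing the network from ten nodes to ten thousand multiplies the work done a thousandfold while the number of transactions confirmed per second stays the same.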

1. QTUM

One of the most promising contenders for Ethereum’s title is QTUM, a hybrid cryptocurrency technology that takes the best attributes of bitcoin and Ethereum before blending them together. The result is a solution that resembles bitcoin core, but also includes an Account Abstraction Layer that gives QTUM’s blockchain smart contract functionality via a more robust x86 Virtual Machine.

Essentially this is an off-layer scaling solution akin to what bitcoin seeks in SegWit and the Lightning Network, combined with the ability to build and host smart contracts. This has made QTUM a popular destination for developers, who appreciate the protective clauses installed in the platform that make it nigh impossible to commit the kinds of coding infractions that might one day become a multi-million-dollar problem. They also appreciate the presence of second-layer storage, despite its implications on decentralization, because stable business applications are their primary desire, as well they should be.

2. Ethereum Classic

The most famous hard fork that the cryptocurrency community has witnessed was the split between Ethereum and Ethereum Classic in 2016, which left two separate chains running in parallel. The controversy surrounded a hack in which an attacker took over $50 million in ETH from a smart contract that was holding the funds in escrow as part of the original DAO (Decentralized Autonomous Organization) project.

After the attacker exploited a bug in the DAO contract to drain ETH from it, the community voted for a hard fork so that the theft could be reversed and the coins returned to their rightful owners. The forked chain kept the Ethereum name. Ethereum Classic is the original chain, maintained by those who rejected the fork on the principle that it should be impossible to backtrack, even in the case of heinous breaches, of which there have been several. Ethereum Classic continues to be upgraded thanks to a vibrant and active community, and keeps pace with other projects despite its age.

3. NEO

NEO is what people like to refer to as “China’s Ethereum,” and for good reason. First, the two are very similar, and bill themselves as hosts of decentralized applications (dApps), ICOs, and smart contracts. They’re both open source, but while Ethereum is supported by a democratic foundation of developers, NEO is often reported to have the backing of China’s government. This has made it popular both domestically and abroad, as has its unique value proposition.

NEO uses a more energy-efficient consensus mechanism called dBFT (delegated Byzantine Fault Tolerance) instead of proof-of-work, making it much faster, with a claimed rate of up to 10,000 transactions per second. Moreover, it supports more computer languages than Ethereum. People can build dApps with Java, C#, and soon Python and Go, making this option accessible to startups with big ideas while helping to add to its long-term viability.
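The arithmetic behind Byzantine fault tolerant consensus can be sketched briefly. It relies on the standard result that a network of n voting nodes can tolerate at most f = (n - 1) // 3 faulty or dishonest nodes, so a block needs approval from at least n - f of them. The function names are illustrative, and the sketch leaves out how the voting nodes are chosen and how they exchange messages.

```python
def max_faulty(n):
    """Largest number of faulty nodes a BFT network of n nodes can tolerate."""
    if n < 1:
        raise ValueError("a network needs at least one node")
    return (n - 1) // 3


def quorum(n):
    """Minimum number of approvals needed to accept a block."""
    return n - max_faulty(n)


def block_accepted(n, approvals):
    """A block is final once enough voting nodes have approved it."""
    return approvals >= quorum(n)


print(max_faulty(7), quorum(7))  # 2 5
print(block_accepted(7, 5))      # True
print(block_accepted(7, 4))      # False
```

Because agreement comes from counting votes rather than from racing to solve puzzles, no mining work is needed, which is where the energy savings come from.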

4. Cardano

One of the newest entries into the smart contract platform contest, Cardano is a dual-layer solution, but with a unique twist. The platform has a unit of account and a control layer that governs the use of smart contracts, recognizes identity, and maintains a degree of separation from the currency it supports.

Cardano is programmed in Haskell, a language best suited for business applications and data analysis, making its future applications likely to be financial or organizational. This ideal blend of public sector usability and privacy protection makes Cardano a potentially groundbreaking solution, but it’s still very young. While the developer team’s use of deliberate, airtight scientific methodology makes progress slow, the platform will likely be free of the parity and security mistakes that are an unfortunate reality in its more haphazardly assembled peers.

Mitali Sengupta is a former digital marketing professional, currently enrolled as a full-stack engineering student at Holberton School. She is passionate about innovation in AI and Blockchain technologies. You can contact Mitali on Twitter, LinkedIn or GitHub.