Back in 2016, the Solus developers announced they were switching their operating system over to a rolling release. Solus 3 marks the third iteration since that announcement and, in such a short time, the Solus platform has come a long way. But for many, Solus 3 will be their first look at this particular take on the Linux operating system. With that in mind, I want to examine what Solus 3 offers that might entice the regular user away from their current operating system. You might be surprised when I say, “There’s plenty.”

This third release of Solus is an actual “release” and not a snapshot. What does that mean? The previous two releases of Solus were snapshots. Solus has actually moved away from the regular snapshot model found in rolling releases. With the standard rolling release, a new snapshot is posted at least every few days; from that snapshot an image can be created such that the difference between an installation and latest updates is never large. However, the developers have opted to use a hybrid approach to the rolling release. According to the Solus 3 release announcement, this offers “feature rich releases with explicit goals and technology enabling, along with the benefits of a curated rolling release operating system.”

Of course, the average user doesn’t really care whether an operating system is a rolling release or a hybrid. From that perspective, what matters more is how well the platform works, how easy it is to use, and what it offers out of the box.

Let’s take a look at those three points to see just how well Solus 3 could serve even a new-to-Linux user.

What Solus 3 offers out of the box

On many levels, this is the most important point for first-time users. Why? Because many Linux distributions don’t meet a user’s minimum needs out of the box; they require tinkering and extra packages first. This, however, is an area where Solus 3 really shines. Once installed, the average user will have everything they need to get their work done, and then some.

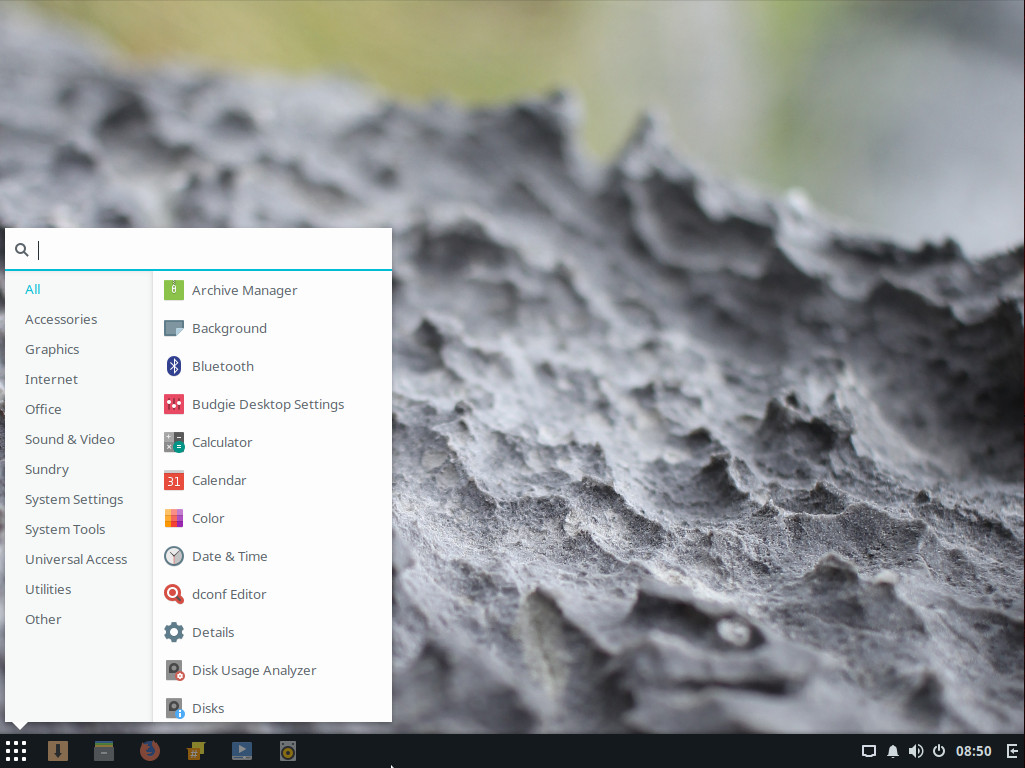

First off, Solus 3 features the Budgie desktop (Figure 1). Anyone who has used a PC desktop since Windows XP will be instantly at home. The standard features abound:

- Task bar
- Application menu (with search)
- System tray
- Notification center
- Desktop icons

Once users get beyond the desktop interface, they’ll find all the applications necessary to go about their days:

- Firefox web browser (version 55.0.3)
- LibreOffice office suite (version 5.4.0.3)
- Thunderbird email client with Lightning calendar pre-installed (version 52.3.0)
- Rhythmbox audio player (version 3.4.1)
- GNOME MPV movie player (version 0.12)
- GNOME Calendar (version 3.24.3)
- GNOME Files file manager (version 3.24.2)

Note that the version numbers above reflect a system update run right after the initial installation.

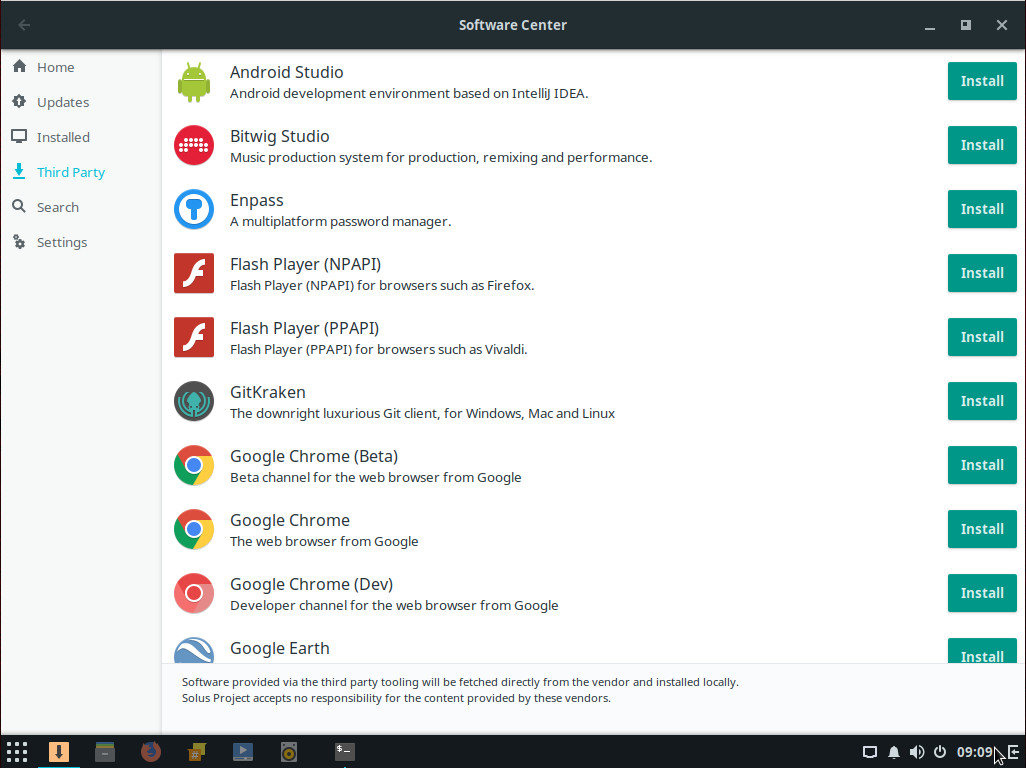

Solus 3 also includes a fairly straightforward Software Center tool, one that has a nifty trick up its sleeve. Unlike the software tools in many Linux distributions, the Solus Software Center includes a Third Party section, so the user doesn’t have to add extra repositories to install the likes of Android Studio, Google Chrome, Insync, Skype, Spotify, Viber, WPS Office Suite, and more. All you have to do is open the Software Center, click Third Party, and find the third-party software you want to install (Figure 2).

Beyond the desktop and the included software, Solus 3 offers the user a remarkably pain-free experience, right out of the box.

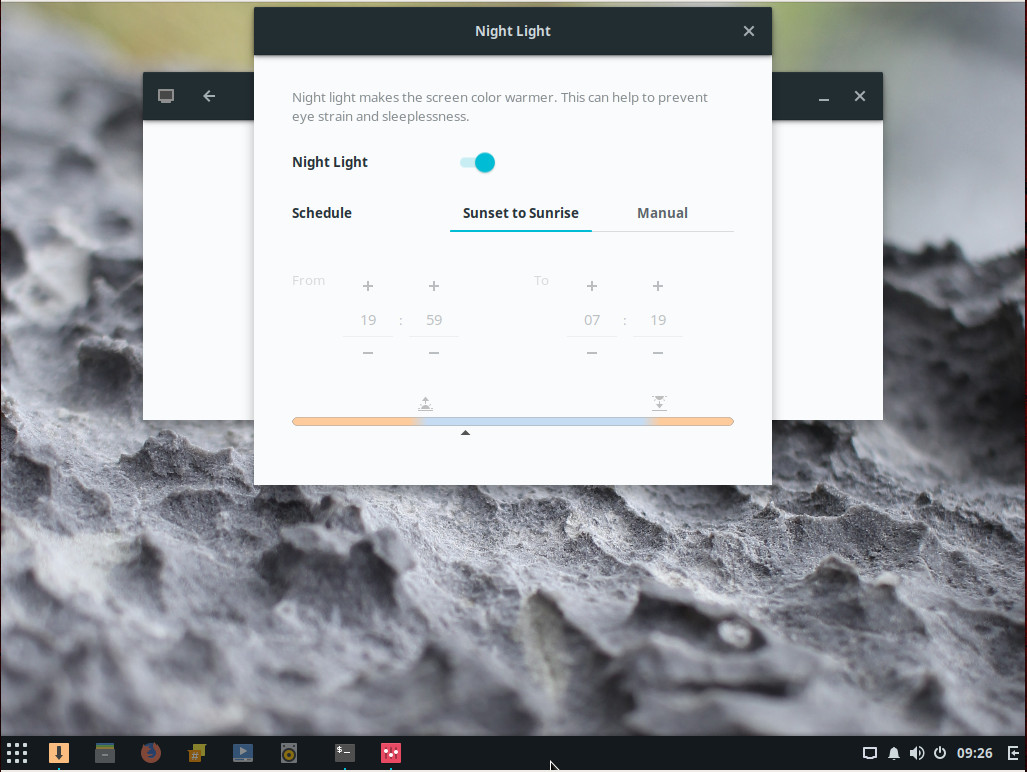

There are also a few small additions that go a long way toward making Solus a special platform. Take, for instance, the Night Light feature, a tool that reduces eye strain by cutting down the blue light the display emits. From within the Night Light tool, you can even set a schedule to enable/disable the feature (Figure 3).

The only issue I can find with the included packages is the missing integration between Samba and GNOME Files. Normally, it is possible to right-click a folder within the file manager and enable sharing of that folder via Samba. Although Samba is pre-installed, there is no easy way to enable Samba sharing within the default file manager. Those who really need to share directories with Samba will have to do it the old-school way … via the terminal.

Solus 3 does make it fairly easy to connect to other shares on your network (by clicking Other Locations in Files and then browsing your local network).

How easy is it to use?

By now, you’ve probably drawn the conclusion that Solus 3 is a new-user dream come true. That conclusion would be spot on. The developers have done an amazing job of ensuring nothing could possibly trip up a new user. And by “nothing,” I do mean nothing. Solus 3 does exactly what a Linux distribution should do — it gets out of the way, so the user can focus on work or social/entertainment distraction. From installation of the operating system, to installation of software, to daily use … the Solus developers have done everything right. I cannot imagine a single user type stumbling over this take on Linux. Period. This is one Linux distribution with barely a single bump in the learning curve.

How well does Solus 3 work?

Considering how “young” Solus is, it is remarkably stable. During my testing phase, I only encountered one issue with the platform—installing the third-party Spotify client (NOTE: Other third-party software installed fine, so this is, most likely, a Spotify issue). Even with that hiccup, a second attempt at installing the Spotify client succeeded. That should tell you how issue-free Solus is. Outside of that (and the Samba issue), I am happy to report that Solus 3 “just works” and does so with grace and ease. To be honest, Solus 3 feels like a much more mature platform than a “3” release should.

Give Solus 3 a try

If you’re looking for a new Linux distribution that will make the transition from any other platform a no-brainer, you cannot go wrong with Solus 3. This hybrid-release distribution will make anyone feel right at home on the desktop, look great doing so, and ease away any headache you might have experienced with Linux.

Kudos to the Solus developers for releasing a gem of a distribution.
