For today’s system administrators, gaining competencies that move them up the technology stack and broaden their skillsets is increasingly important. However, core skills like networking remain just as crucial. Previously in this series, we’ve provided an overview of essentials and looked at evolving network skills. In this part, we focus on another core skill: security.

With ever more impactful security threats emerging, demand is growing for sysadmins who are fluent in network security tools and practices. That means understanding everything from the Open Systems Interconnection (OSI) model to the devices and protocols that facilitate communication across a network.
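As a quick refresher, the seven OSI layers can be written down as a small lookup table. The sketch below is illustrative only: the names `OSILayer`, `PROTOCOL_LAYERS`, and `layer_of` are made up for this example, and the protocol-to-layer assignments follow the conventional textbook mapping, which real network stacks do not always match cleanly.

```python
from enum import IntEnum


class OSILayer(IntEnum):
    """The seven layers of the OSI reference model, numbered bottom-up."""
    PHYSICAL = 1
    DATA_LINK = 2
    NETWORK = 3
    TRANSPORT = 4
    SESSION = 5
    PRESENTATION = 6
    APPLICATION = 7


# Conventional (simplified) placement of a few familiar protocols.
# This table is an assumption made for illustration, not an exhaustive map.
PROTOCOL_LAYERS = {
    "Ethernet": OSILayer.DATA_LINK,
    "IP": OSILayer.NETWORK,
    "TCP": OSILayer.TRANSPORT,
    "UDP": OSILayer.TRANSPORT,
    "HTTP": OSILayer.APPLICATION,
}


def layer_of(protocol: str) -> OSILayer:
    """Look up the layer a protocol is usually associated with."""
    return PROTOCOL_LAYERS[protocol]
```

Knowing which layer a tool operates at helps when reading its output: a packet analyzer shows traffic across several layers at once, while a firewall rule typically matches on network- and transport-layer fields.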

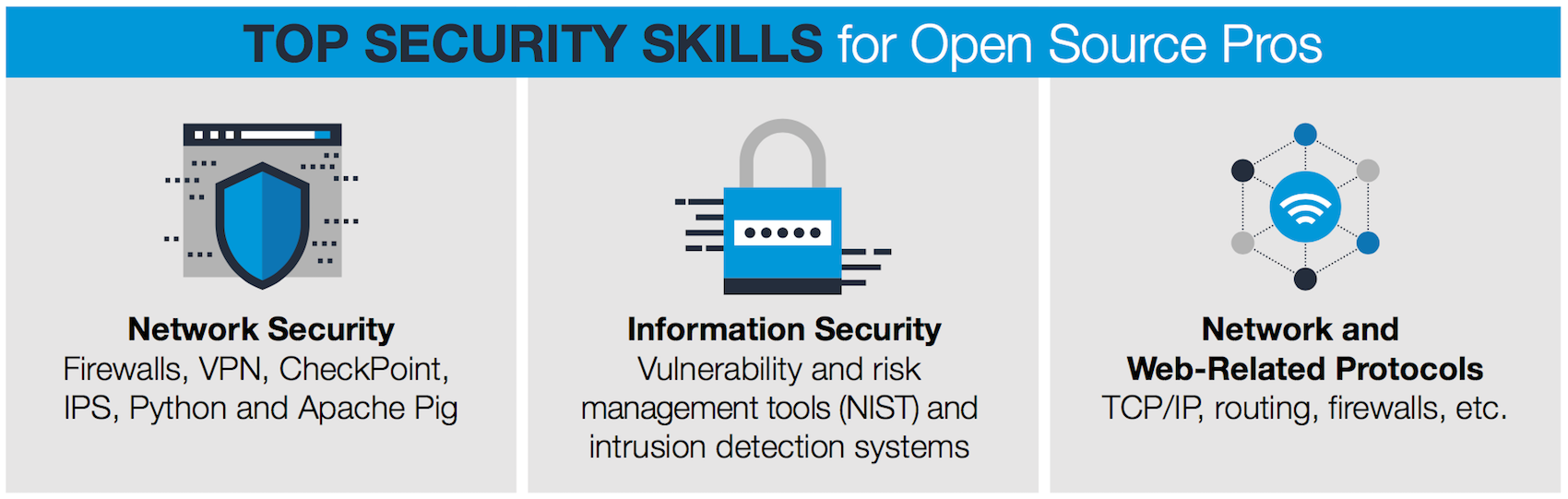

Locking down systems also means understanding the infrastructure of a network, which may or may not be Linux-based. In fact, many of today’s sysadmins serve heterogeneous technology environments where multiple operating systems are running. Securing a network requires competency with routers, firewalls, VPNs, end-user systems, server security, and virtual machines.

Securing systems and networks calls for varying skillsets depending on platform infrastructure, as is clear if you spend just a few minutes perusing, say, a Fedora security guide or the Securing Debian Manual. However, there are good resources that sysadmins can leverage to learn fundamental security skills.

For example, The Linux Foundation has published a Linux workstation security checklist that covers a lot of good ground. It’s aimed at sysadmins and discusses tools that can thwart attacks, including Secure Boot and Trusted Platform Module (TPM). For Linux sysadmins, the checklist is comprehensive.

The widespread use of cloud platforms such as OpenStack is also introducing new requirements for sysadmins. According to The Linux Foundation’s Guide to the Open Cloud: “Security is still a top concern among companies considering moving workloads to the public cloud, according to Gartner, despite a strong track record of security and increased transparency from cloud providers. Rather, security is still an issue largely due to companies’ inexperience and improper use of cloud services.” A sysadmin with deep cloud skills can therefore be a valuable asset.

Most operating systems and widely used Linux distributions feature timely and trusted security updates, and part of a good sysadmin’s job is to keep up with these. Many organizations and administrators shun spin-off and “community rebuilt” platform infrastructure tools because they don’t have the same level of trusted updating.

Network challenges

Networks, of course, present their own security challenges. The smallest holes in implementation of routers, firewalls, VPNs, and virtual machines can leave room for big security problems. Most organizations are strategic about combating malware, viruses, denial-of-service attacks, and other types of hacks, and good sysadmins should study the tools deployed.

Freely available security and monitoring tools can also go a long way toward avoiding problems. Here are a few good tools for sysadmins to know about:

- Wireshark, a packet analyzer for sysadmins
- KeePass Password Safe, a free open source password manager
- Malwarebytes, a free anti-malware and antivirus tool
- Nmap, a powerful security scanner
- Nikto, an open source web server scanner
- Ansible, a tool for automating secure IT provisioning
- Metasploit, a tool for understanding attack vectors and doing penetration testing

For a lot of these tools, sysadmins can pick up skills by leveraging free online tutorials. For example, there is a whole tutorial series for Metasploit, and there are video tutorials for Wireshark.
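To get a feel for what a security scanner automates, here is a minimal sketch of the simplest kind of check: attempting a TCP connection to see whether a port accepts it. It uses only Python's standard `socket` module and is not how any of the tools above are implemented. The function names `is_port_open` and `scan_ports` are hypothetical, and dedicated scanners do far more (service detection, timing control, other scan types).

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an error code otherwise,
        # so a refused or timed-out connection does not raise here.
        # (An unresolvable hostname can still raise socket.gaierror.)
        return sock.connect_ex((host, port)) == 0


def scan_ports(host: str, ports, timeout: float = 1.0) -> list:
    """Return the subset of ports on host that accepted a TCP connection."""
    return [port for port in ports if is_port_open(host, port, timeout)]
```

For example, `scan_ports("127.0.0.1", [22, 80, 443])` returns whichever of those ports are listening on the local machine. Only probe hosts you are authorized to test.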

Also on the topic of free resources, we’ve previously covered a free ebook from the editors at The New Stack called Networking, Security & Storage with Docker & Containers. It covers the latest approaches to secure container networking, as well as native efforts by Docker to create efficient and secure networking practices. The ebook is loaded with best practices for locking down security at scale.

Training and certification, of course, can make a huge difference for sysadmins, as we discussed in “7 Steps to Start Your Linux Sysadmin Career.”

For Linux-focused sysadmins, The Linux Foundation’s Linux Security Fundamentals (LFS216) is a great online course for gaining well-rounded skills. The class starts with an overview of security and covers how security affects everyone in the chain of development, implementation, and administration, as well as end users. The self-paced course covers a wide range of Linux distributions, so you can apply the concepts across distributions. The Foundation offers other training and certification options, several of which cover security topics. For example, LFS201 Essentials of Linux System Administration includes security training.

Also note that CompTIA Linux+ incorporates security into training options, as does the Linux Professional Institute. Technology vendors offer some good choices as well; for example, Red Hat offers sysadmin training options that incorporate security fundamentals. Meanwhile, Mirantis offers three-day “bootcamp” training options that can help sysadmins keep an OpenStack deployment secure and optimized.

In the 2016 Linux Foundation/Dice Open Source Jobs Report, 48 percent of respondents reported that they are actively looking for sysadmins. Job postings abound on online recruitment sites, and online forums remain a good way for sysadmins to learn from each other and discover job prospects. So the market remains healthy, but the key for sysadmins is to gain differentiated types of skillsets. Mastering hardened security is surely a differentiator, and so is moving up the technology stack — which we will cover in upcoming articles.

Learn more about essential sysadmin skills: Download the Future Proof Your SysAdmin Career ebook now.
