This article was sponsored by Microsoft and written by Linux.com.

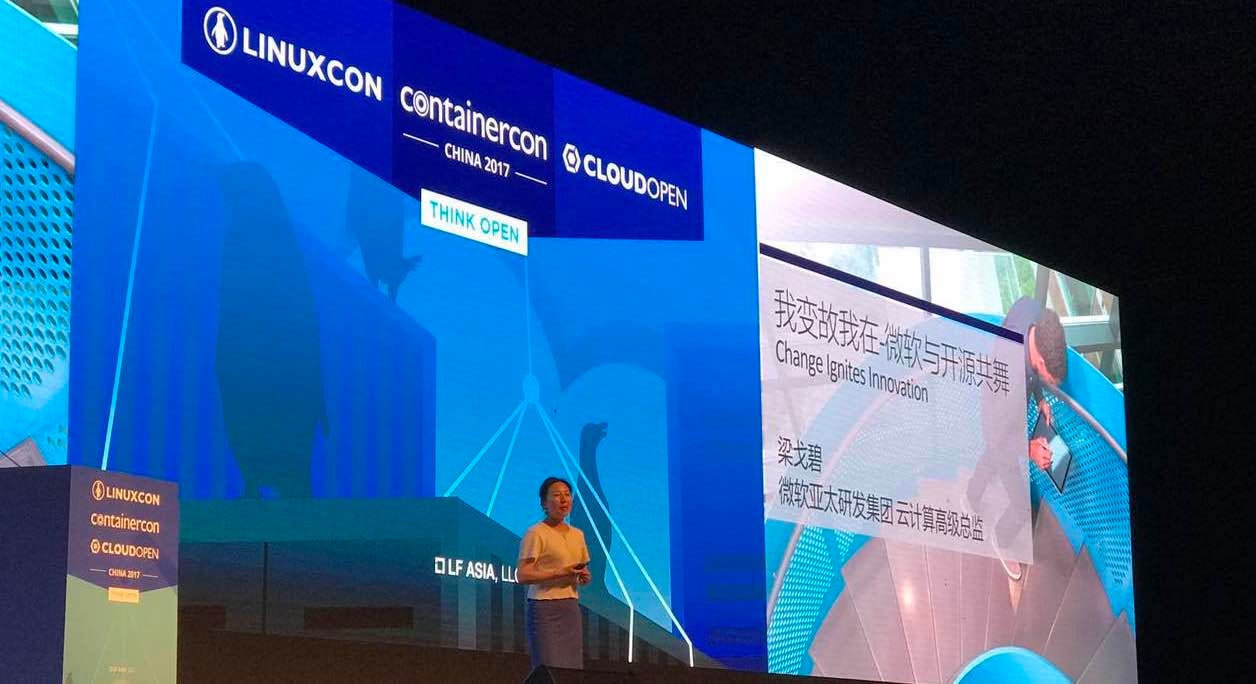

After much anticipation, LinuxCon, ContainerCon and CloudOpen China is finally here. Some of the world’s top technologists and open source leaders are gathering at the China National Convention Center in Beijing to discover and discuss Linux, containers, cloud technologies, networking, microservices, and more. Attendees will also exchange insights and tips on how to navigate and lead in the open source community, and what better way to do that than to meet in person at the conference?

To preview how some leading companies are using open source and participating in the open source community, Linux.com interviewed several companies attending LinuxCon China. Here, Microsoft discusses how and why it adopted open source, how that strategy helps its customers and the open source community, and how it helps Microsoft innovate and change the way it does business.

Linux.com: What is Microsoft’s open source strategy today?

Gebi Liang: Our company mission is to enable companies to do more. An important step is enabling organizations to work with the tools and platforms they know, love and have already invested in. Thus, our strategy centers on providing an open and flexible platform that works the way you want and need it to. The platform integrates with leading ecosystems to deliver consistent offerings. Microsoft has also gone further, releasing technology to support a strong ecosystem through its portfolio of investments and contributing technology to the open source community.

Shaping and deploying this strategy has been a multi-year journey, and each step along the way was significant. We invested in open source contributions across the company and joined key foundations to deepen our partnerships with the community. We also made Linux and OSS run smoothly on Azure, and now one in three VMs on Azure runs Linux. Microsoft teams forged key open source partnerships to bring more choice in solutions to Azure, with partners such as Canonical, Red Hat, Pivotal, Docker, Chef and many more. We are also bringing many of our technologies into the open or making them available on Linux.

Linux.com: What are some of Microsoft’s contributions in open source and as a platform?

Gebi Liang: We are making great progress in enabling and integrating open source, as well as in contributing to and releasing open source software.

First, while integrating open source solutions into our platforms, we collaborate with the community and contribute the code back. Projects we have contributed to include, but are not limited to: Linux and FreeBSD on Hyper-V, Hadoop, Windows containers, Mesos and Kubernetes, Cloud Foundry and OpenShift, various cloud deployment and management tools such as Chef and Puppet, and HashiCorp tools. Of course, there are many other projects too.

While developing our own VS Code, the powerful and lightweight IDE, we also made many contributions to the Electron codebase. As Microsoft has become a member of many prominent open source foundations, such as the Linux Foundation, we will be even more involved in these communities and will continue to contribute.

Microsoft has also been releasing more and more of our platforms, services and products to the open source community. The best-known ones include .NET, PowerShell, TypeScript, Xamarin, CNTK for machine learning, all the Azure SDKs and CLIs, and VS Code.

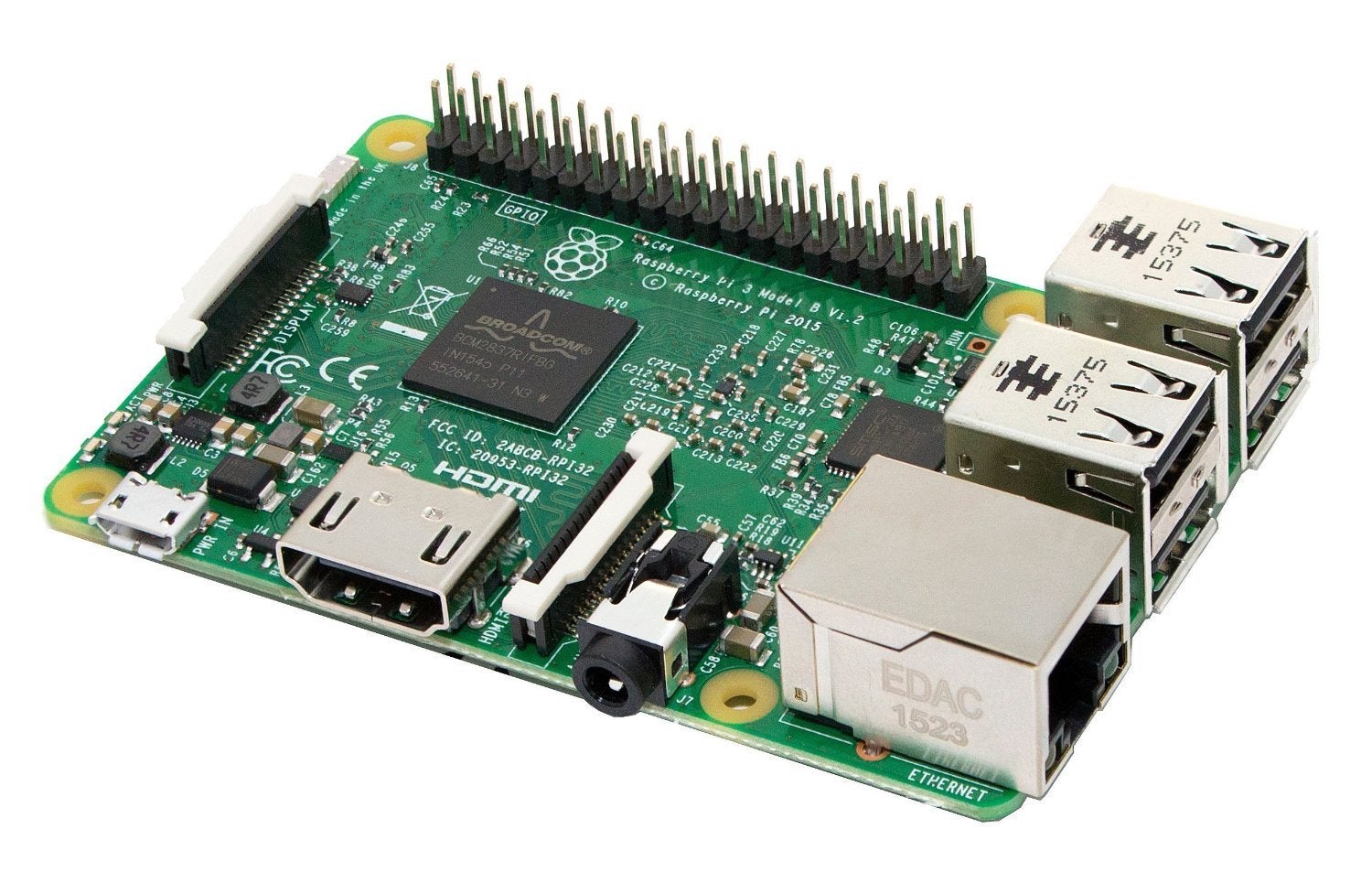

After the acquisition of Deis, we continue to invest in the set of popular Kubernetes (K8s) tools they developed, and we recently released Draft, a tool for creating apps for K8s, on GitHub. Even for products that are not fully open sourced, many components, especially newly developed ones, have become open source, such as many of the IoT tools and adapters and the OMS agent for Linux. You can find the full list at https://opensource.microsoft.com/.

Even in the hardware space, we’re contributing our data center design to the Open Compute Project.

Linux.com: How exactly does Microsoft empower companies that are using or looking to use open source?

Gebi Liang: We fully recognize that customers want more choices, including the use of open source, so we have been enabling the popular open source stacks on our platform with unprecedented speed. I am very proud to share a list of such projects, covering just about every aspect of what customers need. For OS images, we have enabled all the major Linux distros, plus FreeBSD and, as the latest addition, OpenBSD. For dev tools, developers who are used to a Mac environment can now use Visual Studio on Mac, VS Code on Linux or Mac, or Eclipse and IntelliJ. And for databases and big data, a Linux developer can use SQL Server on Linux as well as the fully managed MySQL and PostgreSQL services on Azure.

For management and monitoring, one can use not only OMS and PowerShell, but also Chef, Puppet, Ansible, Terraform, Zabbix and others. And for the popular microservices approach, we support a diverse set of microservice platforms on Azure, such as Docker Swarm, Mesos DC/OS and Kubernetes (K8s), in addition to Microsoft’s own microservice platform, Service Fabric, which supports both Windows and Linux. As a result, more than 30% of IaaS VMs on Azure run Linux today, and in China that number has reached 60%!

Linux.com: How is open source important to innovation at Microsoft?

Gebi Liang: Open source allows us to build on what the community has contributed, which helps us get to market much faster. Also, when we contribute and release software back to the community, we can draw on the community for better feedback and build better applications inspired by new and creative ideas. This helps us innovate faster and develop best practices beyond what any single company could achieve. That’s the power of the crowd’s wisdom.

Linux.com: It’s interesting to hear how Microsoft’s embrace of open source helps its customers, but also how open source helps Microsoft innovate internally. What else is Microsoft doing to build or empower an open source culture?

Gebi Liang: We are committed to building a sustainable open source culture at Microsoft. A cultural shift requires deep internal alignment with rewards and compensation. Microsoft has refined its performance review system to better support a culture of sharing and contributing. At every performance review, all employees are asked to describe how they are empowering others and how they are building on the work of others. Open source is an officially recognized and documented core aspect of the developer skill set. And we can see that the internal culture change is paying off: over 16,000 employees are on GitHub, and some of them are even making critical contributions to projects like Docker and Hadoop.

I hope to see everyone at LinuxCon China. I’m happy to share more information about Microsoft and open source and perhaps collaborate on new projects too. See you there!