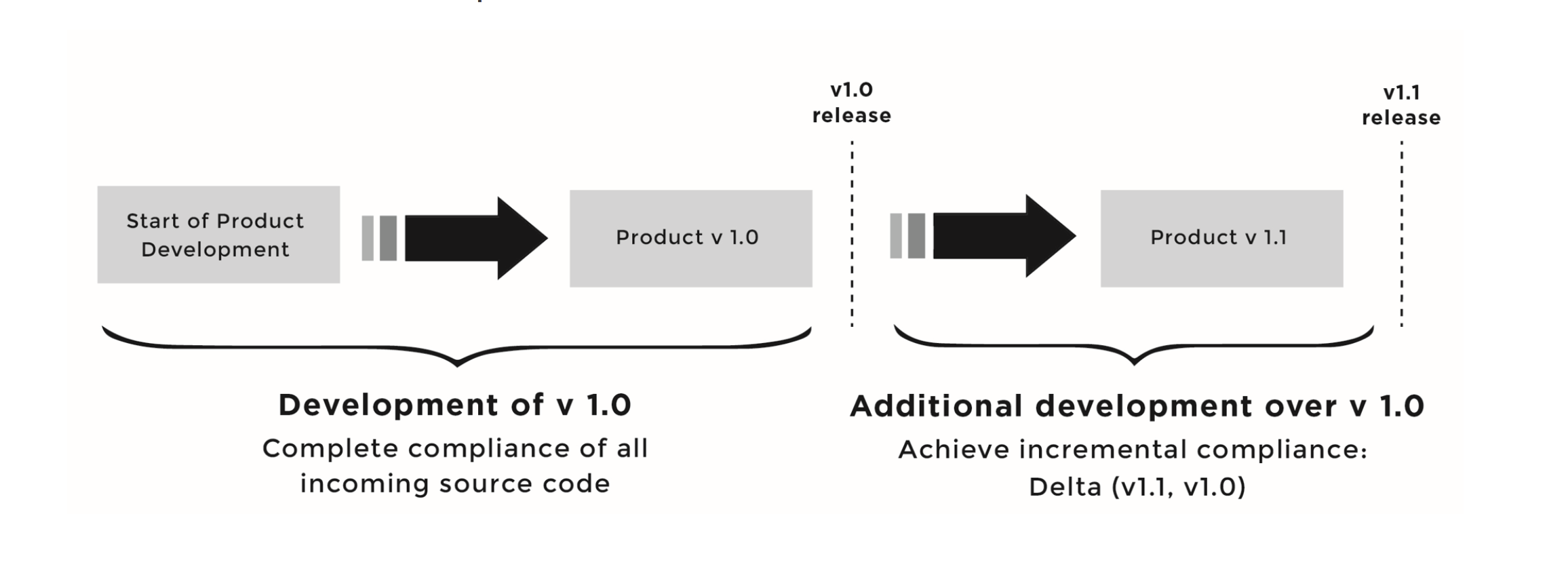

The previous article in this series covered how to establish a baseline for open source software compliance by finding exactly which open source software is already in use and under which licenses it is available. But how do you make sure that future revisions of the same product (or other products built using the initial baseline) stay compliant once the baseline is established?

This is where incremental compliance comes in: you verify the compliance of every source code change made between the initial compliant baseline and the current version.
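As a rough illustration of incremental compliance, the sketch below compares two component inventories and reports only what changed since the baseline, which is the part that needs a fresh review. The `Component` record, the `compliance_delta` function, and the sample components are hypothetical and used only for illustration; a real inventory would come from whatever scanning or bill-of-materials tooling your organization uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """One open source component in a product inventory (hypothetical schema)."""
    name: str
    version: str
    license: str


def compliance_delta(baseline, current):
    """Return the names of components added, changed, or removed since the baseline."""
    base = {c.name: c for c in baseline}
    curr = {c.name: c for c in current}
    return {
        # Present now but absent from the compliant baseline: needs a full review.
        "added": sorted(n for n in curr if n not in base),
        # Present in both, but version or license differs: needs a re-check.
        "changed": sorted(n for n in curr if n in base and curr[n] != base[n]),
        # No longer shipped: notices and source offers may need updating.
        "removed": sorted(n for n in base if n not in curr),
    }


# Illustrative data only.
baseline = [
    Component("zlib", "1.2.11", "Zlib"),
    Component("busybox", "1.30.1", "GPL-2.0-only"),
]
current = [
    Component("zlib", "1.2.13", "Zlib"),
    Component("openssl", "3.0.8", "Apache-2.0"),
]

delta = compliance_delta(baseline, current)
print(delta)
```

Components that are unchanged since the baseline drop out of the result, so the review effort scales with the size of the change rather than the size of the product.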

Maintaining open source license compliance throughout code changes is a continuous effort. It depends on the discipline and commitment to build compliance activities into existing engineering and business processes, and it involves maintaining both the open source code and the open source culture of an organization.

Below are some recommendations, based on The Linux Foundation’s e-book Open Source Compliance in the Enterprise, on how to maintain compliance as your organization’s code and company evolve.

Maintaining Code Compliance

First, companies can maintain open source code compliance through processes and improvements aimed at the development process:

- Adherence to the company’s compliance policy and process, in addition to any provided guidelines

- Continuous audits of all source code integrated in the code base, regardless of its origins

- Continuous improvements to the tools used in ensuring compliance and automating as much of the process as possible to ensure high efficiency in executing the compliance program
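One small piece of such automation could be a license policy check that runs on every build. The following is a minimal sketch, not a complete audit tool: the `APPROVED` and `NEEDS_REVIEW` sets, the `audit` function, and the component names are all hypothetical, and the actual policy must come from your own legal and compliance teams.

```python
# Hypothetical policy sets; a real policy is defined by the compliance program.
APPROVED = {"MIT", "BSD-3-Clause", "Apache-2.0"}
NEEDS_REVIEW = {"GPL-2.0-only", "LGPL-2.1-or-later"}


def audit(components):
    """Return (name, license, status) for every component that is not pre-approved.

    `components` is an iterable of (name, license_id) pairs, regardless of
    where the code came from (upstream project, vendor, or internal team).
    """
    findings = []
    for name, license_id in components:
        if license_id in APPROVED:
            continue
        # Known licenses that require a human decision are marked "review";
        # anything the policy does not recognize is marked "unknown".
        status = "review" if license_id in NEEDS_REVIEW else "unknown"
        findings.append((name, license_id, status))
    return findings


# Illustrative inventory only.
findings = audit([
    ("libfoo", "MIT"),
    ("libbar", "GPL-2.0-only"),
    ("vendor-sdk", "Custom-EULA"),
])
for name, license_id, status in findings:
    print(f"{name}: {license_id} ({status})")
```

A check like this does not replace a source code audit; it only makes sure that anything outside the pre-approved set is routed to a person before it ships.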

Maintaining a Culture of Compliance

In addition to the code, companies need to take steps to maintain compliance activities as the organization itself grows and ships more products and services using open source software. They must institutionalize compliance within their development culture to ensure its sustainability. Below are a few ways that companies can maintain the culture of compliance, as well as code compliance.

Sponsorship

Executive-level commitment is essential to ensure sustainability of compliance activities. There must be a company executive who acts as ongoing compliance champion and who ensures corporate support for open source management functions.

Consistency

Consistency is key in large companies with multiple business units and subsidiaries. A consistent interdepartmental approach helps with recordkeeping and facilitates sharing code across groups.

Measurement and analysis

Measure and analyze the impact and effectiveness of compliance activities, processes, and procedures with the goal of studying performance and improving the compliance program. Metrics will help you communicate the productivity advantages that accrue from each program element when promoting the compliance program.

Refining compliance processes

The scope and nature of an organization’s use of open source is dynamic — dependent on products, technologies, mergers, acquisitions, offshore development activities, and many other factors. Therefore, it is necessary to continuously review compliance policies and processes and introduce improvements.

Furthermore, open source license interpretations and legal risks continue to evolve. In such a dynamic environment, a compliance program must evolve as well.

Enforcement

A compliance program is of no value unless it is enforced. An effective compliance program should include mechanisms for ongoing monitoring of adherence to the program and for enforcing policies, procedures, and guidelines throughout the organization. One way to enforce the compliance program is to integrate it into the software development process and to ensure that a measurable portion of each employee’s performance evaluation depends on their commitment to and execution of compliance program activities.

Staffing

Ensure that staff is allocated to the compliance function and that adequate compliance training is provided to every employee in the organization. In larger organizations, the compliance officer and related roles may grow into full-time positions; in smaller organizations, open source management is more likely to be a shared or part-time responsibility.
