The Linux Foundation’s Hadoop project, ODPi, and Enterprise Strategy Group (ESG) are teaming up on November 7 for a can’t-miss webinar for Chief Data Officers and their big data teams.

The webinar will cover:

- How ODPi pulls complexity out of Hadoop, freeing enterprises and their vendors to innovate in the application space
- How CDOs and app vendors can port apps easily across cloud, on-prem, and Hadoop distros. Nik Rouda reveals ESG’s latest research on where enterprises are deploying net-new Hadoop installs across on-premises, public, private, and hybrid cloud
- What big data industry leaders are focusing on in the coming months

Removing Complexity

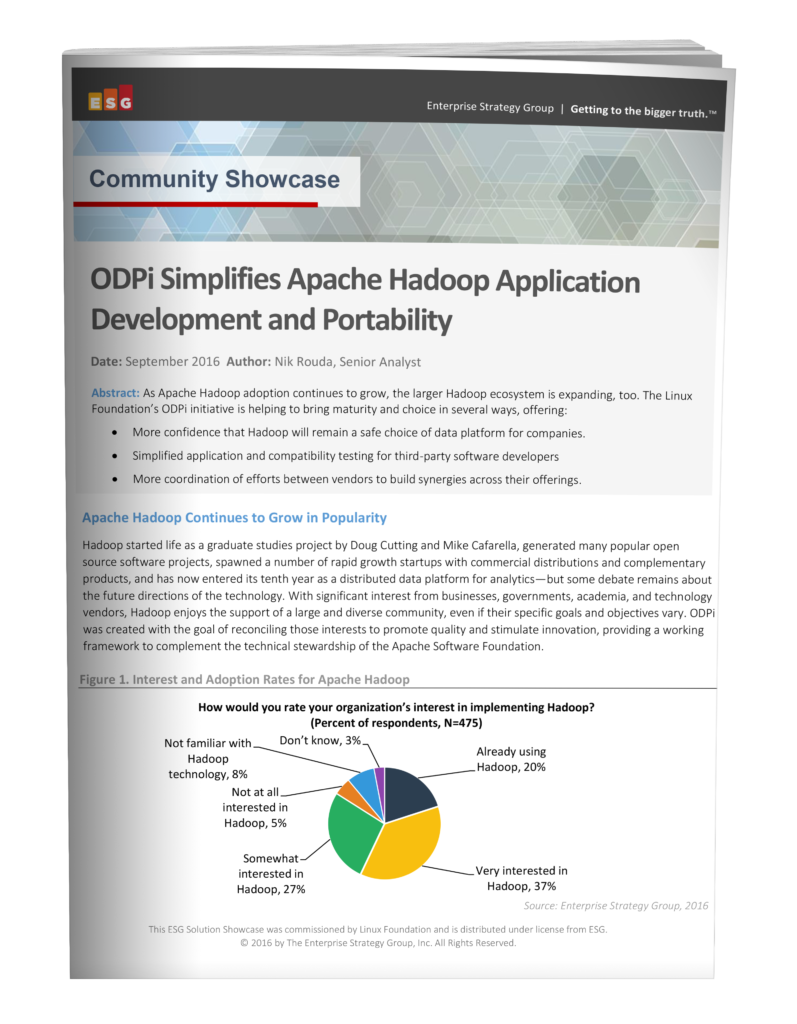

As ESG’s Nik Rouda observes, “Hadoop is not one thing, but rather a collection of critical and complementary components. At its core are MapReduce for distributed analytics jobs processing, YARN to manage cluster resources, and the HDFS file system. Beyond those elements, Hadoop has proven to be marvelously adaptable to different data management tasks. Unfortunately, too much variety in the core makes it harder for stakeholders (and in particular, their developers) to expand their Hadoop-enhancing capabilities.”

The ODPi Compliant certification program ensures greater simplicity and predictability for everyone downstream of Hadoop Core – SIs, app vendors, and end users.

Application Portability

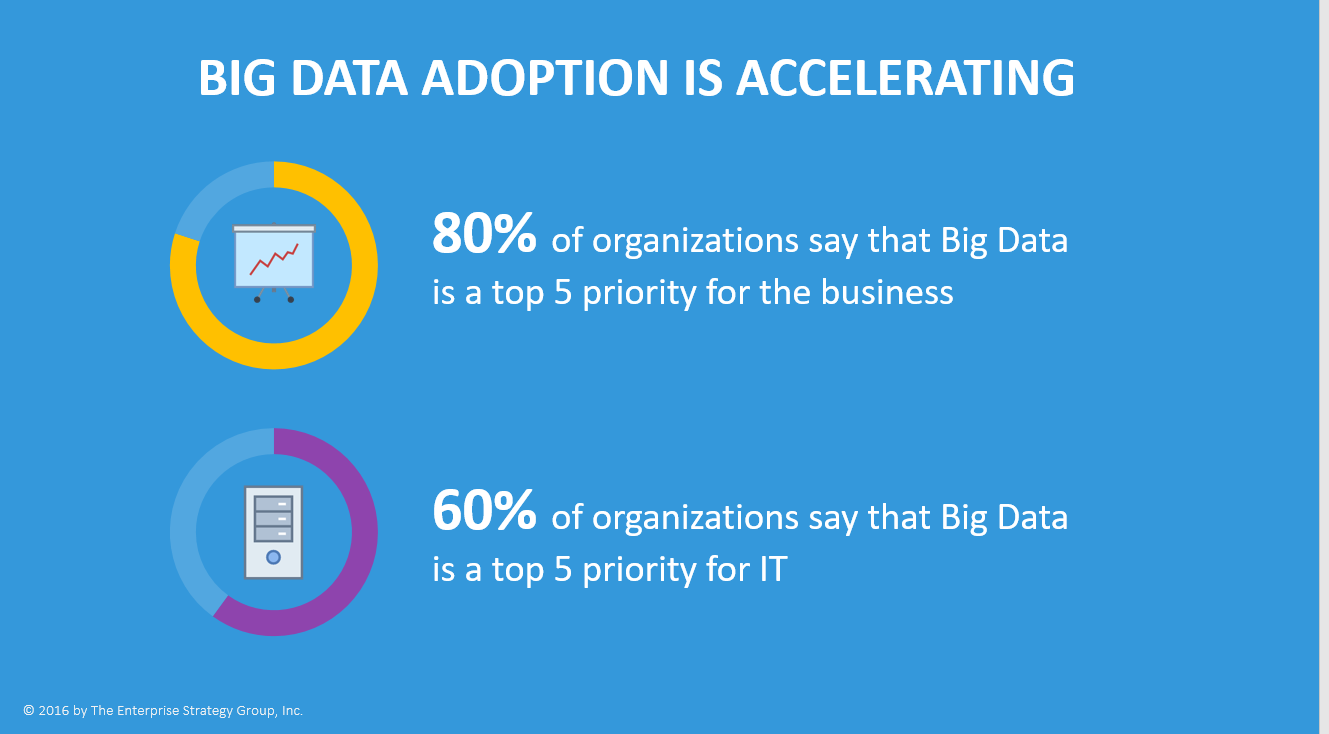

ESG reveals its latest findings on how enterprises are deploying Hadoop, and you may be surprised at the percentage moving to the cloud. Find out who’s deploying on-premises (dedicated and shared), who’s using pre-configured on-prem infrastructure, and what percentage are moving to private, public, and hybrid cloud.

Where Industry Leaders are Headed

ESG interviewed leaders like Capgemini, VMware, and more as part of this ODPi research – let their thinking light your way as you develop your Hadoop and big data strategy.

Reserve your spot for this informative webinar.

As a bonus, all registrants will receive a free copy of Nik’s latest Big Data report.
