Here at The Linux Foundation’s blog, we share content from our projects, such as this article from the Cloud Native Computing Foundation’s blog. The guest post was originally published on Contino Engineering’s blog by Dan Chernoff.

Supply chain attacks rose by 42% in the first quarter of 2021 [1] and are becoming even more prevalent [2]. In response to software supply chain breaches like SolarWinds [3], Kaseya [4], and other less publicized compromises [5], the Biden administration issued an executive order that includes guidance designed to improve the federal government’s defense against cyber threats. With all of this comes the inevitable slew of blog posts that detail a software supply chain and how you would protect it. The Cloud Native Computing Foundation recently released a white paper regarding software supply chain security [7], an excellent summary of the current best practices for securing your software supply chain.

The genesis for the content in this article is work done to implement secure supply chain patterns and practices for a Contino customer. The core goals for the effort were to implement a pipeline-agnostic solution that ensures the security of the pipelines and enables secure delivery for the enterprise. We’ll talk a little about why we chose the tools we did in each section and how they supported the end goal.

As we start our journey, we’ll first touch on what a secure software supply chain is and why you should have one to set the context for the rest of the blog post. But let’s assume that you have already decided that your software supply chains need to be secure, and you want to implement the capability for your enterprise. So let’s get into it!

Anteing Up

Before you embark upon the quest of establishing provenance for your software at scale, there are some table stakes elements that teams should already have in place. We won’t delve deeply into any of them here other than to list and briefly describe them.

Centralized Source Control. Git is by far the most popular choice. Centralized source control ensures a single source of truth for development teams. Beyond just having source control, teams should also implement the signing of their Git commits.

Static Code Analysis. This identifies possible vulnerabilities within ‘static’ (non-running) source code by using techniques such as Taint Analysis and Data Flow Analysis. Analysis and results need to be incorporated into the cadence of development.

Vulnerability Scanning. Implement automated tools that scan the applications and containers that are built to identify potential vulnerabilities in the compiled and sometimes running applications.

Linting. A linter is a tool that analyzes source code to flag programming errors, bugs, and stylistic errors. Linting is important to reduce errors and improve the overall code quality. This in turn accelerates development.

CI/CD Pipelines. New code changes are automatically built, tested, versioned, and delivered to an artifact repository. A pipeline then automatically deploys the updated applications into your environments (e.g. test, staging, production, etc.).

Artifact Repositories. Provide management of the artifacts built by your CI/CD systems. An artifact repository can help with the version and access control of your artifacts.

Infrastructure as Code (IaC) is the process of managing and provisioning infrastructure (e.g. virtual machines, databases, load balancers, etc.) through code. As with applications, IaC provides a single source of truth for what the infrastructure should look like. It also provides the ability to test before deploying to production.

Automated…well, everything. Human-in-the-loop systems are not deterministic. They are prone to error which can and will cause outages and security gaps. Manual systems also inhibit the ability of platforms to scale quickly.

What is a Secure Software Supply Chain

A software supply chain consists of anything that goes into the creation of your end software product and the mechanisms you use to deliver the product to customers. This includes things like your source code, your build systems, the 3rd party libraries, deployment infrastructure, or delivery repositories.

Attributes:

Establishes Provenance — One part of establishing provenance is ensuring that any artifact that is created and accessed by the customer should be able to trace its lineage all the way back to the developer(s) that merged the latest commit. The other part is the ability to demonstrate (or attest) that for each step in the process, the software, components, and other materials that go into creating the final product are tamper-free.

Trust — Downstream systems and users need a mechanism to verify that the software that is being installed or deployed came from your systems and that the version being used is the correct version. This ensures that malicious artifacts have not been substituted or that older, vulnerable versions have not been relabeled as the current version.

Transparent — It should be easy to see the results and details for all steps that go into the creation of the final artifact. This can include things like test results, output from vulnerability scans, etc.

Key Elements of a Secure Software Supply Chain

Let’s take a closer look at the things that need to be layered into your pipelines to establish provenance, enable transparency, and ensure tamper resistance.

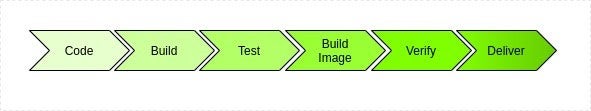

Here is what a typical pipeline might look like that creates a containerized application. We’ll use this simple pipeline and add elements as we discuss them.

Establishing Provenance Using in-toto

The first step in our journey is to establish that artifacts built via a pipeline have not been tampered with and to do so in a reliable and repeatable way. As we mentioned earlier, part of this is creating evidence to use as part of the verification. in-toto is an open-source tool that creates a snapshot of the workspace where the pipeline step is running.

These snapshots (“link files” in the in-toto terminology) verify the integrity of the pipeline. The core idea behind in-toto is the concept of materials and products and how they flow, just like in a factory. Each step in the process usually consumes some material to create its product. An example of the flow of materials and products is the build step. The build step uses code as the material, and the built artifact (jar, war, etc.) is the product. A later step in the pipeline will use the built artifact as the material and produce another product. In this way, in-toto allows you to chain the materials and products together and identify if a material has been tampered with during or between pipeline steps. For example, in-toto would detect that the artifact constructed during the build step changed before testing.
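To make the material and product chaining concrete, here is a minimal sketch in plain Python. It is not in-toto's API; the `snapshot` and `tampered_between` helpers and the `app.war` file are hypothetical names used only for illustration. It hashes a workspace after one step and again before the next, then reports any file whose digest changed in between.

```python
import hashlib
import tempfile
from pathlib import Path


def snapshot(workspace: Path) -> dict[str, str]:
    """Map each file's path (relative to the workspace) to its SHA-256 digest."""
    return {
        str(p.relative_to(workspace)): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in sorted(workspace.rglob("*"))
        if p.is_file()
    }


def tampered_between(products: dict[str, str], materials: dict[str, str]) -> list[str]:
    """Files whose digest differs between one step's products and the next step's materials."""
    return sorted(
        name for name, digest in products.items()
        if name in materials and materials[name] != digest
    )


with tempfile.TemporaryDirectory() as tmp:
    workspace = Path(tmp)
    (workspace / "app.war").write_bytes(b"built artifact")
    build_products = snapshot(workspace)      # recorded at the end of the build step

    test_materials = snapshot(workspace)      # recorded at the start of the test step
    untouched = tampered_between(build_products, test_materials)

    # Simulate someone swapping the artifact between the two steps.
    (workspace / "app.war").write_bytes(b"malicious artifact")
    test_materials = snapshot(workspace)
    changed = tampered_between(build_products, test_materials)

print(untouched)  # []
print(changed)    # ['app.war']
```

In the real tool the snapshots are also signed, so an attacker cannot simply rewrite the recorded digests along with the artifact.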

At the end of the pipeline, in-toto evaluates the link data (the attestation created at each step) against an in-toto layout (think Jenkinsfile for attestation) and verifies that all the steps were done correctly and by the approved people or systems. This verification can run anytime the product of the pipeline (container, war, etc.) needs to be verified.
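The verification described above can be sketched as follows. This is a simplified illustration under stated assumptions, not in-toto's implementation: the `Link` class, the layout shape, and the key IDs are all hypothetical, and signature checking is left out entirely. The sketch only checks that every step in the layout has a link, that the link was produced by an approved key, and that each step's materials match the previous step's products.

```python
from dataclasses import dataclass


@dataclass
class Link:
    step: str
    signer: str                 # ID of the key that signed this link (hypothetical)
    materials: dict[str, str]   # file name -> digest before the step ran
    products: dict[str, str]    # file name -> digest after the step ran


def verify(layout: list[dict], links: dict[str, Link]) -> list[str]:
    """Return a list of problems; an empty list means the chain verified."""
    problems = []
    previous = None
    for expected in layout:
        link = links.get(expected["name"])
        if link is None:
            problems.append(f"missing link for step {expected['name']}")
            previous = None
            continue
        if link.signer not in expected["authorized_keys"]:
            problems.append(f"step {link.step} signed by unauthorized key {link.signer}")
        if previous is not None:
            # Any product of the previous step that this step consumed must be unchanged.
            for name, digest in previous.products.items():
                if link.materials.get(name, digest) != digest:
                    problems.append(f"{name} changed between {previous.step} and {link.step}")
        previous = link
    return problems


layout = [
    {"name": "build", "authorized_keys": {"ci-build-key"}},
    {"name": "test", "authorized_keys": {"ci-test-key"}},
]
links = {
    "build": Link("build", "ci-build-key", {"Main.java": "aaa"}, {"app.war": "111"}),
    "test": Link("test", "ci-test-key", {"app.war": "111"}, {"app.war": "111"}),
}
ok = verify(layout, links)

# An unapproved key and a swapped artifact are both reported.
links["test"] = Link("test", "unknown-key", {"app.war": "222"}, {"app.war": "222"})
bad = verify(layout, links)
```

Returning a list of problems rather than a single pass/fail flag makes it easier to surface every failed check to whoever is investigating a rejected artifact.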

Critical takeaways for establishing provenance

in-toto runs at every step of the process. During verification, the attestations are compared against an overarching layout. This process enables consumers (users and/or deployment systems) to have confidence that the artifacts built were not altered from start to finish.

Establishing Trust using TUF

You can use in-toto verification to know that the artifact was delivered or downloaded without modification. To do that, you will need to download the artifact(s), the in-toto link files used during the build, the in-toto layout, and the public keys to verify it all. That is a lot of work. An easier way is to sign the artifacts produced with a system that enables centralized trust. The most mature framework for doing so is TUF (The Update Framework).

TUF is a framework that gives consumers of artifacts guarantees that the artifact downloaded or automatically installed came from your systems and is the correct version. The guts of how to accomplish this are outside the scope of this blog post. The functionality we are interested in is verifying that an artifact came from the producer we expected and that the version is the expected version.
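As a rough sketch of those two checks, and not of TUF's actual API or metadata format, the snippet below compares a downloaded artifact against trusted metadata. The `check_download` function and the `metadata_version`, `length`, and `sha256` field names are assumptions made for illustration, and the sketch assumes the metadata's own signatures have already been verified, which is the part TUF's roles and keys take care of.

```python
import hashlib


def check_download(data: bytes, target: dict, last_trusted_version: int) -> None:
    """Raise ValueError unless the download matches the trusted target metadata."""
    if target["metadata_version"] < last_trusted_version:
        # An older metadata version could point at an old, vulnerable artifact.
        raise ValueError("rollback: metadata is older than a version already trusted")
    if len(data) != target["length"]:
        raise ValueError("length mismatch")
    if hashlib.sha256(data).hexdigest() != target["sha256"]:
        raise ValueError("hash mismatch: not the artifact the producer published")


artifact = b"release 4 contents"
trusted = {
    "metadata_version": 4,
    "length": len(artifact),
    "sha256": hashlib.sha256(artifact).hexdigest(),
}
check_download(artifact, trusted, last_trusted_version=3)  # passes silently
```

A substituted artifact fails the length or hash check, and metadata older than what the client has already seen fails the rollback check.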

Implementing TUF on your own is a fair bit of work. Fortunately, an “out of the box” implementation of TUF is available for use: Docker Content Trust (a.k.a. Notary). Notary enables the signing of regular files as well as containers. In our example pipeline, we sign the container image during build time. This signing allows any downstream system or user to verify the authenticity of the container.

Transparency Through Centralized Data Storage

One gap in in-toto as a solution is that it has no mechanism to persist the link data it creates. It is up to the team implementing in-toto to capture and store the link data somewhere. All the valuable metadata for each step can be captured and stored outside of the build system. The goal is twofold. The first is to store the link data outside the pipeline so that teams can retrieve it and use it anytime verification needs to run on the artifacts produced from the pipeline. The second is to store the metadata around the build process outside the pipeline. That enables teams to implement visualizations, monitoring, metrics, and rules on the data produced from the pipeline without necessarily needing to keep it in the pipeline.

The Contino team created metadata capture tooling that is independent and agnostic of the pipeline. We chose to write a simple Python tool that captures the build metadata and in-toto link data and stores it in a database. If the CI/CD platform is reasonably standard, you can likely use built-in mechanisms to achieve the same results. For example, the Jenkins Logstash plugin can capture the output of a build step and persist data to an Elasticsearch datastore.
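The Contino tool itself is not shown in this post, so the following is only a guess at the general shape such a capture tool could take. It uses SQLite from the Python standard library as a stand-in database, and the table layout, function names, and sample values are all hypothetical.

```python
import json
import sqlite3


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (and if needed create) the store that lives outside the pipeline."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS links (
               pipeline TEXT NOT NULL,
               build_id TEXT NOT NULL,
               step     TEXT NOT NULL,
               link     TEXT NOT NULL,
               PRIMARY KEY (pipeline, build_id, step)
           )"""
    )
    return conn


def save_link(conn: sqlite3.Connection, pipeline: str, build_id: str, step: str, link: dict) -> None:
    """Persist one step's link data as JSON."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO links VALUES (?, ?, ?, ?)",
            (pipeline, build_id, step, json.dumps(link)),
        )


def load_links(conn: sqlite3.Connection, pipeline: str, build_id: str) -> dict[str, dict]:
    """Fetch every stored link for one build, keyed by step name."""
    rows = conn.execute(
        "SELECT step, link FROM links WHERE pipeline = ? AND build_id = ?",
        (pipeline, build_id),
    )
    return {step: json.loads(link) for step, link in rows}


store = open_store()
save_link(store, "orders-service", "build-42", "build", {"products": {"app.war": "111"}})
save_link(store, "orders-service", "build-42", "test", {"materials": {"app.war": "111"}})
stored = load_links(store, "orders-service", "build-42")
```

Keying rows by pipeline, build, and step means the links for any past build can be pulled back out later and handed to verification, independent of which CI/CD system produced them.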

PGP and Signing Keys

Keys used to sign and verify link data and artifacts/containers are a core component of both in-toto and Notary. in-toto uses PGP private keys to sign the link data produced at each step internally. That signing ensures a relationship between the person or system that did the action and the link data. It also ensures that it can be easily detected if the link data gets altered or tampered with in any way.
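The tamper-detection property can be illustrated with a few lines of standard-library Python. Note the substitution: this sketch uses an HMAC with a shared secret as a stand-in for PGP signatures, which are asymmetric (sign with a private key, verify with a public key). The `sign` and `is_untampered` helpers and the key value are hypothetical; only the principle carries over, namely that any change to signed link data invalidates the signature.

```python
import hashlib
import hmac
import json


def sign(link: dict, key: bytes) -> str:
    """Sign a canonical (sorted-key) JSON encoding of the link data."""
    payload = json.dumps(link, sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def is_untampered(link: dict, signature: str, key: bytes) -> bool:
    """True only if the link data is exactly what was signed with this key."""
    return hmac.compare_digest(sign(link, key), signature)


key = b"demo-only-secret"  # in practice, fetched from a secret store
link = {"step": "build", "products": {"app.war": "111"}}
signature = sign(link, key)

intact = is_untampered(link, signature, key)

# Altering the recorded digest after signing is detected.
link["products"]["app.war"] = "222"
altered = is_untampered(link, signature, key)

print(intact, altered)  # True False
```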

Notary uses public and private keys generated using the Docker or Notary CLI. The public keys get stored in the notary database. The private keys sign the containers or other artifacts.

Scaling Up

For a small set of pipelines, manually implementing and managing secure software supply chain practices is straightforward. Management of an enterprise that has hundreds if not thousands of pipelines requires some additional automation.

Automate in-toto layout creation. As mentioned earlier, in-toto has a file akin to a Jenkinsfile that dictates which people or systems can complete a pipeline step, the material and product flow, and how to inspect/verify the final artifact(s). Embedded in this layout are the IDs for the PGP keys of the people or systems who can perform steps. Additionally, the layout is internally signed to ensure that any tampering can be detected once the layout gets created. To manage this at scale, the layouts need to be automatically created/re-created on demand. We approach this as a pipeline that automatically runs on changes to the code that creates layouts. The output of the pipeline is layouts, which are treated as artifacts themselves.

Treat in-toto layouts like artifacts. in-toto layouts are artifacts, just like containers, jars, etc. Layouts should be versioned, and the layout version linked to the version of the artifact. This versioning enables artifacts to be re-verified later with the layout, link files, and relevant keys that were in effect at artifact creation time.

Automate the creation of the signing keys. Signing keys that are used by autonomous systems should be rotated frequently and through automation. Doing this limits the likelihood of compromise of the signing keys used by in-toto and Notary. For in-toto, this frequent rotation will require the automatic re-creation of the in-toto layouts. For Notary, cycling the signing keys will require revocation of the old key when we implement the new key.

Store and use signing keys from a secret store. When generating signing keys for use by automated systems, storing the keys in secret management systems like HashiCorp’s Vault is an important practice. The automated system (e.g., Jenkins, GitLab CI, etc.) can retrieve the signing keys when needed. Centrally storing the signing keys combats “secrets sprawl” in an enterprise and enables easier management.

Pipelines should be roughly similar. A single in-toto layout can be used by many pipelines, as long as they operate in the same way. For example, pipelines that build a Java application and create a WAR as the artifact probably operate in roughly the same way. These pipelines can all use the same layout if they are similar enough.
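Two of the practices above, generating layouts automatically and sharing one layout across similar pipelines, can be sketched together. The generator below is hypothetical and deliberately minimal. Its field names and rule syntax are loosely modeled on the in-toto layout format and should be treated as assumptions to check against the in-toto specification. It leaves out keys, expiry, inspections, and the signing of the layout itself.

```python
import json


def build_layout(steps: list[dict]) -> dict:
    """Build a simplified layout in which each step must consume the previous step's products."""
    layout_steps = []
    previous = None
    for step in steps:
        if previous is None:
            materials = [["ALLOW", "*"]]
        else:
            materials = [
                ["MATCH", "*", "WITH", "PRODUCTS", "FROM", previous],
                ["DISALLOW", "*"],
            ]
        layout_steps.append({
            "name": step["name"],
            "pubkeys": sorted(step["key_ids"]),   # IDs of the keys allowed to perform the step
            "expected_materials": materials,
            "expected_products": [["ALLOW", "*"]],
        })
        previous = step["name"]
    return {"_type": "layout", "steps": layout_steps}


# One step definition shared by every pipeline that builds a Java WAR the same way.
java_war_steps = [
    {"name": "build", "key_ids": {"ci-build-key"}},
    {"name": "test", "key_ids": {"ci-test-key"}},
]
layout = build_layout(java_war_steps)
layout_json = json.dumps(layout, indent=2, sort_keys=True)
```

Because the layout is derived from a step definition kept in source control, rotating a key becomes a matter of changing the key IDs and re-running the generator, and the resulting JSON can be versioned and published like any other artifact.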

Wrapping it All Up

Using the technologies, patterns, and practices described here, the Contino team was able to deliver an MVP-grade solution for the enterprise. The design will be able to scale up to thousands of application pipelines and help ensure software supply chain security for the enterprise.

At its core, a secure software supply chain encompasses anything that goes into building and delivering an application to the end customer. It is built on the foundations of secure software development practices (e.g. following the OWASP Top 10, SAST, etc.). Any implementation of secure supply chain best practices needs to establish provenance about all aspects of the build process, provide transparency for all steps, and create mechanisms that ensure trustworthy delivery.

Sources:

[4] https://www.zdnet.com/article/updated-kaseya-ransomware-attack-faq-what-we-know-now/

The post Secure software supply chains: good practices, at scale appeared first on Linux Foundation.