Using Global Transaction Identifiers for data replication makes rollouts, debugging, and configuration much easier for admins.

Read More at Enable Sysadmin

RHACS monitors runtime data on containers to help you uncover potential vulnerabilities during product testing.

Read More at Enable Sysadmin

Some great insight into how a kernel sta

Click to Read More at Oracle Linux Kernel Development

How to get started with scripting in Python

Image: WOCinTech Chat, CC BY 2.0

Learn to use functions, classes, loops, and more in your Python scripts to simplify common sysadmin tasks.

Posted: March 30, 2022 | by Peter Gervase (Red Hat, Sudoer), Bart Zhang (Red Hat)

Topics: Programming, Python

Read the full article on redhat.com

Read More at Enable Sysadmin

Why it makes sense to write Kubernetes webhooks in Golang

When to choose Golang versus Python and YAML for writing Kubernetes webhooks.

lseelye

Mon, 3/21/2022 at 10:16pm

Image: Photo by Rachel Claire from Pexels

Towards the end of 2019, OpenShift Dedicated site reliability engineers (SREs) on the SRE-Platform (SREP) team had a problem ahead of a new feature release: Kubernetes role-based access control (RBAC) wasn’t working. Or, rather, it wasn’t working for us.

Topics: Kubernetes, Programming, OpenShift

Read More at Enable Sysadmin

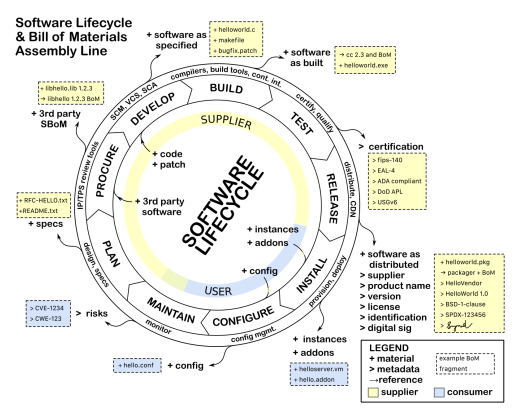

A software bill of materials (SBOM) is a way of summarizing key facts about the software on a system. At the heart of it, it describes the set of software components and the dependency relationships between these components that are connected together to make up a system.

Modern software consists of modular components that get reused in different configurations. Components can consist of open source libraries, source code, or other external, third-party developed software. This reuse lets innovation of new functionality flourish, especially as a large percentage of the components connected together to form a system may be open source. Each of these components may have different limitations, support infrastructure, and quality levels. Some components may be obsolete versions with known defects or vulnerabilities. When software runs a critical safety system, such as life support, traffic control, fire suppression, or chemical application, full transparency about what software is part of the system is an essential first step toward effective analysis for safety claims.

When a system incorporates functionality that could have serious consequences in terms of a person’s well-being or significant loss, the details matter. The level of transparency and traceability may need to be at different levels of detail based on the seriousness of the consequences.

Source: NTIA’s Survey of Existing SBOM Formats and Standards

Safety standards, and the claims necessarily made against them, come in a variety of different forms. The safety standards themselves mostly vary according to the industry that they target: automotive uses ISO 26262, aviation uses DO-178C for software and DO-254 for hardware, industrial uses IEC 61508 or ISO 13849, agriculture uses ISO 25119, and so on. From a software perspective, all of these standards work from the same premise that the full details of all software are known: the software should be developed according to a software quality perspective, with additional measures added for safety. In some instances these additional safety measures come in the form of a software FMEA (Failure Modes and Effects Analysis), but in all of them, there are specific code coverage metrics to demonstrate that as much of the code as possible has been tested and that the code complies with the requirements.

Another item that all safety standards have in common is the expectation that the system configuration is going to be managed as part of any product release. Configuration management (CM) is an inherent expectation in software already, but with safety this becomes even more crucial because of the need to track exactly what the configuration of a system (and its software) is if there is a subsequent incident in the field while the system is being used. From a software perspective, this means we need several things:

The goal, then, is to be able to rebuild exactly what the executable or binary was at the time of release.

From the above, it is clear how the SBOM fits into the need for CM. The safety standards’ CM requirements, from a source code and configuration standpoint, are greatly simplified by following an effective SBOM process. An SBOM supports capturing the details of what is in a specific release and supports determining what went wrong if a failure occurs.

Because software often relies upon reusable software components written by someone other than the author of the main system or application, the safety standards also have specific expectations and a given set of criteria for software that you end up including in your final product. This can be something as simple as a run-time library of functions, or something as extensive as a middleware that manages communication between components. While the safety standards do not always require that the included software be developed in accordance with a safety standard, there are still expectations that you can prove that the software was developed at least in compliance with a quality management framework, such that you can demonstrate that the software fulfills its requirements. This is still predicated on the condition that you know all of the details about the software component and that it fulfills its intended purpose.

The included software components can be from:

Regardless of the source or current usage of the software, the SBOM should describe all of the included software in the release.

To this end, the safety standards expect that the following is available for each software component included in your project:

At a minimum, the SBOM describes the software component, supplier and version number, with an enumeration of the included dependent components. This is what is being called for in the minimum viable definition of an SBOM to support cyber security[1] or safety critical software[2].
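The minimum record described above can be sketched as a small data structure. This is only an illustration in Python: the `Component` class, its field names, the `enumerate_dependencies` helper, and the example entries are all hypothetical and do not follow the SPDX schema or the NTIA document's field names.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """One SBOM entry holding the minimum facts (hypothetical field names)."""
    name: str
    supplier: str
    version: str
    # Names of the components this one directly depends on.
    depends_on: tuple[str, ...] = ()


def enumerate_dependencies(sbom: dict[str, Component], root: str) -> list[str]:
    """Return every component reachable from `root`, sorted by name.

    Assumes every dependency named in `depends_on` has its own entry in `sbom`.
    """
    seen: set[str] = set()
    stack = [root]
    while stack:
        name = stack.pop()
        for dep in sbom[name].depends_on:
            if dep not in seen:
                seen.add(dep)
                stack.append(dep)
    return sorted(seen)


# Made-up example data, for illustration only.
EXAMPLE_SBOM = {
    "controller-app": Component("controller-app", "Example Corp", "2.1.0", ("libcomm", "rtlib")),
    "libcomm": Component("libcomm", "Example Vendor", "1.4.2", ("rtlib",)),
    "rtlib": Component("rtlib", "Example Vendor", "0.9.7"),
}
```

Calling `enumerate_dependencies(EXAMPLE_SBOM, "controller-app")` lists both direct and transitive dependencies, which is the enumeration the minimum definition asks for.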

Having a minimum level of information, while better than nothing, is not sufficient for the level of analysis that safety claims expect. Knowing exactly which source files were included in the build is a better starting point. Better still is knowing the configuration options that were used to create the image, so that it can be reproduced. Being able to confirm through some form of integrity check (like a hash) that the built components haven’t changed is going to be key to having a sound foundation for the safety case. SBOMs need to scale from the minimum to the level of detail necessary to satisfy the safety analysis.
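As a rough sketch of the kind of integrity check mentioned here, the following Python compares the SHA-256 hash of each built component against a hash recorded at release time. The function names and the shape of the `recorded` mapping (relative path to hex digest) are assumptions made for this example, not part of any SBOM standard or tool.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 65536) -> str:
    """Hash a file in chunks so large binaries need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_changed_components(recorded: dict, root: Path) -> list:
    """Return the relative paths that are missing or whose hash differs.

    `recorded` maps a path relative to `root` to the hex SHA-256 digest
    captured at release time (a hypothetical layout for this sketch).
    """
    changed = []
    for relative_path, expected in sorted(recorded.items()):
        candidate = root / relative_path
        if not candidate.is_file() or sha256_of_file(candidate) != expected.lower():
            changed.append(relative_path)
    return changed
```

An empty result means every recorded component is present and unchanged. Any path in the result points to a component that no longer matches what was released.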

While SBOM tooling may not be able to populate all of this information today, the tools are continuing to evolve so that the facts necessary to support safety analysis can be made available. An international open SBOM standard, like SPDX[3], can become the baseline for modern configuration management and effective documentation of safety critical systems.

[1] The Minimum Elements For a Software Bill of Materials (SBOM) from NTIA

[2] ISO 26262:2018, Part 8, Clause 12 – Qualification of Software Components

[3] ISO/IEC 5962:2021 – Information technology — SPDX® Specification V2.2.1

Peter Brink, Functional Safety Engineering Leader, kVA by UL, Underwriters Laboratories (UL)

Kate Stewart, VP Dependable Embedded Systems, The Linux Foundation

Find out what’s stopping you from accessing a server, printer, or another network resource with these four Linux troubleshooting commands.

Read More at Enable Sysadmin

Install, configure, and test a very basic web server deployment in just eight steps.

Read More at Enable Sysadmin

Arresting climate change is no longer an option but a must to save the planet for future generations. The key to doing so is to transition off fossil fuels to renewable energy sources and to do so without tanking economies and our very way of life.

The energy industry sits at the epicenter of change because energy makes everything else run. And inside the energy industry is the need for a rapid transition to electrification and a rapid transformation of our vast power grids. Like it or not, utilities face existential decisions on transforming themselves while delivering ever more power to more people without making energy unaffordable or unavailable.

The challenges are daunting:

How to move away from fossil fuels without crashing the global economy that is fueled by energy?

Is it possible to speed up the modernization of the electric grid without spending trillions of dollars?

Can this be done while ensuring that power is safe, reliable, and affordable for all?

These are all significant problems to solve and represent 75% of the problem in combating climate change through decarbonization. In the Linux Foundation’s latest case study, Paving the Way to Battle Climate Change: How Two Utilities Embraced Open Source to Speed Modernization of the Electric Grid, LF Energy explores the opportunities for digital transformation within electric utility providers and the role of open source technologies in accelerating the transition.

The growth of renewable energy sources is making the challenge of modernizing the grid more complicated. In the past, energy flowed from coal and gas generating plants onto the big Transmission System Operator (TSO) lines and then to the smaller Distribution System Operator (DSO) lines to be transformed into a lower voltage suitable for homes and businesses.

But now, with solar panels and wind turbines increasingly feeding electricity back into the grid, the flow of power is two-way.

This seismic shift requires a new way of thinking about generating, distributing, and consuming energy. And it’s one that open source can help us navigate.

Today, energy travels in all directions, from homes and businesses, and from wind and solar farms, through the DSOs to the TSOs, and back again. This fundamental change in how power is generated and consumed has resulted in a much more complicated system that utilities must administer. They’ll require new tools to guarantee grid stability and manage the greater interaction between TSOs and DSOs as renewables grow.

Open source software allows utilities to keep up with the times while lowering expenses. The communities developing LF Energy’s various software projects provide those tools. They are helping utilities to speed up the modernization of the grid while reducing costs, and giving them the ability to collaborate on shared challenges rather than operate in silos.

Two European utility providers, the Netherlands’ Alliander and France’s RTE, are leading the change by upgrading their systems (markets, controls, infrastructure, and analytics) with open source technology.

RTE (a TSO) and Alliander (a DSO) joined forces initially (as members of the Linux Foundation’s LF Energy projects) because they faced the same problem: accommodating more renewable energy sources in infrastructures not originally designed for them and doing it at the speed and scale required. And while they are not connected due to geography, the problems they are tackling apply to all TSOs and DSOs worldwide.

The way that Alliander and RTE collaborated via LF Energy on a project known as Short Term Forecasting, or OpenSTEF, illustrates the benefits of open source collaboration to tackle common problems.

“Short-term forecasting, for us, is the core of our existence,” according to Alliander’s Director of System Operations, Arjan Stam. “We need to know what will be happening on the grid. That’s the only way to manage the power flows” and to configure the grid to meet customer needs. The same is true for RTE and “every grid operator across the world,” says Lucian Balea, RTE’s Director of Open Source.

Alliander has five people devoted to OpenSTEF, and RTE has two.

Balea says that without joining forces, OpenSTEF would develop far less quickly, and RTE may not have been able to work on such a solution in the near term.

Since their original collaboration on OpenSTEF, they have collaborated on two additional LF Energy projects, CoMPAS and SEAPATH.

CoMPAS (Configuration Modules for Power industry Automation Systems) addresses a core need to develop open source software components for profile management and configuration of a power industry protection, automation, and control system. CoMPAS is critical for the digital transformation of the power industry and its ability to move quickly to new technologies. It will enable a wide variety of utilities and technology providers to work together on developing innovative new solutions.

SEAPATH (Software Enabled Automation Platform and Artifacts (THerein)) aims to develop a reference design for an open source platform, built using a virtualized architecture, to automate the management and protection of electricity substations. The project is led by Alliander, with RTE and other consortium members contributing.

As we move to a decarbonized future, open source will play an increasingly important role in helping utilities meet their goals. It’s already helping them speed up the grid’s modernization, reduce costs, and collaborate on shared challenges. And it’s only going to become more essential as we move toward a cleaner, more sustainable energy system.

Read Paving the Way to Battle Climate Change: How Two Utilities Embraced Open Source to Speed Modernization of the Electric Grid to see how it works and how you and your organization may leverage open source. Together, we can develop solutions.

The post LF Energy: Solving the Problems of the Modern Electric Grid Through Shared Investment appeared first on Linux Foundation.

Last year’s Jobs Report generated interesting insights into the nature of the open source jobs market – and informed priorities for developers and hiring managers alike. The big takeaway was that hiring open source talent is a priority, and that cloud computing skills are among the top requested by hiring managers, beating out Linux for the first time ever in the report’s 9-year history at the Linux Foundation. Here are a few highlights:

Now in its 10th year, the jobs survey and report will uncover current market data in a post-COVID (or what could soon feel like it) world.

This year, in addition to determining which skills job seekers should develop to improve their overall employability prospects, we also seek to understand the nature and impact of the “Great Resignation.” Did such a staffing exodus occur in the IT industry in 2021, and do we expect to feel additional effects of it in 2022? And what can employers do to retain their employees under such conditions? Can we hire to meet our staffing needs, or do we have to increase the skill sets of our existing team members?

The jobs market has changed, and in open source it feels hotter than ever! We’re seeing the formation of new OSPOs and the acceleration of open source projects and standards across the globe. In this environment, we’re especially excited to uncover what the data will tell us this year, to confirm or dispel our hypothesis that open source talent is much in demand, and that certain skills are more sought after than others. But which ones? And what is it going to take to keep skilled people on the job?

Only YOU can help us to answer these questions. By taking the survey (and sharing it so that others can take it, too!) you’ll contribute to a valuable dataset to better understand the current state of the open source jobs market in 2022. The survey will only take a few minutes to complete, with your privacy and confidentiality protected.

Thank you for participating!

The project will be led by Clyde Seepersad, SVP & General Manager of Linux Foundation Training & Certification, and Hilary Carter, VP Research at the Linux Foundation.

The post Looking to Hire or be Hired? Participate in the 10th Annual Open Source Jobs Report and Tell Us What Matters Most appeared first on Linux Foundation.