It wasn’t that long ago that the idea of managing petabytes of data and monitoring giant, busy computing clusters running thousands of services was something for the future, not the now. As it turned out, that was a mighty short future, and it’s all happening now. These talks from MesosCon North America show how two different companies are solving configuration and data management issues with Apache Mesos and other tools.

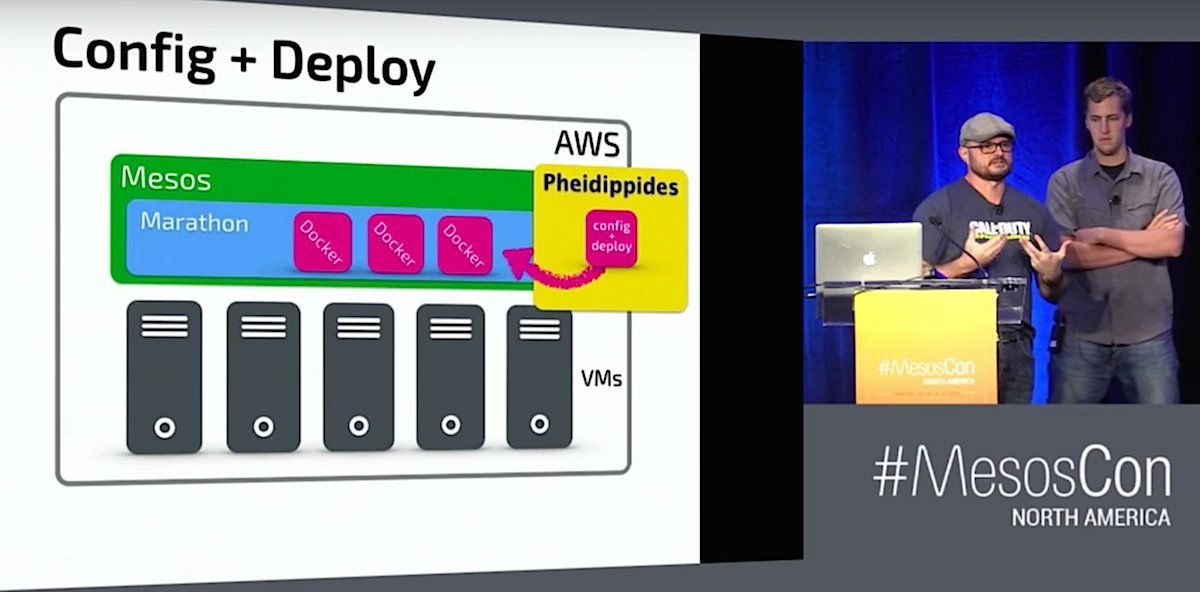

Activision Publishing, a computer games publisher, uses a Mesos-based platform to manage the vast quantities of data it collects from players and uses to automate much of the gameplay behavior. To address a critical configuration management problem, James Humphrey and John Dennison built a rather elegant solution that puts all configurations in a single place and named it Pheidippides.

Drew Gassaway explains how Bloomberg Vault used Mesos to build a scalable system to aggregate and manage petabytes of data and provide custom analytics — without asking the customer to change anything.

All Marathons Need a Runner — Introducing Pheidippides

James Humphrey and John Dennison, Analytics Services, Activision Publishing

Activision Publishing makes computer games, lots and lots of them. Activision collects giant quantities of data from players and, according to James Humphrey, uses it to “make millions of small decisions…stuff like anti-cheating, match-making, economy simulations, balancing of items in the games.” Analytics Services relies on Docker, Mesos, Marathon, and Jenkins to support the services that process all of this data.

Configuration management became a critical problem. The Docker, Mesos, Marathon, and Jenkins infrastructure makes it very easy to deploy large numbers of containers, services, and Jenkins jobs, all merrily proliferating. The good news is that this system is so flexible you can do virtually whatever you want. The bad news is that you have many ways to poke yourself in the eye. Humphrey and Dennison’s answer to the configuration management problem is Pheidippides, or Pheidip for short. (Pheidippides was supposedly the first marathon runner.)

Dennison explains their approach: make the framework the configuration manager and treat configurations as infrastructure, rather than loading up each container with its own configuration. “The choice is either put it inside the container and make it a smart container, or keep it out and make it dumb. Every time, we have repeatedly learned that you’ve got to keep your containers dumb. Because you want to switch out the logging system, and now you have a whole bunch of logging logic, plugins running all over your cluster, and they’re written by different people in different teams…You can always put pieces of your infrastructure in your containers, but don’t.”

This puts all configurations in a single place, rather than scattered throughout hundreds of containers. “The ability to declare something once and only once across many services is very key for us.”
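The talk does not show Pheidippides’ code, but the “declare once” idea can be sketched. The Python below is a minimal, hypothetical illustration: shared settings are declared in one place and injected as environment variables into Marathon-style app definitions (using Marathon’s `id`, `instances`, `container`, and `env` fields), so the containers themselves stay dumb. The `LOG_ENDPOINT` and `METRICS_ENDPOINT` keys, the service names, and the image names are all invented for this example.

```python
"""Hypothetical sketch: declare shared configuration once and inject it into
many dumb containers at deploy time. This is NOT Pheidippides' actual code."""
import json

# Declared once and only once: settings every service shares.
# (Hypothetical keys and values, for illustration only.)
SHARED_CONFIG = {
    "LOG_ENDPOINT": "logs.internal:5044",
    "METRICS_ENDPOINT": "metrics.internal:8086",
}

# Per-service settings: only what is unique to each service.
# (Hypothetical service names and images.)
SERVICES = {
    "matchmaking": {"image": "example/matchmaking:1.4", "instances": 6,
                    "env": {"POOL": "ranked"}},
    "anti-cheat": {"image": "example/anti-cheat:2.0", "instances": 3,
                   "env": {}},
}


def render_app(name, spec, shared):
    """Build a Marathon-style app definition; service env overrides shared env."""
    env = dict(shared)        # copy, so the shared declaration is never mutated
    env.update(spec["env"])   # service-specific values win on conflict
    return {
        "id": "/" + name,
        "instances": spec["instances"],
        "container": {"type": "DOCKER", "docker": {"image": spec["image"]}},
        "env": env,
    }


def render_all(services, shared):
    """Render every service, in a stable (sorted) order."""
    return [render_app(n, s, shared) for n, s in sorted(services.items())]


if __name__ == "__main__":
    for app in render_all(SERVICES, SHARED_CONFIG):
        print(json.dumps(app, indent=2))
```

Under this design, swapping the logging system means changing `LOG_ENDPOINT` once and redeploying, rather than editing logging logic baked into every image.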

Watch the complete presentation below:

A Seamless Monitoring System for Apache Mesos Clusters

Drew Gassaway, Bloomberg LP

Bloomberg Vault provides managed data services, currently about four petabytes’ worth across all of its customers. Drew Gassaway’s team was tasked with adding data and file analytics, along with features such as trade reconstruction, plus financial and compliance products. They had to build a new platform for these products: “We were in a situation that’s similar to a lot of people. You have an existing infrastructure. You have a lot of static VMs. You are doing mostly static resource allocation. We wanted to get away from that. We had two main goals we wanted to achieve with this. We wanted to act as a base for some of these brand new analytics applications we wanted to build. We wanted to have a platform that we ended up putting the majority of our team at the Vault applications onto, so we would have a lot of room to grow. We want to start it pretty quickly, but keep it as scalable as possible.” In other words, the familiar Mesos goals: fast, cheap, and scalable.

Gassaway’s team had to figure out how to aggregate and manage a large and diverse assortment of logging data from Mesos tasks, syslogs, HAProxy, and other logs captured from a large number of nodes. They wanted support for dashboards and alerting. They didn’t want to modify existing applications or require their customers to change anything. They also had to be mindful of protecting potentially sensitive customer data. The platform also had to support growth: “We wanted to collect at the node level and push data to the center of our topology…we didn’t want to begin in a situation where we are struggling to keep up pulling from an expanding cluster from the center.”

A primary goal was keeping as much as possible inside Mesos. “We have a 3-pronged approach where we have a Mesos task on every node. One is collecting logs, one is collecting metric data, and another one is for anything else we might want to actively scrape…we also added some throttling and quality of service behavior, so we can control if certain apps were generating an excessive or overwhelming amount of logs.”
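Gassaway doesn’t describe how the throttling works internally. One common way to get per-app quality of service in a node-level log collector is a token bucket per app; the Python sketch below assumes that technique, and the class names, rates, and burst sizes are hypothetical rather than Bloomberg’s.

```python
"""Hypothetical sketch of per-app log throttling in a node-level collector.
A token bucket per app caps the sustained log rate while allowing short bursts.
Assumed technique; not Bloomberg Vault's actual implementation."""
import time
from collections import defaultdict


class TokenBucket:
    def __init__(self, rate, capacity, now=None):
        self.rate = rate            # tokens (log lines) refilled per second
        self.capacity = capacity    # maximum burst size
        self.tokens = capacity      # start full
        self.last = time.monotonic() if now is None else now

    def allow(self, now=None):
        """Refill based on elapsed time, then spend one token if available."""
        now = time.monotonic() if now is None else now
        elapsed = now - self.last
        self.last = now
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class LogThrottler:
    """Forward log lines unless an app exceeds its budget; count what is dropped."""

    def __init__(self, rate=100.0, capacity=200.0):
        self.rate = rate
        self.capacity = capacity
        self.buckets = {}                # app_id -> TokenBucket
        self.dropped = defaultdict(int)  # app_id -> lines dropped so far

    def offer(self, app_id, line, now=None):
        """Return the line if it may be shipped onward, or None if throttled."""
        bucket = self.buckets.get(app_id)
        if bucket is None:
            bucket = TokenBucket(self.rate, self.capacity, now)
            self.buckets[app_id] = bucket
        if bucket.allow(now):
            return line  # would be pushed toward the center of the topology
        self.dropped[app_id] += 1
        return None
```

Each app gets its own bucket, so one noisy app exhausting its budget does not hold back lines from its neighbors on the same node, and the `dropped` counters give a simple signal for alerting on misbehaving apps.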

The platform draws on many tools, including Logstash, Elasticsearch, InfluxDB, and Kibana.

Watch the complete presentation below:

Mesos Large-Scale Solutions

Please enjoy the previous blogs in this series, and watch this spot for more blogs on ingenious and creative ways to hack Mesos for large-scale tasks.

- 4 Unique Ways Uber, Twitter, PayPal, and Hubspot Use Apache Mesos
- How Verizon Labs Built a 600 Node Bare Metal Mesos Cluster in Two Weeks
- Running Distributed Applications at Scale on Mesos from Twitter and CloudBees

Apache, Apache Mesos, and Mesos are either registered trademarks or trademarks of the Apache Software Foundation (ASF) in the United States and/or other countries. MesosCon is run in partnership with the ASF.