To create sustained high performance, organizations must invest as much in their people and processes as they do in their technology, according to Puppet’s 2016 State of DevOps Report.

The 50+ page report, written by Alanna Brown, Dr. Nicole Forsgren, Jez Humble, Nigel Kersten, and Gene Kim, aimed to better understand how the technical practices and cultural norms associated with DevOps affect IT and organizational performance as well as ROI.

According to the report, which surveyed more than 4,600 technical professionals from around the world, the percentage of respondents working in DevOps teams rose from 16 percent in 2014 to 22 percent in 2016.

Six key findings highlighted in the report showed that:

- High-performing organizations decisively outperform low-performing organizations in terms of throughput.
- They have better employee loyalty.
- High-performing organizations spend 50 percent less time on unplanned work and rework.
- They spend 50 percent less time remediating security issues.
- An experimental approach to product development can improve IT performance.
- Undertaking a technology transformation initiative can produce sizeable returns for any organization.

Specifically, high IT performers reported routinely doing multiple deployments per day and, compared with low performers, saw:

- 200 times more frequent code deployments
- 2,555 times faster lead times
- 24 times faster mean time to recover
- 60 times lower change failure rate
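To make these four measures concrete, here is a minimal Python sketch of how a team might compute them from its own deployment records. It is an illustration only, not the report's methodology (the report's figures come from survey responses). The `Deployment` record, its fields, and `delivery_metrics` are hypothetical names, and the sketch assumes recovery time runs from the failed deployment to restoration and that lead time is summarized by its median.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """One production deployment (hypothetical record format)."""
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed: bool                            # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def delivery_metrics(deploys: list[Deployment], days: int) -> dict:
    """Compute the four delivery metrics over an observation window of `days`."""
    if not deploys:
        raise ValueError("need at least one deployment")
    # Lead time: from commit to running in production.
    lead_times = [d.deployed_at - d.committed_at for d in deploys]
    failures = [d for d in deploys if d.failed]
    # Recovery time: assumed here to run from the failed deployment to restoration.
    recoveries = [
        d.restored_at - d.deployed_at for d in failures if d.restored_at is not None
    ]
    return {
        "deploys_per_day": len(deploys) / days,
        "median_lead_time": median(lead_times),
        "mean_time_to_recover": (
            sum(recoveries, timedelta()) / len(recoveries) if recoveries else None
        ),
        "change_failure_rate": len(failures) / len(deploys),
    }


# Example: four deployments over two days; one failed and took an hour to fix.
start = datetime(2016, 6, 1)
deploys = [
    Deployment(start, start + timedelta(hours=2), failed=False),
    Deployment(start, start + timedelta(hours=4), failed=True,
               restored_at=start + timedelta(hours=5)),
    Deployment(start, start + timedelta(hours=6), failed=False),
    Deployment(start, start + timedelta(hours=8), failed=False),
]
m = delivery_metrics(deploys, days=2)
# 2.0 deploys per day, 5-hour median lead time, 1-hour MTTR, 0.25 failure rate
print(m)
```

A ratio such as "200 times more frequent" compares one group's value of a metric with another group's. Running the same function over each group's records and dividing the results gives that kind of comparison.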

Shift Left

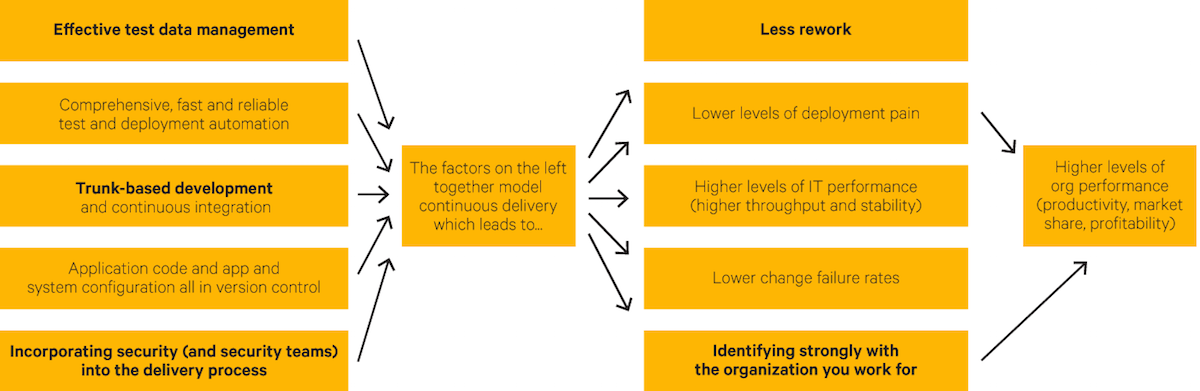

Lean and agile product management approaches, which are common in DevOps environments, emphasize product testing and building in quality from the beginning of the process. In this approach, also known as “shifting left,” developers deliver work in small batches throughout the product lifecycle.

“Think of the software delivery process as a manufacturing assembly line. The far left is the developer’s laptop where the code originates, and the far right is the production environment where this code eventually ends up. When you shift left, instead of testing quality at the end, there are multiple feedback loops along the way to ensure that high-quality software gets delivered to users more quickly,” the report states.

The same idea applies to security, which becomes an integral part of continuous delivery. “Continuous delivery improves security outcomes,” according to the report. “We found that high performers were spending 50 percent less time remediating security issues than low performing organizations.”
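The feedback loops described above can be pictured as a pipeline that runs cheap checks first and stops at the first failure. Under this model, a problem, including a security finding, goes back to the developer long before it reaches production. This is a minimal Python sketch under stated assumptions. The stage names, the `run_pipeline` function, and the dictionary of simulated check results are hypothetical stand-ins for real tools such as linters, test runners, and vulnerability scanners.

```python
from typing import Callable, Optional

# A check takes a change and reports whether it passes (hypothetical interface).
Check = Callable[[dict], bool]


def run_pipeline(change: dict, stages: list[tuple[str, Check]]) -> tuple[Optional[str], list[str]]:
    """Run stages left to right and stop at the first failure.

    Returns (name of the failed stage or None, stages that actually ran).
    """
    ran: list[str] = []
    for name, check in stages:
        ran.append(name)
        if not check(change):
            return name, ran  # fail fast: later, slower stages never run
    return None, ran


# Cheap checks first, slower ones later. The security scan runs inside the
# pipeline rather than as a separate review at the end.
STAGES: list[tuple[str, Check]] = [
    ("lint", lambda c: c["lint_clean"]),
    ("unit tests", lambda c: c["unit_tests_pass"]),
    ("security scan", lambda c: not c["known_vulnerabilities"]),
    ("integration tests", lambda c: c["integration_tests_pass"]),
]

# Simulated results for one change that introduces a known vulnerability.
change = {
    "lint_clean": True,
    "unit_tests_pass": True,
    "known_vulnerabilities": True,
    "integration_tests_pass": True,
}
failed, ran = run_pipeline(change, STAGES)
print(failed, ran)  # security scan ['lint', 'unit tests', 'security scan']
```

Putting fast checks first is a design choice. It shortens the feedback loop for the most common failures, at the cost of later checks not running on a change that fails early.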

For companies just getting started with DevOps, the move involves other changes as well.

“Adopting DevOps requires a lot of changes across the organization, so we recommend starting small, proving value, and using the trust you’ve gained to tackle bigger initiatives,” said Alanna Brown, senior product marketing manager at Puppet and co-author of the report, in an interview.

“We also think it’s important to get alignment across the organization by shifting the incentive structure so that everyone in the value chain has a single incentive: to produce the highest quality product or service for the customer,” Brown said. Employee engagement is key, as “companies with highly engaged workers grew revenues two and a half times as much as those with low engagement levels.”

In this year’s survey, according to Brown, most respondents reported beginning their DevOps journey with deployment automation, infrastructure automation, version control, or all three.

“We see these practices as the foundation of a solid DevOps practice because automation gives engineers cycles back to work on more strategic initiatives, while the use of version control gives you assurance that you can roll back quickly should a failure occur,” she said. “Without these two practices in place, you can’t implement continuous delivery, provide self-service provisioning, or adopt many of the new technologies and methodologies such as containers and microservices.”

Build a Foundation

Ultimately, however, to be successful, DevOps must overcome “political and cultural inertia,” Brown said. “It can’t be a top-down dictate, nor can it be a purely grassroots effort.”

The 2016 report offers some steps that can make a difference in your organization’s performance. Once you have your foundation in place, Brown said, “you’ll see all the opportunities that exist to automate manual processes… And, of course, there will be the bigger initiatives like moving workloads to a public cloud, building out a self-service private cloud, and spreading DevOps practices to other parts of the organization.”