Ahead of the official Mesa 12.0 debut later this month, Mesa release manager Emil Velikov of Collabora has announced 12.0 Release Candidate 2…

Read more at Phoronix

Hi, I’m grouchy and I work with operations and data and backend stuff. I spent 3.5 years helping Parse grow from a handful of apps to over a million. Literally building serverless before it was cool TYVM.

So when I see kids saying “the future is serverless!” and “#NoOps!” I’m like okay, that’s cute. I’ve lived the other side of this fairytale. I’ve seen what happens when application developers think they don’t have to care about the skills associated with operations engineering. When they forget that no matter how pretty the abstractions are, you’re still dealing with dusty old concepts like “persistent state” and “queries” and “unavailability” and so forth, or when they literally just think they can throw money at a service to make it go faster because that’s totally how services work.

I’m going to split this up into two posts. I’ll write up a recap of my talk in a sec, but first let’s get some things straight. Like words. Like operations.

WHAT IS OPERATIONS?

Let’s talk about what “operations” actually means, in the year 2016, assuming a reasonably high-functioning engineering environment.

The Xen Project’s code contributions have grown more than 10 percent each year. Although growth is extremely healthy for the project as a whole, it has its growing pains. For the Xen Project, it led to issues with its code review process: maintainers believed that their review workload had increased, and a number of vendors claimed that it took significantly longer than in the past for contributions to be upstreamed.

The project developed some basic scripts that correlated development list traffic with Git commits, which showed that patches were indeed taking longer to be committed. To find the root causes of the slowdown, the project initially ran a number of surveys. Unfortunately, many of the observations made by community members contradicted each other and were thus not actionable. To solve this problem, the Xen Project worked with Bitergia, a company that focuses on analyzing community software development processes, to better understand and address the issues at hand.

We recently sat down with Lars Kurth, who is the chairperson for the Xen Project, to discuss the overall growth of the Xen Project community as well as how the community was able to improve its code review process through software development analytics.

Like many FOSS projects, the Xen Project uses a mailing list-based code review process, so its experience could be a good blueprint for projects that find themselves in the same predicament.

Linux.com: Why has there been so much growth in the Xen Project community?

Lars Kurth: The Xen Project hypervisor powers some of the biggest cloud computing companies in the world, including Alibaba’s Aliyun Cloud Services, Amazon Web Services, IBM Softlayer, Tencent, Rackspace and Oracle (to name a few).

It is also increasingly being used in new market segments such as the automotive industry, embedded and mobile, and IoT. It is a platform of innovation that is consistently being updated to fit the new needs of computing, with commits coming from developers across the world. We’ve experienced incredible community growth of 100 percent in the last five years. A lot of this growth has come from new geographic locations: most of it is from China and Ukraine.

Linux.com: How did the project notice that there might be an issue and how did people respond to this?

Lars Kurth: In mid-2014, maintainers started to notice that their review workload had increased. At the same time, some contributors noticed that it took longer to get their changes upstreamed. We first developed some basic scripts to prove that the total elapsed time from first code review to commit had indeed increased. I then ran a number of surveys to form a working thesis on the root causes.

In terms of response, there were a lot of differing opinions on what exactly was causing the process to slow down. Some thought that we did not have enough maintainers, some thought we did not have enough committers, others felt that the maintainers were not coordinating reviews well enough, and still others felt that newcomers wrote lower-quality code or that there could be cultural and language issues.

Community members made a lot of assumptions based on their own worst experiences, without facts to support them. There were so many contradictions among the group that we couldn’t identify a clear root cause for what we saw.

Linux.com: What were some of your initial ideas on how to improve this and why did you eventually choose to work with Bitergia for open analytics of the review process?

Lars Kurth: We first took a step back and looked at some things we could do that made sense without a ton of data. For example, I developed a training course for new contributors. I then did a road tour (primarily to Asia) to build personal relationships with new contributors and to deliver the new training.

The year before, we had started experimenting with design and architecture reviews for complex features. We decided to encourage these more without being overly prescriptive, and we highlighted positive examples in the training material.

I also kicked off a number of surveys around our governance, to see whether we had scalability issues. Unfortunately, we didn’t have any data to support this, and as expected, different community members had different views. We did change our release cadence from 9-12 months to 6 months, to make it less painful for contributors if a feature missed a release.

It became increasingly clear that to make true progress, we would need reliable data. And to get that we needed to work with a software development analytics specialist. I had watched Bitergia for a while and made a proposal to the Xen Project Advisory Board to fund development of metrics collection tools for our code review process.

Linux.com: How did you collect the data (including what tools you used) to get what you needed from the mailing list and Git repositories?

Lars Kurth: We used existing tools such as MLStats and CVSAnalY to collect mailing list and Git data. The challenge was to identify the different stages of a code review in the database that was generated by MLStats and to link it to the Git activity database generated by CVSAnalY. After that step we ended up with a combined code review database and ran statistical analysis over the combined database. Quite a bit of plumbing and filtering had to be developed from scratch for that to take place.
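The linking step described above can be sketched in Python. This is a minimal illustration, not the Bitergia, MLStats, or CVSAnalY pipeline: the `PatchThread` and `Commit` record types are hypothetical, and the sketch assumes a patch can be matched to its commit by comparing the mailing-list subject (with `Re:` and `[PATCH ...]` prefixes stripped) against the commit's summary line. Real e-mail threads need far more filtering than this.

```python
import re
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass
class PatchThread:
    """A patch as seen on the mailing list (hypothetical schema)."""
    subject: str            # e.g. "[PATCH v3 2/5] x86/mm: fix foo"
    first_posted: datetime  # date of the first posting for review


@dataclass
class Commit:
    """A commit as seen in Git (hypothetical schema)."""
    summary: str            # first line of the commit message
    committed: datetime


# Leading "Re:" markers and bracketed tags such as "[PATCH v2 1/3]".
_PREFIXES = re.compile(r"^(re:\s*)*(\[[^\]]*\]\s*)*", re.IGNORECASE)


def normalize(subject: str) -> str:
    """Strip list prefixes so a subject can be compared with a commit summary."""
    return _PREFIXES.sub("", subject).strip().lower()


def link_reviews_to_commits(threads, commits):
    """Pair each thread with the commit whose summary matches; also return the match rate."""
    by_summary = {normalize(c.summary): c for c in commits}
    matched = [(t, by_summary[normalize(t.subject)])
               for t in threads if normalize(t.subject) in by_summary]
    rate = len(matched) / len(threads) if threads else 0.0
    return matched, rate


def median_days_to_merge(matched):
    """Median elapsed days from first posting to commit over the matched pairs."""
    return median((c.committed - t.first_posted).total_seconds() / 86400
                  for t, c in matched)


threads = [
    PatchThread("[PATCH v2 1/2] xen/arm: fix foo", datetime(2015, 1, 1)),
    PatchThread("Re: [PATCH] tools: add bar", datetime(2015, 1, 10)),
    PatchThread("[PATCH] qemu: change that never lands in Xen", datetime(2015, 1, 5)),
]
commits = [
    Commit("xen/arm: fix foo", datetime(2015, 1, 11)),
    Commit("tools: add bar", datetime(2015, 1, 14)),
]
matched, rate = link_reviews_to_commits(threads, commits)
print(f"match rate {rate:.0%}, median time to merge {median_days_to_merge(matched)} days")
```

With this made-up data it prints a 67% match rate and a median time to merge of 7.0 days. The unmatched third thread shows how a patch that never lands in the repository (such as a cross-posted one) drags the match rate down without affecting the time-to-merge figure.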

Linux.com: Were there any challenges that you experienced along the way?

Lars Kurth: First we had to develop a reasonably accurate model of the code review process. This was rather challenging, as e-mail is essentially unstructured. Also, I had to act as a bridge between Bitergia, which implemented the tools, and the community. This took a significant portion of my time. However, without spending that time, the project would quite likely have failed.

To de-risk the project, we designed it in two phases: the first phase focused on statistical analysis that allowed us to test some theories; the second phase focused on improving accuracy of the tools and making the data accessible to community stakeholders.

Linux.com: What were your initial results from the analysis?

Lars Kurth: We found three key areas that were causing the slowdown:

Huge growth in comment activity from 2013 to 2015

The time it took to merge patches (time to merge) increased significantly from 2012 to the first half of 2014. However, from the second half of 2014, time to merge moved back to its long-term average. This was a strong indicator that the measures we took actually had an effect.

Complex patches were taking significantly longer to merge than small patches. As it turns out, a significant number of new features were actually rather complex. At the same time, the demands on the project to deliver better quality and security had also raised the bar for what could be accepted.

Linux.com: How did the community respond to your data? How did you use it to help you make decisions about what was best to improve the process?

Lars Kurth: Most people were receptive to the data, but some were concerned that we were only able to match 60 percent of the code reviews to Git commits. For the statistical analysis, this was a big enough sample.

Further investigation showed that the main cause of this low match rate was cross-posting of patches across FOSS communities. For example, some QEMU and Linux patches were cross-posted for review on the Xen Project mailing lists, but the code did not end up in Xen. Once this was understood, a few key people in the community started to see the potential value of the new tools.

This is where stage two of the project came in. We defined a set of use cases and supporting data that broadly covered three areas:

Community use cases to encourage desired behavior: these would be metrics such as real review contributions (not just Acked-by and Reviewed-by flags) and comparisons of review activity against contributions.

Performance use cases that would allow us to spot issues early: these would allow us to filter time-related metrics by a number of different criteria, such as the complexity of a patch series.

Backlog use cases to optimize process and focus: the intention here was to give contributors and maintainers tools to see what reviews are active, nearly complete, complete or stale.
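The backlog buckets lend themselves to simple rules. The Python sketch below is one hypothetical way to assign them, not the rules the Xen Project dashboard actually uses: it assumes each patch series is summarized by whether it has been committed, whether it has collected an Acked-by or Reviewed-by, and the date of its last mailing-list activity, and it picks an arbitrary 30-day staleness cutoff.

```python
from datetime import datetime, timedelta
from enum import Enum


class ReviewState(Enum):
    ACTIVE = "active"
    NEARLY_COMPLETE = "nearly complete"
    COMPLETE = "complete"
    STALE = "stale"


# Assumed threshold: a series with no list activity for this long counts as stale.
STALE_AFTER = timedelta(days=30)


def classify_review(committed: bool, has_ack_or_review: bool,
                    last_activity: datetime, now: datetime) -> ReviewState:
    """Bucket one patch series for a backlog view, using hypothetical rules."""
    if committed:
        return ReviewState.COMPLETE
    if now - last_activity > STALE_AFTER:
        return ReviewState.STALE
    if has_ack_or_review:
        # An Acked-by or Reviewed-by has been given, but nothing is committed yet.
        return ReviewState.NEARLY_COMPLETE
    return ReviewState.ACTIVE


now = datetime(2016, 6, 1)
backlog = {
    "series A": classify_review(True, True, datetime(2016, 5, 2), now),
    "series B": classify_review(False, True, datetime(2016, 5, 28), now),
    "series C": classify_review(False, False, datetime(2016, 5, 30), now),
    "series D": classify_review(False, False, datetime(2016, 3, 1), now),
}
for name, state in backlog.items():
    print(f"{name}: {state.value}")
```

Staleness is checked before acknowledgements on purpose, so a series that was acked and then abandoned shows up as stale rather than as nearly complete.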

Linux.com: How have you made improvements based on your findings and what have been the end results for you?

Lars Kurth: We had to iterate on the use cases, the data supporting them, and how the data is shown. I expect that process to continue as more community members use the tools. For example, we realized that the code review process dashboard developed as part of the project is also useful for vendors to estimate how long it will take to get something upstreamed, based on past performance.

Overall, I am very excited about this project, and although the initial contract with Bitergia has ended, we have an Outreachy intern working with Bitergia and me on the tools over the summer.

Linux.com: How can this analysis support other projects with similar code review processes?

Lars Kurth: I believe that projects like the Linux kernel and others that use e-mail-based code review processes and Git should be able to use and build on our work. Hopefully, we will be able to create a basis for collaboration that helps different projects become more efficient and ultimately improve what we build.

Resources:

Dashboard: tinyurl.com/xenproject-dashboard

Documentation: tinyurl.com/xenproject-dashdocs

Contribute: tinyurl.com/xenproject-contribute

Red Hat’s framework outlines five core open source principles: open exchange, participation, meritocracy, community, and release early and often.

In a world where network processors are viewed as a commodity, the assumption is that most innovation will be driven by software. But support is building for the P4 language to boost NFV, as chip specialists point to hardware improvements that will be key for more demanding applications in a virtualized environment.

To make it easier for organizations to take advantage of a broad range of custom processors that can be optimized for specific use cases, hardware vendors have been rallying behind the open source P4 programming language being developed by the P4 Language Consortium. The Consortium is developing the specifications for the P4 programming language as well as associated compilers.

P4 is a declarative language for expressing how packets are processed in network forwarding elements such as switches, NICs, routers, or other network function appliances. It is based on an abstract forwarding model consisting of a parser and a set of match+action table resources, divided between ingress and egress. P4-compatible chips can be reprogrammed in the field, after they are installed in hardware, which helps them absorb the intelligence of software-defined networking (SDN). That flexibility is what could let the P4 language boost NFV performance.
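The abstract forwarding model (a parser feeding match+action tables at ingress and egress) can be simulated in a few lines of Python. This is only a sketch of the model's shape, not P4 syntax and not any P4 compiler or runtime API; the table names, the MAC addresses, and the single exact-match key per table are all illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Packet = Dict[str, object]
Action = Callable[[Packet], None]


def parse(raw: bytes) -> Packet:
    """Toy parser: pull Ethernet destination/source MACs out of the first 12 bytes."""
    if len(raw) < 14:
        raise ValueError("frame too short for an Ethernet header")
    return {"dst": raw[0:6].hex(":"), "src": raw[6:12].hex(":"),
            "egress_port": None, "drop": False}


def forward(port: int) -> Action:
    """Action factory: send the packet out of the given port."""
    def action(pkt: Packet) -> None:
        pkt["egress_port"] = port
    return action


def drop(pkt: Packet) -> None:
    pkt["drop"] = True


def no_op(pkt: Packet) -> None:
    pass


@dataclass
class ExactMatchTable:
    """One match+action table: exact match on a header field, default action on a miss."""
    key_field: str
    default_action: Action
    entries: Dict[object, Action] = field(default_factory=dict)

    def apply(self, pkt: Packet) -> None:
        self.entries.get(pkt[self.key_field], self.default_action)(pkt)


def process(raw: bytes, ingress: List[ExactMatchTable],
            egress: List[ExactMatchTable]) -> Packet:
    """Parser, then ingress tables, then egress tables; stop early once a packet is dropped."""
    pkt = parse(raw)
    for table in ingress + egress:
        table.apply(pkt)
        if pkt["drop"]:
            break
    return pkt


# Table entries play the role of rules installed by a control plane at runtime.
l2_forwarding = ExactMatchTable("dst", drop, {"aa:bb:cc:dd:ee:ff": forward(3)})
source_acl = ExactMatchTable("src", no_op, {"66:66:66:66:66:66": drop})

frame = bytes.fromhex("aabbccddeeff" "112233445566" "0800") + b"payload"
print(process(frame, [l2_forwarding], [source_acl]))
```

In this simulation the parser, the tables, and their ordering correspond to what a P4 program would declare, while the `entries` dictionaries stand in for the rules a controller fills in; changing the program itself is what "reprogramming in the field" refers to.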

Read more at SDxCentral.

SDN is moving into the data center at a rapid clip, but while deploying a new technology is one thing, getting people to use it properly is quite another.

According to market analyst IHS Inc., SDN revenues grew more than 80 percent in 2015 over the previous year, topping $1.4 billion. The bulk of that came in the form of new Ethernet switches and controllers, although newer use cases like SD-WAN are on the rise as well and will likely contribute substantially to the overall market by the end of the decade.

This means that, ready or not, the enterprise network is quickly becoming virtualized, severing the last link between data architectures and underlying hardware. This will do wonders for network flexibility and scalability, but it also produces a radically new environment for network managers, few of whom have gotten the appropriate levels of training, if anecdotal evidence is any indication.

Read more at Enterprise Networking Planet.

AT&T’s creation of the Domain 2.0 program, which is driven by the implementation of software-defined networks (SDN) and network functions virtualization (NFV), is causing the telecom equipment industry to rethink how it delivers products and services.

The telco’s initiative has kept all of its vendor partners on their toes: they have to be able to innovate quickly and respond to any emerging issue. If they don’t, these vendors run the risk of being ousted by a new supplier.

Read more at Fierce Telecom.

On the data analytics front, profound change is in the air, and open source tools are leading many of the changes. Sure, you are probably familiar with some of the open source stars in this space, such as Hadoop and Apache Spark, but there is now a strong need for new tools that can holistically round out the data analytics ecosystem. Notably, many of these tools are customized to process streaming data.

The Internet of Things (IoT), which is giving rise to sensors and other devices that produce continuous streams of data, is just one of the big trends driving the need for new analytics tools. Streaming data analytics are needed for improved drug discovery, and NASA and the SETI Institute are even collaborating to analyze terabytes of complex, streaming deep space radio signals.

While Apache Spark grabs many of the headlines in the data analytics space, given billions of development dollars thrown at it by IBM and other companies, several unsung open source projects are also on the rise. Here are three emerging data analytics tools worth exploring:

Big organizations and small ones are working on new ways to cull meaningful insights from streaming data, and many of them are working with data generated on clusters and, increasingly, on commodity hardware. That puts a premium on affordable data-centric approaches that can improve on the performance and functionality of tools such as MapReduce and even Spark. Enter the open source Grappa project, which scales data-intensive applications on commodity clusters and offers a new type of abstraction that can beat classic distributed shared memory (DSM) systems.

As the developers note: “Grappa provides abstraction at a level high enough to subsume many performance optimizations common to data-intensive platforms. However, its relatively low-level interface provides a convenient abstraction for building data-intensive frameworks on top of. Prototype implementations of (simplified) MapReduce, GraphLab, and a relational query engine have been built on Grappa that out-perform the original systems.”

Grappa is freely available on GitHub under a BSD license. If you are interested in seeing Grappa at work, you can follow easy quick-start directions in the application’s README file to build and run it on a cluster. To learn how to write your own Grappa applications, check out the tutorial.

The Apache Drill project is making such a difference in the Big Data space that companies such as MapR have even wrapped it into their Hadoop distributions. It is a top-level project at Apache and is being leveraged along with Apache Spark in many streaming data scenarios.

Drill is notable in streaming data applications because it is a distributed, schema-free SQL engine. DevOps and IT staff can use Drill to interactively explore data in Hadoop and in NoSQL databases such as HBase and MongoDB. There is no need to explicitly define and maintain schemas, as Drill can automatically leverage the structure that’s embedded in the data. It can stream data in memory between operators, and it uses disk only when needed to complete a query.
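Drill itself is queried with SQL; the Python sketch below only illustrates the schema-on-read idea behind it, assuming newline-delimited JSON records. Field names and types are discovered from the records at query time, and a record that lacks a field is treated like a NULL rather than an error. It is not Drill's API or its actual algorithm, and the sensor records are made up.

```python
import json
from collections import defaultdict
from typing import Callable, Dict, Iterable, Iterator, List


def infer_schema(json_lines: Iterable[str]) -> Dict[str, List[str]]:
    """Discover top-level fields and the JSON value types seen for each, record by record."""
    seen = defaultdict(set)
    for line in json_lines:
        for key, value in json.loads(line).items():
            seen[key].add(type(value).__name__)
    return {key: sorted(types) for key, types in seen.items()}


def select_where(json_lines: Iterable[str], field: str,
                 predicate: Callable[[object], bool]) -> Iterator[dict]:
    """Yield records whose `field` exists and satisfies `predicate`; missing fields act like NULL."""
    for line in json_lines:
        record = json.loads(line)
        value = record.get(field)
        if value is not None and predicate(value):
            yield record


readings = [
    '{"device": "sensor-1", "temp": 21.5}',
    '{"device": "sensor-2", "temp": 23, "battery": 0.9}',
    '{"device": "sensor-3"}',
]
print(infer_schema(readings))
print([r["device"] for r in select_where(readings, "temp", lambda t: t > 22)])
```

No schema was declared anywhere: the `battery` field simply appears once it shows up in the data, and `temp` is reported as holding both integers and floats.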

Apache Kafka has emerged as a star for real-time data tracking. It provides unified, high-throughput, low-latency processing for real-time data. Confluent and other organizations have also produced custom tools for using Kafka with data streams.

The engineers who created Kafka at LinkedIn also created Confluent, which focuses on Kafka. Confluent University offers training courses for Kafka developers, and for operators/administrators. Both onsite and public courses are available.

Are you interested in more unsung open source data analytics projects on the rise? If so, you can find more in my recent post on the topic.

The entire Internet depends on OpenSSL to secure sensitive transactions, but until the Linux Foundation launched the Core Infrastructure Initiative to support crucial infrastructure projects, it was supported by a small, underfunded team with only one paid developer. This is not good for an essential bit of infrastructure, and OpenSSL was hit by some high-profile bugs, such as Heartbleed. Now that OpenSSL has stable support, there should be fewer such incidents, and not a moment too soon, because we have no alternatives.

Asymmetric encryption is an ingenious mechanism for establishing encrypted sessions without first exchanging encryption keys. Asymmetric encryption relies on public-private key pairs. Public keys encrypt, private keys decrypt. Or, you can think of it as public keys lock, and private keys unlock. Anyone who has your public key can encrypt messages to send to you, and only you can decrypt them with your private key. It’s a brilliantly simple concept that greatly simplifies the process of establishing encrypted network connections.
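To make the lock/unlock idea concrete, here is textbook RSA with the classic tiny primes 61 and 53. It is a toy under loud assumptions: real keys are thousands of bits long, and real deployments add padding such as OAEP, because unpadded "textbook" RSA like this is insecure.

```python
def make_toy_keypair(p: int = 61, q: int = 53, e: int = 17):
    """Textbook RSA key pair from two small primes. Illustration only: never use in practice."""
    n = p * q                      # modulus, part of both keys
    phi = (p - 1) * (q - 1)        # Euler's totient of n
    d = pow(e, -1, phi)            # private exponent: modular inverse of e (Python 3.8+)
    return (n, e), (n, d)          # (public key, private key)


def encrypt(public_key, message: int) -> int:
    """Anyone holding the public key can 'lock' a number smaller than n."""
    n, e = public_key
    if not 0 <= message < n:
        raise ValueError("message must be an integer in [0, n)")
    return pow(message, e, n)


def decrypt(private_key, ciphertext: int) -> int:
    """Only the private key 'unlocks' it again."""
    n, d = private_key
    return pow(ciphertext, d, n)


public, private = make_toy_keypair()
ciphertext = encrypt(public, 65)
print(public, private, ciphertext, decrypt(private, ciphertext))
# prints: (3233, 17) (3233, 2753) 2790 65
```

Publishing `(3233, 17)` lets anyone lock the message 65 into 2790, but turning 2790 back into 65 needs the private exponent 2753, which is easy to compute only if you know the factors 61 and 53.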

Symmetric encryption uses the same key for encryption and decryption. You have to figure out how to safely distribute the key, and anyone with a copy of the key can decrypt your communications. The advantage of symmetric encryption is that it’s computationally less expensive than asymmetric encryption. OpenSSL takes advantage of this by establishing a session with asymmetric encryption, and then generating symmetric encryption keys to use for the duration of the session.
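The hybrid pattern can be sketched in a few lines of Python. Two parts are deliberate substitutions, labeled loudly: the asymmetric step is a toy finite-field Diffie-Hellman key agreement (modern TLS typically agrees on session keys this way, using elliptic curves and vetted parameters, rather than by encrypting a key), and the "symmetric cipher" is a hand-rolled SHA-256 keystream standing in for a real cipher such as AES. Neither is secure; the point is the shape: expensive public-key math once, then cheap symmetric operations for every byte of the session.

```python
import hashlib
import secrets

# Toy public parameters: the Mersenne prime 2**127 - 1 and base 3.
# Far too weak for real use; chosen only so the arithmetic is easy to follow.
P = 2**127 - 1
G = 3


def dh_keypair():
    """Return (private exponent, public value). Only the public value is ever sent."""
    private = secrets.randbelow(P - 2) + 1          # secret in [1, P-2]
    return private, pow(G, private, P)


def derive_session_key(my_private: int, their_public: int) -> bytes:
    """Both sides compute G**(a*b) mod P and hash it into a 32-byte symmetric key."""
    shared = pow(their_public, my_private, P)
    return hashlib.sha256(shared.to_bytes(16, "big")).digest()


def keystream_xor(key: bytes, data: bytes, nonce: bytes) -> bytes:
    """Toy symmetric cipher: XOR with SHA-256(key || nonce || counter) blocks.

    The same call encrypts and decrypts. Never reuse a nonce with the same key.
    """
    out = bytearray()
    for offset in range(0, len(data), 32):
        counter = (offset // 32).to_bytes(8, "big")
        block = hashlib.sha256(key + nonce + counter).digest()
        out.extend(b ^ k for b, k in zip(data[offset:offset + 32], block))
    return bytes(out)


# Client and server each make a key pair and swap only the public halves.
client_priv, client_pub = dh_keypair()
server_priv, server_pub = dh_keypair()
client_key = derive_session_key(client_priv, server_pub)
server_key = derive_session_key(server_priv, client_pub)

nonce = secrets.token_bytes(12)
request = b"GET / HTTP/1.1\r\nHost: example.com\r\n\r\n"
ciphertext = keystream_xor(client_key, request, nonce)
assert keystream_xor(server_key, ciphertext, nonce) == request
```

The session key itself never crosses the wire: each side derives it from its own private value and the other side's public value, and from then on only the hash-and-XOR step runs per message.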

A Certificate Authority (CA) is the final stop in the public key infrastructure chain. The CA tells your website visitors that your site’s SSL certificate is legitimate. Obviously, this requires a high level of trust, which is why there are a number of commercial certificate authorities, such as Comodo, GlobalSign, GoDaddy, and many others.

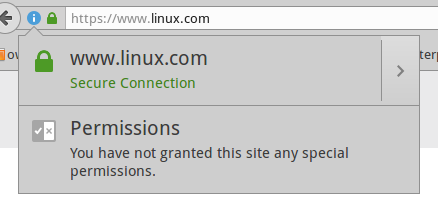

All web browsers include a bundle of trusted root CAs, and Mozilla publishes its list of included CAs. On Ubuntu systems, the system-wide copies are stored in /usr/share/ca-certificates/mozilla/ and symlinked to /etc/ssl/certs/. Any website whose certificate chains back to one of these root CAs will display a happy little green padlock (Figure 1), and when you click the padlock, you’ll find all kinds of information about the site as well as a copy of the site certificate.
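To see which trust store OpenSSL itself is using, a short Python check works, since Python's `ssl` module is built on OpenSSL and on Ubuntu its defaults generally lead back to /etc/ssl/certs. This is a sketch: exact paths vary by distribution and Python build, and some browsers, Firefox among them, ship their own copy of the Mozilla list rather than reading this directory.

```python
import ssl


def describe_trust_store() -> dict:
    """Report where OpenSSL looks for trusted CAs and how many a default context has loaded."""
    paths = ssl.get_default_verify_paths()
    ctx = ssl.create_default_context()   # loads the platform's default CA certificates
    return {
        "cafile": paths.cafile,          # single bundle file, or None if it does not exist
        "capath": paths.capath,          # hashed directory such as /etc/ssl/certs, or None
        "loaded": ctx.cert_store_stats(),
    }


if __name__ == "__main__":
    for key, value in describe_trust_store().items():
        print(f"{key}: {value}")
```

Certificates found through a `capath` directory are loaded lazily, so the counts under `loaded` can read zero until a connection has actually needed them; a bundle file given as `cafile` is counted up front.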

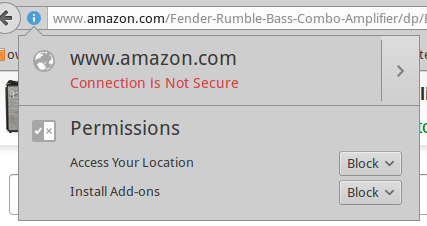

Figure 2 shows a product page on Amazon. The page is not SSL-protected. Why not encrypt all pages? On a site with all content hosted on the same server, it’s easy. There is a small performance hit, but it shouldn’t be noticeable. On a complex site that uses all kinds of external content and ad servers, the process becomes unmanageable. Page elements come from many different domains, and every one of them would need to be served over SSL with a valid certificate. Most sites don’t even try; they focus instead on securing their login and checkout pages.

It’s worth using sitewide SSL even on a site that isn’t selling anything, such as a blog, because it assures your site visitors that they are visiting your site and not some fraudulent copycat.

Self-signed certificates are the reliable old standby for LAN services. However, your web browsers are still going to pitch fits and report your internal sites as dangerous. I have mixed feelings about web browsers trying to protect us. On one hand, it’s a nice idea, because a real site and a fake one look the same to us: we’re staring at a screen and have no idea what’s going on behind the scenes. On the other hand, a steady diet of alarms doesn’t help. How are we supposed to judge if a warning is legitimate?

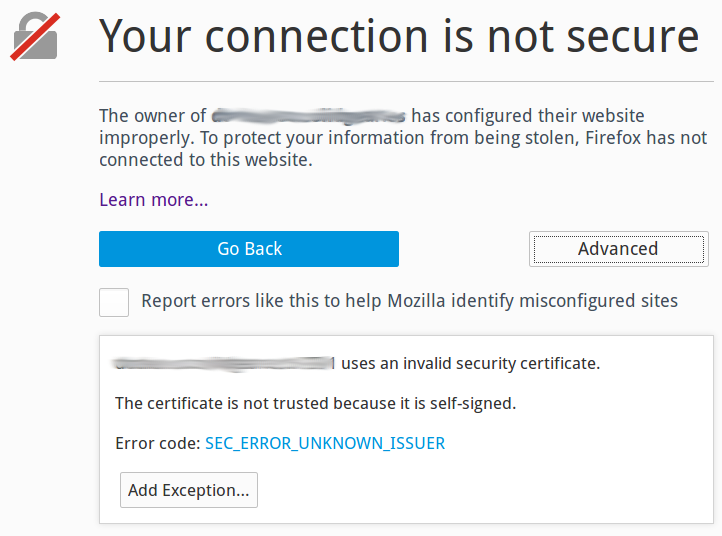

Figure 3 shows a typical Firefox freakout. You can look at the site certificate and make an educated guess. Most site visitors will do as they do with all computer warnings: Ignore them and forge ahead.

In the privacy of your own local network, you can shut up browsers permanently by importing your site certificates into your web browsers. In Firefox, you can do this by clicking the Advanced button and clicking through until Firefox gives up trying to scare you away and imports the certificate.

You can also import site certificates from the command line. Your certificate must be an X.509 certificate in PEM format. PEM files are plain text that you can open and read; they start with -----BEGIN CERTIFICATE-----. On Ubuntu, copy your site certificate into /usr/local/share/ca-certificates/, giving it a .crt extension, because update-ca-certificates only includes files with that extension. Then run the CA updater:

```
$ sudo update-ca-certificates
Updating certificates in /etc/ssl/certs...
WARNING: Skipping duplicate certificate Go_Daddy_Class_2_CA.pem
WARNING: Skipping duplicate certificate Go_Daddy_Class_2_CA.pem
1 added, 0 removed; done.
Running hooks in /etc/ca-certificates/update.d...
Done.
```
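Before copying a certificate into /usr/local/share/ca-certificates/, a quick sanity check can save a confusing "0 added" result. The Python sketch below is a hypothetical helper, not part of the ca-certificates package: it checks only that the file name ends in .crt (update-ca-certificates only includes files with that extension) and that the text contains a decodable PEM certificate block. It does not parse or validate the X.509 structure inside.

```python
import ssl
import sys
from pathlib import Path

PEM_BEGIN = "-----BEGIN CERTIFICATE-----"
PEM_END = "-----END CERTIFICATE-----"


def check_cert_for_update_ca(filename: str, text: str) -> list:
    """List reasons update-ca-certificates would skip or choke on this file.

    Only the file name and the PEM armor/base64 are checked; the X.509
    structure inside is not parsed.
    """
    problems = []
    if not filename.endswith(".crt"):
        problems.append("file name should end in .crt")
    start = text.find(PEM_BEGIN)
    if start == -1:
        problems.append("no PEM certificate header found")
        return problems
    end = text.find(PEM_END, start)
    if end == -1:
        problems.append("PEM footer missing")
        return problems
    try:
        ssl.PEM_cert_to_DER_cert(text[start:end + len(PEM_END)])
    except ValueError:  # raised for non-ASCII text or malformed base64 padding
        problems.append("PEM block could not be decoded")
    return problems


if __name__ == "__main__":
    for arg in sys.argv[1:]:
        path = Path(arg)
        issues = check_cert_for_update_ca(path.name, path.read_text(errors="replace"))
        print(f"{path}: {'ok' if not issues else '; '.join(issues)}")
```

Run it as, for example, `python3 check_cert.py lan-server.crt` (the script and file names are placeholders); an "ok" line means the file at least has the shape the updater expects.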

If you’re using a cool configuration management tool like Puppet, Chef, or Ansible, you can roll your certs out to everyone in your shop.

If you use a commercial certificate authority, you can avoid all this. There are many to choose from, so shop around for a good deal. There is also a good free option: Let’s Encrypt. Let’s Encrypt offers both production and test certificates, so you can test all you want until you get the hang of it. Let’s Encrypt has broad industry support, including Mozilla, Cisco, Facebook, the Electronic Frontier Foundation, and many more.

Ubuntu how-to on creating SSL certificates

Earlier this year, Facebook led the charge to launch a new open source group, the Telecom Infra Project (TIP), whose mission is to improve global Internet connections. TIP will employ the same methods Facebook has used to redesign data centers via its Open Compute Project (OCP). Some of TIP’s goals are lofty, such as rethinking network architectures and bringing the Internet to underserved regions of the globe.

Read more at SDxCentral.