As it’s been a while since we updated everyone on our progress, we thought it would be appropriate to share what ODPi has been up to over the past several months. In upcoming blogs, we will preview some exciting deliverables that will be coming out at the end of March.

ODPi’s journey to today can be thought of as passing through the following four phases:

- Problem Recognition
- Industry Coalescence
- Getting Organized
- Getting to Work

In the rest of this blog, I’ll describe each of these phases.

Problem Recognition

If they’re being honest, Hadoop and Big Data proponents recognize that this technology has not achieved its game-changing business potential.

Gartner puts it well: “Despite considerable hype and reported successes for early adopters, 54 percent of survey respondents report no plans to invest [in Hadoop] at this time, while only 18 percent have plans to invest in Hadoop over the next two years,” said Nick Heudecker, research director at Gartner. “Furthermore, the early adopters don’t appear to be championing for substantial Hadoop adoption over the next 24 months; in fact, there are fewer who plan to begin in the next two years than already have.” – Gartner Survey Highlights Challenges to Hadoop Adoption

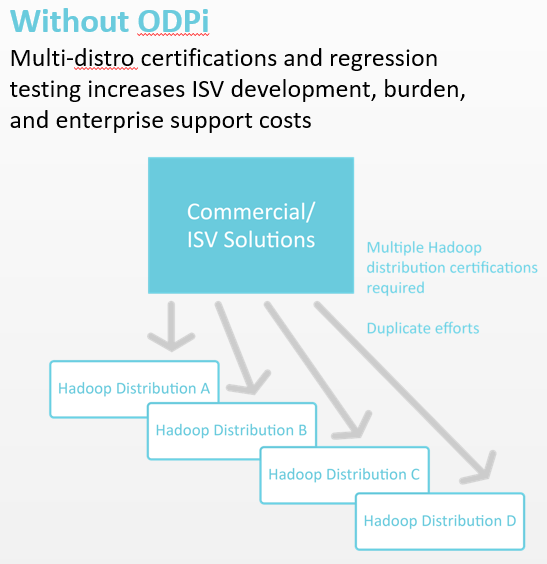

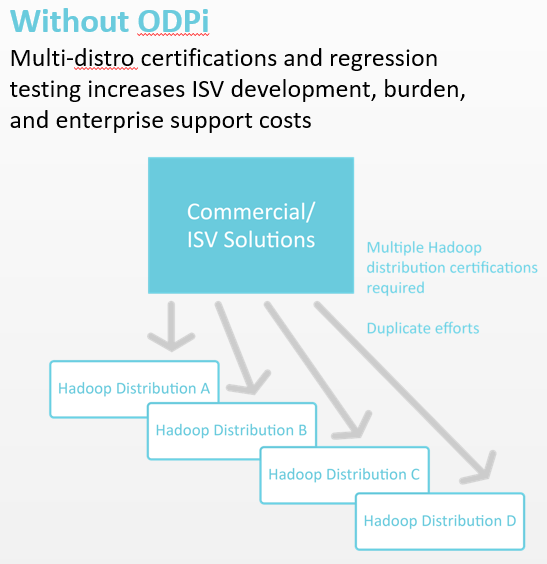

According to Gartner’s research, the top two factors suppressing demand for Hadoop are a skills gap and unclear business value for Hadoop initiatives. In ODPi’s view, the fragmented nature of the Hadoop ecosystem is a leading cause of the difficulty businesses have extracting value from Hadoop investments. Hadoop, its components, and the Hadoop distros are all innovating very quickly and in different ways. This diversity, while healthy in many ways, also slows Big Data ecosystem development and limits adoption.

Specifically, the lack of consistency across major Hadoop distributions means that commercial solutions and ISVs – precisely the entities in the value chain whose solutions deliver business value – must invest disproportionately in multi-distro certification and regression testing. This increases their development costs (not to mention enterprise support costs) and suppresses innovation.

Industry Coalescence

In February 2015, forward-thinking Big Data and Hadoop players at companies as diverse as AltiScale, Capgemini, CenturyLink, EMC, GE, Hortonworks, IBM, Infosys, Pivotal, SAS and VMware decided to work together to address the underachieving industry growth rate through standardization.

Here’s what some of the founding members had to say when they joined:

- Capgemini: One of the most consistent challenges that we’ve come across is the need to get multiple vendors’ technologies working together. Sometimes this is to get IBM’s data integration or analytics technologies working on another vendor’s distribution, such as Pivotal or Hortonworks, or to run other vendors’ analytics tools, such as SAS, on top of an IBM Big Data platform.

- Hortonworks: Some might look at Pivotal and IBM and others as competitors. We have to set those differences aside and focus on the things we can do jointly. That’s what this initiative is about. It just comes from working together and building trust and we’re used to that. It’s really what open source is about. If you look at the Hadoop industry, there are shared name components. There are varying versions of those components that have different capabilities, different protocols and API incompatibilities. What this effort is aimed at is a stable version of those, so that takes the guesswork out of the broader ecosystem.

- IBM: One desired outcome of the ODP is to bring new big data solutions to the market more quickly. This will be achieved by making it easier for the ecosystem vendors to enable and test on a well-defined common Hadoop core platform.

- SAS: SAS is not in it to choose sides on Hadoop distribution vendors. We support all five major distributions (Cloudera, Hortonworks, IBM, MapR and Pivotal) with our applications, and requests continue to pour in for more support of region-specific distributions. SAS will continue our collaboration with all Hadoop vendors.

Anyone else working with multiple distributions of Hadoop will understand the challenges involved. Here are three revealing examples from the last few months, each from a different (unnamed) vendor:

- Calling an HDFS API to see if an HDFS directory exists. Some distributions don’t throw an exception and return a null for the directory. Others throw an exception.

- Setting a baseline of Hive 13 so we get access to some new syntax. Try it on one, it works great and we are able to do some really innovative stuff. Try it on another that says it also has Hive 13, and we get “syntax error”?

- Trying to be a good ecosystem citizen, i.e. leveraging the HCAT APIs for accessing shared metadata. All is good. Get the latest “dot” release from the vendor, and guess what, they changed the package name of the class used to get the information. Code change necessary.
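
To make the first example concrete, here is a minimal sketch of the kind of defensive wrapper an ISV ends up writing when two distributions disagree about how to report a missing directory. Everything in it is hypothetical: `get_directory_status`, the two stand-in client classes, and the choice of `FileNotFoundError` are illustrative assumptions, not a real Hadoop or HDFS client API.

```python
from typing import Iterable, Optional


class NullReturningClient:
    """Hypothetical stand-in for a distribution that returns None
    when the directory does not exist."""

    def __init__(self, existing: Iterable[str]) -> None:
        self._existing = set(existing)

    def get_directory_status(self, path: str) -> Optional[dict]:
        return {"path": path} if path in self._existing else None


class RaisingClient:
    """Hypothetical stand-in for a distribution that raises
    when the directory does not exist."""

    def __init__(self, existing: Iterable[str]) -> None:
        self._existing = set(existing)

    def get_directory_status(self, path: str) -> Optional[dict]:
        if path not in self._existing:
            raise FileNotFoundError(path)
        return {"path": path}


def directory_exists(client, path: str) -> bool:
    """Normalize both behaviors into a single boolean answer."""
    try:
        status = client.get_directory_status(path)
    except FileNotFoundError:
        # Behavior B: a missing directory is reported via an exception.
        return False
    # Behavior A: a missing directory is reported via a null result.
    return status is not None


if __name__ == "__main__":
    for client in (NullReturningClient(["/data"]), RaisingClient(["/data"])):
        print(type(client).__name__,
              directory_exists(client, "/data"),
              directory_exists(client, "/missing"))
```

The wrapper itself is small, but every such shim has to be written, tested and maintained per distribution, which is exactly the overhead a common specification is meant to remove.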

Getting Organized

In September of 2015, the decision was made to move under The Linux Foundation. The Linux Foundation’s recognized excellence in Open Source governance and community and ecosystem development provided existing and prospective ODPi members with the confidence that the organization would continue to grow while operating openly, equitably and transparently.

Coinciding with the move to the Linux Foundation, several prominent industry players joined ODPi, bringing the total membership to 25 by the end of 2015.

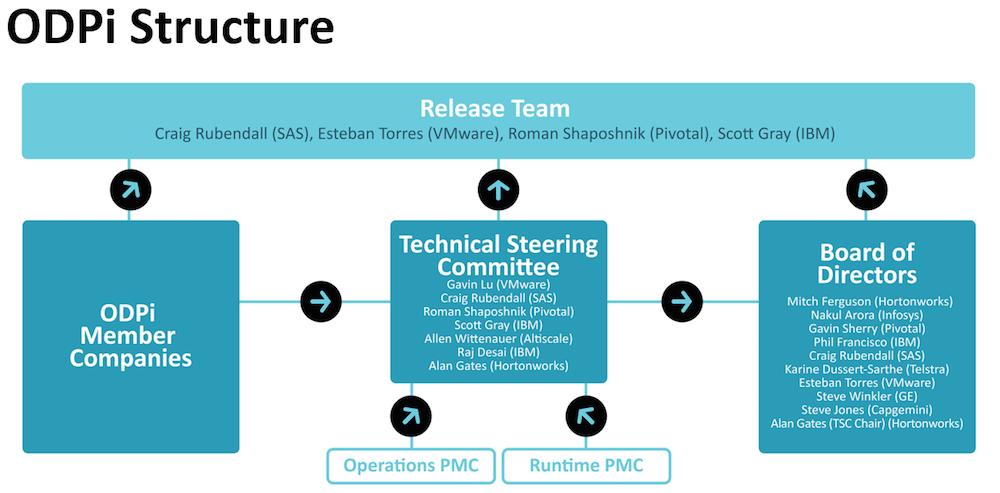

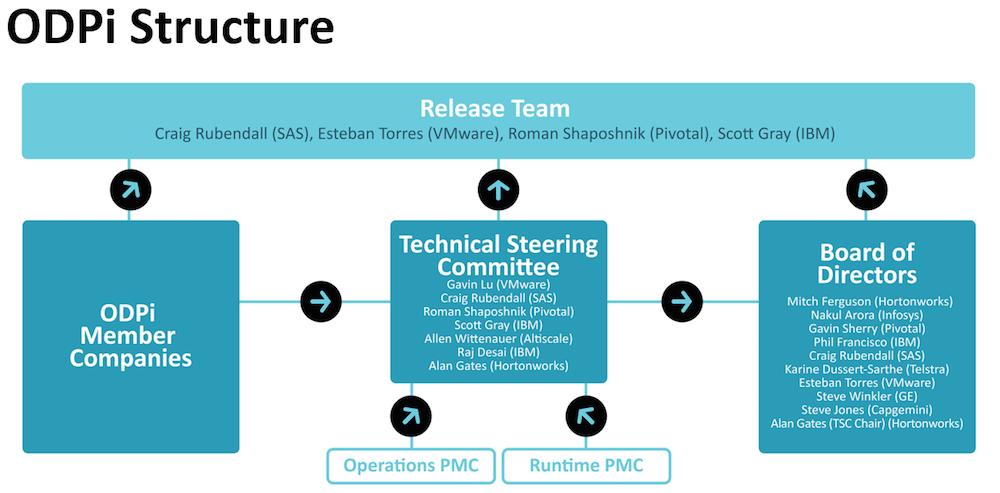

The Linux Foundation facilitated the bylaws and organization of ODPi in order to draw from all of its members’ diverse areas of expertise in the development of the specification. Figure 2 depicts ODPi’s operating structure.

Getting to Work

With the Release Team and TSC in place, the hard work of defining the ODPi Runtime and Operations Specifications got underway in earnest in Q4 2015.

The Release Team published the draft Runtime Specification on January 21 and has been hard at work since then on finalizing the spec and developing tests and a deployment sandbox, which will be announced at the end of March. We will also be publishing more details on the Spec and tests, so check back soon!

The author: John Mertic has spent his entire career in open source, from contributing to projects such as PHP, to serving as community manager for SugarCRM, to participating in the open source foundations OW2 and OpenSocial. A longtime speaker and author, he now uses his expertise in his role with the Linux Foundation to help nurture and grow large-scale, collaborative open source projects.
