One of the best practices for secure development is dynamic analysis. Among the various dynamic analysis techniques, fuzzing has been highly popular since its invention, and a multitude of fuzzing tools of varying sophistication have been developed. It can be enormously fun to take the latest fuzzing tool and see just how many ways you can crash your favorite application. But what if you are a developer of a large project that does not lend itself easily to fuzzing? How should you approach dynamic analysis in this case?

1. Decide your goals

First, decide whether you are looking only for security issues or for all types of correctness issues. Fuzzing finds a lot of low-severity issues that may never be encountered in normal use. These may look exactly like security vulnerabilities, the only difference being that no trust boundary is crossed. For example, if you fuzz a tool that only ever expects its input to come from the output of a trusted tool, you may find lots of crashes that will never occur in normal usage. Are there other ways to get corrupt input into the tool? If so, you have found a security vulnerability; if not, you have found a low-priority correctness issue that may never get fixed. Will the project be willing to address all of the issues found, or only the security issues? You can save yourself a lot of time and frustration by setting expectations for dynamic analysis up front.

2. Understand your trust boundaries

Understand (and document) where error checking should occur.

It is easy for security boffins like me to create a mental model of strong security in which every function defensively checks every input. Sadly for us, the real world is more complex than that. This kind of hypervigilance is hugely wasteful and therefore never survives in production. We have to work a little harder to establish a correct mental model of the project's security boundaries: we need to understand where in the program's control flow the checks should be made and where they can be omitted.
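To make the idea concrete, here is a minimal Python sketch under stated assumptions: the "name,age" record format, the 1024-byte limit, and the names `parse_record`, `_normalize`, and `ParseError` are all hypothetical. The public entry point sits on the trust boundary and validates everything it receives, while the internal helper, which is only reachable through that entry point, relies on assertions instead of repeating the checks. It assumes Python 3.7 or later (for `str.isascii`).

```python
from typing import Tuple

MAX_INPUT_LEN = 1024  # hypothetical size limit chosen for this sketch


class ParseError(ValueError):
    """Hypothetical error raised when untrusted input is rejected."""


def parse_record(raw: bytes) -> Tuple[str, int]:
    """Trust boundary: `raw` may come from anywhere, so validate all of it."""
    if len(raw) > MAX_INPUT_LEN:
        raise ParseError("input too long")
    try:
        text = raw.decode("utf-8")
    except UnicodeDecodeError as exc:
        raise ParseError("input is not valid UTF-8") from exc
    fields = text.strip().split(",")
    if len(fields) != 2:
        raise ParseError("expected exactly two comma-separated fields")
    name, age_text = fields
    # Accept only ASCII digits: str.isdigit() alone also accepts characters
    # such as superscript digits that int() cannot parse.
    if not name or not (age_text.isascii() and age_text.isdigit()):
        raise ParseError("empty name or non-numeric age")
    age = int(age_text)
    if age > 150:
        raise ParseError("age out of range")
    return _normalize(name, age)


def _normalize(name: str, age: int) -> Tuple[str, int]:
    """Internal helper, only called from parse_record, so it does not
    re-validate. The assertion documents the invariant it relies on; it runs
    during testing and fuzzing but is stripped when Python runs with -O."""
    assert name and 0 <= age <= 150, "caller broke the trust-boundary contract"
    return name.title(), age
```

With this split, a fuzzer should target `parse_record`. Feeding `_normalize` arbitrary values directly would report failures that cannot happen through the real entry point, which is exactly the low-priority-versus-vulnerability distinction described in step 1.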

3. Segment your project based on interface

Different fuzzers have different specialities. Segment your project into buckets suited to the different types of fuzzers, based on the interface each part exposes: file, network, or API.

4. Explore existing tools

New fuzzing tools are being developed all the time, and old tools are getting new capabilities. Take a fresh look at some of the most popular tools and see whether they can help with even a subset of your project. David Birdwell recently added network fuzzing to a derivative of American Fuzzy Lop (AFL), which is worth checking out. Hanno Böck has written useful tutorials on how to use some common fuzzing tools at The Fuzzing Project.

5. Write your own tools

When faced with the question of how to perform dynamic analysis on a large mixed-language project that did not lend itself to existing tools, I turned to David A. Wheeler to see how he would approach this problem. Dr. Wheeler recommends, “I’d consider writing a fuzzer specific to the project’s APIs & generate random inputs based on them, and adding lots of assertions that are at least enabled during fuzzing. If you know your API (or can introspect it), creating a specific fuzzer is pretty easy – grab your random number generator, set up an isolated container or VM for the fireworks, and go.”
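Dr. Wheeler's suggestion can be sketched in a few lines of Python. This is a minimal illustration under assumptions, not his code: `BoundedStack` is a hypothetical stand-in for a project's API, and comparing each result against a trivial reference model (a plain list) is one common way to turn random calls into checkable assertions. Every case is driven by a recorded seed, so any failure can be replayed exactly.

```python
import random
from typing import List, Optional


class BoundedStack:
    """Hypothetical API under test: a fixed-capacity stack of ints."""

    def __init__(self, capacity: int) -> None:
        if capacity < 1:
            raise ValueError("capacity must be positive")
        self._items: List[int] = []
        self._capacity = capacity

    def _check_invariants(self) -> None:
        # Internal assertion, enabled during fuzzing (python -O strips it).
        assert 0 <= len(self._items) <= self._capacity

    def push(self, value: int) -> bool:
        """Return False instead of growing past capacity."""
        if len(self._items) >= self._capacity:
            return False
        self._items.append(value)
        self._check_invariants()
        return True

    def pop(self) -> Optional[int]:
        value = self._items.pop() if self._items else None
        self._check_invariants()
        return value


def fuzz_once(seed: int, steps: int = 200) -> None:
    """Drive the API with a random call sequence derived from `seed` and
    compare every result against a reference model (a plain list)."""
    rng = random.Random(seed)
    capacity = rng.randint(1, 16)
    stack = BoundedStack(capacity)
    model: List[int] = []
    for _ in range(steps):
        if rng.random() < 0.6:
            # Mix ordinary values with boundary values.
            value = rng.choice([0, -1, 2**31 - 1, -(2**31), rng.randint(-1000, 1000)])
            accepted = stack.push(value)
            assert accepted == (len(model) < capacity), "push/capacity mismatch"
            if accepted:
                model.append(value)
        else:
            expected = model.pop() if model else None
            actual = stack.pop()  # call outside assert so -O cannot skip it
            assert actual == expected, "pop returned the wrong value"


def fuzz(iterations: int, base_seed: int = 0) -> List[int]:
    """Run many independent cases and return the seeds that failed, so each
    one can be replayed deterministically with fuzz_once(seed)."""
    failures = []
    for seed in range(base_seed, base_seed + iterations):
        try:
            fuzz_once(seed)
        except Exception:  # any crash or assertion failure is a finding
            failures.append(seed)
    return failures


if __name__ == "__main__":
    bad = fuzz(1000)
    print(f"{len(bad)} failing seeds: {bad[:10]}")
```

Run the harness with assertions enabled (that is, not under `python -O`), since both the invariant checks and the model comparisons are `assert` statements. For a native-code project, the same loop would call into the real API instead, and, as Wheeler notes, it belongs in an isolated container or VM.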

6. Is fuzzing really worth it?

A common critique of fuzzing tools is that after you run them for a while, they stop finding bugs. This is a good thing! Just as you wouldn’t throw out your automated test suite because it finds so few regressions, you shouldn’t use this rationale to avoid fuzzing your project. If your fuzzing tools are no longer finding bugs, congratulations! It’s time to celebrate! And then, on to finding the more difficult bugs.

7. Sounds like a lot of work

Do you really expect me to do all that? Just give me the name of a good tool to run. (American Fuzzy Lop.) You don’t have to do all of this, at least not all at once. If you find a tool that covers even a subset of your project, you can just start running it. Along the way, you will figure out whether the project developers (or you) are willing to fix low-priority issues and where the project’s trust boundaries lie. You may find a crash, generate a patch, and submit it to the project, only to have it rejected because the invalid input generated by the fuzzer can never reach that part of the project, so adding your check is considered too expensive. Whichever approach you take, do the people following in your footsteps a favor and write it down. Sure, it will get outdated, but it makes for a fun read and lets those who come after you stand on your shoulders.

One final reminder: if you are fuzzing someone else’s project and suspect that you have found a security vulnerability, use the project’s security vulnerability reporting process!

Emily Ratliff is senior director of infrastructure security for the Core Infrastructure Initiative at The Linux Foundation. Ratliff is a Linux, system, and cloud security expert with more than 20 years’ experience. Most recently, she worked as a security engineer at AMD; she also logged nearly 15 years at IBM.

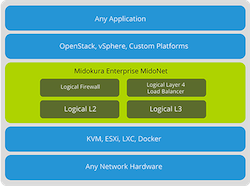
