GIMP is great and I use it all the time, but when it comes to batch image processing on Linux, nothing is handier or simpler to use than XnConvert. Although it is not open source software, this batch raster graphics editor is free, without limitations, for private use, and it is available for multiple platforms and architectures. You can get it from the official website, where it is offered as a standalone companion to XnView. Here is a tutorial on how to use this simple yet powerful tool on Linux.

This Week in Linux News: Linux Web Server Malware Resolved, Linux Foundation’s OpenHPC Launch, & More

This week in Linux news, encryption ransomware targeting Linux Web servers is resolved, a new Linux Foundation high-performance computing Collaborative Project launches, & more! Don’t miss these Linux headlines from the past week.

1) Linux-based operating systems are being targeted by Web server malware

New Encryption Ransomware Targets Linux Systems – Ars Technica

Romanian researchers have stopped the malware.

Thanks for Playing: New Linux Ransomware Decrypted, Pwns Itself – The Register

2) Investigating the recently announced Microsoft & Red Hat team-up.

A Closer Look At Microsoft And Red Hat Partnership – Forbes

3) New Linux Foundation Collaborative Project, OpenHPC, to build a framework for high-performance computing.

Linux Foundation Launches Open Source High-Performance Computing Group – The VAR Guy

4) The third installment in the “World Without Linux” web series has been released.

Watch: A World Without Linux Would Mean a World Without Social Connections – Softpedia

5) Valve celebrates the release of the first Steam Machines by hosting a Linux games sale.

Linux-Friendly Steam Sale Celebrates Valve’s Big Steam Machine Launch – PCWorld

PHP 7.0 RC7 Released, PHP 7 Final Gets Pushed Back

The highly anticipated PHP 7 release was supposed to happen today, but it hasn't; instead, it has been replaced by another release candidate…

Kubuntu Announces New Release Managers

Kubuntu is moving on in the absence of Jonathan Riddell, who has left the project and his longtime role as release manager…

Applied Micro Unveils Next-Gen ARM Server SoC

CEO Paramesh Gopi says the X-Gene 3 chip will help drive ARM’s efforts to take 25 percent of the server market from Intel by 2020.

XFS In Linux 4.4 Isn’t Too Exciting

Dave Chinner has now sent in the XFS file-system updates for the Linux 4.4 kernel…

AMD Publishes AMDGPU PowerPlay Support For Re-Clocking / Power Management

AMD has finally published patches providing preliminary PowerPlay support for the AMDGPU DRM driver; PowerPlay will eventually replace the current DPM (Dynamic Power Management) support for Volcanic Islands hardware. This PowerPlay support includes compatibility with Tonga, Fiji, and the rest of the VI line-up!

How Bad a Boss is Linus Torvalds?

Where I differ from other observers is that I don’t think that this problem is in any way unique to Linux or open-source communities. With five years of work in the technology business and 25 years as a technology journalist, I’ve seen this kind of immature boy behavior everywhere.

It’s not Torvalds’ fault. He’s a technical leader with a vision, not a manager. The real problem is that there seems to be no one in the software development universe who can set a supportive tone for teams and communities.

Cortex-A35 is Most Power-Efficient 64-bit ARM Design Yet

At the ARM TechCon conference in Santa Clara this week, ARM unveiled the Cortex-A35 architecture, its most power-efficient 64-bit processor design to date. Cortex-A35 draws about 33 percent less power per core and occupies 25 percent less silicon area than the 64-bit Cortex-A53, says ARM. The design is aimed primarily at the smartphone market, but can also be used for “power-constrained embedded applications such as single-board computers and automotive,” says the chip designer.

Due to ship in smartphones and other devices in late 2016, the ARMv8-A based Cortex-A35 is presented as a replacement for the 32-bit Cortex-A7. Cortex-A35 chips, which will also support 32-bit mode, will be 20 percent faster than Cortex-A7 on 32-bit mobile workloads “while consuming less than 90mW total power per core when operating at 1GHz in a 28nm process node,” says ARM.

According to ARM, more than half of all smartphones now shipping use 64-bit ARMv8-A SoCs, which range from quad-core Cortex-A53 models to octa-core -A53 and -A57 SoCs. By contrast, Cortex-A7 is found mostly in low-end smartphones, or in mid-range phones where it is combined with Cortex-A15 or -A17 cores.

Like all of these architectures, Cortex-A35 supports ARM’s big.LITTLE multi-core task-sharing technology, permitting similar hybrids, most likely in combination with Cortex-A57 or Cortex-A72 cores. In fact, AnandTech suggests that Cortex-A35 is as much the heir to the Cortex-A53 as it is to the Cortex-A7. Since much of the job of the lower-end cores in big.LITTLE SoCs is to provide more power-efficient operation during less demanding work, the -A35 could offer big power savings over the -A53 in SoCs driven by Cortex-A57 or Cortex-A72 cores.

The confusion over which core is the heir to which is nothing new. ARM called the 32-bit Cortex-A17 the heir to the Cortex-A9, but it was widely seen, and often used, as a replacement for the Cortex-A15. Previously, ARM had also called the widely ignored Cortex-A12 the heir to the Cortex-A9.

Open Source Security Process Part 3: Are Today’s Open Source Security Practices Robust Enough in the Cloud Era?

In part three of this four-part series, Xen Project Advisory Board Chairman Lars Kurth takes a closer look at how different open source projects approach security practices in the context of cloud computing, in an attempt to answer the question: Are open source security practices a fit for the cloud? Read Part 2: Containers vs. Hypervisors – Protecting Your Attack Surface and Part 1: A Cloud Security Introduction.

Most of today’s software stacks contain many different layers, and in a layered software stack you are only as strong as the weakest link. Without consistent security vulnerability processes across different open source projects, there is a real possibility of errors when handling security issues.

There are two common ways in which open source projects, including those used in cloud computing, approach security: “Full Disclosure” and “Responsible Disclosure.” Both are Security Vulnerability Management Processes, which are essentially sets of best practices that help address security vulnerabilities swiftly. It is worth noting that these processes have been debated repeatedly. This article examines how they have developed over time, how open source technologies use them today, and whether or not they are a fit for cloud environments.

Let’s begin by examining the difference between “Full Disclosure” and “Responsible Disclosure.”

Full Disclosure vs. Responsible Disclosure

-

“Full disclosure” is the practice of publishing analysis of software vulnerabilities as early as possible, making the data accessible to everyone without restriction. The primary purpose of widely disseminating information about vulnerabilities is so that potential victims are as knowledgeable as those who attack them.

-

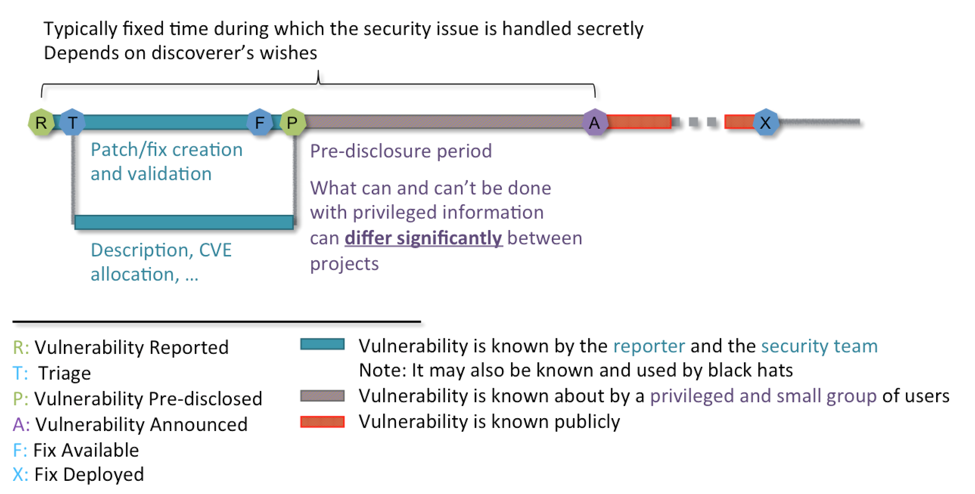

“Responsible Disclosure” is more exclusive on who gets the information of a vulnerability, and is how the majority of open source projects within cloud orchestration stacks are used today. The process is straightforward: whoever discovers a bug works with project’s security team to evaluate and fix the bug. A number of privileged consumers of those projects (such as Linux distros) will test and prepare software packages that fix vulnerabilities, which are then pushed to its entire user base once a security issue is publicly disclosed. The basic workflow and steps in use in “Responsible Disclosure“ can be found in figure 1.
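The Responsible Disclosure workflow described above can be modeled as a simple linear state machine. This is a minimal sketch, not any project's actual tooling: the `Stage` and `Advisory` names are hypothetical, and the stages are a simplified reading of the prose (discovery, private report to the security team, fix, pre-disclosure to privileged consumers, public disclosure).

```python
from enum import IntEnum


class Stage(IntEnum):
    """Hypothetical stages of a Responsible Disclosure workflow, in order."""
    DISCOVERED = 1
    REPORTED = 2       # discoverer reports privately to the project's security team
    FIX_READY = 3      # security team and discoverer have evaluated and fixed the bug
    PRE_DISCLOSED = 4  # privileged consumers (e.g. distros) test and package the fix under embargo
    PUBLIC = 5         # issue publicly disclosed; fixed packages pushed to all users


class Advisory:
    """Moves one vulnerability through the stages, one step at a time, never backwards."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.stage = Stage.DISCOVERED

    def advance(self) -> Stage:
        if self.stage is Stage.PUBLIC:
            raise ValueError(f"{self.name} has already been publicly disclosed")
        self.stage = Stage(self.stage + 1)
        return self.stage

    def is_private(self) -> bool:
        """True until public disclosure, while details stay with a restricted group."""
        return self.stage < Stage.PUBLIC


adv = Advisory("example-issue")
while adv.is_private():
    print(adv.stage.name)
    adv.advance()
print(adv.stage.name)
```

Using an ordered `IntEnum` makes "no skipping, no going back" easy to enforce; under Full Disclosure, by contrast, the information would effectively go public at the first step.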

Although they reflect different philosophies, both approaches aim to encourage discoverers to report security issues to security@your-open-source-project instead of selling them or exploiting them for their own gain. Both also aim to fix security issues as quickly as possible and to minimize the “exposure time” to security issues (the time from discovery until a fix is deployed). Finally, both try to ensure that as many users as possible apply patches as quickly as possible.
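To make the “exposure time” definition concrete, here is a small worked example. The dates are invented for illustration and do not describe any real vulnerability; the hypothetical `exposure_days` function simply measures the days from discovery to deployment of the fix.

```python
from datetime import date


def exposure_days(discovered: date, fix_deployed: date) -> int:
    """Exposure time as defined above: days from discovery until the fix is deployed."""
    if fix_deployed < discovered:
        raise ValueError("a fix cannot be deployed before the issue is discovered")
    return (fix_deployed - discovered).days


# Hypothetical dates, for illustration only.
print(exposure_days(date(2015, 11, 2), date(2015, 11, 23)))  # 21
# The same issue, patched one week later by a slower user:
print(exposure_days(date(2015, 11, 2), date(2015, 11, 30)))  # 28
```

Because exposure ends only when the fix is actually deployed, every user who patches late extends their own exposure, which is why both approaches care about how quickly patches are applied.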

How Handling Security Issues Differs in the Cloud

Although security practices in most established cloud projects follow the same basic structure laid out in figure 1, there are typically significant differences in the following areas:

- Whether “responsible disclosure” is used for all vulnerabilities or just some.
- The list of privileged users (members of the pre-disclosure mailing lists) who are informed before a vulnerability is publicly disclosed.
- The criteria used to establish who can become a member of a pre-disclosure mailing list.
- The application process for becoming a member of a pre-disclosure mailing list (some projects don’t have a formal process).
- Whether the list of privileged users is published.
- What privileged users are allowed to do with the information about security issues that they receive.
- The typical time period between the discovery of a security issue and its pre-disclosure to privileged users.
- The typical time period between the discovery of a security issue and its disclosure to all users.
- The way a project publishes security issues.
- Whether CVE numbers are assigned for all or most security issues.
- Whether there is a single place to explore past security issues.
- Whether security issues are tracked in code commits and other project internals.
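One way to compare projects along these dimensions is to record each policy as a small data structure. This is a hypothetical sketch: the `DisclosurePolicy` fields cover only some of the items above, and the example values describe invented projects, not any real one.

```python
from __future__ import annotations

from dataclasses import dataclass, fields, replace
from typing import Optional


@dataclass(frozen=True)
class DisclosurePolicy:
    """Hypothetical record of some of the policy dimensions listed above."""
    responsible_for_all_issues: bool          # responsible disclosure for all vulnerabilities, or only some
    predisclosure_list_published: bool        # is the list of privileged users public?
    formal_application_process: bool          # is there a formal way to join the pre-disclosure list?
    allowed_uses: frozenset                   # what privileged users may do with embargoed information
    days_to_predisclosure: Optional[int]      # typical discovery -> pre-disclosure time, if stated
    days_to_public_disclosure: Optional[int]  # typical discovery -> public disclosure time, if stated
    assigns_cves: bool                        # CVE numbers for all or most issues
    single_advisory_archive: bool             # one place to explore past issues


def differences(a: DisclosurePolicy, b: DisclosurePolicy) -> list[str]:
    """Names of the dimensions on which two policies differ, in field order."""
    return [f.name for f in fields(DisclosurePolicy) if getattr(a, f.name) != getattr(b, f.name)]


# Two invented projects that differ only in what embargoed information may be used for
# and in CVE assignment.
project_a = DisclosurePolicy(
    responsible_for_all_issues=True,
    predisclosure_list_published=True,
    formal_application_process=True,
    allowed_uses=frozenset({"package", "test", "plan", "deploy"}),
    days_to_predisclosure=7,
    days_to_public_disclosure=21,
    assigns_cves=True,
    single_advisory_archive=True,
)
project_b = replace(project_a, allowed_uses=frozenset({"package", "test"}), assigns_cves=False)
print(differences(project_a, project_b))  # ['allowed_uses', 'assigns_cves']
```

Even two policies that look alike on paper can differ on exactly the dimensions that matter most to cloud users, such as what may be done with embargoed information.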

Most of these differences fall into three areas: operational efficiency within an open source project, fairness towards the entire community, and transparency about the information and operation of the project’s security process. Although they may appear to be small deviations from the basic process, each of these differences can have a huge impact on users of a cloud project.

Distro and Cloud Model of Responsible Disclosure

Let’s consider two examples: “who qualifies to be a privileged user” and “what privileged users are allowed to do with information shared with them during the pre-disclosure period.” Both are closely tied to the goals of a Security Vulnerability Management Process:

- Make sure that a fix is available before disclosure.
- Make sure that downstream projects and products (e.g. distros) can package and test the fix in their environments.

In the model commonly known as the “Distro Model of Responsible Disclosure,” only vendors that create distributions of the upstream projects are eligible to be privileged users, and the information shared with them may be used only to package and test fixes for vulnerabilities.

Under this model, hosting and cloud services and their users are vulnerable immediately after disclosure, because service providers that use the open source software cannot start planning an upgrade (which at scale can take a week) before then. Nor can they deploy an upgrade before the embargo ends.
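The timing problem above can be made concrete with a little arithmetic. This is a simplified, hypothetical model (the function name and parameters are illustrative, not taken from any project’s policy): it assumes planning plus rollout takes a fixed number of days, and that a provider starts as soon as it is permitted to, either on receiving embargoed information or only at public disclosure.

```python
def days_exposed_after_disclosure(rollout_days: int, embargo_days: int, may_use_embargo: bool) -> int:
    """Days a service provider stays unpatched after public disclosure (hypothetical model).

    rollout_days: time needed to plan and deploy an upgrade at scale.
    embargo_days: time between pre-disclosure and public disclosure.
    may_use_embargo: whether the provider may plan and deploy before disclosure.
    """
    if rollout_days < 0 or embargo_days < 0:
        raise ValueError("durations must be non-negative")
    if not may_use_embargo:
        # The provider can only start once the issue is public.
        return rollout_days
    # Work done during the embargo shortens (or eliminates) the public exposure.
    return max(0, rollout_days - embargo_days)


# Distro Model: a provider needing about a week at scale is exposed for that whole week.
print(days_exposed_after_disclosure(rollout_days=7, embargo_days=14, may_use_embargo=False))  # 7
# If the same provider could use a (hypothetical) 14-day embargo, it would be patched by disclosure.
print(days_exposed_after_disclosure(rollout_days=7, embargo_days=14, may_use_embargo=True))   # 0
```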

To align the security process more closely with the needs of cloud computing, the Xen Project pioneered a new form of security vulnerability process, which, for lack of an established label, we will call the Cloud Model of Responsible Disclosure. In this model, public service providers are eligible to be privileged users in addition to distributions, and the information shared under embargo may be used to plan an upgrade, inform customers, or deploy an upgrade. A similar model has also evolved in OpenStack.
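The difference between the two models comes down to two questions: who is eligible for pre-disclosure, and what they may do with embargoed information. The sketch below encodes the text’s description as lookup tables. The `Role`, `Action` and `may_act_under_embargo` names are hypothetical, and, like the text, the sketch keys permitted actions by model only; real project policies may restrict actions further per role.

```python
from enum import Enum


class Role(Enum):
    DISTRO_VENDOR = "distribution vendor"
    SERVICE_PROVIDER = "public service provider"


class Action(Enum):
    PACKAGE_AND_TEST = "package and test a fix"
    PLAN_UPGRADE = "plan an upgrade"
    INFORM_CUSTOMERS = "inform customers"
    DEPLOY_UPGRADE = "deploy an upgrade"


# Who may be a privileged user under each model, as described above.
ELIGIBLE = {
    "distro": {Role.DISTRO_VENDOR},
    "cloud": {Role.DISTRO_VENDOR, Role.SERVICE_PROVIDER},
}

# What embargoed information may be used for under each model, as described above.
ALLOWED_ACTIONS = {
    "distro": {Action.PACKAGE_AND_TEST},
    "cloud": {Action.PACKAGE_AND_TEST, Action.PLAN_UPGRADE,
              Action.INFORM_CUSTOMERS, Action.DEPLOY_UPGRADE},
}


def may_act_under_embargo(model: str, role: Role, action: Action) -> bool:
    """True if `role` is a privileged user under `model` and may take `action` before disclosure."""
    return role in ELIGIBLE[model] and action in ALLOWED_ACTIONS[model]


for model in ("distro", "cloud"):
    ok = may_act_under_embargo(model, Role.SERVICE_PROVIDER, Action.DEPLOY_UPGRADE)
    print(f"{model}: service provider may deploy under embargo -> {ok}")
```

Under the Distro Model the check fails at the first condition, since a service provider never sees the information before disclosure; under the Cloud Model both conditions hold.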

An Overview of Security Practices of Cloud Projects

The following table lists what disclosure approach, or mix of approaches, different open source projects use.

| Disclosure Approach | Used by Project |
|---|---|
| Full | Linux Kernel/LXC/KVM if reported via OSS Security; Linux Kernel/LXC/KVM if reported via the Kernel Security e-mail address; OpenStack and QEMU for low-impact issues |
| Responsible (Distro Model) | Linux Kernel/LXC/KVM if reported via OSS Security Distros; Linux distributions (both open source and commercial); QEMU, Libvirt, oVirt, … |
| Responsible (Cloud Model) | OpenStack for intermediate- to high-impact issues (cloud vendors get 3-5 business days to upgrade); OPNFV and OpenDaylight (process modeled on OpenStack’s); Xen Project for all issues (cloud vendors get two weeks to upgrade) |
| Not Clearly Stated | Docker (states responsible disclosure, but policy docs are available only on request; some CVEs are published); Cloud Foundry (no clearly stated process; no published CVEs); CoreOS, Kubernetes (just an e-mail address for reporting issues) |

Although Linux distributions have long followed a process of responsible disclosure, kernel and distro security are handled by a complex, hard-to-understand web of organizations, each covering different aspects:

- Kernel Security: Handles kernel security issues, with the aim of fixing and publicly disclosing them as quickly as possible.
- OSS Security: A public list, used primarily for discussing and handling existing and low-impact security issues.
- OSS Security Distros: A private mailing list, with membership limited to operating system distribution security contacts, that follows a policy of responsible disclosure and primarily handles issues of medium severity or higher.

In addition, each Linux distribution has its own security team, which works with one or more of the above entities on different aspects of security issues, and also handles security issues in all of the software packages that make up that distribution.
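As a rough illustration of how the two OSS lists divide the work, the sketch below maps an issue’s severity to the list whose stated focus matches it, and that list to the disclosure approach the table gives for Linux Kernel/LXC/KVM reports. It is a simplified, hypothetical reading of the descriptions above: the severity levels and function name are illustrative assumptions, and real triage involves far more judgment.

```python
# Hypothetical severity levels; the text only distinguishes "low" from "medium or higher".
SEVERITIES = ("low", "medium", "high")

# Disclosure approach per list, from the table's Linux Kernel/LXC/KVM rows.
DISCLOSURE_BY_LIST = {
    "OSS Security": "Full",                 # public list
    "OSS Security Distros": "Responsible",  # private list, distro security contacts only
}


def oss_list_for(severity: str) -> str:
    """Return the OSS list whose stated focus matches an issue of the given severity."""
    if severity not in SEVERITIES:
        raise ValueError(f"unknown severity {severity!r}; expected one of {SEVERITIES}")
    # OSS Security: existing and low-impact issues; OSS Security Distros: medium or higher.
    return "OSS Security" if severity == "low" else "OSS Security Distros"


for sev in SEVERITIES:
    lst = oss_list_for(sev)
    print(f"{sev:>6}: {lst} -> {DISCLOSURE_BY_LIST[lst]} disclosure")
```

The point of the sketch is that the same kernel bug can end up under Full or Responsible Disclosure depending on which channel it reaches, which is part of what makes this web of organizations hard to follow.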

Conclusion

Despite the ubiquity of cloud computing today, the majority of open source projects still follow the Distro Model of Responsible Disclosure. Compounding the problem, several newer open source projects, such as CoreOS, Kubernetes and Cloud Foundry, appear to have security vulnerability processes that go no further than allowing the discoverer to report security issues. This reflects the fact that members of many open source projects think about developing code first, and security often takes a back seat to development.

Modern software stacks contain many different layers with incompatible or insufficient vulnerability management processes. In a layered software stack, clearly you are only as strong as the weakest link, and the lack of consistent security vulnerability processes across different open source projects creates complexity that increases the chance of errors related to security issues. The only way to address this problem is to establish consistent, industry-wide best practices that take the needs of cloud and service providers into account.

Read Part 4: Xen Project’s Policy for Responsible Disclosure with Maximum Fairness and Transparency