One of the advantages of the digital world, compared to the physical world, is that you can protect some of your ‘valuables’ from permanent loss. I know friends who have lost all of their photographs, books and documents in fires or floods. I wish they had had digital versions of those things, which could have been saved. It takes only one click to make a copy of your data, and you can carry terabytes of data on a hard drive smaller than your wallet.

Why not cloud?

While working on this story I interviewed many users to understand their data-backup plans and I discovered some used public cloud as their primary backup.

I would not use public or third-party cloud as the primary backup of my data for various reasons. First of all, I have over 3 terabytes (TB) of data and it would be extremely expensive for me to buy 3 TB of cloud storage. I would have to pay over $120 per year for 1TB of data or $100 per month for 10TB on Google Drive. Cost is not the only deterrent; I would also consume huge amounts of bandwidth to access that data, which may raise eyebrows at my ISP.

The biggest danger is that once you stop paying, you lose your data. That’s not the only problem with public cloud: the moment you start using such services, your data becomes subject to numerous laws and can be accessed by government agencies without your knowledge. Your service provider gains control over your data and can lock you out of it for numerous reasons, most notably ambiguous copyright violations.

Private cloud like ownCloud or Seafile can be an option but, once again, as soon as your data leaves your network it is exposed to the rest of the world and, as usual, it will incur heavy bandwidth use and storage costs.

I do use private cloud but that’s mostly for the data that I want accessible outside the local network or which is shared with others. I never use it as back-up.

Keep calm and go local

I can buy a 4TB NAS hard drive for under $160 and that will last me 3 to 4 years. I won’t have to worry about paying for bandwidth to access my own data, or fear being locked out.

Now once I have decided to keep my data local and under my control it becomes increasingly important to ensure that I will not lose a byte of it. The first thing I do is make a backup of my files and configure my systems to make regular backups automatically.

There are many GUI tools; some come preinstalled on many distros, but since I run a headless file server I use command line tools and that’s what I am going to talk about in this article.

I also tend to keep things as simple as possible, so the tool I use for my back-up is ‘rsync’.

What’s rsync

Rsync stands for remote sync; it was written by Andrew Tridgell and Paul Mackerras back in 1996. It’s one of the most used ‘tools’ in the UNIX world and almost a standard for syncing data. Most Linux distros have rsync pre-installed, but if it’s not there you can install the ‘rsync’ package for your distribution.

Rsync is an extremely powerful tool and does more than just make copies of your files on your system. You can use it to sync files between two directories on the same PC; you can sync directories on two different systems on the same network; or sync directories residing on machines thousands of miles apart, over the Internet.

The functionality of rsync can be expanded by using different ‘options’, which we will talk about soon.

The basic syntax of rsync is

rsync option source-directory destination-directory

Let’s assume you have a directory /media/hdd1/data-1 on hard drive 1 and you want to make a copy of it on a new hard drive which is mounted at /media/hdd2.

The following command will create the directory data-1 on the second hard drive and copy the content of the directory to the destination:

rsync -r /media/hdd1/data-1 /media/hdd2/

The option ‘-r’ makes the copy recursive, so all subdirectories are synced as well.

However, once the directory data-1 has been created on hdd2, you can sync the content of the two directories directly:

rsync -r /media/hdd1/data-1/ /media/hdd2/data-1/

Don’t forget the trailing forward slash on the source path; otherwise rsync will create a new data-1 directory inside the destination directory.

Alternatively you can create a new directory on destination and then sync it with source. Let’s assume you created a directory data-2 on the second hard drive and want to sync the two without any confusion:

rsync -r /media/hdd1/data-1/ /media/hdd2/data-2/

This command will simply make an exact copy of your files in the data-1 directory inside the data-2 directory.

What if you have symlinks, or files with different permissions or ownership, and you want to preserve them? Just use the ‘-a’ (archive) option; it works recursively and preserves the symlinks, dates, ownership, permissions, groups, etc. of the files.

Now you have two sets of directories synced with each other. There is a chance that you may delete some files or folders from the source; I do it all the time. How do we ensure that those are deleted from the destination as well? You need to use the ‘--delete’ option, which will take care of such cases. The command becomes:

rsync -a --delete /media/hdd1/data-1/ /media/hdd2/data-2/

If you want to see in the terminal which files are being transferred, add the ‘-v’ (verbose) option to it:

rsync -av --delete /media/hdd1/data-1/ /media/hdd2/data-2/

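It’s also advisable to compress files during transfer to save bandwidth over the network, which results in faster transfers on slow links. The option to use is ‘-z’. It mostly helps when the connection between your devices is slow; for a copy between two local drives it is unlikely to help and only adds CPU work.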

rsync -avz --delete /media/hdd1/data-1/ /media/hdd2/data-2/

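You can also throw in the ‘-P’ option, which is shorthand for ‘--partial --progress’: it keeps partially transferred files so an interrupted transfer can resume, and shows progress for each file.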

rsync -avzP --delete /media/hdd1/data-1/ /media/hdd2/data-2/

Working on networked machines

As I wrote in an article earlier, I run a local file server at home and mount it on all my devices to access my files. I never save any data on my local machine; I always work on files stored on the primary hard drive on the server. That way my files are always up-to-date and I can pick them up from any machine and continue working; no need to copy from one machine to another.

I don’t mount the second, or back-up, hard drive. Mounting it and working on files saved on this hard drive would complicate things, because when I run the rsync command it will overwrite those changes with the versions from the primary hard drive. Rsync does have a trick (or option) up its sleeve to address such issues, though. You can use the ‘-u’ option, which makes rsync skip any file on the destination whose modification date is later than that of the source file.
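Here is a small sketch of what ‘-u’ does, again on temporary directories. The directory names primary and backup and the file notes.txt are made up for the demo:

```shell
# Sketch: -u leaves a destination file alone when it is newer than the source.
work=$(mktemp -d)
mkdir -p "$work/primary" "$work/backup"
echo "original" > "$work/primary/notes.txt"
echo "edited on the backup drive" > "$work/backup/notes.txt"

# Make the backup copy a year newer than the copy on the primary drive.
touch -t 202001011200 "$work/primary/notes.txt"
touch -t 202101011200 "$work/backup/notes.txt"

# Without -u this would overwrite the edited file; with -u it is skipped.
rsync -au "$work/primary/" "$work/backup/"

cat "$work/backup/notes.txt"   # still the edited version
# Clean up afterwards with: rm -rf "$work"
```

Keep in mind that ‘-u’ only affects files that exist on both sides. If you combine it with ‘--delete’, files that exist only on the destination are still removed.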

How to sync directories over network

This is where ssh protocol comes into play. I use the following syntax to sync a remote directory with a local directory:

rsync -avzP --delete -e ssh user@server_IP:source-directory /destination_directory_on_local_machine/

Example:

rsync -avzP --delete -e ssh user@server_IP:/home/swapnil/backup/ /media/internal/local_backup/

To sync a local directory with a remote directory the syntax becomes:

rsync -avzP --delete -e ssh source_directory user@server_IP:path_destination_directory

Example:

rsync -avzP --delete -e ssh /home/swapnil/Downloads/ user@server_IP:/home/swapnil/Downloads/

Automate backup

You may want to automate backup so you don’t have to add it to your calendar. It will actually be easier to automate the backup than to create a calendar entry.

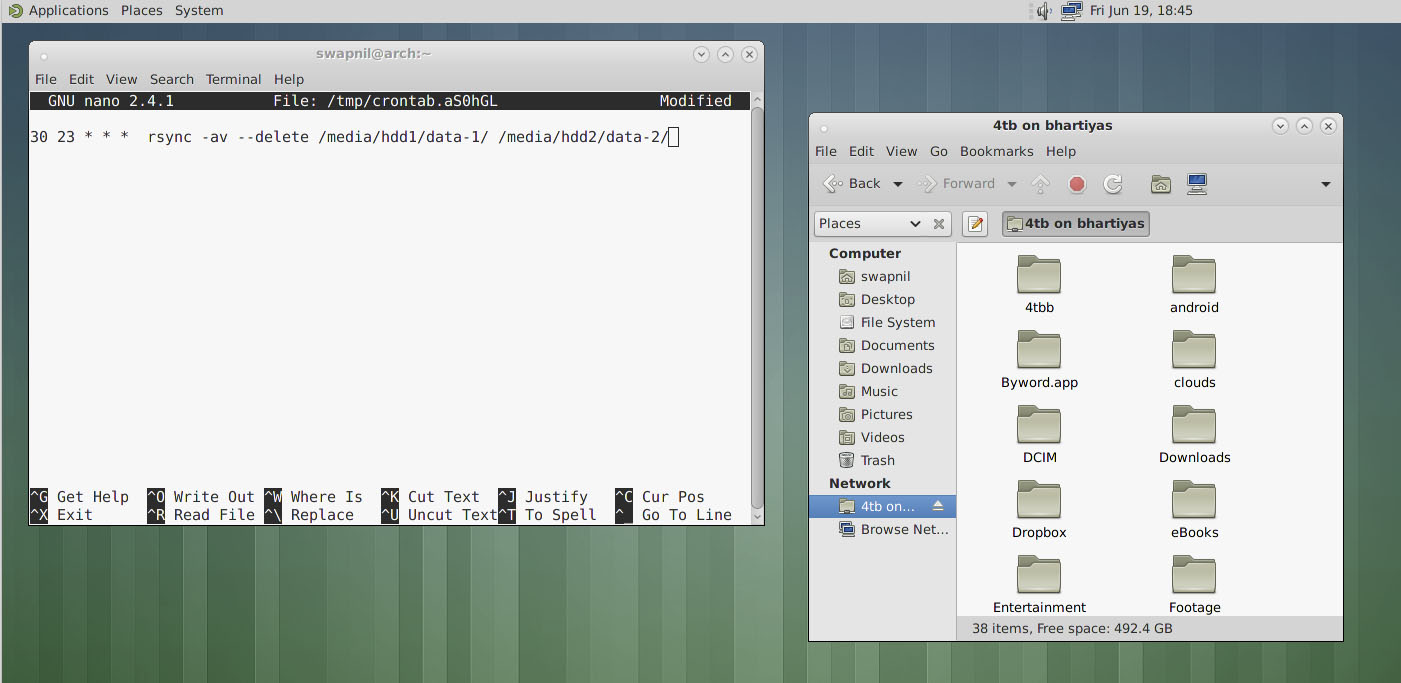

I tend to keep things simple and easy, so I can show new users how easy it is to do such things under Linux. The solution that I use for automation is ‘crontab’. It’s simple, lightweight and does the job well. With Crontab I can configure when I want to run the rsync command: daily, weekly, monthly, or more than once a day (which I won’t do). I have configured mine to run at 11:30 p.m. every day after work so all of the files that I worked on throughout the day get synced.

Depending on your distro you may have to install a package to get crontab on your system. If you are on Arch Linux, for example, you can install ‘cronie’. You can choose the default editor for crontab; I prefer nano. Run this command and replace ‘nano’ with the desired editor.

export EDITOR=nano

Now run ‘crontab -e’ to create cron jobs. It will open an empty file where you can configure the command that you want to run at a desired time. (See image, above.)

The format of crontab is simple; it has five fields followed by the command:

m h dom mon dow command

Here m stands for minutes (0-59); h for hour (0-23); dom for day of the month (1-31); mon for month (1-12); and dow for day of the week (0-6, where 0 is Sunday). The fields are numerical, and you use ‘*’ as a wildcard meaning ‘every’ in the fields that you don’t want to restrict.

I run the command every day at 11:30 p.m., so the format will be

30 23 * * * rsync -av --delete /media/hdd1/data-1/ /media/hdd2/data-2/

If you want to run rsync only once a month then you can do something like this:

30 23 1 * * rsync -av --delete /media/hdd1/data-1/ /media/hdd2/data-2/

Now it will run at 11:30 p.m. on the 1st of every month. If you don’t want it to run every month, then you can configure it to run only once a year:

30 23 1 6 * rsync -av --delete /media/hdd1/data-1/ /media/hdd2/data-2/

That will make it run every year on June 1. If you want to run more than one command, then create a new line for every command. Rsync is not the only command you can automate with ‘crontab’; you can run ‘any’ command using it.

As you can see, both tools, rsync and crontab, are extremely simple and lightweight yet extremely powerful and highly configurable. Linux doesn’t have to be complicated!

Keep one copy remotely

One risk of keeping all your data on local machines is that in case of a natural disaster, fire or flood, your local system will be damaged and you will lose your data. It’s recommended to keep another copy of your data on a machine located elsewhere. I have one server at my in-laws’ place; I call it ‘Server In Law’.

The bad news is that many ISPs don’t offer a static IP address and may block forwarded ports, so it’s not always possible to ssh directly between two machines and sync data. That’s where TeamViewer and an SSH tunnel come into play. I log into my Server In Law, open a temporary ssh tunnel and then rsync the files.

Since these are GUI-based tools, they are beyond the scope of this CLI-focused article. I may cover them in the future.

Friends with backup are friends indeed

My friends have created a pool of backup servers where they host each other’s hard drives at their place and keep each other’s data distributed. If there are some extremely private files you don’t want your friends to see, you can always encrypt them.