In this tutorial, we’re going to look at managing Amazon S3 from your server-side code. S3 (which stands for Simple Storage Service) is a part of Amazon Web Services. It’s essentially a place to store files. Amazon stores the files in its massive data centers, which are distributed around the world. Your files are automatically replicated to help ensure that they don’t get lost and are always accessible. You can keep the files private so that only you can download them, or make them public so that anyone can access them.

In terms of software development, S3 provides a nice place to store your files without bogging down your own servers. The price is extremely low (pennies per GB of storage and transfer), which makes it a cheap way to take load off your own servers. There are APIs for adding S3 access both to applications that run on web servers and to mobile apps.

To access S3, you make HTTP calls to a RESTful service. Usually you make those calls from your server, not from the browser. That’s not to say you can’t access S3 from the browser; however, doing so raises security issues. To access AWS, you need a secret key. You don’t want to pass this key around, and if you access AWS directly from the browser, there’s really no way to keep the key hidden; anyone who finds it can start using your S3 account without your permission. Instead, you’ll usually want to access AWS from your server, where you keep your secret key, and then provide a browser interface into your server, not directly into AWS.

With that said, we need to choose a server-side language. My favorite language this year is node.js, so that’s what I’ll use. However, the concepts apply to other languages.
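To make the server-in-the-middle pattern from the previous paragraphs concrete, here is a minimal node.js sketch. It assumes `s3` is an S3 client created on your server with your keys (such as the `new aws.S3()` object created later in this tutorial), and `handleUpload` is a hypothetical function your own web framework would call after parsing the browser’s request. The browser only ever talks to this handler, never to AWS, so the secret key stays on the server.

```javascript
// Hypothetical server-side handler: the browser sends a file name and
// contents to YOUR server; only the server holds AWS credentials.
// `s3` is assumed to be an S3 client (e.g. new aws.S3()) or any object
// with the same putObject(params, callback) shape.
function handleUpload(s3, bucket, fileName, body, callback) {
  // Accept only simple file names (letters, digits, '.', '_', '-'),
  // so a browser cannot choose arbitrary keys such as 'a/b/c'.
  if (!/^[A-Za-z0-9._-]+$/.test(fileName)) {
    return callback(new Error('Invalid file name'));
  }
  var params = { Bucket: bucket, Key: 'uploads/' + fileName, Body: body };
  s3.putObject(params, function (err) {
    if (err) {
      // Keep AWS details on the server; the browser gets a generic message.
      return callback(new Error('Upload failed'));
    }
    callback(null, { stored: params.Key });
  });
}
```

The design choice here is that validation and error wording happen on the server, so the browser can neither pick arbitrary keys nor learn anything about your AWS account from error messages.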

Generally, when I learn a new RESTful API, I first try to learn the direct HTTP interface, and only after that do I decide whether to use an SDK. The idea is that the RESTful API itself is often a bit cumbersome, so the developers of the API provide SDKs in different languages. These SDKs provide classes and functions that simplify the use of the API. Sometimes, however, the API itself is pretty easy to use, and I don’t even bother with the SDK. Other times, the SDK really does help.

AWS has an API that’s not too difficult to use directly, except for one part, in my opinion: the security. To call into the AWS API, you need to sign each HTTP call. The signature is essentially a keyed hash (an HMAC) computed over the details of the request, such as the parameters you’re passing, using your secret key. Because only you and Amazon know that key, Amazon can verify that the call likely came from the person or application it claims to come from. Along with those parameters, you also include a timestamp with your call. AWS checks that timestamp, and if more than 15 minutes have passed since it, AWS returns an error. In other words, API calls expire: once you construct a call, you need to send it quickly, or AWS won’t accept it.
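To illustrate the two ideas in that paragraph, keyed-hash signing and expiring timestamps, here is a deliberately simplified sketch using node’s built-in crypto module. It is not AWS Signature Version 4, which canonicalizes the request and derives a signing key in several steps; `signRequest`, `verifyRequest`, and the string-to-sign layout are hypothetical.

```javascript
const crypto = require('crypto');

// Simplified illustration only: NOT the real AWS Signature Version 4.
const MAX_AGE_MS = 15 * 60 * 1000; // the 15-minute window described above

// Hypothetical client side: HMAC-SHA256 over a few request details.
function signRequest(secretKey, method, path, timestamp) {
  const stringToSign = [method, path, timestamp].join('\n');
  return crypto.createHmac('sha256', secretKey).update(stringToSign).digest('hex');
}

// Hypothetical server side: reject stale timestamps, then recompute and compare.
function verifyRequest(secretKey, method, path, timestamp, signature, nowMs) {
  const age = Math.abs(nowMs - Date.parse(timestamp));
  if (!(age <= MAX_AGE_MS)) {
    return false; // expired, too far in the future, or unparseable timestamp
  }
  const expected = Buffer.from(signRequest(secretKey, method, path, timestamp), 'hex');
  const given = Buffer.from(signature, 'hex');
  // timingSafeEqual requires equal lengths, so check that first.
  return given.length === expected.length && crypto.timingSafeEqual(given, expected);
}
```

In use, the client would call something like `signRequest('123456', 'PUT', '/s3demo2015', new Date().toISOString())`, and the server, which knows the same secret, would call `verifyRequest` with the received values and its own clock. Anyone who changes the method, path, or timestamp without the key produces a signature that no longer matches.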

Computing that signature is a bit complex without some helper code, and that’s one reason I prefer to use the SDKs when working with Amazon rather than making direct HTTP calls: the SDKs sign your calls for you. So although I usually like to master the HTTP calls directly and adopt the SDK only if I find it helps, in this case I’m skipping right to the SDK.

Using the AWS SDK

Let’s get started. Create a directory to hold a test app in node.js. Then, from that directory, add the AWS SDK by typing:

```shell
npm install aws-sdk
```

To use the SDK, you need to supply your credentials. You can either store them in a separate configuration file or put them directly in your code. Recently a security expert I know got very upset about programmers storing keys right in their code; however, since this is just a test, I’m going to do that anyway. The aws-sdk documentation shows you how to store the credentials in a separate file.
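If you’d rather keep the keys out of the source file, one common alternative is environment variables. Version 2 of the SDK (the `aws-sdk` package installed above) reads AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY from the environment on its own; the minimal sketch below just makes the lookup explicit so that a missing key fails loudly. `buildAwsConfig` is a hypothetical helper, not part of the SDK.

```javascript
// Hypothetical helper: build the object passed to aws.config.update()
// from environment variables instead of hard-coded strings.
function buildAwsConfig(env, region) {
  const accessKeyId = env.AWS_ACCESS_KEY_ID;
  const secretAccessKey = env.AWS_SECRET_ACCESS_KEY;
  if (!accessKeyId || !secretAccessKey) {
    throw new Error('Set AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY first');
  }
  return { accessKeyId: accessKeyId, secretAccessKey: secretAccessKey, region: region };
}

// In test1.js this would replace the hard-coded keys:
//   var aws = require('aws-sdk');
//   aws.config.update(buildAwsConfig(process.env, 'us-west-2'));
```

You would then run the script with the variables set, for example `AWS_ACCESS_KEY_ID=abcdef AWS_SECRET_ACCESS_KEY=123456 node test1.js` in a Unix-style shell.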

To get the credentials, click your name in the upper-right corner of the AWS console, then click Security Credentials in the drop-down menu. From here you can manage and create your security keys. Expand the Access Keys section and obtain or create both an Access Key ID and a Secret Access Key.

Using your favorite text editor, create a file called test1.js. In this code, we’re going to create an S3 bucket. S3 bucket names must be unique across all users. Since I already created a bucket called s3demo2015, you won’t be able to create one with that name. When you substitute a name of your own, it may turn out to be taken as well, in which case you’ll see the error code that comes back. Start by adding this code to your file:

```javascript
var aws = require('aws-sdk');

aws.config.update({
    accessKeyId: 'abcdef',
    secretAccessKey: '123456',
    region: 'us-west-2'
});
```

Replace abcdef with your access key ID and 123456 with your secret access key.

The object returned by the require('aws-sdk') call contains several functions that serve as constructors for different AWS services. One of them is for S3. Add the following line to the end of your file to call the S3 constructor and save the new object in a variable called s3:

```javascript
var s3 = new aws.S3();
```

And then add this line so we can inspect the members of our new s3 object:

```javascript
console.log(s3);
```

Now run what we have so far:

```shell
node test1.js
```

You should see output that includes several members containing data such as your credentials and the endpoints. Because this is a RESTful interface, it works through URLs known as endpoints: the URLs your app calls to manipulate your S3 buckets.

Since we don’t really need this output to use S3, go ahead and remove the console.log line.

Create a bucket

Next we’re going to add code to create a bucket. You could use the provided endpoints directly and make the HTTP requests yourself, but since the SDK provides wrapper functions, we’ll use those.

The function for creating a bucket is simply called createBucket. (This function is part of the prototype for the aws.S3 constructor, which is why it didn’t show up in the console.log output.) Because node.js is asynchronous, you call createBucket with your other parameters plus a callback function, which runs once AWS responds. Add the following code to your source file, but don’t change the name s3demo2015 to your own bucket name yet; this way you can see the error you’ll get if you try to create a bucket that already exists:

```javascript
s3.createBucket({Bucket: 's3demo2015'}, function(err, resp) {
    if (err) {
        console.log(err);
    }
});
```
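An aside, not part of test1.js: the sketch below shows why the result arrives in a callback rather than as a return value. `fakeS3` is a hypothetical stand-in, not the real SDK; it mimics only the `createBucket(params, callback)` shape and answers on a later turn of the event loop, as a real network call would. Its error code, BucketAlreadyExists, is the code S3 uses when someone else already owns the name.

```javascript
// Hypothetical stand-in for an S3 client: same createBucket(params, callback)
// shape, but no network. It replies asynchronously via setImmediate.
var fakeS3 = {
  takenNames: ['s3demo2015'],
  createBucket: function (params, callback) {
    var taken = this.takenNames.indexOf(params.Bucket) !== -1;
    setImmediate(function () {
      if (taken) {
        var err = new Error('The requested bucket name is not available.');
        err.code = 'BucketAlreadyExists';
        return callback(err);
      }
      callback(null, { Location: 'fake-location-for-' + params.Bucket });
    });
  }
};

var events = [];
fakeS3.createBucket({ Bucket: 's3demo2015' }, function (err, resp) {
  events.push(err ? 'callback: ' + err.code : 'callback: created');
});
// This line runs before the callback: createBucket returns immediately.
events.push('after createBucket call');
```

The same ordering applies to the real createBucket: any code that needs the response has to live inside (or be called from) the callback.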

Now run what you have, again with:

```shell
node test1.js
```

You’ll see the error printed out. We’re just writing out the raw object; in an actual app, you’ll probably want to return only the error message (err.message) to your user and write the full err object to your error log files. (You do keep log files, right?)

Also, if you put in the wrong keys, instead of getting a message about the bucket already existing, you’ll see an error about the security key being wrong.
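A minimal sketch of the split suggested above: the full error goes to your log, and only err.message goes back to the user. `reportS3Error` is a hypothetical helper, and `log` can be any function that writes a line to your error log. Errors from the v2 SDK usually carry `code`, `statusCode`, and `requestId` fields; with a bad access key ID you would typically see the code InvalidAccessKeyId, and with a bad secret key SignatureDoesNotMatch.

```javascript
// Hypothetical helper: log everything, return only the user-facing message.
// `log` is any function that writes one line to your error log.
function reportS3Error(err, log) {
  // code/statusCode/requestId are set on SDK errors; they may be undefined
  // for errors that didn't come from AWS (JSON.stringify then omits them).
  log(JSON.stringify({
    time: new Date().toISOString(),
    code: err.code,
    statusCode: err.statusCode,
    requestId: err.requestId,
    message: err.message,
    stack: err.stack
  }));
  // Only the human-readable message goes back to the user.
  return err.message;
}
```

Inside the createBucket callback you might then write `if (err) { var msg = reportS3Error(err, console.error); /* send msg to the user */ return; }`.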

Now change the s3demo2015 string to a name you want to actually create, and update the code to print out the response:

```javascript
s3.createBucket({Bucket: 's3demo2015'}, function(err, resp) {
    if (err) {
        console.log(err);
        return;
    }
    console.log(resp);
});
```

Run it again, and if you found a unique name, you’ll get back a JavaScript object with a single member:

{ Location: 'http://s3demo2015.s3.

This object contains the URL for your newly created bucket. Save this URL, because you’ll be using it later on in this tutorial.
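Files in the bucket are addressed by appending their key (file name) to the bucket URL. Here is a minimal sketch of building such a URL, assuming the virtual-hosted style (https://bucketname.s3.amazonaws.com/key) used later in this tutorial, with an optional regional form (bucketname.s3.region.amazonaws.com). `objectUrl` is a hypothetical helper.

```javascript
// Hypothetical helper: build the public URL of an object from its bucket and key.
function objectUrl(bucket, key, region) {
  var host = region
    ? bucket + '.s3.' + region + '.amazonaws.com' // regional endpoint
    : bucket + '.s3.amazonaws.com';               // global endpoint
  // Percent-encode each path segment, keeping '/' as the separator.
  var path = key.split('/').map(encodeURIComponent).join('/');
  return 'https://' + host + '/' + path;
}
```

Encoding each segment separately matters once keys contain spaces or other special characters, while slashes in a key like `docs/page.html` stay readable.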

Comment out the bucket code

Now, we could put additional code inside the callback that uses the bucket we created. But from a practical standpoint, we might not want that. In your own apps, you’ll probably create buckets only occasionally and mostly use buckets you’ve already created. So we’re going to comment out the bucket-creation code, keeping it so we can find it later as an example, but not running it again here:

```javascript
var aws = require('aws-sdk');

aws.config.update({
    accessKeyId: 'abcdef',
    secretAccessKey: '123456',
    region: 'us-west-2'
});

var s3 = new aws.S3();

/*s3.createBucket({Bucket: 's3demo2015'}, function(err, resp) {
    if (err) {
        console.log(err);
        return;
    }
    console.log(resp);
    // Print each member name of the response (var avoids an implicit global).
    for (var name in resp) {
        console.log(name);
    }
});*/
```

Add code that uses the bucket

Now we’ll add code that simply uses the bucket we created. S3 isn’t particularly complex; it’s mainly a storage system, providing ways to save files, read files, delete files, and list the files in a bucket. You can also list buckets and delete buckets. There are security options as well, such as whether a file is accessible to everyone. You can find the whole list of operations in the S3 section of the AWS SDK for JavaScript API reference.
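As an example of one of those other operations, here is a minimal sketch that lists the keys in a bucket with the SDK’s listObjects function. `listKeys` is a hypothetical helper, and `s3` is assumed to be the client created earlier. A single listObjects call returns at most 1,000 keys, and this sketch does not page through larger buckets. Operations such as getObject, deleteObject, listBuckets, and deleteBucket follow the same (params, callback) pattern.

```javascript
// Hypothetical helper: call back with an array of the keys (file names) in a bucket.
// `s3` is assumed to be an aws.S3 instance or any object with the same
// listObjects(params, callback) shape. Ignores paging beyond the first 1,000 keys.
function listKeys(s3, bucket, callback) {
  s3.listObjects({ Bucket: bucket }, function (err, data) {
    if (err) {
      return callback(err);
    }
    var keys = (data.Contents || []).map(function (obj) { return obj.Key; });
    callback(null, keys);
  });
}
```

Usage: `listKeys(s3, 's3demo2015', function (err, keys) { if (err) return console.log(err); console.log(keys); });`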

Let’s add code that will upload a file and make the file accessible to everyone. In S3 parlance, files are objects, and the function we use for uploading is putObject. Look at the putObject documentation for the options available to this function. Options are given as members of an object, which you then pass as a parameter to the function. Members of an object in JavaScript don’t have an order you should rely on (engines do tend to keep the order in which they were created, but don’t count on it), so you can provide these members in any order you like. The first two listed in the documentation are required: the name of the bucket and the name to assign to the file. The file name is given as Key:

```javascript
{
    Bucket: 'bucketname',
    Key: 'filename'
}
```

You also include in this object the security information if you want to make the file public. By default, files can only be read by you. You can make files readable or writable by the public; typically you won’t want to make them writable by the public, but we’ll make this file readable by the public. The member of the parameter object that specifies the security is called ACL, which stands for Access Control List (as opposed to the ligament in our knee that we tear). The ACL is a string specifying the privileges; you can see the options in the documentation. The one we want is 'public-read'. (Buckets created more recently have ACLs disabled and public access blocked by default, so you may need to change those bucket settings before S3 accepts a public-read ACL.)

To really get the most out of S3, I encourage you to look through all the options for putObject; this will give you a good understanding of everything you can do with S3. Along with that, read over the general S3 documentation, not just the SDK documentation. One option I’ve used is StorageClass. Sometimes I just need to share a large file with a few people and don’t need the full durability that S3 normally provides. To save money, I’ll save the file with StorageClass set to 'REDUCED_REDUNDANCY'. The file is stored with less redundancy across the AWS cloud and costs less to store. (AWS now recommends against this storage class because S3 Standard is generally as cheap or cheaper, so check current pricing before relying on it.) All of these options are configured through this parameter object.
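Pulling the last few paragraphs together, here is a minimal sketch of a helper that assembles the putObject parameter object. `buildPutParams` and its `options` argument are hypothetical; Bucket, Key, Body, ACL, and StorageClass are the parameter names the SDK uses.

```javascript
// Hypothetical helper that assembles the parameter object for s3.putObject.
// options.publicRead   -> adds ACL: 'public-read'
// options.storageClass -> adds StorageClass (e.g. 'STANDARD')
function buildPutParams(bucket, key, body, options) {
  options = options || {};
  // Bucket and Key are the two required members.
  if (!bucket || !key) {
    throw new Error('Bucket and Key are required');
  }
  var params = { Bucket: bucket, Key: key, Body: body };
  if (options.publicRead) {
    params.ACL = 'public-read'; // anyone may read; writes still need your keys
  }
  if (options.storageClass) {
    params.StorageClass = options.storageClass;
  }
  return params;
}
```

Usage: `s3.putObject(buildPutParams('s3demo2015', 'myfile.html', html, { publicRead: true }), callback);`. Keeping the defaults private means a file only becomes public when you ask for it explicitly.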

Upload a file to S3

Now, let’s do it. We’ll upload a file to S3. The file we upload in this case will just be some HTML stored in a text string. That way we can easily view the file in a browser once it’s uploaded. Here’s the code to add after the commented-out code:

```javascript
var html = '<html><body><h1>Welcome to S3</h1></body></html>';

s3.putObject({
    Bucket: 's3demo2015',
    Key: 'myfile.html',
    ACL: 'public-read',
    ContentType: 'text/html',
    Body: html
}, function(err, resp) {
    if (err) {
        console.log(err);
        return;
    }
    console.log(resp);
});
```

Notice there’s one additional parameter that I included; I added it after running the test for this article, and I’ll explain it in a moment.

If all goes well, you should get back an object similar to this:

{ ETag: '"

The ETag is an identifier that can be used to determine whether the file has changed. Web browsers typically use it to decide whether a file has changed: if not, they display the file from their cache; if it has, they re-download it.

To make that decision, the browser stores, along with the cached file, the ETag it received with it (typically a long hexadecimal string). When it needs the file again, it sends that ETag to the web server in a conditional request. If the file’s current ETag is different, the server sends back the new file and its new ETag, and the browser knows the file has changed. If the ETag is the same, the server replies that the file hasn’t changed without resending it, and the browser uses what’s in its cache. This speeds up the web in general and minimizes the amount of bandwidth used.
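Two small sketches of those ETag ideas. The first assumes a file uploaded with a single putObject call and without KMS encryption; for such objects, S3’s ETag is the MD5 hex digest of the content, so you can compute the expected ETag locally (multipart uploads and some encryption modes produce different ETags, so don’t rely on this elsewhere). The second mimics the server side of a conditional request: if the browser’s stored ETag, sent in an If-None-Match header, matches the current one, the answer is 304 Not Modified with no body. All function names are hypothetical.

```javascript
const crypto = require('crypto');

// ETag of a single-part, non-KMS upload: MD5 hex digest of the body.
function localEtag(body) {
  return crypto.createHash('md5').update(body).digest('hex');
}

// S3 returns ETags wrapped in double quotes, e.g. '"d41d8cd9..."'.
function stripQuotes(etag) {
  return etag.replace(/^"|"$/g, '');
}

// Server-side decision for a conditional GET (simplified: ignores weak W/
// ETags and comma-separated lists). ifNoneMatch is the browser's cached
// ETag, or undefined on a first visit.
function conditionalGet(ifNoneMatch, currentEtag) {
  if (ifNoneMatch && stripQuotes(ifNoneMatch) === stripQuotes(currentEtag)) {
    return { status: 304 };                  // unchanged: browser uses its cache
  }
  return { status: 200, etag: currentEtag }; // changed or first request: send file
}
```

For example, comparing `localEtag(html)` with the ETag that putObject returned is a quick way to confirm that the upload stored exactly the bytes you sent.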

Read the uploaded file

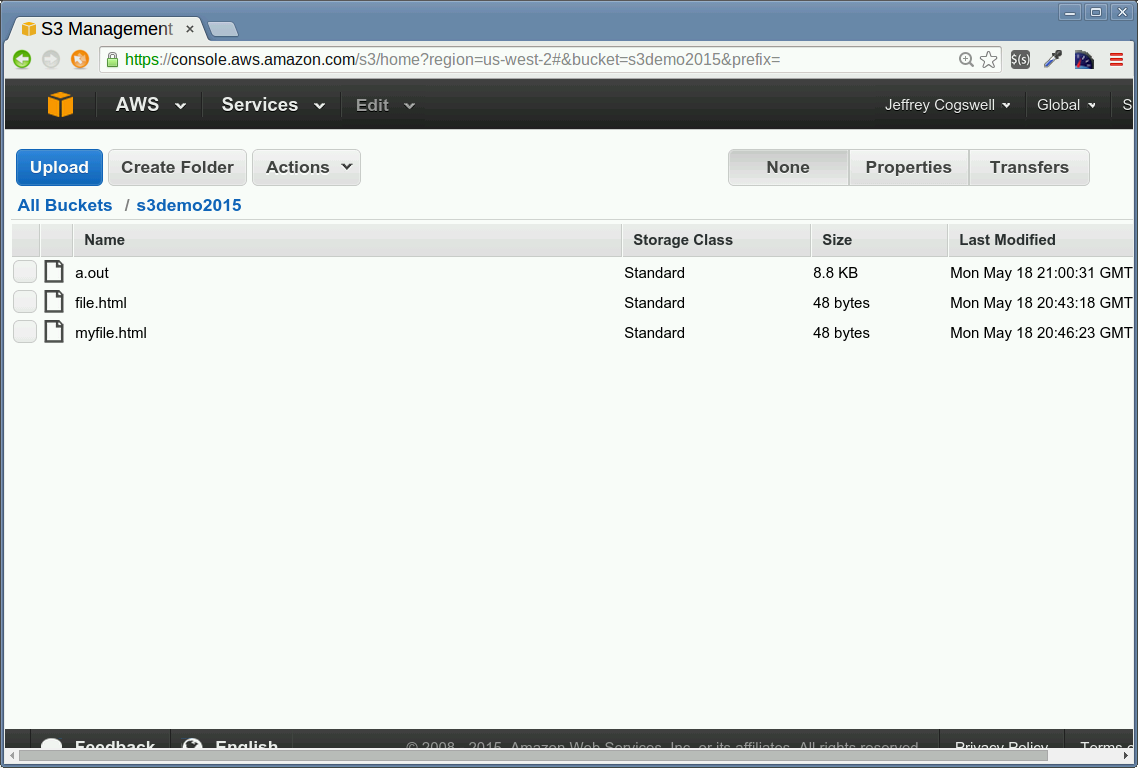

Now that the file is uploaded, you can read it. Just to be sure, you can go over to the AWS console and look at the bucket. In the image above, you can see the file I uploaded with the preceding code, as well as a couple of other files I created while preparing this article, including one I mention later.

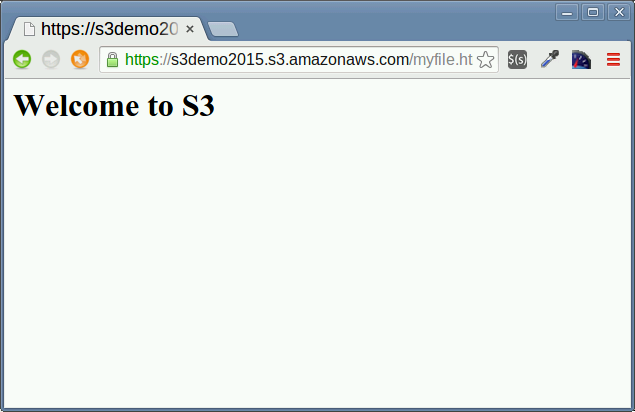

Now let’s look at the file itself. This is where we need the URL that we got back when we created the bucket. In our example we named our file myfile.html. So let’s grab it by combining the URL and the filename. Open up a new browser tab and put this in your address bar, replacing the s3demo2015 with the name of your bucket:

https://s3demo2015.s3.amazonaws.com/myfile.html

(You can also get this URL by clicking on the file in the AWS console and then clicking the Properties button.) You’ll see your HTML-formatted file, as in the following image:

Use the right content type

Now for that final parameter I had to add after I first created this example. When I first made the example and pointed my browser to the URL, the browser downloaded the file instead of displaying the HTML. Since I’ve been doing web development for a long time, I knew what that meant: I had the content type set wrong. The content type tells the browser what type of file it’s receiving so that it knows what to do with it. I checked the documentation and saw that the correct member of the parameter object is called ContentType, so I set it to the normal content type for HTML, 'text/html'.

The content type is especially important if you’re uploading CSS and JavaScript files. If you don’t set the content type correctly and then load the CSS or JavaScript from an HTML file, the browser may refuse to treat the files as CSS and JavaScript, respectively. So always make sure you set the correct content type.
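Following that advice, here is a minimal sketch of choosing ContentType from a file’s extension before calling putObject. The lookup table and `contentTypeFor` are hypothetical and deliberately small; text/javascript is the type current standards recommend for JavaScript (older code often uses application/javascript, which browsers also accept).

```javascript
const path = require('path');

// Hypothetical extension-to-type table; extend it for the files you upload.
const CONTENT_TYPES = {
  '.html': 'text/html',
  '.css': 'text/css',
  '.js': 'text/javascript',
  '.json': 'application/json',
  '.png': 'image/png'
};

// Returns the ContentType to pass to putObject for a given file name.
function contentTypeFor(fileName) {
  const ext = path.extname(fileName).toLowerCase();
  // Unknown extensions get the generic binary type, which browsers typically download.
  return CONTENT_TYPES[ext] || 'application/octet-stream';
}
```

Usage: `s3.putObject({ Bucket: 's3demo2015', Key: 'style.css', Body: css, ContentType: contentTypeFor('style.css') }, callback);`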

Also note that we’re not limited to text files; we can save binary files as well. If we were calling the RESTful API manually, this would get a little tricky, but Amazon did a good job creating the SDK, and it uploads our files correctly even if they’re binary. That means we can read a binary file through node’s filesystem (fs) module, get back a Buffer containing the raw bytes, and pass that Buffer into the putObject function as the Body, and it will be uploaded correctly.

Just to be sure, I compiled a small C++ program that writes out the number 1. The compiler created a binary file called a.out. I modified the preceding code to read in that file and upload it to S3. I then used wget to pull down the uploaded file, and it matched the original; I was also able to execute it, showing that it had indeed been uploaded as a binary file rather than corrupted by some conversion to text.
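Here is a minimal sketch of that kind of binary upload, assuming `s3` is the client created earlier (or anything with the same putObject(params, callback) shape). `uploadFile` is a hypothetical helper; the key point is that fs.readFile hands back a Buffer of raw bytes, which goes into Body unchanged. Setting ContentType to application/octet-stream, the generic binary type, is a choice made in this sketch rather than something the original test required.

```javascript
const fs = require('fs');

// Hypothetical helper: read a local file (e.g. a.out) and upload its raw bytes.
function uploadFile(s3, bucket, localPath, key, callback) {
  // Without an encoding argument, readFile returns a Buffer, not a string,
  // so no text conversion can corrupt the binary data.
  fs.readFile(localPath, function (err, data) {
    if (err) {
      return callback(err);
    }
    s3.putObject({
      Bucket: bucket,
      Key: key,
      Body: data,
      ContentType: 'application/octet-stream'
    }, callback);
  });
}
```

Usage: `uploadFile(s3, 's3demo2015', './a.out', 'a.out', function (err, resp) { console.log(err || resp); });`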

Conclusion

S3 is quite easy to use programmatically. The key is first knowing how S3 works, including how to manage your files through buckets and how to control the security of the files. Then find the SDK for your language of choice, install it, and practice creating buckets and uploading files. JavaScript is asynchronous by design (it needed to be, so that web pages wouldn’t freeze up during network calls such as AJAX requests), and that design carries forward to node. Other languages use different approaches, but the SDK calls will be similar.

Then once you master the S3 SDK, you’ll be ready to add S3 storage to your apps. And after that, you can move on to other AWS services. Want to explore more AWS services? Share your thoughts in the comments.