Its name is kind of unique for something that shows system statistics: top. It is a part of the procps package, a set of Linux utilities that provide system information. Besides top, procps also includes free, vmstat, ps, and many other tools.

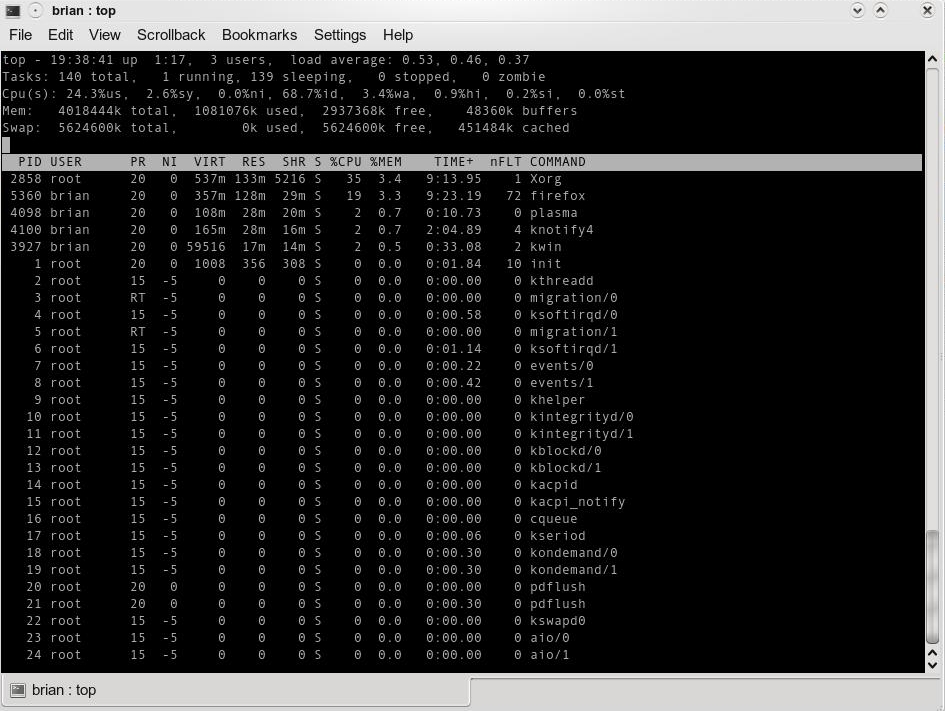

Top gives you a snapshot of a system’s situation, e.g., free physical memory, number of running tasks, and the percentage of CPU time used by each process, all in a single view. So it’s like using ps, free, and uptime at the same time. Top gets most of its information from several files under the /proc directory. You might already be aware that this directory provides users with a broad range of system-wide statistics, but thanks to top, we can summarize most of them inside a single organized window.

Furthermore, with top you can do things like:

- Sort processes based on CPU time usage, memory usage, total runtime, etc.

- Switch to multi-window mode. In this mode, you’re given up to four windows, and each window can be assigned different settings (sorting, coloring, displayed fields).

- Send a signal to a process by specifying its PID and the signal number.

To learn how to use top more effectively, please refer to this article.

However, top is not the ideal tool for gathering system statistics over long periods, say, a week or a month. sadc (system activity data collector) is better suited for this. sadc writes a log of system statistics, and sar then generates reports and summaries from that log. Some people use top in batch mode as an alternative, but it’s not as effective as sadc because:

- sadc records the data in binary format, so the log takes less space

- in my own measurements with the “time” command, sadc runs faster than top, so the collector itself adds little overhead

That’s enough of a reintroduction to top. Let’s begin by understanding the meaning of each part of top’s “dashboard.” Also, throughout this article, keep in mind what “high” or “low” means. There is no absolute threshold that labels a number as high or low. I call a value high or low when it is noticeably above or below the average you usually see in that field on your own system.

Processor Statistics

On the uppermost line, shown in Figure 1, there are (from left to right): current time (hour:minute:second), uptime (hour:minute), number of active user IDs, and load average.
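A minimal sketch of where the uptime figure comes from, assuming a Linux system with /proc mounted: /proc/uptime holds the seconds since boot (followed by cumulative idle time), and the helper below formats that value roughly the way top’s header does. The function names are illustrative and not part of procps.

```python
import os


def read_uptime_seconds(path="/proc/uptime"):
    # The first number is seconds since boot; the second is cumulative idle time.
    with open(path) as f:
        return float(f.read().split()[0])


def format_uptime(seconds):
    # Roughly mimics top's "N days, H:MM" style; real top output may differ.
    total_minutes = int(seconds) // 60
    days, rem = divmod(total_minutes, 24 * 60)
    hours, minutes = divmod(rem, 60)
    clock = f"{hours}:{minutes:02d}"
    if days:
        return f"{days} day{'s' if days != 1 else ''}, {clock}"
    return clock


if __name__ == "__main__" and os.path.exists("/proc/uptime"):
    print("up", format_uptime(read_uptime_seconds()))
```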

The first two are self-explanatory. How about the number of active user IDs? One might think it’s the number of currently logged-in users, whether they logged in locally or remotely (via ssh, for example). Not exactly. It also counts the pseudo-terminals opened under a given user ID. Let’s say you log in to GNOME as “joe” and then open two xterm windows; top counts you as three users. Top also increments the number by one to account for the existence of the init process.

Load average represents the number of processes marked as runnable in the run queue (on Linux, tasks in uninterruptible sleep are counted as well). From left to right, the three numbers are the one-minute, five-minute, and 15-minute load averages. They are the same three numbers you can see in /proc/loadavg. A detailed explanation can be found in this Linux Journal article. Bear in mind that a process in a runnable state isn’t necessarily being executed by the processor. Being runnable is a mark stating, “I (the task) am ready to be executed, but it’s up to the scheduler to decide when to pick me up.”

For example, if you have a single dual-core processor and the one-minute load average reads “2,” then on average each core is executing one process. If it reads “4,” then on average each core is executing one process while another one waits. The actual situation might differ, since the load average is an exponentially damped moving average over each interval rather than a count taken at any given moment.
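The load average discussion above can be checked with a short sketch, assuming Linux and Python 3: it parses /proc/loadavg (three averages, then runnable/total scheduling entities, then the most recently assigned PID) and divides each average by the number of CPUs, so a per-CPU value above 1.0 suggests tasks are waiting. os.cpu_count() counts logical CPUs, which is an assumption about what “core” should mean here.

```python
import os


def parse_loadavg(text):
    # Example content: "0.20 0.18 0.12 1/80 11206"
    fields = text.split()
    averages = tuple(float(x) for x in fields[:3])        # 1, 5, 15 minutes
    runnable, total = (int(x) for x in fields[3].split("/"))
    last_pid = int(fields[4])
    return averages, runnable, total, last_pid


def per_cpu(averages, ncpus):
    # Normalize each average by CPU count; above 1.0 means work is queuing.
    return tuple(round(a / ncpus, 2) for a in averages)


if __name__ == "__main__" and os.path.exists("/proc/loadavg"):
    with open("/proc/loadavg") as f:
        averages, runnable, total, _ = parse_loadavg(f.read())
    ncpus = os.cpu_count() or 1
    print("load:", averages, "per CPU:", per_cpu(averages, ncpus))
    print(f"{runnable} runnable of {total} scheduling entities")
```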

Moving one line down, we see statistics about the number of tasks in various states:

- Running: as we have discussed before, this represents the number of tasks in a runnable state.

- Sleeping: processes blocked while waiting for an event (e.g., a timeout or I/O completion). This count includes both interruptible sleepers (which can be woken early by a signal) and uninterruptible ones (which ignore signals entirely).

- Stopped: the exact meaning here is “paused,” not “terminated.” In a terminal, you can stop a program by sending it a SIGSTOP signal or, if it’s a foreground task, by pressing Ctrl-Z (which sends SIGTSTP).

- Zombie: “a dead body without a soul” might be a good analogy. After a child task terminates, its resources are cleaned up and the only thing left is a task descriptor holding one very important value: the exit status. The descriptor stays until the parent collects that status with wait(). So if the number of zombies is high, that is a sign that one or more programs have a bug: they fail to reap their terminated child tasks.
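To see these states outside top, one can read the one-letter state code the kernel reports in each /proc/<pid>/stat file. The sketch below assumes Linux and groups the codes roughly as top’s summary line does (R as running; S, D, and newer kernels’ I as sleeping; T and t as stopped; Z as zombie); the exact grouping varies between procps versions.

```python
import os
from collections import Counter

# Assumed mapping from /proc/<pid>/stat state letters to top's summary groups.
GROUPS = {"R": "running", "S": "sleeping", "D": "sleeping", "I": "sleeping",
          "T": "stopped", "t": "stopped", "Z": "zombie"}


def state_of(stat_line):
    # Field 2 (the command name) is in parentheses and may contain spaces or
    # ')' itself, so read the state right after the LAST ')'.
    return stat_line[stat_line.rindex(")") + 1:].split()[0]


def count_states(stat_lines):
    return Counter(GROUPS.get(state_of(line), "other") for line in stat_lines)


def read_stat_lines(proc="/proc"):
    for name in os.listdir(proc):
        if name.isdigit():
            try:
                with open(os.path.join(proc, name, "stat")) as f:
                    yield f.read()
            except OSError:
                continue  # the process exited between listdir() and open()


if __name__ == "__main__" and os.path.isdir("/proc/self"):
    print(dict(count_states(read_stat_lines())))
```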

Now we enter the CPU statistics section. By pressing “1,” you can switch between combined and per-CPU usage. The numbers here are derived from /proc/stat, which holds cumulative counters. Top samples these counters and converts the difference between samples into percentages.
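A sketch of that sampling, assuming Linux and the column order of the aggregate “cpu” line in /proc/stat (user, nice, system, idle, iowait, irq, softirq, steal, in clock ticks). It reads the counters twice and turns the differences into percentages; user, system, irq, and softirq correspond to top’s %us, %sy, %hi, and %si. The guest columns are left out because the kernel already folds guest time into user and nice.

```python
import os
import time

FIELDS = ("user", "nice", "system", "idle", "iowait", "irq", "softirq", "steal")


def parse_cpu_line(line):
    # e.g. "cpu  4705 356 584 3699 23 23 0 0 0 0" -> cumulative ticks per field
    values = [int(v) for v in line.split()[1:1 + len(FIELDS)]]
    values += [0] * (len(FIELDS) - len(values))  # older kernels report fewer columns
    return dict(zip(FIELDS, values))


def percentages(before, after):
    deltas = {k: after[k] - before[k] for k in FIELDS}
    total = sum(deltas.values()) or 1  # avoid dividing by zero on identical samples
    return {k: 100.0 * v / total for k, v in deltas.items()}


def read_cpu_line(path="/proc/stat"):
    with open(path) as f:
        return parse_cpu_line(f.readline())  # first line aggregates all CPUs


if __name__ == "__main__" and os.path.exists("/proc/stat"):
    first = read_cpu_line()
    time.sleep(1.0)
    pct = percentages(first, read_cpu_line())
    print({k: round(v, 1) for k, v in pct.items()})
```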

The two left-most fields, %us and %sy, represent percentage of CPU time spent in user mode and kernel mode, respectively.

What are user and kernel modes? Let’s step back a bit and discuss the Intel x86 platform. In this case, the processor has four privilege levels, numbered 0 to 3, each called a “ring.” Ring 0 is the most privileged domain: any code executed while the CPU is at this level can access anything on the system. Ring 3 is the least-privileged domain.

The Linux kernel, by default, doesn’t use rings 1 or 2, except in special cases such as running under a hypervisor. Ring 0 is also known as kernel mode, because almost all kernel code runs with this privilege. Ring 3, as you might guess, is known as user mode. User space code runs in this ring.

This logical separation primarily serves as protection. User code can do some work on its own, but to touch the underlying layers, such as reading from or writing to the hard disk or allocating several kilobytes of RAM, it needs to ask for kernel services, known as system calls. A system call executes one or more kernel functions on behalf of the calling task. So in terms of privilege, it is the same task, temporarily switching from user mode to kernel mode. When a system call finishes, it returns a value and the task moves back to user mode.
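The user/kernel split is visible from inside a single process too. In this sketch (Python 3 on a Unix-like system), os.times() reports the user and system CPU time the current process has consumed. A pure arithmetic loop should show up mostly as user time, while a loop of tiny write() calls to /dev/null should typically accumulate noticeable system time. The workload sizes are arbitrary, and clock-tick granularity makes small numbers noisy.

```python
import os


def cpu_split(work):
    """Run work() and return the (user, system) CPU seconds it consumed."""
    start = os.times()
    work()
    end = os.times()
    return end.user - start.user, end.system - start.system


def number_crunching(n=2_000_000):
    # Pure computation: stays in user mode.
    total = 0
    for i in range(n):
        total += i * i
    return total


def syscall_heavy(n=200_000):
    # Every os.write() is a system call, so the CPU keeps entering kernel mode.
    fd = os.open(os.devnull, os.O_WRONLY)
    try:
        for _ in range(n):
            os.write(fd, b"x")
    finally:
        os.close(fd)


if __name__ == "__main__":
    for name, work in (("crunching", number_crunching), ("syscalls", syscall_heavy)):
        user, system = cpu_split(work)
        print(f"{name:10s} user={user:.2f}s system={system:.2f}s")
```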

In my opinion, a low or high value in either of these fields is not really a big concern. We can treat them as a way to identify our system’s characteristics. If you find %sy higher than %us most of the time, your machine likely serves as a file server, database server, or something similar. On the other hand, a higher %us means your machine does a lot of number crunching.

High %sy values could also mean some kernel threads are busy doing something. Kernel threads are like normal tasks, but they have a fundamental difference: they operate entirely in kernel mode. Kernel threads are created to do a specific job.

For example, pdflush writes dirty pages in the page cache back to the related storage, and a migration thread does load balancing between CPUs by reassigning some tasks to less-loaded processors. If kernel threads spend too much time in kernel mode, user space tasks will have less of a chance to run. Even with a newer scheduler such as the Completely Fair Scheduler (CFS), which provides better fairness, you may need to read the related documentation to fine-tune the kernel thread in question.
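To see which kernel threads are burning CPU time in kernel mode, one option (a sketch, assuming Linux and a kernel recent enough to set the PF_KTHREAD flag) is to scan /proc/<pid>/stat, keep the tasks whose flags field (field 9) has the PF_KTHREAD bit set, and sort them by stime (field 15, kernel-mode time in clock ticks). The 0x00200000 value of PF_KTHREAD is an assumption worth checking against your kernel’s include/linux/sched.h.

```python
import os

PF_KTHREAD = 0x00200000  # assumed value from include/linux/sched.h; verify for your kernel


def parse_stat(line):
    # Fields after the parenthesized name start at field 3 (state).
    lpar, rpar = line.index("("), line.rindex(")")
    rest = line[rpar + 1:].split()
    return {
        "pid": int(line[:lpar]),
        "comm": line[lpar + 1:rpar],
        "flags": int(rest[6]),    # field 9
        "utime": int(rest[11]),   # field 14, user-mode ticks
        "stime": int(rest[12]),   # field 15, kernel-mode ticks
    }


def busiest_kernel_threads(stat_lines, n=5):
    tasks = (parse_stat(line) for line in stat_lines)
    kthreads = [t for t in tasks if t["flags"] & PF_KTHREAD]
    return sorted(kthreads, key=lambda t: t["stime"], reverse=True)[:n]


def read_stat_lines(proc="/proc"):
    for name in os.listdir(proc):
        if name.isdigit():
            try:
                with open(os.path.join(proc, name, "stat")) as f:
                    yield f.read()
            except OSError:
                continue  # process vanished while we were scanning


if __name__ == "__main__" and os.path.isdir("/proc/self"):
    for t in busiest_kernel_threads(read_stat_lines()):
        print(t["pid"], t["comm"], "stime ticks:", t["stime"])
```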

%hi and %si are statistics related to the CPU servicing interrupts. %hi is the percentage of CPU time spent servicing hard interrupts, while %si is the percentage spent servicing soft interrupts.

Okay, so why are they called “soft” and “hard”? First, a little background on how the Linux kernel handles interrupts: when an interrupt arrives on a certain IRQ line, the Linux kernel quickly acknowledges it by executing the related handler registered in the IDT (Interrupt Descriptor Table). This acknowledgment runs with interrupt delivery disabled on the local processor. This is why it has to be quick; otherwise, pending interrupts pile up and hardware response gets worse.

The solution? Acknowledge fast, prepare some data, and defer the real work until later. This deferred job is called a soft interrupt, because it’s not really an interrupt but is treated like one. Dividing the work of interrupt handling this way makes system response smoother and indirectly gives user space code more time to run. Why? Simple: the interrupt handler runs in kernel mode, just like a system call, and every moment spent there is time taken away from user space code.
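The “acknowledge fast, defer the rest” idea can be illustrated with a toy model. This is plain Python, not kernel code, and the class and method names are hypothetical: hard_irq() stands for the top half, which only stashes the incoming data, and run_softirqs() stands for the deferred bottom half, which does the real processing later, optionally limited by a budget (loosely similar to how the kernel bounds the softirq work done in one pass).

```python
from collections import deque


class ToyInterruptController:
    """Toy model of splitting interrupt handling into hard and soft parts."""

    def __init__(self):
        self.pending = deque()   # raw data queued by the hard handler
        self.processed = []      # results of the deferred work

    def hard_irq(self, packet):
        # Top half: acknowledge and stash the data, nothing more.
        self.pending.append(packet)

    def run_softirqs(self, budget=None):
        # Bottom half: do the real work later, at most `budget` items per pass.
        done = 0
        while self.pending and (budget is None or done < budget):
            packet = self.pending.popleft()
            self.processed.append(packet.upper())  # stand-in for real processing
            done += 1
        return done


if __name__ == "__main__":
    ctrl = ToyInterruptController()
    for p in ("a", "b", "c"):
        ctrl.hard_irq(p)
    print(ctrl.run_softirqs(budget=2), ctrl.processed, list(ctrl.pending))
```

Keeping hard_irq() trivially cheap is the whole point of the split: the expensive part runs later, when interrupts are enabled again.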

A high %hi value means one or more devices are very busy and most likely overloaded. In certain cases, it might mean a device is broken, so it’s worth checking it thoroughly before things get worse. Check /proc/interrupts to pinpoint the source.
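A sketch for pinpointing the source from /proc/interrupts, assuming its usual layout: a header row of CPU names, then one row per IRQ with a label, one counter per CPU, and a free-form description (chip type and device name). Rows such as ERR carry fewer counters, so the parser stops at the first non-numeric field. The counts are cumulative since boot; sampling twice and taking the difference would give rates.

```python
import os


def parse_interrupts(text):
    """Return {irq_label: (total_count, description)}."""
    lines = text.splitlines()
    ncpus = len(lines[0].split())  # header: "CPU0 CPU1 ..."
    table = {}
    for line in lines[1:]:
        label, sep, rest = line.partition(":")
        if not sep:
            continue
        fields = rest.split()
        counts = []
        for field in fields[:ncpus]:
            if not field.isdigit():
                break
            counts.append(int(field))
        table[label.strip()] = (sum(counts), " ".join(fields[len(counts):]))
    return table


def top_sources(text, n=3):
    table = parse_interrupts(text)
    return sorted(table.items(), key=lambda kv: kv[1][0], reverse=True)[:n]


if __name__ == "__main__" and os.path.exists("/proc/interrupts"):
    with open("/proc/interrupts") as f:
        for label, (count, desc) in top_sources(f.read()):
            print(f"IRQ {label}: {count} ({desc})")
```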

Regarding %si, a high number here isn’t always related to a high frequency of real interrupts. Dividing work between the real interrupt handler and soft IRQs is the driver’s job, so a high %si tends to indicate something in the driver that needs optimizing. The easiest way to get that optimization is to upgrade your kernel. If you compile the kernel yourself, use the configuration file of the old kernel as the base config of your new kernel, so you can compare how soft IRQs are handled. Any third-party drivers should also be upgraded. A rough search on Google suggests that network drivers are usually the source of the problem.

Memory Statistics

Let’s move on to the virtual memory statistics. This area is derived from the contents of /proc/meminfo.

What’s “total memory” in your opinion? The size of your RAM? Not really. It actually means the total amount of RAM that your running kernel can map. If you’re not using a kernel with large addressing capability, the maximum addressable memory is around 896 MB, which is 1 GB minus some reserved kernel address space. On 32-bit x86 with the default split, 1 GB of the virtual address space belongs to the kernel, while 3 GB belongs to user space.

To make sure your system can do large addressing, install a kernel package whose name contains strings such as “PAE,” “bigmem,” or “hugemem.” With such a kernel, you can use up to 64 GB of RAM thanks to a feature called PAE (Physical Address Extension).
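The figures in the two paragraphs above follow from powers of two. Assuming the default 3G/1G split on 32-bit x86 and the usual 128 MB that the kernel reserves for vmalloc and similar mappings:

$$2^{32}\ \text{bytes} = 4\ \text{GB} = \underbrace{3\ \text{GB}}_{\text{user space}} + \underbrace{1\ \text{GB}}_{\text{kernel space}}$$

$$1024\ \text{MB} - 128\ \text{MB} = 896\ \text{MB}\ \text{(RAM the kernel can map directly)}$$

$$\text{PAE (36-bit physical addresses): } 2^{36}\ \text{bytes} = 64\ \text{GB}$$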

The two most confusing fields in the memory statistics are “buffers” and “cached.” Cached? What’s cached? First, its placement is misleading: “cached” appears on the “Swap” line, so one might conclude it is a cache of swap or something similar. Not at all. It actually belongs to the “Mem” line, which describes physical memory consumption.

Buffers are in-memory copies of blocks that the kernel read through raw disk access. For example, when the kernel wants to read a file’s content, it first has to retrieve information from the file’s inode. To read this inode, the kernel reads a block from the block device at a certain sector offset. Therefore, the buffer size rises when the kernel reads filesystem metadata such as inodes, directory entries (dentries), or the superblock (when we mount a partition), and when a program accesses a block device directly.

The cached field tells us how much RAM is used to cache the contents of recently read files. How is that different from the buffers field? Recall that buffer size increases when we bypass the filesystem layer, while cached size increases when we go through it. We can also view the cached size as a measure of how aggressively the kernel caches. Both buffers and cache usually grow as we do more read operations, and this is perfectly normal: subsequent reads can be served from the cache, which reduces read latency.
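Both fields can be read directly from /proc/meminfo. The sketch below (Linux assumed; values are in kB) pulls out the figures discussed here and also computes the memory used by applications once buffers and page cache, which are mostly reclaimable, are set aside. That is the same idea behind the “-/+ buffers/cache” line printed by older versions of free.

```python
import os


def parse_meminfo(text):
    # Lines look like "MemTotal:        2048000 kB"; a few have no unit.
    info = {}
    for line in text.splitlines():
        key, _, value = line.partition(":")
        parts = value.split()
        if parts:
            info[key.strip()] = int(parts[0])
    return info


def summarize(info):
    total, free = info["MemTotal"], info["MemFree"]
    buffers, cached = info.get("Buffers", 0), info.get("Cached", 0)
    return {
        "total_kb": total,
        "free_kb": free,
        "buffers_kb": buffers,
        "cached_kb": cached,
        # Buffers and cache are mostly reclaimable, so exclude them here.
        "used_by_apps_kb": total - free - buffers - cached,
    }


if __name__ == "__main__" and os.path.exists("/proc/meminfo"):
    with open("/proc/meminfo") as f:
        print(summarize(parse_meminfo(f.read())))
```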

Per-process Statistics

The rest of top’s dashboard is per-process statistics. This is a very broad subtopic, so I’ll focus on two areas:

- Uncover the meaning of the memory-related fields: VIRT, RES, SHR, nFLT, and nDRT

- Understand the NI and PR fields and the connection between the two

“How much memory is allocated by task X?” you ask. You’re unsure whether to look at VIRT, RES, or SHR. Before answering that, let’s quickly recap the two types of memory area: anonymous and file-backed.

An example of an anonymous memory area is the result of calling malloc(), a C function for requesting a certain amount of memory. This happens, for instance, when your word processor allocates a buffer to hold the characters you have typed, or when a web browser prepares chunks of memory to display a website.

What about a file-backed memory area? When your program loads a shared library, a program called the loader maps the requested library into the process’s address space. Thus, from the program’s point of view, accessing the library (i.e., calling a function) is like pointing to a certain memory address. Refer to this LinuxDevCenter article for further information about memory allocation.
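You can see both kinds of area in /proc/<pid>/maps. In this sketch (Linux assumed), a mapping whose inode column is 0 is counted as anonymous (this includes [heap] and [stack]), and any other mapping is counted as file-backed, such as the code and data of shared libraries. The summed sizes are virtual sizes, not resident memory.

```python
import os


def classify_mappings(maps_text):
    """Sum virtual sizes (bytes) of anonymous vs file-backed mappings."""
    sizes = {"anonymous": 0, "file-backed": 0}
    for line in maps_text.splitlines():
        # "start-end perms offset dev inode [pathname]"
        fields = line.split(maxsplit=5)
        if len(fields) < 5:
            continue
        start, end = (int(x, 16) for x in fields[0].split("-"))
        kind = "anonymous" if fields[4] == "0" else "file-backed"
        sizes[kind] += end - start
    return sizes


if __name__ == "__main__" and os.path.exists("/proc/self/maps"):
    with open("/proc/self/maps") as f:
        print(classify_mappings(f.read()))
```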

VIRT refers to the total size of the memory areas a process has mapped, while RES shows how many of the underlying memory blocks (called pages) are actually allocated and mapped into the process’s address space. Thus, VIRT is like the size of the land we own, while the house built on it, which is what RES represents, doesn’t necessarily occupy the entire space. Quite likely, RES is far smaller than VIRT, and this is perfectly logical because of demand paging, which allocates pages only when they are really needed. So the answer is clear: RES gives you an approximation of per-process memory consumption.

But RES itself has a flaw. Recall that it includes the file-backed memory area (e.g., shared libraries). Two or more running programs might use the same set of libraries. Think about KDE- or GNOME-based programs: when you run them, they refer to the same libraries, each mapped into its own address space. You might wrongly conclude that those programs require a lot of memory, while in reality the total number of pages belonging to them is lower than the sum of their RES values.

This is where SHR comes to the rescue. SHR shows us the resident part of the file-backed (shareable) memory area. Thus, RES minus SHR leaves us with the size of the anonymous memory area. So the actual memory consumption of a process is somewhere between RES and RES minus SHR, depending on how many shared libraries the process loads and how many other processes use those same libraries.
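top takes these three numbers from /proc/<pid>/statm, whose first three fields are the total program size, the resident set, and the resident shared (file-backed) pages, all counted in pages. A minimal sketch, assuming Linux and that multiplying by the page size is the only conversion needed, that reproduces VIRT, RES, and SHR in kB along with the RES minus SHR lower bound discussed above:

```python
import os


def statm_to_top_fields(statm_text, page_size):
    # statm: size resident shared text lib data dt (all in pages)
    size, resident, shared = (int(x) for x in statm_text.split()[:3])
    to_kb = page_size // 1024
    virt, res, shr = size * to_kb, resident * to_kb, shared * to_kb
    return {"VIRT": virt, "RES": res, "SHR": shr, "RES-SHR": res - shr}


if __name__ == "__main__" and os.path.exists("/proc/self/statm"):
    with open("/proc/self/statm") as f:
        print(statm_to_top_fields(f.read(), os.sysconf("SC_PAGE_SIZE")))
```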

Let’s move to the other fields: nFLT and nDRT. These two fields don’t show up by default, so you need to select them first by pressing ‘f’ or ‘o’ and then the key representing the field. Recall that the kernel does demand paging: if a process accesses a valid memory address but no page is present there, the kernel allocates or loads one. The formal name of this condition is a page fault. There are two types of page faults: minor and major. Minor page faults happen when no storage access is involved; major page faults do involve storage access.

A minor page fault is connected with the anonymous memory area, because there is no need to read the disk. The exception is when the pages have been swapped out. A major page fault is connected with the file-backed memory area: on every major fault, disk blocks are read and brought into RAM. However, thanks to the page cache, if the target disk block’s content is already there, it will be a minor fault instead.

nFLT as shown by top counts only major page faults. If this number gets high, it is a good indication that you need more RAM. When free memory gets tight, some pages are evicted from the page cache and anonymous memory is swapped out; if those pages are accessed again, additional major faults occur. Try adding more RAM until nFLT settles at a moderate value. Also check your program’s internal log or statistics to see whether a high nFLT coincides with reduced performance. Usually, latency is tightly related to major page faults.
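The difference between the two kinds of fault is easy to observe through Python’s resource module (Unix only), which exposes the ru_minflt and ru_majflt counters of getrusage(). In this sketch, writing to every page of a fresh anonymous mapping should raise the minor-fault count, since no disk access is needed. The 16 MB size is arbitrary, and transparent huge pages, if enabled, can reduce the number of faults.

```python
import mmap
import resource


def fault_counts():
    usage = resource.getrusage(resource.RUSAGE_SELF)
    return usage.ru_minflt, usage.ru_majflt


def touch_anonymous_memory(n_bytes):
    # An anonymous mapping reserves address space but no physical pages yet.
    buf = mmap.mmap(-1, n_bytes)
    try:
        for offset in range(0, n_bytes, mmap.PAGESIZE):
            buf[offset] = 1  # first write to each page triggers a page fault
    finally:
        buf.close()


if __name__ == "__main__":
    before = fault_counts()
    touch_anonymous_memory(16 * 1024 * 1024)
    after = fault_counts()
    print("minor faults:", after[0] - before[0], "major faults:", after[1] - before[1])
```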

nDRT is supposed to display the number of dirty pages. But this value (taken from the rightmost field of /proc/<pid>/statm) always shows zero in Linux kernel 2.6.x, because the kernel no longer fills in that field. Hopefully somebody will fix the related kernel code so top can show the number correctly. Meanwhile, you can check the size of dirty pages in /proc/<pid>/smaps, where the statistics are broken down by VMA (Virtual Memory Area).

A dirty page is a file-backed page that contains modified data not yet written to disk. This happens when a process writes to a file: except in direct I/O cases, the data is written only to the page cache, and the page itself is marked dirty. Later, pdflush kernel threads periodically scan those dirty pages and write their contents to the disk. This asynchronous procedure prevents the writing task from being blocked for too long.
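Until nDRT works, a rough substitute is to add up the dirty counters in /proc/<pid>/smaps. smaps also counts dirty anonymous pages (a heap you have written to is “dirty” too), so this sketch, which assumes the usual smaps layout of a mapping header line followed by “Key: value kB” lines, only sums Shared_Dirty and Private_Dirty for mappings with a non-zero inode, i.e. file-backed ones.

```python
import os


def file_backed_dirty_kb(smaps_text):
    total = 0
    file_backed = False
    for line in smaps_text.splitlines():
        tokens = line.split()
        if not tokens:
            continue
        if not tokens[0].endswith(":"):
            # VMA header: "start-end perms offset dev inode [pathname]"
            file_backed = len(tokens) >= 5 and tokens[4] != "0"
        elif file_backed and tokens[0] in ("Shared_Dirty:", "Private_Dirty:"):
            total += int(tokens[1])  # value is in kB
    return total


if __name__ == "__main__" and os.path.exists("/proc/self/smaps"):
    with open("/proc/self/smaps") as f:
        print("file-backed dirty:", file_backed_dirty_kb(f.read()), "kB")
```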

Now we turn our attention to the NI and PR fields. These fields represent the priority of a process. NI displays the nice level, a static priority assigned to a task when it is created. By default, every new process has nice level 0, but you can override it with the “nice” utility. NI ranges from -20 to 19. PR shows the dynamic priority, which is calculated based on the nice level. When a process begins its life, PR equals NI plus 20.

Whether PR changes during runtime depends on the kernel version you use. In kernels before 2.6.23, PR can be any value between NI+20-x and NI+20+x, where x (at most 5) can be considered a “bonus” or “penalty.” If a process sleeps a lot, it gets a bonus, so its PR is decremented. If instead a process chews up a lot of CPU time, its PR is incremented. When the kernel scheduler chooses which process should run, the process with the lowest dynamic priority wins.
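The pre-2.6.23 rule can be sketched as follows. This is a loose simplification of the O(1) scheduler’s effective-priority logic, stated in top’s PR terms: the real kernel tracks a per-task sleep average and applies further interactivity heuristics, whereas here a hypothetical sleep_ratio between 0 and 1 stands in for it. A task that always sleeps gets the full bonus of 5, and one that never sleeps gets the full penalty of 5.

```python
MAX_BONUS = 10  # bonus scale used by the 2.6 O(1) scheduler (assumed)


def o1_pr(nice, sleep_ratio):
    """Approximate top's PR under the O(1) scheduler.

    sleep_ratio: hypothetical fraction (0..1) of recent time spent sleeping.
    """
    current_bonus = int(sleep_ratio * MAX_BONUS)   # 0..10
    bonus = current_bonus - MAX_BONUS // 2         # -5 (CPU hog) .. +5 (sleeper)
    pr = 20 + nice - bonus                         # a bonus lowers PR
    return max(0, min(39, pr))                     # stay within the normal range


if __name__ == "__main__":
    for ratio in (0.0, 0.5, 1.0):
        print(f"nice 0, sleep ratio {ratio}: PR {o1_pr(0, ratio)}")
```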

In Linux 2.6.23 and above, PR is always NI plus 20. This is a consequence of the newly merged CFS scheduler, where sleep intervals no longer drive dynamic priority adjustment. Moreover, dynamic priority is no longer the main criterion when selecting the next task to run. Without going too deep into CFS internals, it is sufficient to say that CFS selects the runnable process that has accumulated the least virtual CPU time (virtual runtime). This is the “fairness” CFS wants to achieve: every process gets a proportional share of CPU processing power, with the nice level (and thus PR) acting as the weighting factor. We can therefore conclude that under CFS, even a process with a higher PR (lower priority) gets a chance to preempt one with a lower PR once its virtual runtime falls behind.
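A toy simulation makes the “least virtual runtime wins” rule concrete. It assumes the commonly cited CFS weighting, in which nice 0 maps to a weight of 1024 and each nice step changes the weight by roughly a factor of 1.25 (the real kernel uses a precomputed table rather than this formula). Each time slice goes to the task with the smallest vruntime, and a task’s vruntime advances more slowly the heavier it is.

```python
import heapq

NICE_0_WEIGHT = 1024


def weight(nice):
    # Approximation of the kernel's weight table: about 1.25x per nice step.
    return NICE_0_WEIGHT / (1.25 ** nice)


def simulate_cfs(nices, slices, slice_ms=1.0):
    """Toy CFS: always run the task with the smallest vruntime for one slice."""
    queue = [(0.0, i) for i in range(len(nices))]  # (vruntime, task index)
    heapq.heapify(queue)
    runtime = [0.0] * len(nices)
    for _ in range(slices):
        vruntime, i = heapq.heappop(queue)
        runtime[i] += slice_ms
        # Heavier (lower-nice) tasks accumulate vruntime more slowly.
        vruntime += slice_ms * NICE_0_WEIGHT / weight(nices[i])
        heapq.heappush(queue, (vruntime, i))
    return runtime


if __name__ == "__main__":
    runtime = simulate_cfs([0, 5], 10_000)
    print(f"nice 0: {runtime[0]:.0f} ms, nice 5: {runtime[1]:.0f} ms")
```

With nice levels 0 and 5, the nice-0 task ends up with roughly 1.25^5 ≈ 3 times as much CPU time, yet the nice-5 task still runs regularly, which is the proportional fairness described above.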

About the Author

Mulyadi Santosa, RHCE, lives in Jakarta, the capital city of Indonesia. He works as a freelance writer and owns a start-up that focuses on teaching Linux to audiences of various levels. He writes a monthly column about Linux for CHIP Indonesia magazine, and has written articles for various Indonesian and foreign publications. You can contact him by e-mail and visit his blog.

Acknowledgments

- Avishay Traeger, for convincing me to write about this topic

- Breno Leitao, Sandeep K. Sinha, and Cheetan Nanda, for helpful discussions and hints.