When you look at the release schedules from big software companies (Apple, Microsoft, Oracle, and so on), you typically find releases about once a year, or every two years, or sometimes even every three years. In the past, although users weren’t necessarily patient, they put up with this release schedule. Even smaller companies used this kind of scheduling. In this scenario, the only time a release came out sooner was to fix bugs. No new features would be included. Of course, software wasn’t delivered online back then. Instead, products were shipped on disk or CD.

Now compare that to today’s apps in the Google and Apple app stores. Because of online delivery, if there’s a show-stopping bug, the developer can quickly update the app, and customers will receive the update within hours or days of its release. The flip side is that customers have come to expect rapid releases. If a year passes and an app doesn’t receive an update, users tend to assume the app has been abandoned. If they paid for the app, they get angry; and, whether they paid or not, they’ll likely move on to a competing app.

But, there’s another difference. Look at the sheer size of something like Microsoft Word. Regardless of whether all the features in Word are truly necessary, there are allegedly hundreds of developers working on Word at any given moment. Most Android and iPhone apps don’t have nearly that many developers working on them. The apps are generally much smaller and built by smaller teams. Either way, the new online culture of apps being delivered faster and faster has caused changes throughout the software industry. People simply want their software delivered more often. Additionally, they want a quick way to report a bug and see quick responses to the bugs. This mindset has moved into corporate culture as well when dealing with internal software.

With today’s software delivered online, developers could, in theory, release their software as they work on it. Each day, as they add a new feature or fix a few bugs, they could upload it for release. More realistically, this could happen once a week or once a month, provided the software actually works and is in a deliverable state. Therein lies the catch: the only way to deliver software this often is to maintain a build that pulls in only the changes that are finished and working.

This has all resulted in a concept called “continuous delivery.” So how do you, as a developer, learn it?

Getting Started with Continuous Delivery

The first step is to understand exactly what continuous delivery is. Unfortunately, the concept has been adopted by many people throughout the software world who want to get in on the action, and thus the term has become clouded with marketing-speak. But, if we look to the companies that are pioneering continuous delivery through the tools they’ve built, we start to see some common themes.

Software development guru Martin Fowler has written about it as well, with the help of his team at ThoughtWorks. Essentially, the concept comes down to three ideas:

- Your software is deployable throughout its process, and you maintain it in such a state.
- The system provides feedback on its readiness for deployment.
- The software can be deployed easily, or, as Fowler says, it provides “push-button deployments.”

Let’s focus on the first and third of these topics.

The Software Is Always Deployable

Anyone who has worked on a team building a large software system knows that at any given moment, different developers will have different branches of the software on their machines, and that at times the master branch might not be fully functional. Although we try to make sure the master branch always works, the reality is we’re not always there. This is one aspect we work to change with continuous delivery.

Consider this scenario: The customer suddenly calls and says, “Let’s see what the product looks like right now.” A typical response might be, “Um… it was working last week, but right now I’m in the middle of adding a new feature, and so you can’t really see it run, because it just doesn’t work.” Well, can you just show the customer what you had in the previous working state before you started adding the new feature? Maybe, or maybe not. It depends on your process.

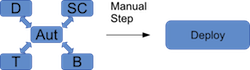

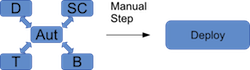

By using automated processes in conjunction with a careful set of policies and procedures, your software is always in a state where it can be deployed. Or, more accurately, you always have a branch that can be deployed. As you make changes, human testers and automated test tools verify that the changes work, and only then do the changes get merged into the master branch (or whichever branch is designated as the deliverable branch). That means the deliverable branch is always in a functional state. And, this leads to push-button deployment, where you can quickly spin up a virtual machine and show the product to your client when asked (and deploy it for real to the masses). See Figure 1 above.
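To make the “always have something deployable” idea concrete, here is a minimal Python sketch. It assumes a build history in which each commit is recorded together with the outcome of its automated checks; the `Build` class, the `latest_deployable` function, and the commit identifiers are all hypothetical and not part of any particular tool.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Build:
    commit: str         # hypothetical commit identifier
    tests_passed: bool  # outcome of the automated checks for this commit


def latest_deployable(history: List[Build]) -> Optional[Build]:
    """Return the most recent build whose checks passed, or None if there is none."""
    for build in reversed(history):
        if build.tests_passed:
            return build
    return None


history = [
    Build("a1f3", True),
    Build("b72c", True),
    Build("c9e0", False),  # feature work in progress, currently broken
]

print(latest_deployable(history).commit)  # b72c
```

With a record like this, the answer to the customer who wants to see the product right now is the last build that passed, not whatever happens to be on a developer’s machine.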

Push-Button Deployments

The push-button concept refers to the idea that the software can be deployed with one or two commands. A key point that people at companies like Puppet Labs have made is that your software is always in a state whereby it *can* be deployed. It’s not actually continually deployed; it doesn’t get pushed out every hour or so. You’re still in charge of when actual deployments go out. But, you use automation tools to make all this happen. Then, when your customer wants to see it right here, right now, you can spin up a virtual server, enter a command or two, and the software will be loaded and configured on the virtual server, ready for the customer to see the latest changes.
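As a rough illustration of what sits behind that one command, the Python sketch below runs a fixed list of deployment steps in order and stops at the first failure. Everything here is an assumption made for illustration: the step names, the `server` dictionary standing in for a virtual server, and the `push_button_deploy` function itself. Real tools such as Chef or Puppet work quite differently under the hood.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[], None]]


@dataclass
class DeployResult:
    ok: bool
    log: List[str]


def push_button_deploy(steps: List[Step]) -> DeployResult:
    """Run each step in order; stop and report failure at the first step that raises."""
    log: List[str] = []
    for name, action in steps:
        try:
            action()
        except Exception as exc:
            log.append(f"FAILED {name}: {exc}")
            return DeployResult(False, log)
        log.append(f"ok {name}")
    return DeployResult(True, log)


# A plain dictionary stands in for the virtual server being set up.
server = {}
steps = [
    ("provision server", lambda: server.update(provisioned=True)),
    ("install build", lambda: server.update(build="b72c")),
    ("apply configuration", lambda: server.update(configured=True)),
]

result = push_button_deploy(steps)
print(result.ok)  # True
```

The point of the design is that the person pressing the button never has to remember the steps or their order; the script does, and it reports clearly when something goes wrong.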

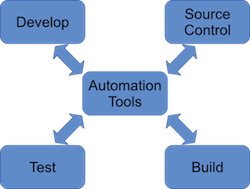

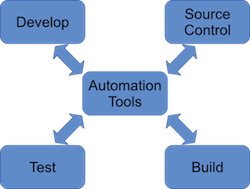

Similarly, when you’re ready to do an actual release, you will use similar tools and commands. The details will, of course, depend on where your software gets released. If it’s a service, you might push the changes to multiple virtual servers. If it’s in an app store, you might push the changes to iTunes or Google Play. And, if it’s an internal application, you might push the changes to a server, configure it, and build a new image from that configuration. Future virtual servers that get spun up will then use the updated image. The automation tools make all this fast and easy (Figure 2).

The Big Picture

The big picture of development becomes one that uses traditional agile techniques with a great deal of automation and provides for far more frequent releases, along with feedback mechanisms whereby customers and clients can respond with bugs and feature requests. As before, testing is performed on each unit before the unit is added into the master branch. Now, however, the automation tools prevent the branch from being merged in if it doesn’t pass the tests. Yes, there’s a lot of “in theory” to all this, and the marketing folks pushing for continuous delivery may not always truly understand the complexity of software development processes. But, the idea really is sound, and it’s vital in today’s world where we’re dealing with massively scalable software that may run on millions of devices that need quick updates.
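The merge rule described above can be sketched in a few lines of Python. This is only an illustration under simple assumptions: each check is an ordinary function that raises `AssertionError` when it fails, and `total_price`, the check functions, `run_checks`, and `may_merge` are hypothetical names. In practice this job is done by a build server running your real test suite.

```python
from typing import Callable, Dict, List


def total_price(prices: List[int]) -> int:
    """The unit under test (a stand-in for real application code)."""
    return sum(prices)


def check_empty_cart() -> None:
    assert total_price([]) == 0


def check_two_items() -> None:
    assert total_price([2, 3]) == 5


def check_unfinished_discount() -> None:
    # Stands in for a check that a work-in-progress branch still fails.
    assert total_price([10]) == 9


def run_checks(checks: Dict[str, Callable[[], None]]) -> List[str]:
    """Run every check and return the names of the ones that failed."""
    failures = []
    for name, check in checks.items():
        try:
            check()
        except AssertionError:
            failures.append(name)
    return failures


def may_merge(checks: Dict[str, Callable[[], None]]) -> bool:
    """A branch may be merged only when none of its checks fail."""
    return not run_checks(checks)


finished_branch = {"empty cart": check_empty_cart, "two items": check_two_items}
unfinished_branch = dict(finished_branch, discount=check_unfinished_discount)

print(may_merge(finished_branch))    # True
print(may_merge(unfinished_branch))  # False
```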

Tools

There isn’t enough room in this short article to explain everything you need to know about continuous delivery. But one of the most important aspects is making use of the right tools. Two popular tools are Chef and Puppet, used in conjunction with the tools you’re already using, such as Git. In older scenarios, developers were stuck working on different boxes that would likely drift apart over time; these tools help enforce consistency between machines. Enforcing that consistency can cause headaches of its own, which is why your development process may need to be modified to fit within that model. Also, in realistic terms, the machines won’t always be identical; when you’re working on a branch, you don’t need that branch to exist on another developer’s machine until the merge takes place. (This is where the fuzzy nature of the marketing-speak has to come to grips with the realities of development.)

Conclusion

Continuous delivery takes existing processes and adapts them to today’s mobile (and possibly impatient) customers who want to see updates now. It’s not just to their benefit, however; it’s to our benefit as developers as well, because we can quickly get releases out the door and get feedback from users. Then, if there’s a problem, we don’t need to force our users to sit and wait weeks for a patch. Instead, we can use our automation tools to either get a patch out quickly or immediately roll back to the previous release. In either case, we get the release out quickly so customers don’t complain.
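The rollback option mentioned above depends on keeping track of what was released before. A minimal Python sketch, assuming releases are identified by version strings and that redeploying an earlier version is handled elsewhere, might look like this (the `ReleaseHistory` class and the version numbers are hypothetical):

```python
from typing import List, Optional


class ReleaseHistory:
    """Remembers released versions so the previous one can be restored."""

    def __init__(self) -> None:
        self._versions: List[str] = []  # released versions, oldest first

    @property
    def live(self) -> Optional[str]:
        """The version currently in front of users, if any."""
        return self._versions[-1] if self._versions else None

    def release(self, version: str) -> None:
        self._versions.append(version)

    def roll_back(self) -> str:
        """Drop the live release and return the version that is live afterwards."""
        if len(self._versions) < 2:
            raise RuntimeError("no earlier release to roll back to")
        self._versions.pop()
        return self._versions[-1]


releases = ReleaseHistory()
releases.release("1.4.0")
releases.release("1.5.0")  # suppose this one turns out to have a serious bug

print(releases.roll_back())  # 1.4.0
```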

Big software companies are already using many of these processes, although I suspect it’s not always quite as beautiful and perfect as they would like us to believe. But they’re showing that the idea works, and you can use these techniques to get your software out the door.

Now where do you go to learn more? There are lots of sources. The two big companies pushing the tools have pages on it: Chef and Puppet Labs. ThoughtWorks, who pioneered the idea, has a lot of good information on its website. As a final note, I wanted to add some links to articles that were critical of continuous delivery, and provide them here as sort of a counterpoint, but I’m not finding much. It looks like people are generally having good luck with it.
