Click here to read the February 2021 Linux Foundation Newsletter

The post Linux Foundation Newsletter February 2021: IT Training & Certification Sale, Shuah Khan & Mentorship, OpenSSF First Six Months appeared first on Linux Foundation.

How to create a TLS/SSL certificate with a Cert-Manager Operator on OpenShift

Use cert-manager to deploy certificates to your OpenStack or Kubernetes environment.

Bryant Son

Thu, 2/11/2021 at 4:10pm

Image

Photo by Tea Oebel from Pexels

cert-manager builds on top of Kubernetes, introducing certificate authorities and certificates as first-class resource types in the Kubernetes API. This feature makes it possible to provide Certificates as a Service to developers working within your Kubernetes cluster.

cert-manager is an open source project based on Apache License 2.0 provided by Jetstack. Since cert-manager is an open source application, it has its own GitHub page.

Topics:

Linux

Openshift

Read More at Enable Sysadmin

Unikernels have been around for many years and are famous for providing excellent performance in boot times, throughput, and memory consumption, to name a few metrics [1]. Despite their apparent potential, unikernels have not yet seen a broad level of deployment due to three main drawbacks:

While not all unikernel projects suffer from all of these issues (e.g., some provide some level of POSIX compliance but the performance is lacking, others target a single programming language and so are relatively easy to build but their applicability is limited), we argue that no single project has been able to successfully address all of them, hindering any significant level of deployment. For the past three years, Unikraft (www.unikraft.org), a Linux Foundation project under the Xen Project’s auspices, has had the explicit aim to change this state of affairs to bring unikernels into the mainstream.

If you’re interested, read on, and please be sure to check out:

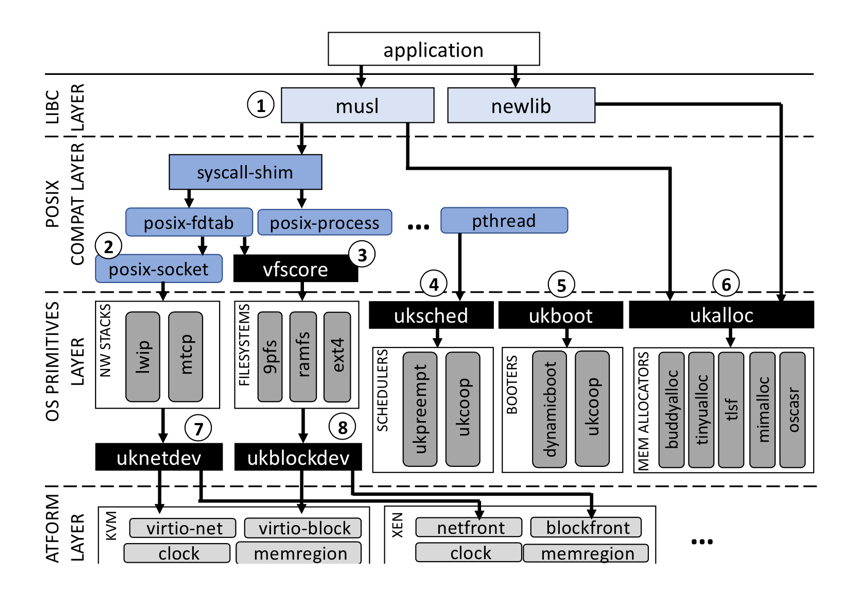

To provide developers with the ability to obtain high performance easily, Unikraft exposes a set of composable, performance-oriented APIs. The figure below shows Unikraft’s architecture: all components are libraries with their own Makefile and Kconfig configuration files, and so can be added to the unikernel build independently of each other.

Figure 1. Unikraft ‘s fully modular architecture showing high-performance APIs

APIs are also micro-libraries that can be easily enabled or disabled via a Kconfig menu; Unikraft unikernels can compose which APIs to choose to best cater to an application’s needs. For example, an RCP-style application might turn off the uksched API (➃ in the figure) to implement a high performance, run-to-completion event loop; similarly, an application developer can easily select an appropriate memory allocator (➅) to obtain maximum performance, or to use multiple different ones within the same unikernel (e.g., a simple, fast memory allocator for the boot code, and a standard one for the application itself).

|

|

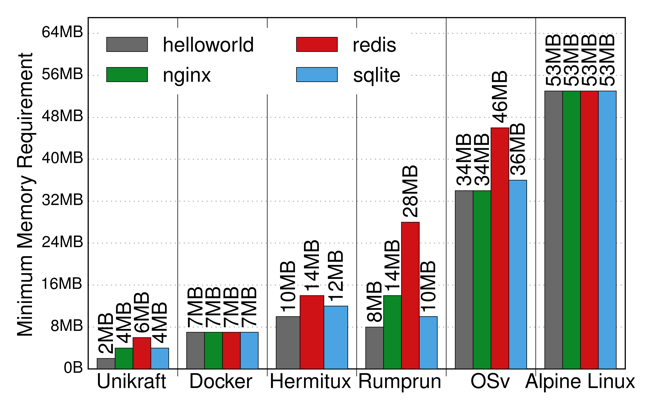

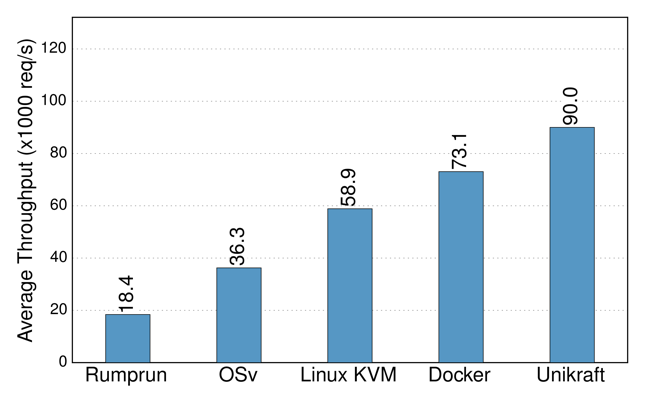

| Figure 2. Unikraft memory consumption vs. other unikernel projects and Linux | Figure 3. Unikraft NGINX throughput versus other unikernels, Docker, and Linux/KVM. |

These APIs, coupled with the fact that all Unikraft’s components are fully modular, results in high performance. Figure 2, for instance, shows Unikraft having lower memory consumption than other unikernel projects (HermiTux, Rump, OSv) and Linux (Alpine); and Figure 3 shows that Unikraft outperforms them in terms of NGINX requests per second, reaching 90K on a single CPU core.

Further, we are working on (1) a performance profiler tool to be able to quickly identify potential bottlenecks in Unikraft images and (2) a performance test tool that can automatically run a large set of performance experiments, varying different configuration options to figure out optimal configurations.

Forcing users to port applications to a unikernel to obtain high performance is a showstopper. Arguably, a system is only as good as the applications (or programming languages, frameworks, etc.) can run. Unikraft aims to achieve good POSIX compatibility; one way of doing so is supporting a libc (e.g., musl), along with a large set of Linux syscalls.

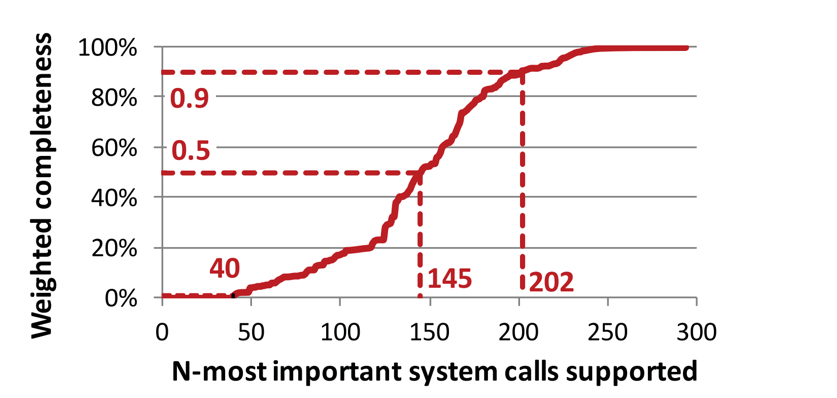

Figure 4. Only a certain percentage of syscalls are needed to support a wide range of applications

While there are over 300 of these, many of them are not needed to run a large set of applications; as shown in Figure 1 (taken from [5]). Having in the range of 145, for instance, is enough to support 50% of all libraries and applications in a Ubuntu distribution (many of which are irrelevant to unikernels, such as desktop applications). As of this writing, Unikraft supports over 130 syscalls and a number of mainstream applications (e.g., SQLite, Nginx, Redis), programming languages and runtime environments such as C/C++, Go, Python, Ruby, Web Assembly, and Lua, not to mention several different hypervisors (KVM, Xen, and Solo5) and ARM64 bare-metal support.

Another apparent downside of unikernel projects is the almost total lack of integration with existing, major DevOps and orchestration frameworks. Working towards the goal of integration, in the past year, we created the kraft tool, allowing users to choose an application and a target platform simply (e.g., KVM on x86_64) and take care of building the image running it.

Beyond this, we have several sub-projects ongoing to support in the coming months:

In all, we believe Unikraft is getting closer to bridging the gap between unikernel promise and actual deployment. We are very excited about this year’s upcoming features and developments, so please feel free to drop us a line if you have any comments, questions, or suggestions at info@unikraft.io.

About the author: Dr. Felipe Huici is Chief Researcher, Systems and Machine Learning Group, NEC Laboratories Europe GmbH

[1] Unikernels Rethinking Cloud Infrastructure. http://unikernel.org/

[2] Is the Time Ripe for Unikernels to Become Mainstream with Unikraft? FOSDEM 2021 Microkernel developer room. https://fosdem.org/2021/schedule/event/microkernel_unikraft/

[3] Severely Debloating Cloud Images with Unikraft. FOSDEM 2021 Virtualization and IaaS developer room. https://fosdem.org/2021/schedule/event/vai_cloud_images_unikraft/

[4] Welcome to the Unikraft Stand! https://stands.fosdem.org/stands/unikraft/

[5] A study of modern Linux API usage and compatibility: what to support when you’re supporting. Eurosys 2016. https://dl.acm.org/doi/10.1145/2901318.2901341

With the recent surge in popularity of cryptocurrencies and related technologies, Square felt an industry group was needed to protect against litigation and other threats against core cryptocurrency technology and ensure the ecosystem remains vibrant and open for developers and companies.

The same way Open Invention Network (OIN) and LOT Network add a layer of patent protection to inter-company collaboration on open source technologies, COPA aims to protect open source cryptocurrency technology. Feeling safe from the threat of lawsuits is a precursor to good collaboration.

By joining COPA, a member can feel secure it can innovate in the cryptocurrency space without fear of litigation between other members.

Square’s core purpose is economic empowerment, and they see cryptocurrency as a core technological pillar. Square helped start and fund COPA with the hope that by encouraging innovation in the cryptocurrency space, more useful ideas and products would get created. COPA management has now diversified to an independent board of technology and regulatory experts, and Square maintains a minority presence.

No! Anyone can join and benefit from being a member of COPA, regardless of whether they have patents or not. There is no barrier to entry – members can be individuals, start-ups, small companies, or large corporations. Here is how COPA works:

Please express interest and get access to our membership agreement here: https://opencrypto.org/joining-copa/

Throughout the modern business era, industries and commercial operations have shifted substantially to digital processes. Whether you look at EDI as a means to exchange invoices or cloud-based billing and payment solutions today, businesses have steadily been moving towards increasing digital operations. In the last few years, we’ve seen the promises of digital transformation come alive, particularly in industries that have shifted to software-defined models. The next step of this journey will involve enabling digital transactions through decentralized networks.

A fundamental adoption issue will be figuring out who controls and decides how a decentralized network is governed. It may seem oxymoronic at first, but decentralized networks still need governance. A future may hold autonomously self-governing decentralized networks, but this model is not accepted in industries today. The governance challenge with a decentralized network technology lies in who and how participants in a network will establish and maintain policies, network operations, on/offboarding of participants, setting fees, configurations, and software changes and are among the issues that will have to be decided to achieve a successful network. No company wants to participate or take a dependency on a network that is controlled or run by a competitor, potential competitor, or any single stakeholder at all for that matter.

Earlier this year, we presented a solution for Open Governance Networks that enable an industry or ecosystem to govern itself in an open, inclusive, neutral, and participatory model. You may be surprised to learn that it’s based on best practices in open governance we’ve developed over decades of facilitating the world’s most successful and competitive open source projects.

For the last few years, a running technology joke has been “describe your problem, and someone will tell you blockchain is the solution.” There have been many other concerns raised and confusion created, as overnight headlines hyped cryptocurrency schemes. Despite all this, behind the scenes, and all along, sophisticated companies understood a distributed ledger technology would be a powerful enabler for tackling complex challenges in an industry, or even a section of an industry.

At the Linux Foundation, we focused on enabling those organizations to collaborate on open source enterprise blockchain technologies within our Hyperledger community. That community has driven collaboration on every aspect of enterprise blockchain technology, including identity, security, and transparency. Like other Linux Foundation projects, these enterprise blockchain communities are open, collaborative efforts. We have had many vertical industry participants engage, from retail, automotive, aerospace, banking, and others participate with real industry challenges they needed to solve. And in this subset of cases, enterprise blockchain is the answer.

The technology is ready. Enterprise blockchain has been through many proof-of-concept implementations, and we’ve already seen that many organizations have shifted to production deployments. A few notable examples are:

However, just because we have the technology doesn’t mean we have the appropriate conditions to solve adoption challenges. A certain set of challenges about networks’ governance have become a “last mile” problem for industry adoption. While there are many examples of successful production deployments and multi-stakeholder engagements for commercial enterprise blockchains already, specific adoption scenarios have been halted over uncertainty, or mistrust, over who and how a blockchain network will be governed.

To precisely state the issue, in many situations, company A does not want to be dependent on, or trust, company B to control a network. For specific solutions that require broad industry participation to succeed, you can name any industry, and there will be company A and company B.

We think the solution to this challenge will be Open Governance Networks.

An Open Governance Network is a distributed ledger service, composed of nodes, operated under the policies and directions of an inclusive set of industry stakeholders.

Open Governance Networks will set the policies and rules for participation in a decentralized ledger network that acts as an industry utility for transactions and data sharing among participants that have permissions on the network. The Open Governance Network model allows any organization to participate. Those organizations that want to be active in sharing the operational costs will benefit from having a representative say in the policies and rules for the network itself. The software underlying the Open Governance Network will be open source software, including the configurations and build tools so that anyone can validate whether a network node complies with the appropriate policies.

Many who have worked with the Linux Foundation will realize an open, neutral, and participatory governance model under a nonprofit structure that has already been thriving for decades in successful open source software communities. All we’re doing here is taking the same core principles of what makes open governance work for open source software, open standards, and open collaboration and applying those principles to managing a distributed ledger. This is a model that the Linux Foundation has used successfully in other communities, such as the Let’s Encrypt certificate authority.

Our ecosystem members trust the Linux Foundation to help solve this last mile problem using open governance under a neutral nonprofit entity. This is one solution to the concerns about neutrality and distributed control. In pan-industry use cases, it is generally not acceptable for one participant in the network to have power in any way that could be used as an advantage over someone else in the industry. The control of a ledger is a valuable asset, and competitive organizations generally have concerns in allowing one entity to control this asset. If not hosted in a neutral environment for the community’s benefit, network control can become a leverage point over network users.

We see this neutrality of control challenge as the primary reason why some privately held networks have struggled to gain widespread adoption. In order to encourage participation, industry leaders are looking for a neutral governance structure, and the Linux Foundation has proven the open governance models accomplish that exceptionally well.

This neutrality of control issue is very similar to the rationale for public utilities. Because the economic model mirrors a public utility, we debated calling these “industry utility networks.” In our conversations, we have learned industry participants are open to sharing the cost burden to stand up and maintain a utility. Still, they want a low-cost, not profit-maximizing model. That is why our nonprofit model makes the most sense.

It’s also not a public utility in that each network we foresee today would be restricted in participation to those who have a stake in the network, not any random person in the world. There’s a layer of human trust that our communities have been enabling on top of distributed networks, which started with the Trust over IP Foundation.

Unlike public cryptocurrency networks where anyone can view the ledger or submit proposed transactions, industries have a natural need to limit access to legitimate parties in their industry. With minor adjustments to address the need for policies for transactions on the network, we believe a similar governance model applied to distributed ledger ecosystems can resolve concerns about the neutrality of control.

Open Governance Networks can be reduced to the following building block components:

Of these building blocks, the Linux Foundation already offers its communities the Business and Technical Governance needed for Open Governance Networks. The final component is the new, LF Open Governance Networks.

LF Open Governance Networks will enable our communities to establish their own Open Governance Network and have an entity to process agreements and collect transaction fees. This new entity is a Delaware nonprofit, a nonstock corporation that will maximize utility and not profit. Through agreements with the Linux Foundation, LF Governance Networks will be available to Open Governance Networks hosted at the Linux Foundation.

If you’re interested in learning more about hosting an Open Governance Network at the Linux Foundation, please contact us at governancenetworks@linuxfoundation.org

The post Understanding Open Governance Networks appeared first on Linux Foundation.

The sipcalc command prevents hard CIDR calculations and helps your fellow sysadmins adhere to the CIDR house rules.

Read More at Enable Sysadmin

What’s the next Linux workload that you plan to containerize?

You’re convinced that containers are a good thing, but what’s the next workload to push to a container?

khess

Tue, 2/9/2021 at 8:18pm

Image

Image by Gerd Altmann from Pixabay

I’m sure many of my fellow sysadmins have been tasked with cutting costs, making infrastructure more usable, making services more accessible, enhancing security, and enabling developers to be more autonomous when working with their test, development, and staging environments. You might have started your virtualization efforts by moving some web sites to containers. You might also have moved an application or two as well. This poll focuses on the next workload that you plan to containerize.

Topics:

Linux

Linux Administration

Containers

Read More at Enable Sysadmin

ktest: Automated Testing For Kernel ProgrammersOracle Linux kernel developer Daniel Jordan contributes this post on ktest, a tool for making kernel programmers’ lives easier.In October 2010, Steven Rostedtannouncedon the LKML that he was working on a script calledktest.plto automate certain aspects of Linux kernel testing. The script is aimed at individual kernel programmers testing their patch series, and provides an alternative to the Autotest framework,…

Click to Read More at Oracle Linux Kernel Development

There are many options to manage Linux container registries using the registries.conf file.

Read More at Enable Sysadmin

Sysadmin hardware: Considerations for planning a PC build

There are many considerations when it comes to putting together a new PC. You must consider the purpose, hardware compatibility, and cost. Follow my steps as I put together a new gaming system.

tcarriga

Tue, 2/9/2021 at 4:14am

Image

photo by Tyler Carrigan

Disclaimer: In 2021, building and buying computers has seen an enormous uptick. Combine the demand for components with the manufacturing and shipping delays caused by current events, and you have a very tough market to jump into. The newest graphics cards and CPUs can be difficult to find markup. Despite this, the information below will be helpful when planning your next build.

Topics:

Linux

Hardware

Read More at Enable Sysadmin