In the previous article, we started building the foundation for building a custom operator that can be applied to real-world use cases. In this part of our tutorial series, we are going to create a generic example-operator that manages our apps of Examplekind. We have already used the operator-sdk to build it out and implement the custom code in a repo here. For the tutorial, we will rebuild what is in this repo.

The example-operator will manage our Examplekind apps with the following behavior:

-

Create an Examplekind deployment if it doesn’t exist using an Examplekind CR spec (for this example, we will use an nginx image running on port 80).

-

Ensure that the pod count is the same as specified in the Examplekind CR spec.

-

Update the Examplekind CR status with:

-

A label called Group defined in the spec

-

An enumerated list of the Podnames

-

Prerequisites

You’ll want to have the following prerequisites installed or set up before running through the tutorial. These are prerequisites to install operator-sdk, as well as a a few extras you’ll need.

-

golang version v1.10+

-

dep version v0.5.0+

-

gpg (brew install gpg)

-

docker version 17.03+

-

kubectl version v1.10.0+

-

minikube latest version

-

An account on DockerHub to push images to a repository

Initialize your Environment

1. Make sure you’ve got your Kubernetes cluster running by spinning up minikube. minikube start

2. Create a new folder for your example operator within your Go path.

mkdir -p $GOPATH/src/github.com/linux-blog-demo

cd $GOPATH/src/github.com/linux-blog-demo

3. Initialize a new example-operator project within the folder you created using the operator-sdk.

operator-sdk new example-operator

cd example-operator

|

What just got created? By running the operator-sdk new command, we scaffolded out a number of files and directories for our defined project. See the project layout for a complete description; for now, here are some important directories to note:

|

Create a Custom Resource and Modify it

4. Create the Custom Resource and it’s API using the operator-sdk.

operator-sdk add api --api-version=example.kenzan.com/v1alpha1 --kind=Examplekind

|

What just got created? Under pkg/ais/example/v1alpha, a new generic API was created for Examplekind in the file examplekind_types.go. Under deploy/crds, two new K8s yamls were generated:

A DeepCopy methods library is generated for copying the Examplekind object |

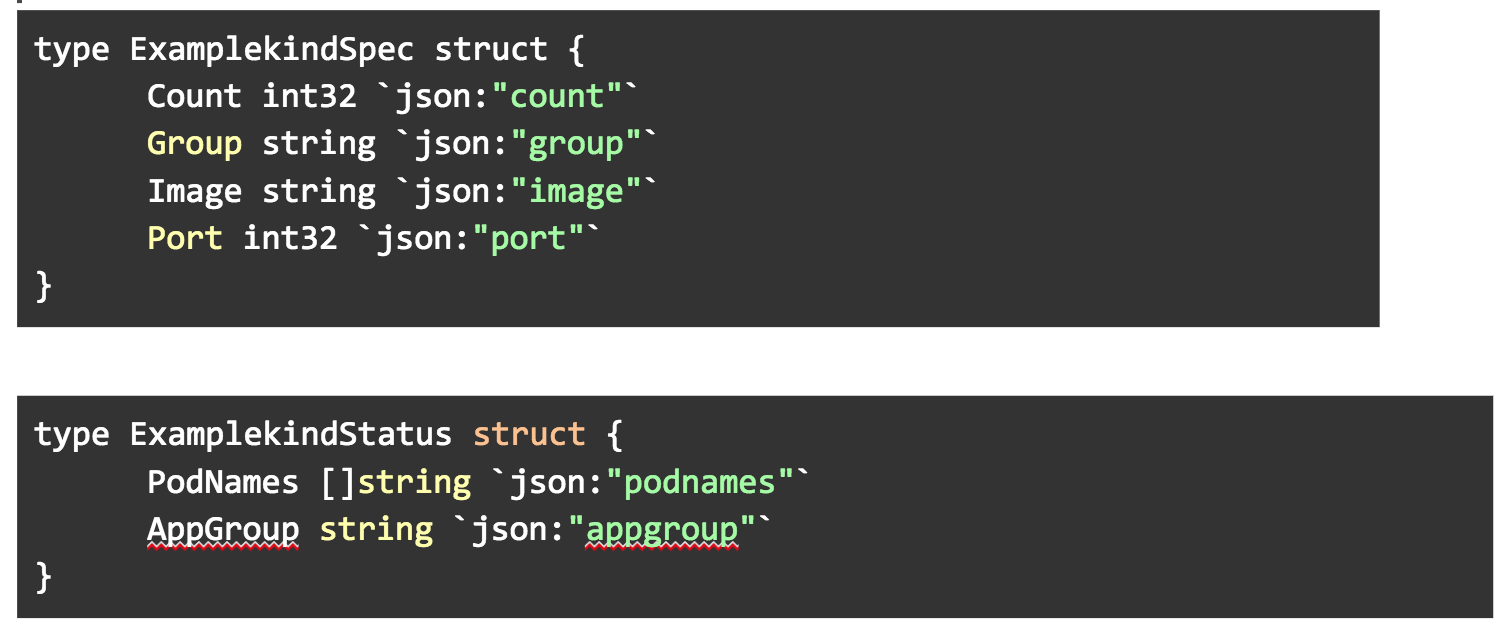

5. We need to modify the API in pkg/apis/example/v1alpha1/examplekind_types.go with some custom fields for our CR. Open this file in a text editor. Add the following custom variables to ExamplekindSpec and ExamplekindStatus structs.

|

The variables in these structs are used to generate the data structures in the yaml spec for the Custom Resource, as well as variables we can later display in getting the status of the Custom Resource. |

6. After modifying the examplekind_types.go, regenerate the code.

operator-sdk generate k8s

|

What just got created? You always want to run the operator-sdk generate command after modifying the API in the _types.go file. This will regenerate the DeepCopy methods. |

Create a New Controller and Write Custom Code for it

7. Now add a controller to your operator.

operator-sdk add controller --api-version=example.kenzan.com/v1alpha1 --kind=Examplekind

|

What just got created? |

8. Replace the examplekind_controller.go file with the one in our completed repo. The new file contains the custom code that we’ve added to the generated skeleton.

Wait, what was in the custom code we just added?

If you want to know what is happening in the code we just added, read on. If not, you can skip to the next section to continue the tutorial.

To break down what we are doing in our examplekind_controller.go, lets first go back to what we are trying to accomplish:

-

Create an Examplekind deployment if it doesn’t exist

-

Make sure our count matches what we defined in our manifest

-

Update the status with our group and podnames.

To achieve these things, we’ve created three methods: one to get pod names, one to create labels for us, and last to create a deployment.

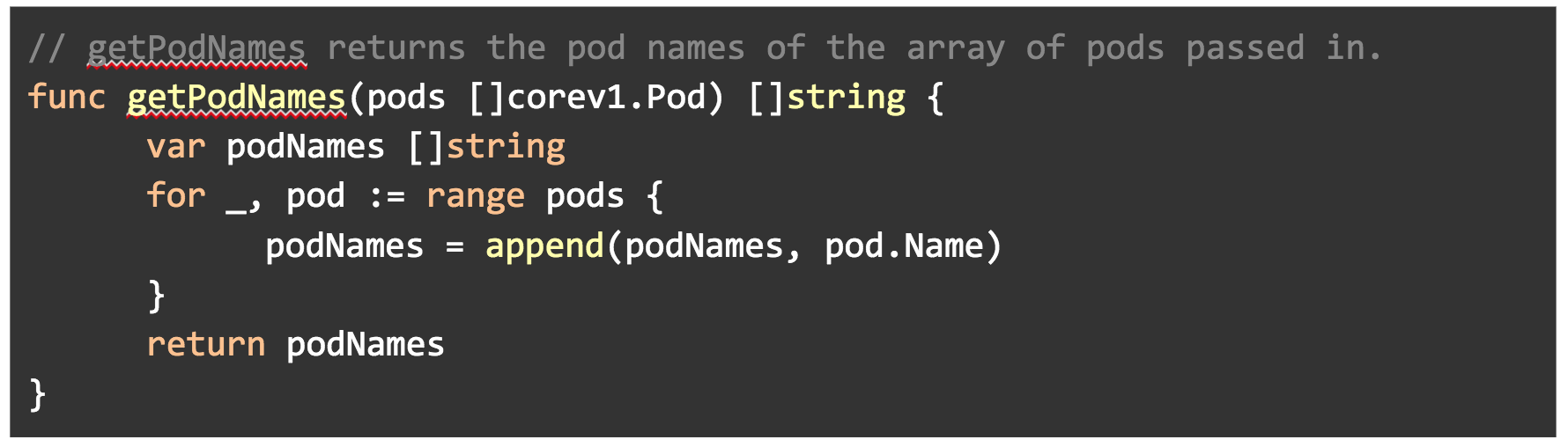

In getPodNames(), we are using the core/v1 API to get the names of pods and appending them to a slice.

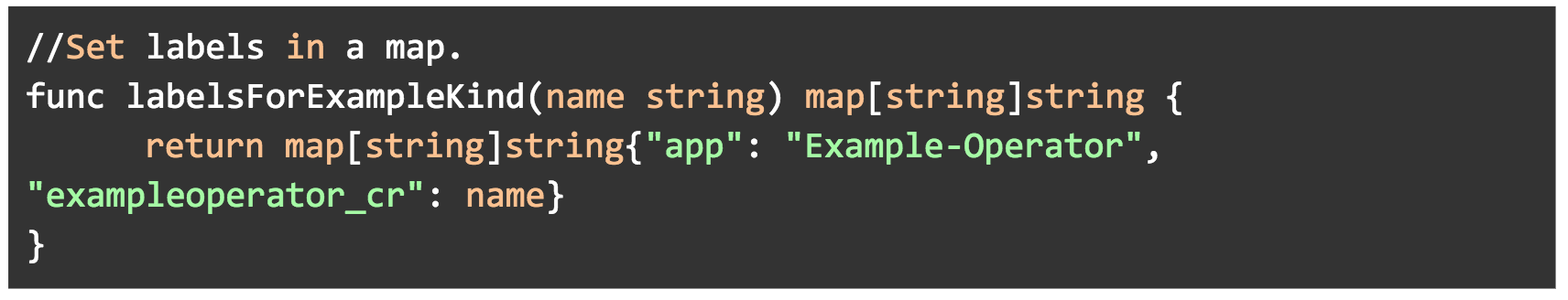

In labelsForExampleKind(), we are creating a label to be used later in our deployment. The operator name will be passed into this as a name value.

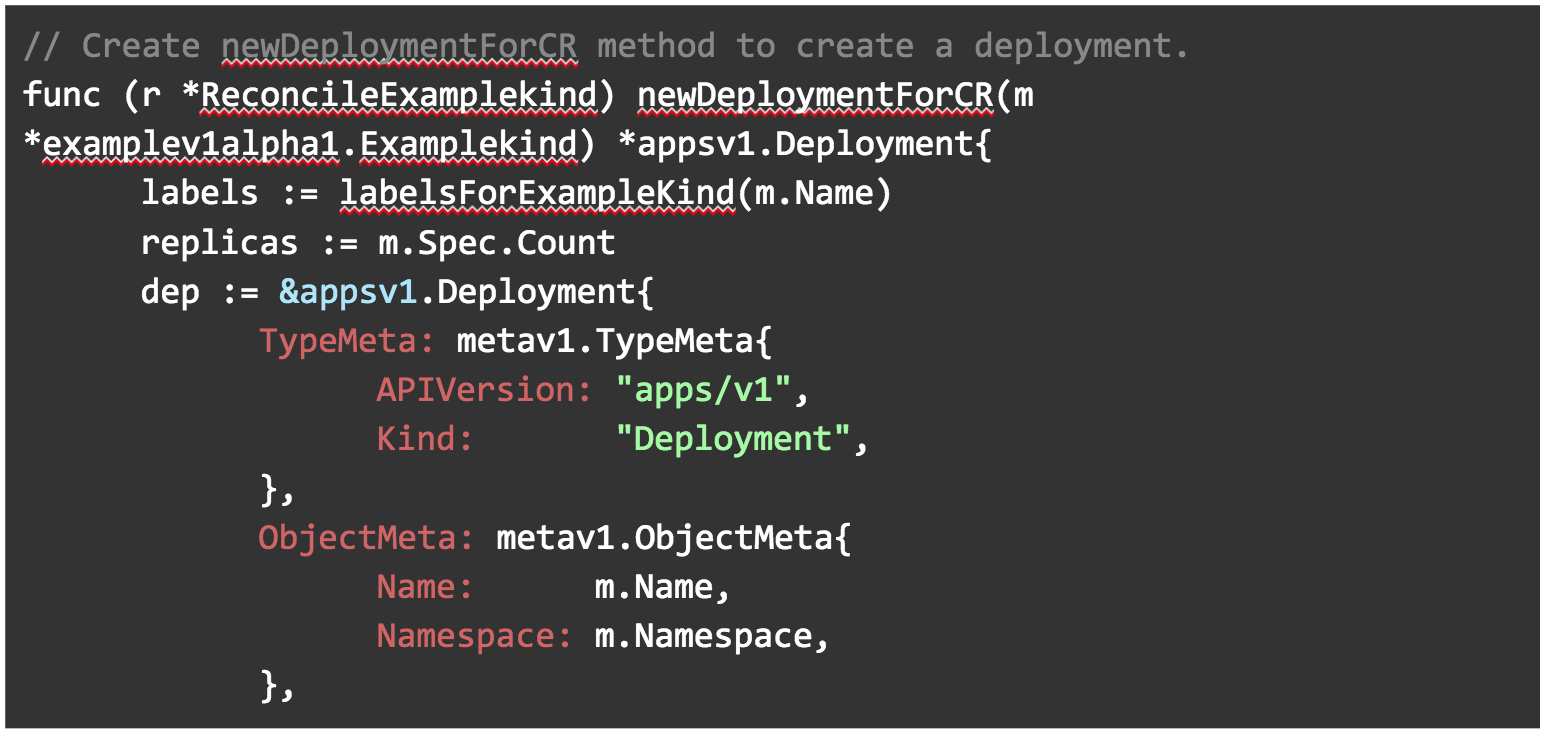

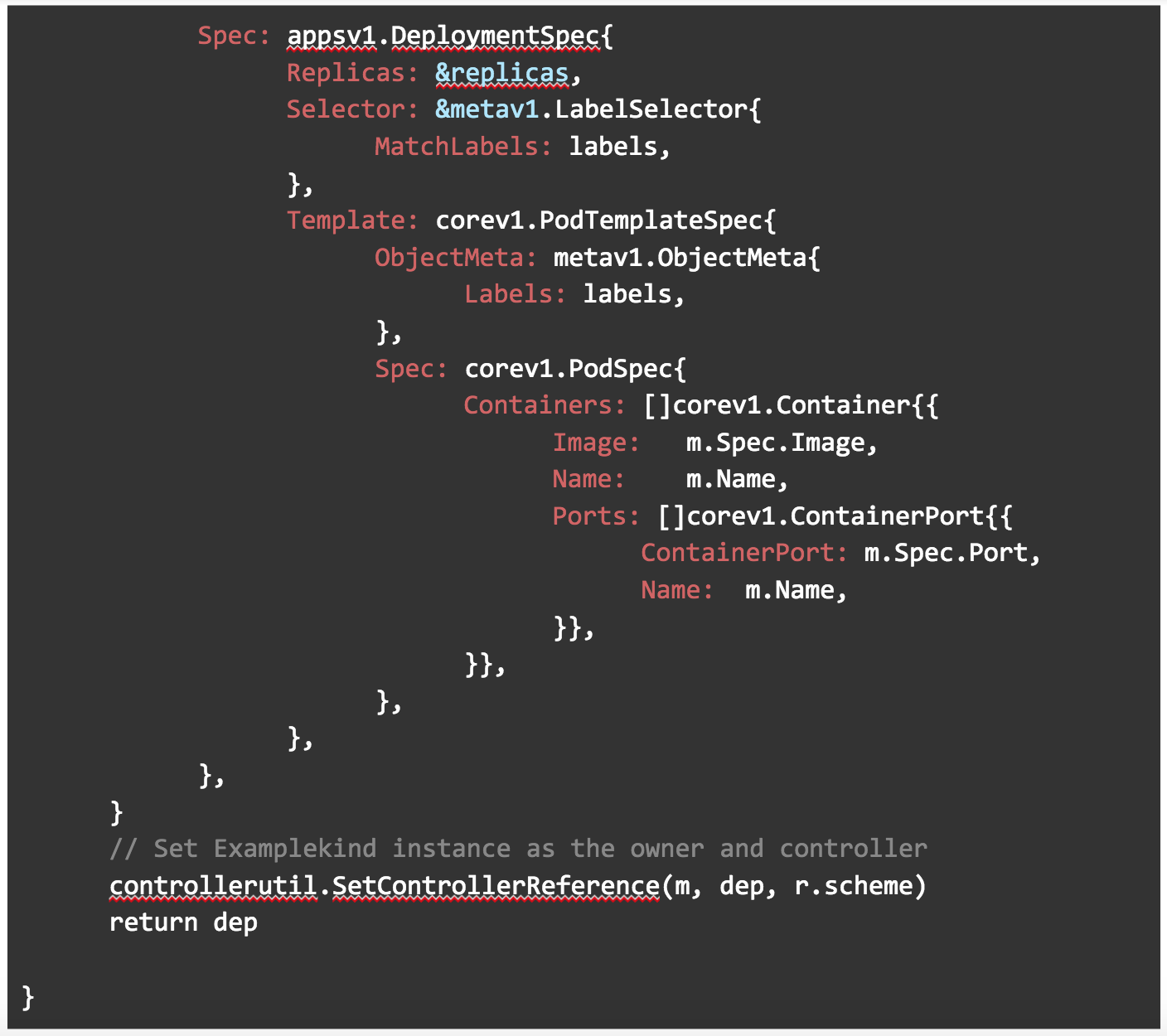

In newDeploymentForCR(), we are creating a deployment using the apps/v1 API. The label method is used here to pass in a label. It uses whatever image we specify in our manifest as you can see below in Image: m.Spec.Image. Replicas for this deployment will also use the count field we specified in our manifest.

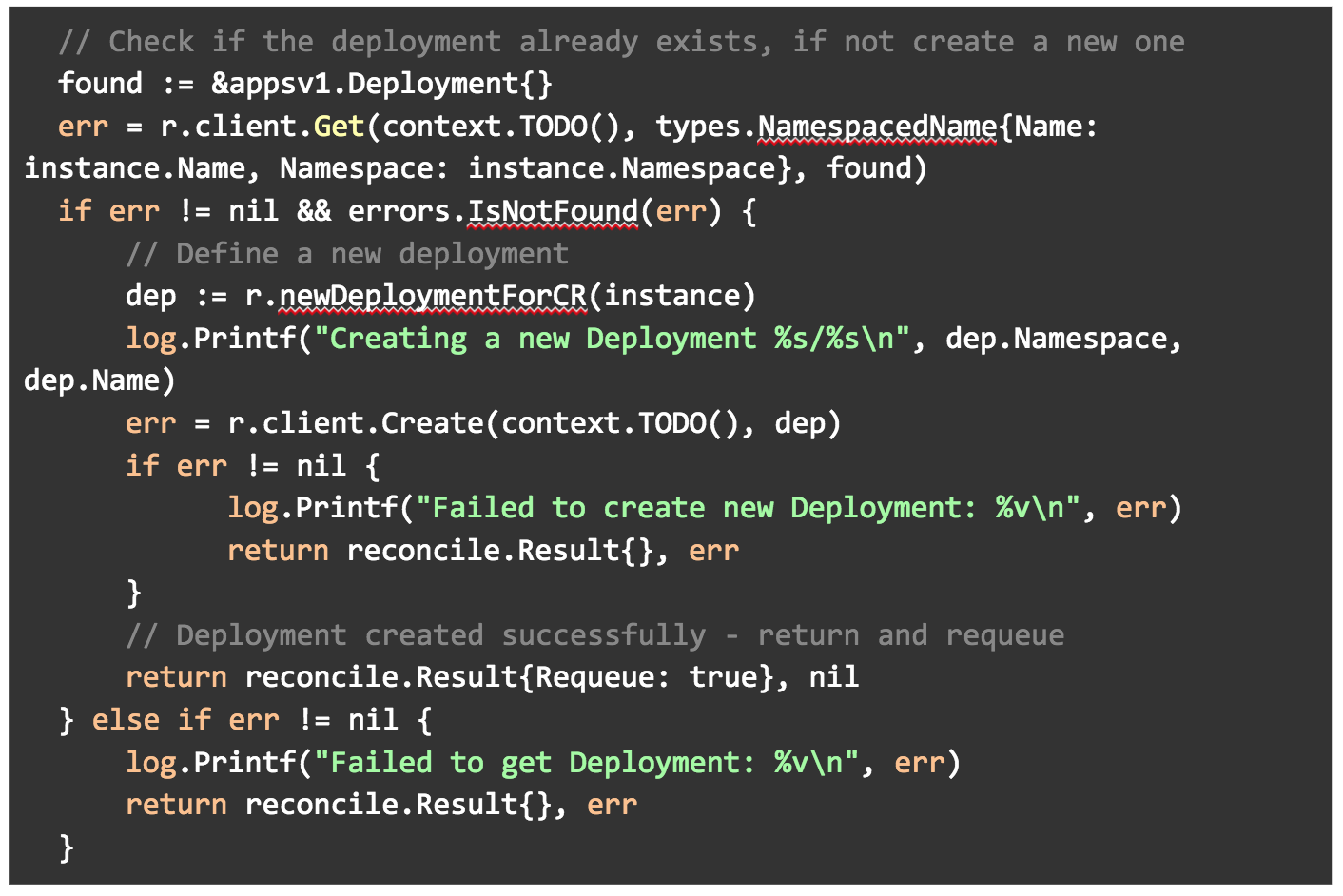

Then in our main Reconcile() method, we check to see if our deployment exists. If it does not, we create a new one using the newDeploymentForCR() method. If for whatever reason it cannot create a deployment, print an error to the logs.

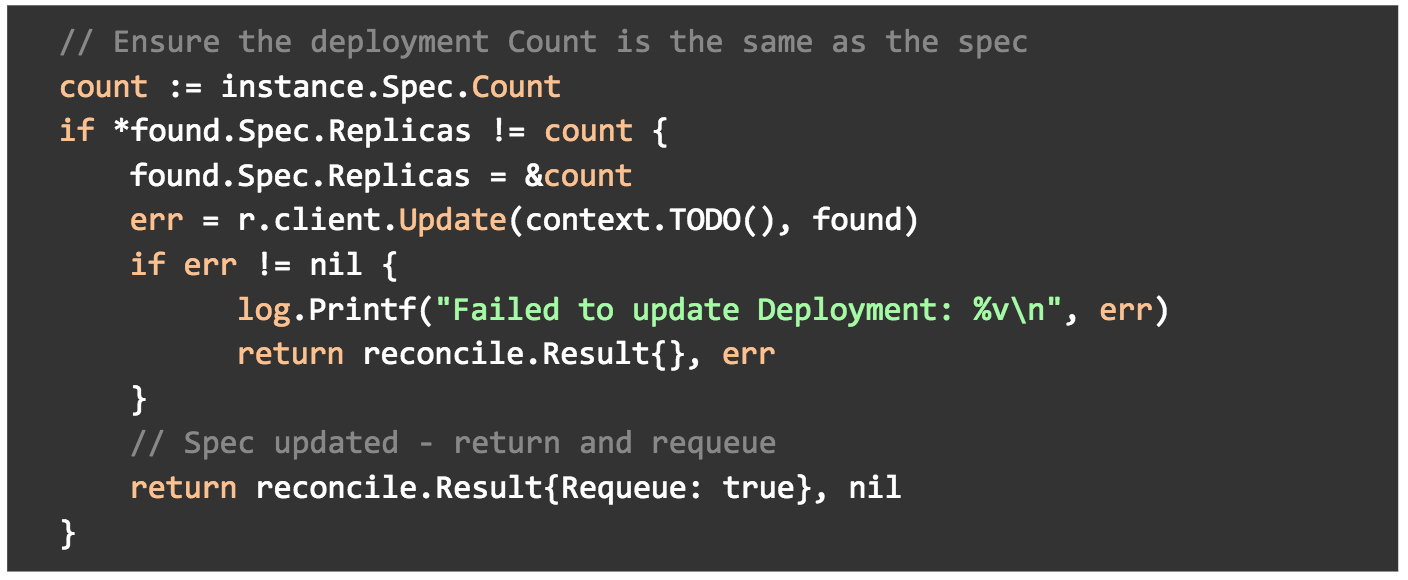

In the same Reconcile() method, we are also making sure that the deployment replica field is set to our count field in the spec of our manifest.

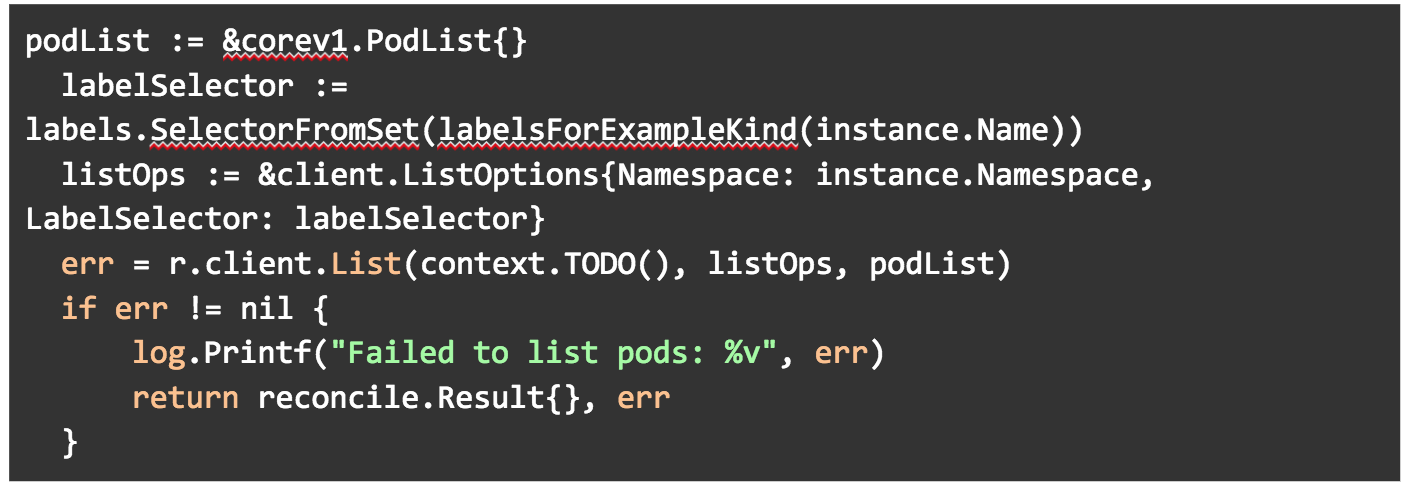

And we are getting a list of our pods that matches the label we created.

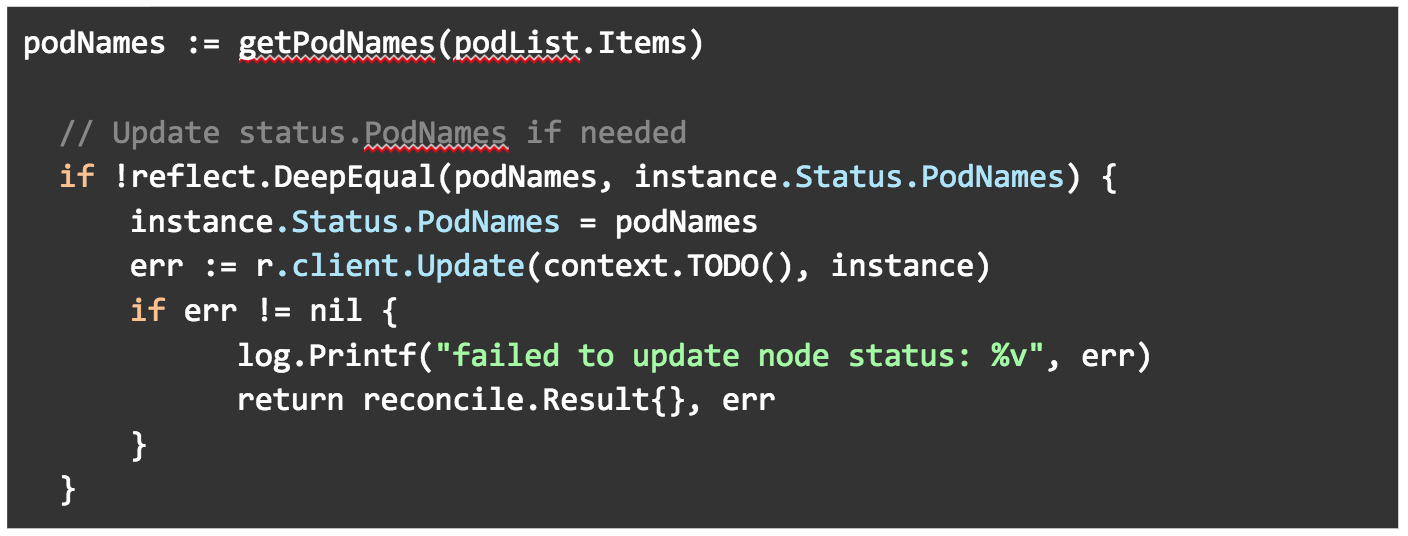

We are then passing the pod list into the getPodNames() method. We are making sure that the podNames field in our ExamplekindStatus ( in examplekind_types.go) is set to the podNames list.

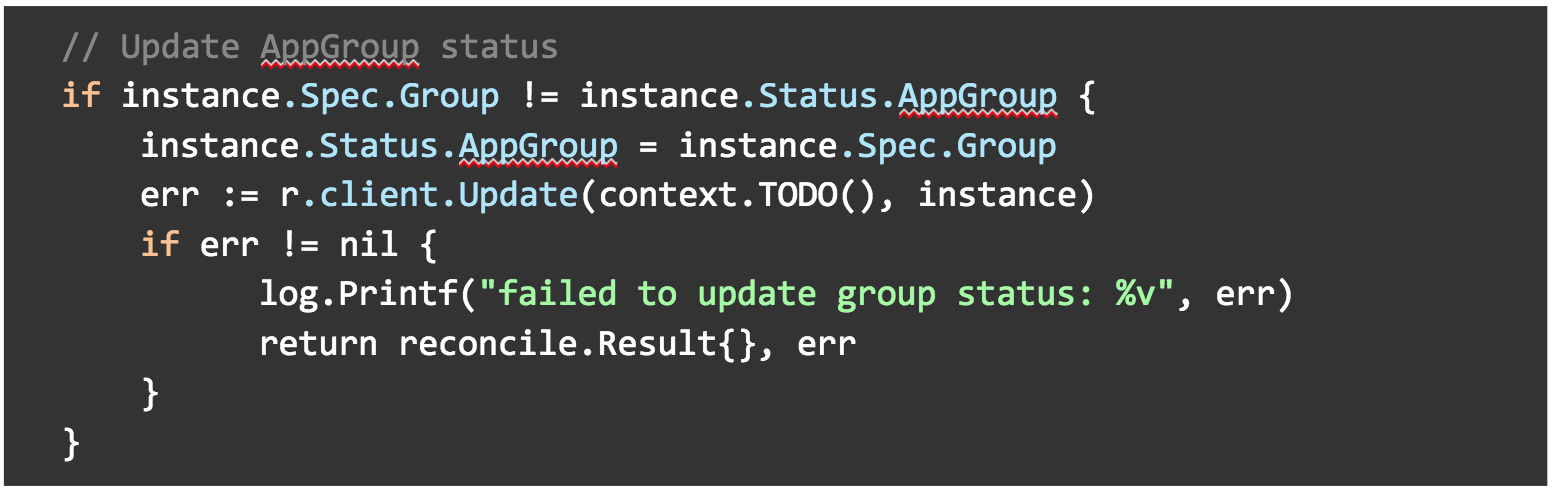

Finally, we are making sure the AppGroup in our ExamplekindStatus (in examplekind_types.go) is set to the Group field in our Examplekind spec (also in examplekind_types.go).

Deploy your Operator and Custom Resource

We could run the example-operator as Go code locally outside the cluster, but here we are going to run it inside the cluster as its own Deployment, alongside the Examplekind apps it will watch and reconcile.

9. Kubernetes needs to know about your Examplekind Custom Resource Definition before creating instances, so go ahead and apply it to the cluster.

kubectl create -f deploy/crds/example_v1alpha1_examplekind_crd.yaml

10. Check to see that the custom resource definition is deployed.

kubectl get crd

11. We will need to build the example-operator as an image and push it to a repository. For simplicity, we’ll create a public repository on your account on dockerhub.com.

a. Go to https://hub.docker.com/ and login

b. Click Create Repository

c. Leave the namespace as your username

d. Enter the repository as “example-operator”

e. Leave the visibility as Public.

f. Click Create

12. Build the example-operator.

operator-sdk build [Dockerhub username]/example-operator:v0.0.1

13. Push the image to your repository on Dockerhub (this command may require logging in with your credentials).

docker push [Dockerhub username]/example-operator:v0.0.1

14. Open up the deploy/operator.yaml file that was generated during the build. This is a manifest that will run your example-operator as a Deployment in Kubernetes. We need to change the image so it is the same as the one we just pushed.

a. Find image: REPLACE_IMAGE

b. Replace with image: [Dockerhub username]/example-operator:v0.0.1

15. Set up Role-based Authentication for the example-operator by applying the RBAC manifests that were previously generated.

kubectl create -f deploy/service_account.yaml

kubectl create -f deploy/role.yaml

kubectl create -f deploy/role_binding.yaml

16. Deploy the example-operator.

kubectl create -f deploy/operator.yaml

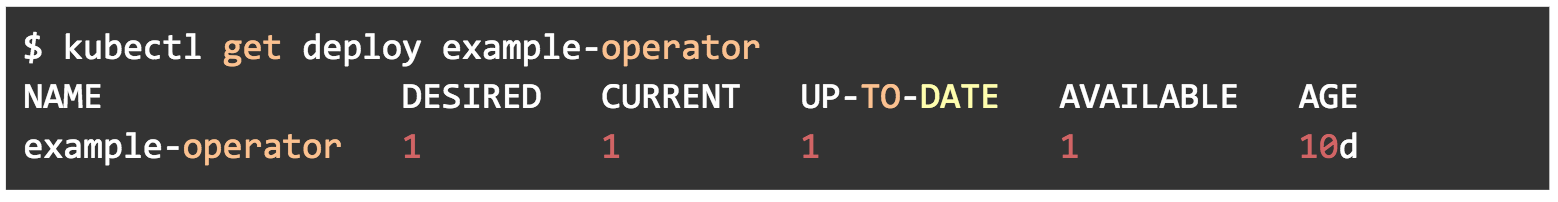

17. Check to see that the example-operator is up and running.

kubectl get deploy

18. Now we’ll deploy several instances of the Examplekind app for our operator to watch. Open up the deploy/crds/example_v1alpha1_examplekind_cr.yaml deployment manifest. Update fields so they appear as below, with name, count, group, image and port. Notice we are adding fields that we defined in the spec struct of our pkg/apis/example/v1alpha1/examplekind_types.go.

apiVersion: "example.kenzan.com/v1alpha1" kind: "Examplekind" metadata: name: "kenzan-example" spec: count: 3 group: Demo-App image: nginx port: 80

19. Apply the Examplekind app deployment.

kubectl apply -f deploy/crds/example_v1alpha1_examplekind_cr.yaml

20. Check that an instance of the Examplekind object exists in Kubernetes.

kubectl get Examplekind

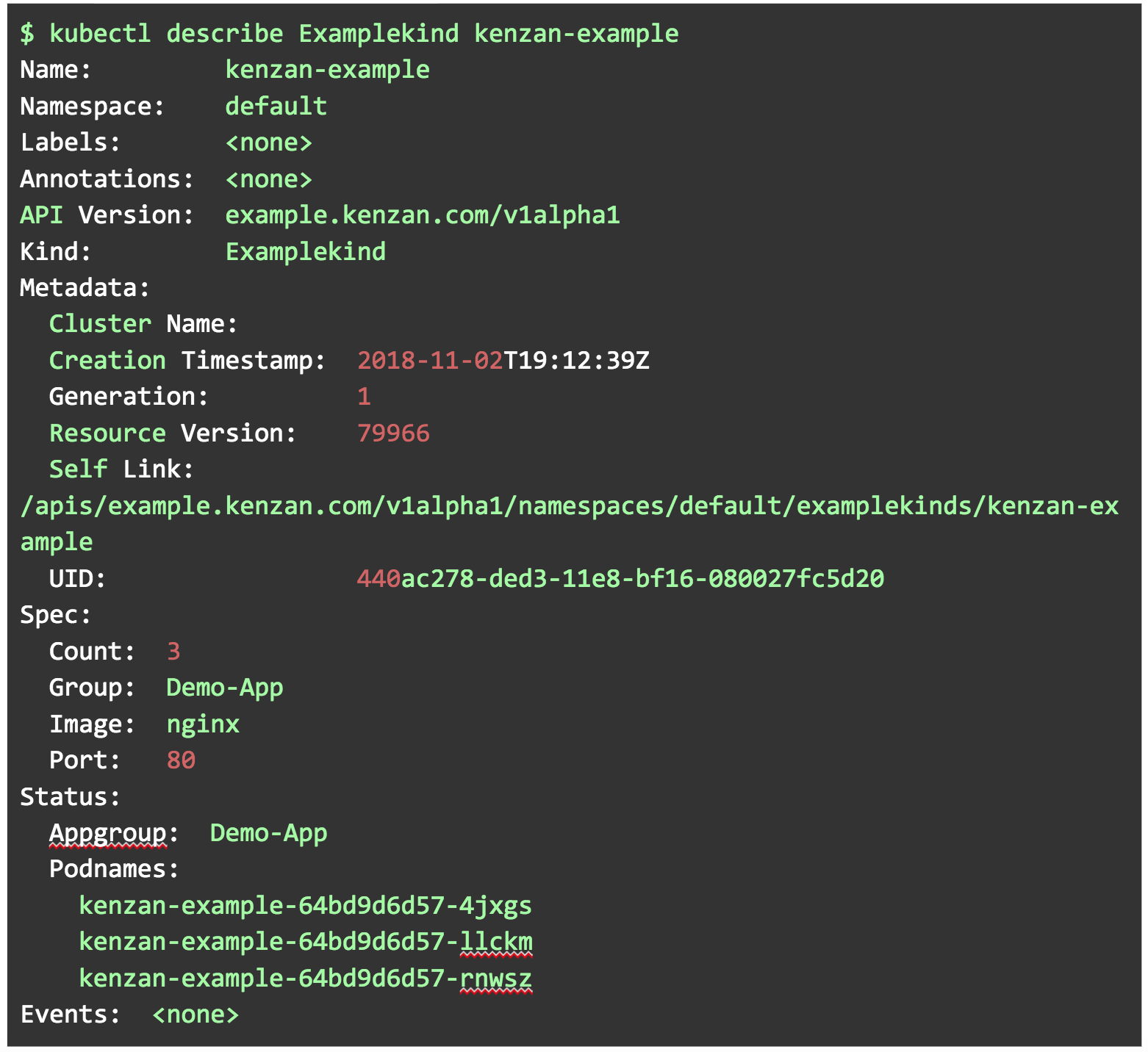

21. Let’s describe the Examplekind object to see if our status now shows as expected.

kubectl describe Examplekind kenzan-example

Note that the Status describes the AppGroup the instances are a part of (“Demo-App”), as well as enumerates the Podnames.

22. Within the deploy/crds/example_v1alpha1_examplekind_cr.yaml, change the count to be 1 pod. Apply the deployment again.

kubectl apply -f deploy/crds/example_v1alpha1_examplekind_cr.yaml

23. Based on the operator reconciling against the spec, you should now have one instance of kenzan-example.

kubectl describe Examplekind kenzan-example

Well done. You’ve successfully created an example-operator, and become familiar with all the pieces and parts needed in the process. You may even have a few ideas in your head about which stateful applications you could potentially automate the management of for your organization, getting away from manual intervention. Take a look at the following links to build on your Operator knowledge:

-

See some of the existing operators available for specific applications.

-

The CoreOS introduction blog post includes some valuable best practices on building operators

-

The K8s core API is key to writing custom code for your operators

-

The operator-sdk cli reference shows CLI commands

Toye Idowu is a Platform Engineer at Kenzan Media.