What Is Machine Learning

I am partial towards this definition by Nvidia:

“Machine Learning at its most basic is the practice of using algorithms to parse data, learn from it, and then make a determination or prediction about something in the world.”

The first step to understanding machine learning is understanding what kinds of problems it intends to solve, based on the foregoing definition. It is principally concerned with mapping data to mathematical models — allowing us to make inferences (predictions) about measurable phenomena in the world. From the machine learning model’s predictions, we can then make rational, informed decisions with increased empirical certainty.

Take, for example, the adaptive brightness on your phone screen. Modern phones have front- and rear-facing cameras that allow the phone to constantly detect the intensity of ambient light, and then adjust the brightness of the screen to make it more pleasant for viewing. But, depending on an individual’s taste, they might not like the gradient preselected by the software, and have to constantly fiddle with the brightness by hand. In the end, they turn off adaptive brightness altogether!

What if, instead, the phone employed machine learning software that registered the intensity of ambient light and the brightness that the user selected by hand as one example of their preference? Over time, the phone could build a model of an individual’s preferences, and then make predictions of how to set the screen brightness across a full continuum of ambient light conditions. (This is a real thing in the next version of Android.)
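To make the idea concrete, here is a minimal sketch of how such a preference model might be fitted. It assumes the relationship between ambient light and preferred brightness is roughly a straight line; the logged values, the 0-100 brightness scale, and the function names are all made up for illustration and are not how any real phone does it.

```python
# Hypothetical log of manual adjustments:
# (ambient light in lux, brightness the user picked on a 0-100 slider).
manual_adjustments = [(10, 20), (100, 35), (300, 55), (600, 80)]


def fit_line(points):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in points)
    variance = sum((x - mean_x) ** 2 for x, _ in points)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x


def predict_brightness(lux, slope, intercept):
    """Predict a brightness for any light level, clamped to the slider range."""
    return max(0.0, min(100.0, slope * lux + intercept))


slope, intercept = fit_line(manual_adjustments)
# A light level the user never adjusted at by hand:
print(round(predict_brightness(200, slope, intercept), 1))
```

Each manual adjustment is one data point, and the fitted line fills in the light levels in between.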

As you can imagine, machine learning could be deployed for all kinds of data relationships susceptible to modeling, which would allow programmers and inventors to increasingly automate decision-making. To be sure, a thorough understanding of the methodology of machine learning requires a fairly deep appreciation for statistical sampling methods, data modeling, linear algebra, and other specialized disciplines.

I will instead try to offer a brief overview of the important terms and concepts in machine learning, and offer an operative (but fictional) example from my own time as a discovery lawyer. In closing, I will discuss a few of the common problems associated with machine learning.

Nuts & Bolts: Key Terms

Feature. A feature represents the variable that you change in order to observe and measure the outcome. Features are the inputs to a machine learning scheme; in other words, a feature is like the independent variable in a science experiment, or the x variable on a line graph.

If you were designing a machine learning model that would classify emails as “important” (e.g., as Gmail does with labels), the model could take more than one feature as input: whether the sender is in your address book (yes or no); whether the email contains a particular phrase, like “urgent”; and whether the receiver has previously marked emails from the sender as important, among other measurable features relating to “importance.”

Features need to be measurable, in that they can be mapped to numeric values for the data model.
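As a rough illustration of what “mapped to numeric values” can mean, the sketch below turns the three email features just described into a list of numbers. The function name, the argument names, and the sample email are hypothetical.

```python
def email_features(sender, body, address_book, senders_marked_important):
    """Map one email to numeric features (1.0 = yes, 0.0 = no)."""
    return [
        1.0 if sender in address_book else 0.0,              # known sender?
        1.0 if "urgent" in body.lower() else 0.0,            # contains "urgent"?
        1.0 if sender in senders_marked_important else 0.0,  # marked important before?
    ]


features = email_features(
    sender="boss@example.com",
    body="URGENT: please review the draft today",
    address_book={"boss@example.com"},
    senders_marked_important=set(),
)
print(features)  # [1.0, 1.0, 0.0]
```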

Label. The label refers to the variable that is observed or measured in response to the various features in a model. For example, if a model were meant to predict college applicant acceptance/rejection based on two features (SAT score and GPA), the label would indicate yes (1) or no (0) for each example fed into the model. A label is like the dependent variable in a science experiment, or the Y variable on a line graph.

Example. An example is one data entry (like a row in an Excel spreadsheet) that includes all features and their associated values. An example can be labeled (it includes the label, i.e., the Y variable, with its value), or unlabeled (the value of the Y variable is unknown).
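One way to picture the difference between labeled and unlabeled examples, using the college admissions illustration above, is sketched here. The class name, field names, and applicant values are invented for the sketch.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Example:
    sat_score: int                  # feature
    gpa: float                      # feature
    accepted: Optional[int] = None  # label: 1 = yes, 0 = no, None = unknown

    @property
    def is_labeled(self) -> bool:
        return self.accepted is not None


examples = [
    Example(1450, 3.9, accepted=1),
    Example(1010, 2.7, accepted=0),
    Example(1300, 3.5),  # outcome not known yet
]

training_set = [e for e in examples if e.is_labeled]    # used to train a model
to_predict = [e for e in examples if not e.is_labeled]  # the model fills these in
print(len(training_set), len(to_predict))  # 2 1
```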

Training. Broadly, training is the act of feeding a mathematical model with labeled examples, so that the model can infer and make predictions for unlabeled examples.

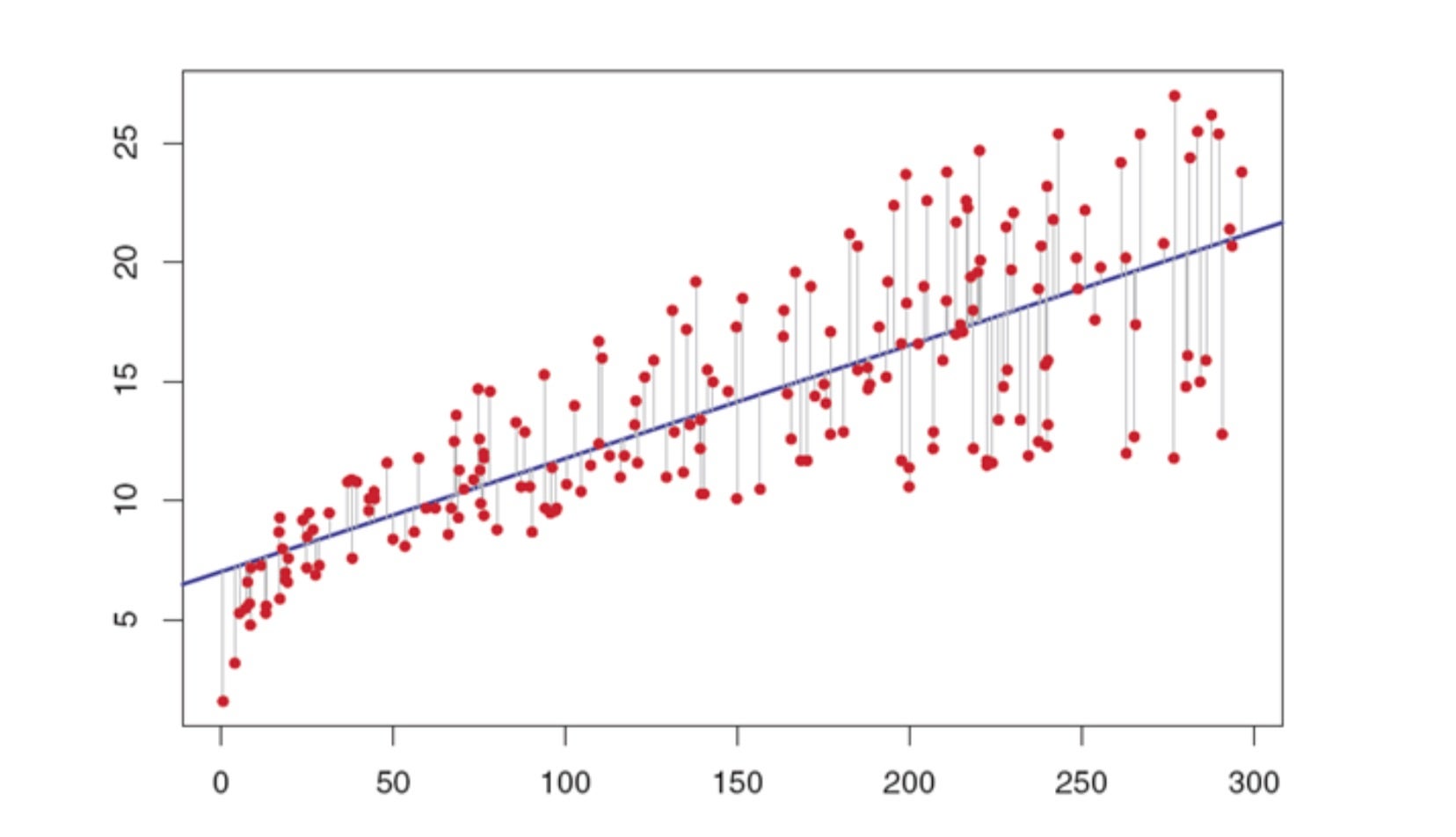

Loss. Loss is the difference between the model’s prediction and the label on a single example. Statistical models aim to reduce loss as much as possible. For example, if you are fitting a line through a cloud of data points to show the linear growth on the Y-axis as X varies, you would want the line for which the total loss across all the points is as small as possible. Humans can do this intuitively, but computers can be automated to try different slopes until they arrive at the best mathematical answer.
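The “try different slopes” idea can be sketched in a few lines. This is a simplified illustration, not a production method: the data points are made up, the line is forced through the origin so that the slope is the only thing to tune, and the loss is squared (an assumption on my part) so that overshooting and undershooting do not cancel each other out.

```python
# Made-up (x, y) data that roughly follows y = 2x.
points = [(1, 2.1), (2, 3.9), (3, 6.2), (4, 7.8)]


def total_squared_loss(slope, data):
    """Sum over all examples of (prediction - label) squared."""
    return sum((slope * x - y) ** 2 for x, y in data)


# Candidate slopes 0.00, 0.01, ..., 4.00; keep whichever has the smallest loss.
candidate_slopes = [s / 100 for s in range(0, 401)]
best_slope = min(candidate_slopes, key=lambda s: total_squared_loss(s, points))

print(best_slope, round(total_squared_loss(best_slope, points), 3))
```

Real training procedures search far more cleverly than this brute-force sweep, but the goal is the same: find the parameters with the lowest total loss.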

Generalization/Overfitting. Overfitting is an outcome in which a model does not accurately predict testing data (i.e., doesn’t “generalize” well) because the model tries to fit the training data too precisely. This problem can result from poor sampling.
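A toy sketch of overfitting, under invented data: the “true” rule is that the label is 1 when x is at least 50, but two training examples (x = 30 and x = 70) carry noisy labels. A model that simply memorizes the nearest training point scores perfectly on the training data and poorly on new data, while a cruder hand-picked threshold rule generalizes better.

```python
# Training data with two noisy labels (x = 30 and x = 70).
train = [(10, 0), (20, 0), (30, 1), (40, 0), (60, 1), (70, 0), (80, 1), (90, 1)]
# Fresh test data that follows the true rule (label 1 when x >= 50).
test = [(14, 0), (28, 0), (32, 0), (68, 1), (72, 1), (86, 1)]


def memorizing_model(x, training):
    """Copy the label of the closest training point, noise included."""
    _, label = min(training, key=lambda point: abs(point[0] - x))
    return label


def simple_model(x, training=None):
    """A hand-picked threshold rule that ignores individual training points."""
    return 1 if x >= 50 else 0


def accuracy(model, data, training):
    return sum(model(x, training) == y for x, y in data) / len(data)


for name, model in [("memorizing", memorizing_model), ("simple", simple_model)]:
    print(name, accuracy(model, train, train), round(accuracy(model, test, train), 2))
```

The memorizing model is perfect on the data it has seen and wrong on most of the test points that sit near the noisy examples.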

Types of Models

Regression. A regression model is a model that tries to predict a value along some continuum. For example, a model might try to predict the number of people who will move to California; or the probability that a person will get a dog; or the resale price of a used bicycle on Craigslist.

Classification. A classification model is a model that predicts discrete outcomes — in a sense, it sorts inputs into various buckets. For example, a model might look at an image and determine if it is a donut, or not a donut.

Model Design: Linear, or…?

Conceptually, the simplest models are those in which the label can be predicted with a line (i.e., are linear). As you can imagine, some distributions cannot naturally be mapped along a continuous line, and therefore you need other mathematical tools to fit a model to the data. One simple machine learning tool to deal with nonlinear classification problems is feature crosses, which is merely adding a new feature that is the cross product (multiplication) of other existing features.
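Here is a small sketch of why a feature cross can help. In this made-up data set a point belongs to class 1 when its two coordinates share the same sign, which no single straight line through the original two features can separate. Once the product of the two features is added as a third feature, a very simple linear rule works. The weights are hand-picked for illustration rather than learned.

```python
# Four points, one per quadrant; label 1 when x1 and x2 share a sign.
points = [(1, 1), (-1, -1), (1, -1), (-1, 1)]
labels = [1, 1, 0, 0]


def with_cross(x1, x2):
    """Original features plus the crossed feature x1 * x2."""
    return (x1, x2, x1 * x2)


def linear_classifier(features, weights, bias=0.0):
    """Predict 1 when the weighted sum of the features is positive."""
    score = sum(w * f for w, f in zip(weights, features)) + bias
    return 1 if score > 0 else 0


weights = (0.0, 0.0, 1.0)  # hand-picked: rely only on the crossed feature
predictions = [linear_classifier(with_cross(x1, x2), weights) for x1, x2 in points]
print(predictions)  # [1, 1, 0, 0]
```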

On the more complex side, models can rely on “neural networks,” so called because they mirror the arrangement of neurons in the human brain, to model complex linear and non-linear relationships. These networks consist of stacked layers of nodes, each representing a weighted sum with some bias from various input features (with potentially a non-linear layer added in), that ultimately yield an output after the series of transformations is complete. Now onto a (simpler) real life example.
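Before moving on, the layered weighted sums described above can be sketched as a tiny forward pass. The network shape (two inputs, two hidden nodes, one output), the weights, and the choice of ReLU and sigmoid as the non-linear steps are all arbitrary assumptions for illustration; a real network would learn its weights during training.

```python
import math


def relu(value):
    return max(0.0, value)


def sigmoid(value):
    return 1.0 / (1.0 + math.exp(-value))


def dense_layer(inputs, weights, biases, activation):
    """Each node outputs activation(weighted sum of its inputs + bias)."""
    return [
        activation(sum(w * x for w, x in zip(node_weights, inputs)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]


# Arbitrary, hand-written weights: 2 inputs -> 2 hidden nodes -> 1 output.
hidden_weights = [[0.5, -0.2], [0.1, 0.8]]
hidden_biases = [0.0, -0.1]
output_weights = [[1.0, -1.0]]
output_biases = [0.2]


def forward(inputs):
    hidden = dense_layer(inputs, hidden_weights, hidden_biases, relu)
    return dense_layer(hidden, output_weights, output_biases, sigmoid)[0]


print(round(forward([1.0, 2.0]), 3))
```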

Real Life Example: An Attorney’s Perspective

Perhaps only 20+ years ago, the majority of business records (documents) were kept on paper, and it was the responsibility of junior attorneys to sift through literal reams of paper (most of it irrelevant) to find the proverbial “smoking gun” evidence in all manner of cases. In 2018, nearly all business records are electronic. The stage of litigation in which documents are produced, exchanged, and examined is called “discovery.”

As most business records are now electronic, the process of discovery is now aided and facilitated by the use of computers. This is not a fringe issue for businesses. Every industry is subject to one or more federal or state strictures relating to document retention (prohibitions on the destruction of business records), which exist in order to check and enforce compliance with the applicable regulatory scheme (tax, environmental, public health, occupational safety, etc.).

Document retention also becomes extremely important in litigation — when a dispute or lawsuit arises between two parties — because there are evidentiary and procedural rules that aim to preserve all documents (information) that are relevant and responsive to the issues in the litigation. Critically, a party that fails to preserve business records in accordance with the court’s rules is subject to stiff penalties, including fines, adverse instructions to a jury, or the forfeit of some or all claims.

A Common but High-Stakes Regulatory Problem Solved by Machine Learning

So, imagine the government is investigating the merger between two broadband companies, and the government suspects that the two competitors engaged in illegal coordination to raise the price of broadband service and simultaneously lower quality. Before it approves the merger, the government wants to be certain that the two parties did not engage in unfair and deceptive business practices.

So, the government commands the two parties to produce for inspection, electronically, every business record (email, internal documents, transcripts of meetings…) that includes communications directly between the two parties, contains discussions relating to the merger, and/or relates to the pricing of broadband services.

As you might imagine, the total corpus of ALL documents controlled by the two companies borders on the hundreds of millions. That is a lot of paper to sift through for the junior attorneys, and given the government’s very specific criteria, it will take a long time for a human to read each document, determine its purpose, and decide whether its contents merit inclusion in the discovery set. The companies’ lawyers are also concerned that if they over-include non-responsive documents (i.e., just dump hundreds of millions of documents on the government investigator), they will be deemed to not have complied with the order, and lose out on the merger.

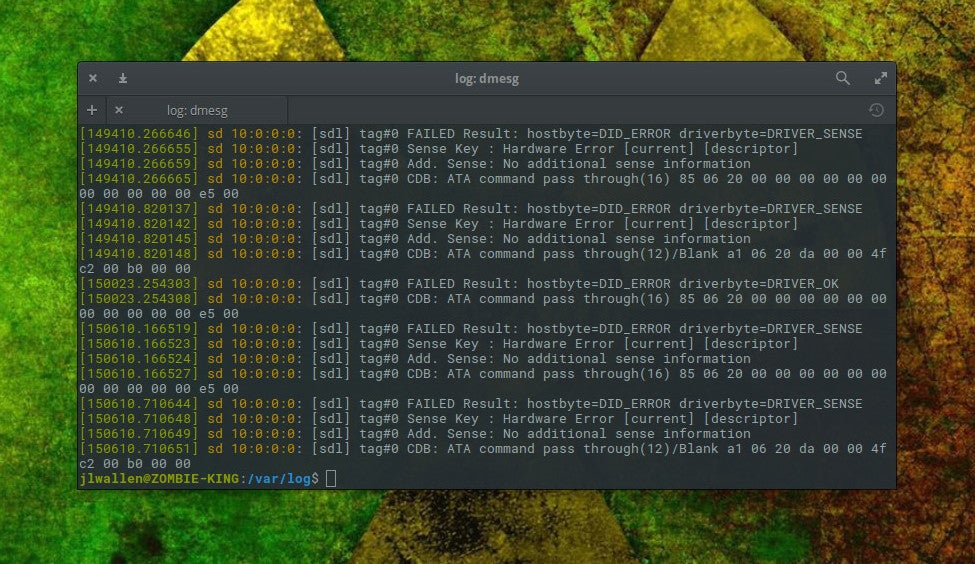

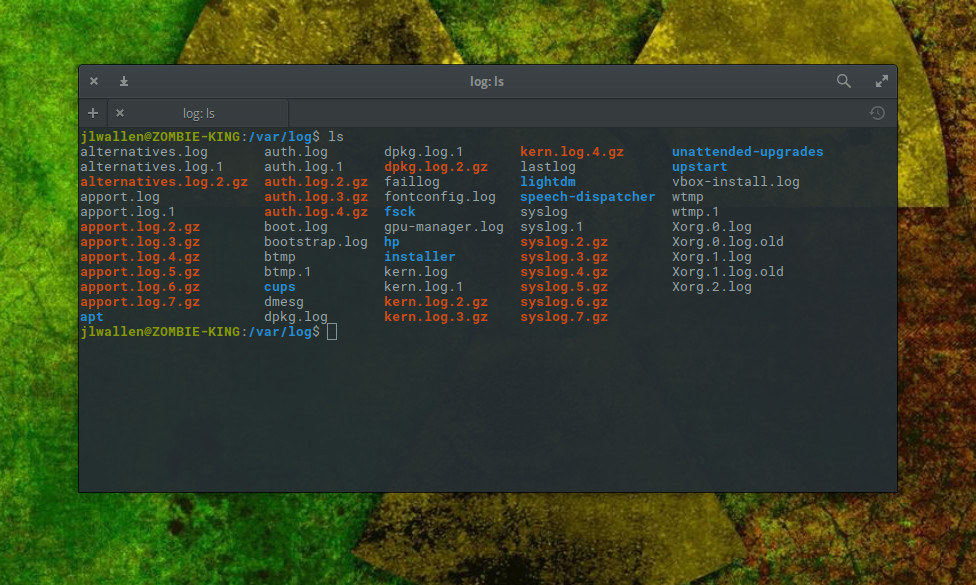

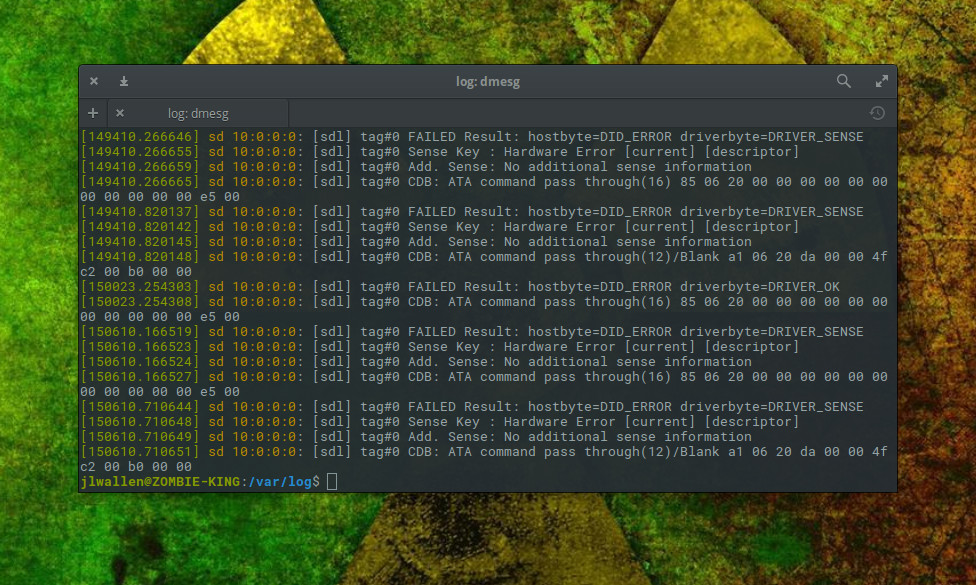

Aha! But our documents can be stored and searched electronically, so maybe the lawyers can just design a bunch of keyword searches to pick out every document that has the term “pricing”, among other features, before having to review the document for relevancy. This is a huge improvement, but it is still slow, as the lawyers have to anticipate a lot of keyword searches, and still need to read the documents themselves. Enter the machine learning software.
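For comparison, the keyword approach might look something like the sketch below. The document IDs, texts, and keyword list are invented, and real review platforms offer far richer search syntax than this whole-word match.

```python
# Hypothetical mini-corpus: document ID -> text.
documents = {
    "doc-001": "Proposed pricing tiers for the new broadband plan",
    "doc-002": "Lunch order for Friday",
    "doc-003": "Merger timeline and PRICING alignment notes",
}
keywords = {"pricing", "merger"}


def matches_keywords(text, keywords):
    """True if any keyword appears as a whole word (case-insensitive)."""
    words = set(text.lower().split())
    return bool(words & keywords)


hits = [doc_id for doc_id, text in documents.items() if matches_keywords(text, keywords)]
print(hits)  # ['doc-001', 'doc-003']
```

Every hit still has to be read by a person, and any document that discusses pricing without using an anticipated keyword is missed entirely.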

With modern tools, lawyers can load the entire body of documents into one database. First, they will code a representative sample (a number of “examples”) for whether the document should or should not be included in the production of records to the government. These labeled examples will form the basis of the training material to be fed to the model. After the model has been trained, you can provide unlabeled examples (i.e., emails that haven’t been coded for relevance yet), and the machine learning model will predict the probability that the document would have been coded as relevant by a person, based on all the historical examples it has been fed.
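A minimal sketch of that train-then-predict loop follows. I do not know which algorithms commercial review tools actually use; this example swaps in a small naive Bayes text classifier purely because it is short, and the coded sample is invented.

```python
import math
from collections import Counter


def tokenize(text):
    return text.lower().split()


def train(labeled_docs):
    """labeled_docs: (text, label) pairs; 1 = responsive, 0 = not responsive.
    Assumes the sample contains at least one document of each kind."""
    word_counts = {0: Counter(), 1: Counter()}
    doc_counts = Counter()
    for text, label in labeled_docs:
        doc_counts[label] += 1
        word_counts[label].update(tokenize(text))
    vocabulary = set(word_counts[0]) | set(word_counts[1])
    return word_counts, doc_counts, vocabulary


def probability_responsive(text, model):
    """Estimated probability that a reviewer would code this text as responsive."""
    word_counts, doc_counts, vocabulary = model
    log_score = {}
    for label in (0, 1):
        total_words = sum(word_counts[label].values())
        score = math.log(doc_counts[label] / sum(doc_counts.values()))
        for word in tokenize(text):
            if word in vocabulary:  # words never seen in training are ignored
                # Add-one smoothing so an unseen word/label pair is not zero.
                count = word_counts[label][word] + 1
                score += math.log(count / (total_words + len(vocabulary)))
        log_score[label] = score
    return 1.0 / (1.0 + math.exp(log_score[0] - log_score[1]))


# Hypothetical human-coded sample.
coded_sample = [
    ("pricing proposal for merger partner", 1),
    ("broadband pricing call with competitor", 1),
    ("lunch order for friday", 0),
    ("office holiday party schedule", 0),
]
model = train(coded_sample)
print(round(probability_responsive("draft pricing memo for the merger", model), 2))
print(round(probability_responsive("friday lunch schedule", model), 2))
```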

On that basis, you might be able to confidently produce/exclude a million documents, while having human-coded only 0.1% of those as a training set. In practice, this might mean automatically producing all the documents above some probability threshold, and having a human manually review anything that the model is unsure about. This can result in huge cost savings in time and human capital. Anecdotally, I recall someone expressing doubt to me that the FBI could have reviewed all of Hillary Clinton’s emails in a scant week or two. With machine learning and even a small team of people, the task is actually relatively trivial.
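The threshold-based workflow described above can be sketched as a simple triage step. The cut-off values (0.9 and 0.1), the document IDs, and the scores are assumptions chosen for illustration; in practice the thresholds would be a judgment call for the legal team.

```python
def triage(scored_docs, produce_above=0.9, exclude_below=0.1):
    """Sort (doc_id, probability) pairs into three queues."""
    queues = {"produce": [], "exclude": [], "human_review": []}
    for doc_id, probability in scored_docs:
        if probability >= produce_above:
            queues["produce"].append(doc_id)       # confidently responsive
        elif probability <= exclude_below:
            queues["exclude"].append(doc_id)       # confidently not responsive
        else:
            queues["human_review"].append(doc_id)  # the model is unsure
    return queues


# Hypothetical model scores for four documents.
scored = [("doc-001", 0.97), ("doc-002", 0.03), ("doc-003", 0.55), ("doc-004", 0.91)]
queues = triage(scored)
print(queues)
```

Only the middle queue needs human eyes, which is where the savings come from.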

Upside, Downsides

Bias. It is important to underscore that machine learning techniques are not infallible. Biased selection of the training examples can result in bad predictions. Consider, for example, our discovery example above. Let’s say one of our errant document reviewers rushed through his batch of 1,000 documents, and just haphazardly picked yes or no, nearly at random. Now, we can no longer expect that the prediction model will accurately identify whether future examples fit the government’s express criteria.

The discussion of “bias” in machine learning can also relate to invidious human discrimination. In one famous example, a Twitter chatbot began to make racist and xenophobic tweets, which is a socially unacceptable outcome, even though the statistical model itself cannot be said to have evil intent. Although this short primer is not an appropriate venue for the topic, policymakers should remain wary of drawing conclusions based on models whose inputs are not scrutinized and understood.

Job Displacement. In the case of our junior attorneys sequestered in the basement sifting through physical paper, machine learning has enabled them to shift to more intellectually redeeming tasks (like brewing coffee and filling out time-sheets). But, on the flip side, you no longer need so many junior lawyers, since their previous scope of work can now largely be automated. And, in fact, the entire industry of contract document review attorneys is seeing incredible consolidation and shrinkage. Looking towards the future, our leaders will have to contemplate how to either protect or redistribute human labor in light of such disruption.

Links/Resources/Sources

Framing: Key ML Terminology | Machine Learning Crash Course | Google Developers — developers.google.com

What is Machine Learning? – An Informed Definition — www.techemergence.com

The Risk of Machine-Learning Bias (and How to Prevent It) — sloanreview.mit.edu

This article was produced in partnership with Holberton School and originally appeared on Medium.