The landscape of applications is quickly changing. Many platforms are migrating to containerized applications… and with good cause. An application wrapped in a bundled container is easier to install, includes all the necessary dependencies, doesn’t directly affect the host platform’s libraries, automatically updates (in some cases), and (in most cases) is more secure than a standard application. Another benefit of these containerized applications is that they are universal (i.e., the same package installs on Ubuntu Linux or Fedora Linux, without having to convert a .deb package to an .rpm).

As of now, there are two main universal package systems: Snap and Flatpak. Both function in a similar fashion, but one is found by default on Ubuntu-based systems (Snap) and the other on Fedora-based systems (Flatpak). It should come as no surprise that both can be installed on either type of system. So if you want to run Snaps on Fedora, you can. If you want to run Flatpak on Ubuntu, you can.

I will walk you through the process of installing and using Flatpak on Ubuntu 18.04. If your platform of choice is Fedora (or a Fedora derivative), you can skip the installation process.

Installation

The first thing to do is install Flatpak. The process is simple. Open up a terminal window and follow these steps:

- Add the necessary repository with the command sudo add-apt-repository ppa:alexlarsson/flatpak.
- Update apt with the command sudo apt update.
- Install Flatpak with the command sudo apt install flatpak.
- Install Flatpak support for GNOME Software with the command sudo apt install gnome-software-plugin-flatpak.
- Reboot your system.
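If you’d rather not type the steps one at a time, they can be collected into a small shell function. What follows is only a sketch: the commands are copied from the steps above, while the function name install_flatpak and the closing reminder message are my own additions for illustration. Nothing runs until you call the function.

```shell
#!/bin/sh
# Sketch: the installation steps above, collected into one function.
# install_flatpak is a hypothetical name chosen for this example.

install_flatpak() {
    # The && chain stops at the first step that fails,
    # so a broken repository add never leads to a half-finished install.
    sudo add-apt-repository ppa:alexlarsson/flatpak &&
    sudo apt update &&
    sudo apt install flatpak &&
    sudo apt install gnome-software-plugin-flatpak &&
    echo "Flatpak installed. Reboot to finish setup."
}
```

Save this to a file, source it in your terminal, and run install_flatpak. The reboot is left to you, so the script never restarts a machine unexpectedly.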

Usage

I’ll first show you how to install a Flatpak package from the command line, and then via the GUI. Let’s say you want to install the Spotify desktop client via Flatpak. To do this, you must first instruct Flatpak to retrieve the necessary app. The Spotify Flatpak (along with others) is hosted on Flathub. The first thing we’re going to do is add the Flathub remote repository with the following command:

sudo flatpak remote-add --if-not-exists flathub https://flathub.org/repo/flathub.flatpakrepo

Now you can install any Flatpak app found on Flathub. For example, to install Spotify, the command would be:

sudo flatpak install flathub com.spotify.Client

To find out the exact command for each install, you only have to visit the app’s page on Flathub and the installation command is listed beneath the description.

Running a Flatpak-installed app is a bit different from running a standard app (at least from the command line). Head back to the terminal window and issue the command:

flatpak run com.spotify.Client

Of course, after you’ve restarted your machine (following the installation of GNOME Software support), those apps should appear in your desktop menu, making it unnecessary to start them from the command line.
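The add-remote, install, and run commands above can be tied together in one helper. This is a minimal sketch, not an official Flatpak feature: run_flatpak_app is a hypothetical name, and the check relies on flatpak info returning a non-zero exit status when the app isn’t installed.

```shell
#!/bin/sh
# Sketch: start a Flatpak app, installing it from Flathub first if needed.
# run_flatpak_app is a hypothetical helper name, not a Flatpak command.

run_flatpak_app() {
    app_id="${1:-}"
    if [ -z "$app_id" ]; then
        echo "usage: run_flatpak_app APP_ID" >&2
        return 2
    fi

    # "flatpak info" fails when the app is not installed.
    if ! flatpak info "$app_id" >/dev/null 2>&1; then
        # Same two commands as in the walkthrough above.
        sudo flatpak remote-add --if-not-exists flathub \
            https://flathub.org/repo/flathub.flatpakrepo || return 1
        sudo flatpak install flathub "$app_id" || return 1
    fi

    flatpak run "$app_id"
}
```

With the function sourced, run_flatpak_app com.spotify.Client installs Spotify on first use and simply launches it afterward.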

To uninstall a Flatpak from the command line, you would go back to the terminal and issue the command:

sudo flatpak uninstall NAME

where NAME is the name of the app to remove. In our Spotify case, that would be:

sudo flatpak uninstall com.spotify.Client

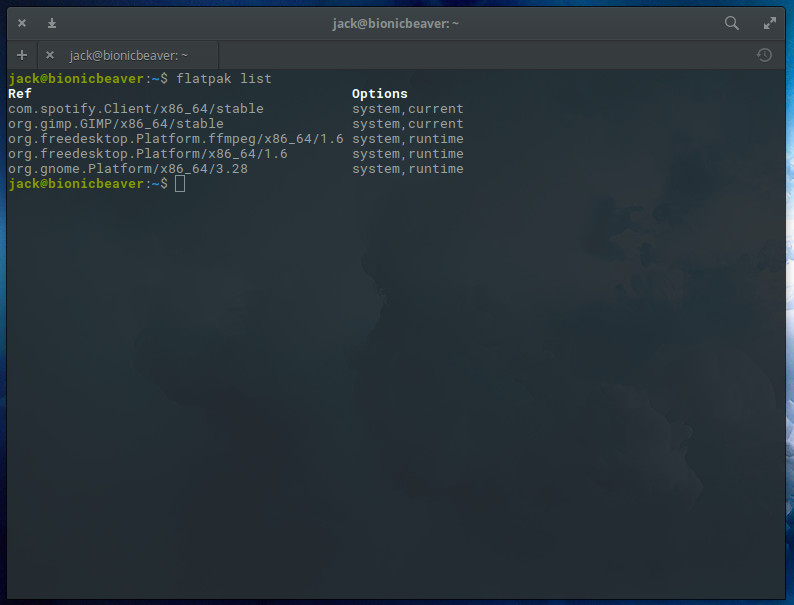

Now we want to update our Flatpak apps. To do this, first list all of your installed Flatpak apps by issuing the command:

flatpak list

Now that we have our list of apps (Figure 1), we can update with the command sudo flatpak update NAME (where NAME is the name of our app to update).

So if we want to update GIMP, we’d issue the command:

sudo flatpak update org.gimp.GIMP

If there are any updates to be applied, they’ll be taken care of. If there are no updates to be applied, nothing will be reported.
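If you have more than a couple of Flatpak apps, updating them one by one gets tedious. Here is a small sketch of a wrapper; update_flatpaks is a hypothetical name. It leans on the fact that flatpak update with no application named checks everything that is installed.

```shell
#!/bin/sh
# Sketch: update every installed Flatpak, or only the ones named.
# update_flatpaks is a hypothetical helper name.

update_flatpaks() {
    if [ "$#" -eq 0 ]; then
        # No application named: flatpak update checks everything installed.
        sudo flatpak update
        return
    fi

    for app_id in "$@"; do
        # Keep going if one app fails, but say which one it was.
        sudo flatpak update "$app_id" || echo "update failed: $app_id" >&2
    done
}
```

Call update_flatpaks on its own to update everything, or pass IDs taken from flatpak list, such as update_flatpaks org.gimp.GIMP com.spotify.Client.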

Installing from GNOME Software

Let’s make this even easier. Since we installed GNOME Software support for Flatpak, we don’t actually have to bother with the command line. Make no mistake, though: unlike Snaps, Flatpak apps won’t actually be listed within GNOME Software (even though we’ve installed Software support). Instead, you start the installation from the web browser.

Let me show you. Point your browser to Flathub.

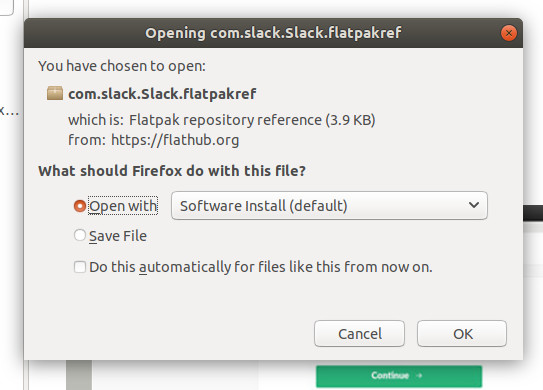

Let’s say you want to install Slack via Flatpak. Go to the Slack Flathub page and then click on the INSTALL button. Since we installed GNOME Software support, the standard browser dialog window will appear with an included option to open the file via Software Install (Figure 2).

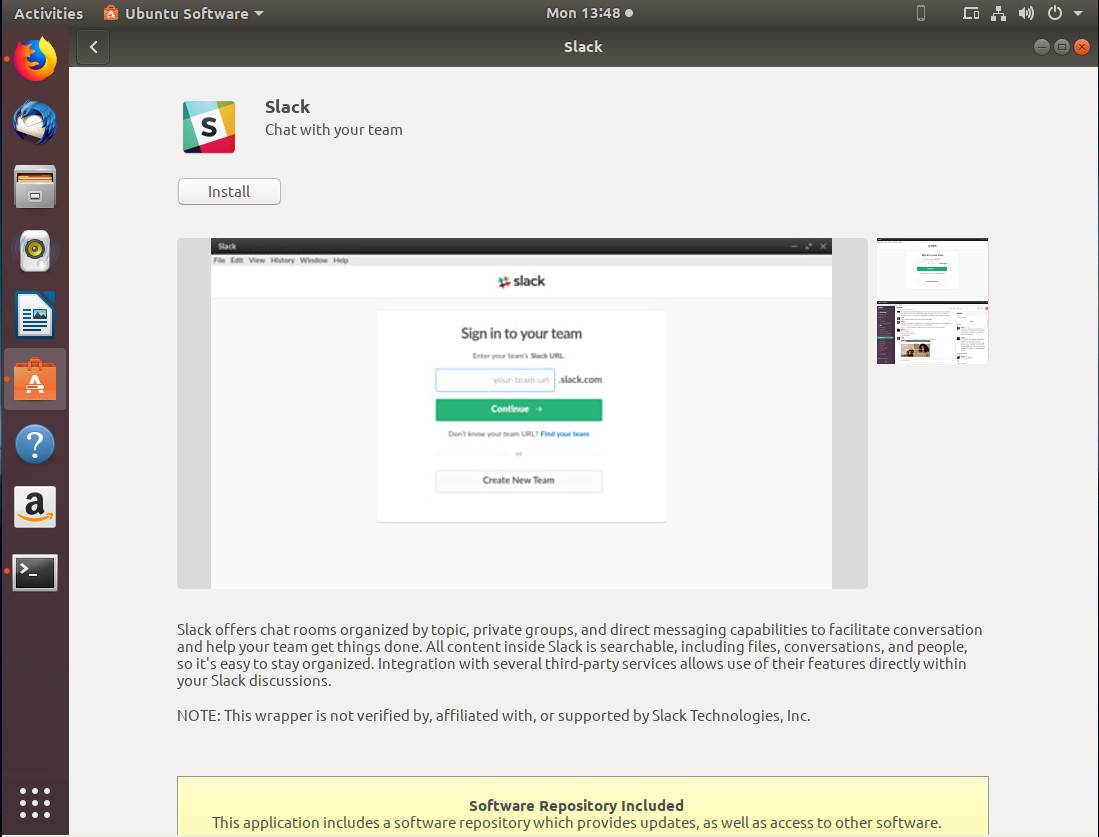

This action will then open GNOME Software (or, in the case of Ubuntu, Ubuntu Software), where you can click the Install button (Figure 3) to complete the process.

Once the installation completes, you can then either click the Launch button, or close GNOME Software and launch the application from the desktop menu (in the case of GNOME, the Dash).

After you’ve installed a Flatpak app via GNOME Software, it can also be removed from within GNOME Software (so there’s still no need to go through the command line).
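If you save the file from the browser dialog instead of opening it, you can still install it from the terminal. The sketch below assumes the saved file is a .flatpakref (the small reference file Flathub’s INSTALL button offers) and uses flatpak install with its --from option. The function name install_flatpakref and the example path are hypothetical.

```shell
#!/bin/sh
# Sketch: install an app from a downloaded .flatpakref file.
# install_flatpakref is a hypothetical helper name.

install_flatpakref() {
    ref_file="${1:-}"

    # Refuse anything that doesn't look like a Flatpak reference file.
    case "$ref_file" in
        *.flatpakref) ;;
        *)
            echo "expected a .flatpakref file, got: $ref_file" >&2
            return 2
            ;;
    esac

    if [ ! -f "$ref_file" ]; then
        echo "no such file: $ref_file" >&2
        return 1
    fi

    # --from tells flatpak that the argument is a .flatpakref file.
    sudo flatpak install --from "$ref_file"
}
```

For example, install_flatpakref ~/Downloads/com.slack.Slack.flatpakref, where the path is wherever your browser saved the file.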

What about KDE?

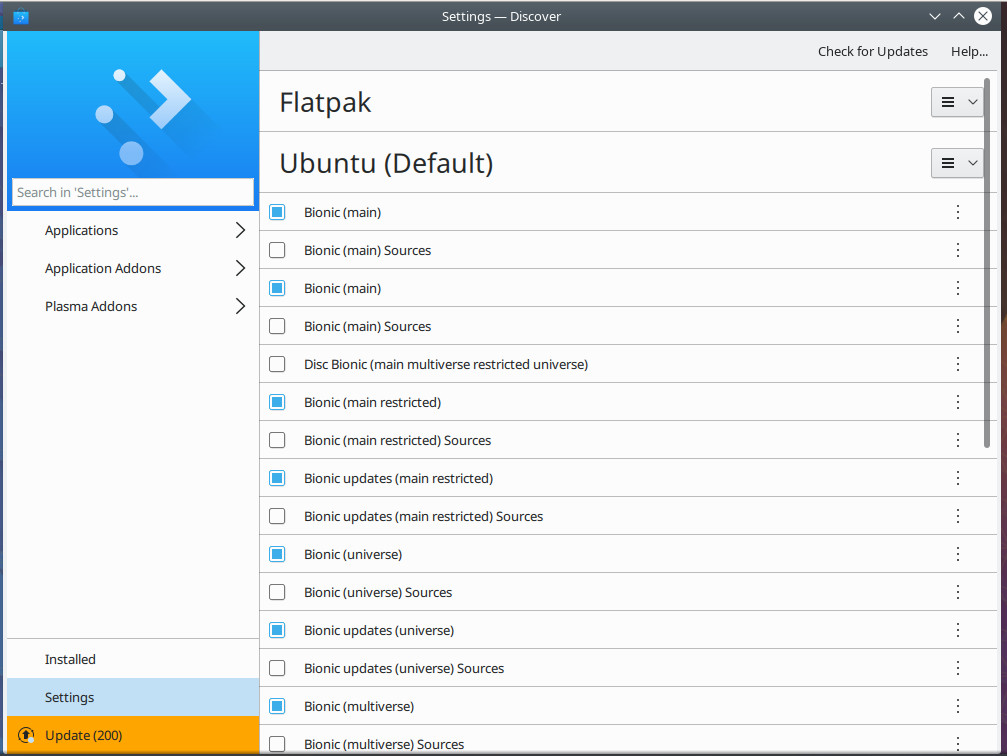

If you prefer using the KDE desktop environment, you’re in luck. If you issue the command sudo apt install plasma-discover-flatpak-backend, it’ll install Flatpak support for the KDE app store, Discover. Once you’ve added Flatpak support, you then need to add a repository. Open Discover and then click on Settings. In the settings window, you’ll now see a Flatpak listing (Figure 4).

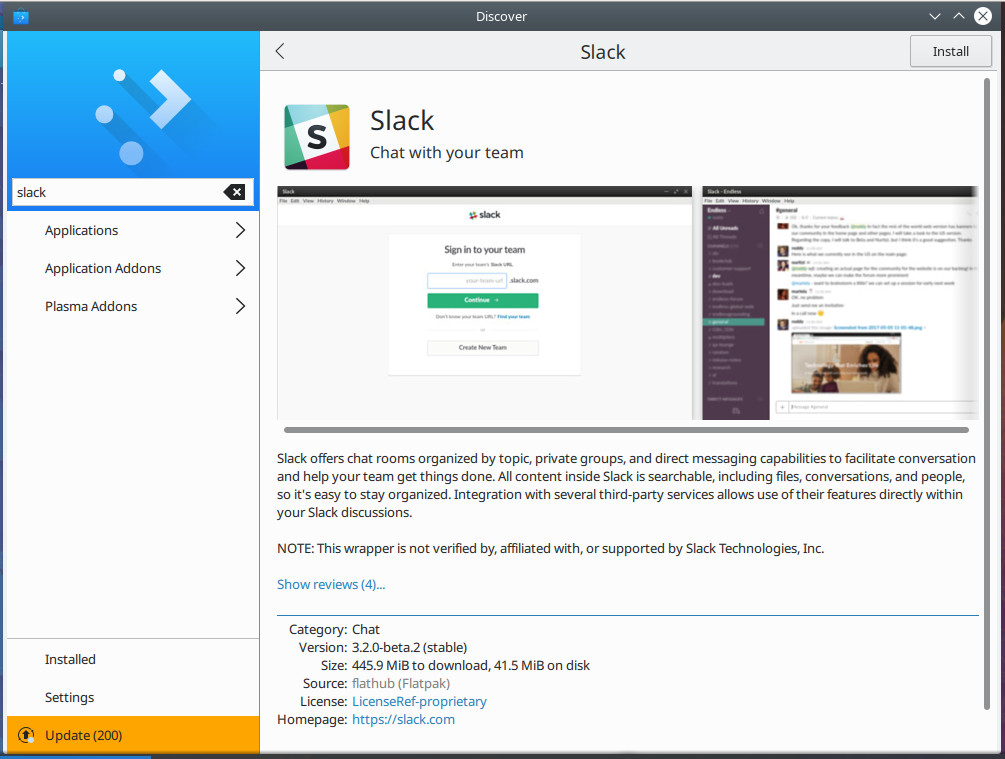

Click on the Flatpak drop-down and then click Add Flathub. Click on the Applications tab (in the left navigation) and you can then search for (and install) any applications found on Flathub (Figure 5).

Easy Flatpak management

And that’s the gist of using Flatpak. These universal packages can be used on most Linux distributions and can even be managed via the GUI on some desktop environments. I highly recommend you give Flatpak a try. With the combination of standard installation, Flatpak, and Snaps, you’ll find software management on Linux has become incredibly easy.
