The punchline: Microsoft just unveiled a mostly open source, embedded Arm SoC design with a custom Linux kernel.

The correct response?

1. Ha! Ha! Ha! Ha! You’re killing me!

2. Good one, dude, but April 1st was weeks ago.

3. Hallelujah! Linux and open source have finally beaten the evil empire. Can Apple be next?

4. We’re doomed! After Redmond gets its greedy hands on it, Linux will never be the same.

5. Smart strategic move — let’s see if they can manage not to screw it up like they did with Windows RT.

Microsoft’s Azure Sphere announcement was surprising on many levels. This crossover Cortex-A/Cortex-M SoC architecture for IoT offers silicon-level security, as well as an Azure Sphere OS based on a secure custom Linux kernel. There’s also a turnkey cloud service for secure device-to-device and device-to-cloud communication.

Azure Sphere is notable for being Microsoft’s first major Arm-based hardware since its failed Windows RT-based Surface tablets. It’s also one of its biggest hardware plays since the Xbox, which contributed some of its silicon security technology to Azure Sphere.

Azure Sphere is not only Microsoft’s first Linux-based product, but also one of its most open source. Precise details await the release of the first Azure Sphere products later this year, but Microsoft stated it is offering “royalty-free” licensing of its “silicon security technologies” to silicon partners. These include MediaTek, NXP, Nordic, Qualcomm, Silicon Labs, ST Micro, Toshiba, and Arm, which collaborated with Microsoft on the technology. Microsoft is not likely to build its own SoCs, but it has set itself up as an IP intermediary between Arm and the SoC vendors.

Considering how tightly the Azure Sphere architecture is intertwined with the silicon and OS security, the media has interpreted Microsoft’s licensing verbiage as indicating an essentially open source design. Because the technology is based on Arm IP, it’s not as open source as RISC-V technology, but it would likely be more open than most processors.

“Microsoft is putting Azure Sphere up against Amazon FreeRTOS, so I assume it will be pretty permissive open source licensing,” said Roy Murdock, an analyst at VDC Research Group’s IoT & Embedded Technology unit. “Microsoft has finally realized it doesn’t make sense to alienate potential embedded engineers. It realizes it can get more from licensing Azure cloud services than from OS revenues. It’s a smart move.”

Under Satya Nadella’s leadership, Microsoft has further experimented with open source technologies while offering a friendlier face toward the Linux community, especially in regard to Azure. Microsoft is a regular contributor to the Linux kernel and a member of the Linux Foundation. The bad old days of Steve Ballmer deriding Linux while warning about its threat to the tech industry seem long gone. Still, these have all been baby steps compared to Azure Sphere.

Azure Sphere is not an MCU

Despite all the surprises, Azure Sphere is not quite as revolutionary as Microsoft suggests. It’s billed as a major new cross-over microcontroller platform, but it’s really more like an application processor than an MCU.

“It’s not accurate to call it an MCU just because it has Cortex-M cores,” noted VDC’s Murdock. “It’s more like an SoC. But if you’re competing with Amazon FreeRTOS, it’s smart marketing.”

Based on the specs listed for the first Azure Sphere SoC, the MediaTek MT3620, which is due to ship in products by the end of the year, this is a relatively normal Cortex-A7 based SoC with dual Cortex-M4 MCUs backed up by exceptional end-to-end security. NXP has been making similar, hybrid Cortex-A/Cortex-M SoCs for years, including its Cortex-A7 based i.MX7 and -A53-based i.MX8. Others such as Renesas and Marvell have also paired the low-power, Linux-oriented Cortex-A7 with Cortex-M MCUs on various SoCs.

Microsoft hints that other SoC vendors may choose different combinations of Cortex-A and -M cores. One interesting choice for IoT is a single-core implementation of Cortex-A53, such as the one used by NXP’s LS1012A SoC. Another possibility is the low-power Cortex-A35.

Security blanket

What makes Azure Sphere potentially attractive to chipmakers beyond the royalty-free licensing and Microsoft’s robust market presence is the multi-layered security, which is desperately needed at the vulnerable IoT edge. In addition to providing a 500MHz Cortex-A7 core and dual Cortex-M4F MCUs for real-time processing, the flagship MT3620 SoC has a third Cortex-M4F core that handles secure boot and system operation within an isolated subsystem. There’s also a separate Andes N9 RISC core that supports an isolated WiFi subsystem.
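For readers who think in code, the MT3620 core layout described above can be sketched as a small Python data model. This is only an illustration of the article’s description, not anything from Microsoft or MediaTek: the `Core` class, the `isolated` flag, and the `isolated_cores` helper are all hypothetical names invented for this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Core:
    """One processor core on the SoC (hypothetical model, not a vendor API)."""
    name: str
    role: str
    isolated: bool  # True if the article places the core in an isolated subsystem


# Core list taken from the description of the MT3620 in the text above.
MT3620_CORES = (
    Core("Cortex-A7", "application core, 500MHz", isolated=False),
    Core("Cortex-M4F", "real-time processing", isolated=False),
    Core("Cortex-M4F", "real-time processing", isolated=False),
    Core("Cortex-M4F", "secure boot and system operation", isolated=True),
    Core("Andes N9", "WiFi subsystem", isolated=True),
)


def isolated_cores(cores):
    """Return the cores that sit in an isolated subsystem."""
    return [core for core in cores if core.isolated]


if __name__ == "__main__":
    for core in MT3620_CORES:
        tag = "isolated" if core.isolated else "general"
        print(f"{core.name:<11} {tag:<9} {core.role}")
```

The point of the sketch is simply that two of the five cores are walled off from application code, which is what distinguishes this design from a typical Cortex-A/Cortex-M hybrid.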

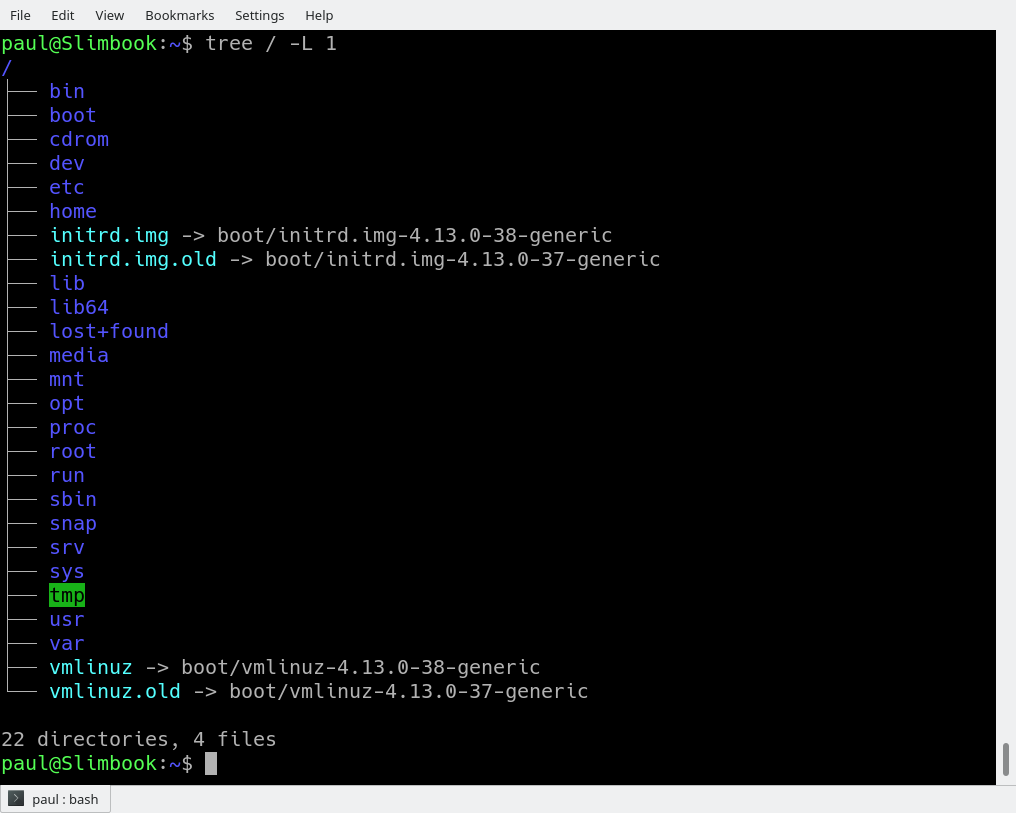

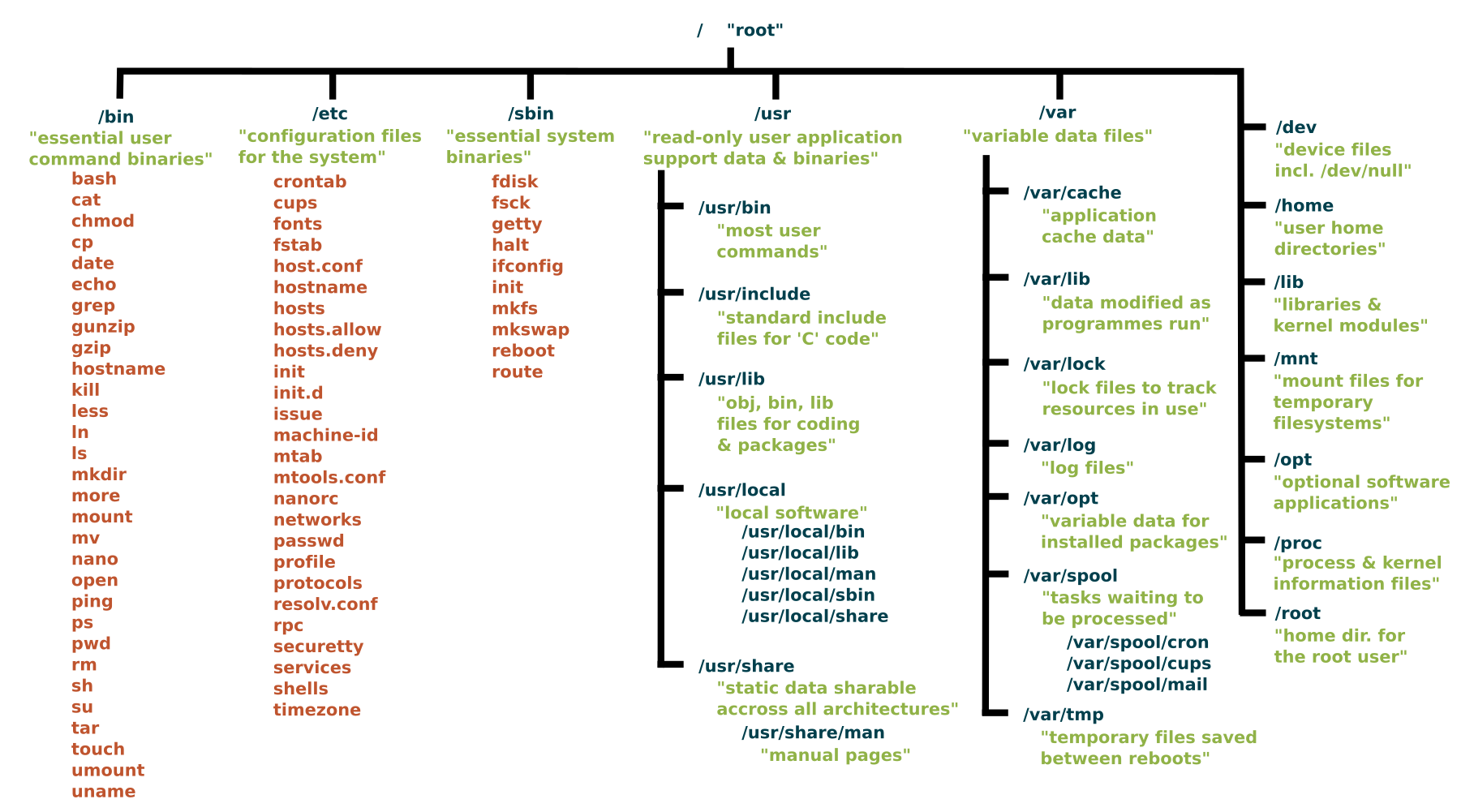

The Linux-based Azure Sphere OS features a Microsoft Pluton Security Subsystem that works closely with the hardware security subsystem. It “creates a hardware root of trust, stores private keys, and executes complex cryptographic operations,” says Microsoft. Underlying the kernel layer is a security monitor layer and at the top is a container layer for application-level security.

The third major security component lies in the cloud. The Azure Sphere Security Service is a cloud-based turnkey platform that brokers trust for device-to-device and device-to-cloud links via certificate-based authentication. The service detects “emerging security threats across the entire Azure Sphere ecosystem through online failure reporting, and renewing security through software updates,” says Microsoft.

Microsoft would love you to connect Azure Sphere Security Service with Azure Cloud and its Azure IoT Suite. To its credit, however, it is also supporting other major cloud services like Amazon AWS and Google Cloud. In this way, it may be more open than Amazon’s AWS IoT ecosystem with the related, Linux-oriented AWS Greengrass platform for edge devices, which also offers end-to-end security. Amazon FreeRTOS, which was announced in December along with a major investment in the open source FreeRTOS project, expands upon FreeRTOS with libraries that add AWS and AWS Greengrass support for secure cloud-based or local processing and connectivity.

VDC’s Murdock speculates that most Azure Sphere customers will stick with Azure. “We can definitely expect tight integration between Azure Sphere and Azure IoT Suite,” he said. “Microsoft will offer developers a one-click option to turn their data telemetry over to Azure and get security updates. You will be able to connect to other cloud platforms, but it will be complicated. Microsoft is relying on security as a hook, which is smart.”

Microsoft’s goal is not only to push more customers to Azure, but also to harvest the vast amount of information available from millions of edge devices. “Azure Sphere will let Microsoft look at more interesting data and do predictive maintenance,” said Murdock.

Unlike AWS and most other IoT ecosystems that trumpet end-to-end security, Azure Sphere has the benefit of embedding the security at the chip level in addition to OS and cloud. Of course, this is also a limitation because you need a compliant chip to benefit from the security umbrella. This may be one reason Samsung’s Artik platform, which in October was expanded with more security-enhanced Secure Artik models, has yet to set the world on fire.

Indeed, Artik may be the closest analogue to Azure Sphere in that security is baked into a variety of Artik modules and their dedicated Arm chips, and the same security framework also extends to the Artik Cloud. Samsung doesn’t use hybrid SoCs, but it offers a variety of Linux-ready Cortex-A modules and Cortex-M based MCU modules that are intended to work together.

Why not Windows Embedded or IoT Core?

Shortly before the Azure Sphere announcement, VDC Research released an insightful brief called “A Call to Revisit Windows Embedded.” The report recommended reinvigorating, opening up, and perhaps fully open sourcing the neglected but still widely used Windows Embedded platform. In this way, Microsoft could both establish a foothold in IoT and compete with Amazon FreeRTOS, which VDC sees as a potentially huge play in the MCU world.

Microsoft has instead focused on Windows 10 IoT Core, which competes with Linux on higher powered Arm SoCs and Intel Atom processors. Yet even this minimalist Windows variant is not able to squeeze onto low-end IoT node devices with limited memory and power where Linux and Windows Embedded are still viable.

Presumably, Microsoft decided it would take too much time and effort to update Windows Embedded, especially when IoT developers would prefer to work with Linux anyway. Microsoft can still make money by selling Windows Embedded to legacy customers while advancing into the future with Linux.

Another approach would have been to mimic Amazon and fully embrace the RTOS and MCU world below that level. Like FreeRTOS, a new breed of open source RTOSes, such as Arm Mbed and the Intel-backed Zephyr, is offering more Linux-like features for steadily improving, wirelessly connected Cortex-M and -R SoCs. Yet perhaps Microsoft envisioned that as endpoint IoT devices offer more Internet connectivity, multimedia, and AI processing, low-end Cortex-A cores will be increasingly essential. That road leads to Linux.

Despite Microsoft’s embrace of Linux, Microsoft Chief Legal Officer Brad Smith couldn’t resist a backhanded compliment during the announcement. He chose to use the example of a toy from among the many potential targets for Azure Sphere, ranging from industrial gear to consumer appliances to smart city infrastructure.

“Of course, we are a Windows company, but what we’ve recognized is the best solution for a computer of this size in a toy is not a full-blown version of Windows,” said Smith at the Azure Sphere announcement, as quoted by Redmond. “It is what we are creating here. It is a custom Linux kernel, complemented by the kinds of advances that we have created in Windows itself.”
