Despite its widespread and growing adoption, the Yocto Project is one of the more misunderstood Linux technologies. It’s not a distribution but rather a collection of open source templates, tools, and methods for creating custom embedded Linux-based systems. Yocto Project contributor and Intel Embedded Software Engineer Stephano Cetola explained more about Yocto in his talk at the recent Embedded Linux Conference in Portland.

Although embedded hardware vendors often list “Yocto” along with Ubuntu, Fedora, and the like, one Yocto Project build is often markedly different from another. Embedded developers who once constructed their own DIY Linux stacks from scratch to provide stripped-down stacks optimized for power savings and specific technologies now typically use Yocto. In the process, they save countless hours of debugging and testing.

“Yocto is a line drawn in the sand — we’re picking certain versions of software, drawing a line and testing them,” Cetola said. “Every day we test four different architectures and build on six different distros checking for compatibility and performance. We can provide you with BeagleBone SDKs and images, and there’s a bug tracker too.”

Most of this was old news to the attendees at Cetola’s talk, “Real-World Yocto: Getting the Most out of Your Build System.” Yet, there’s a lot about Yocto that even experienced developers don’t know, said Cetola. His talk covered a variety of Yocto tips, with a focus on using the BitBake build engine.

Cetola highlighted some of his favorite best practices, utilities, scripts, and commands, including wic (OpenEmbedded Image Creator), shared state cache, and package feeds. (You can watch the entire presentation below.)

Layering up

The Yocto Project is built around the concept of layers, which developers often find difficult to grasp and therefore tend to ignore. “People tend to lump everything into one giant layer because it’s a relatively quick way to get your build started,” said Cetola. “But if you put all your distro information, hardware requirements, and software into a single layer, you’ll be kicking yourself later.”

The biggest drawback is the difficulty in updating hardware and software. “When your boss comes in and drops a web kiosk project on your desk and asks if this will work on your layer scheme, the only way you can answer yes is if you have separated out the layers.”

Having a separate distro layer, for example, makes it easier to support both a frame buffer and an X11 layer, said Cetola. “If your hardware uses different architectures or you don’t want your different hardware mingling together you can separate them into layers to help you distribute these internally,” he added. “In software, you may have Python living with C, and if they have nothing to do with each other, separating them means you can ship the manufacturer or QA team only what they need.”

Wic’ing your way to bootable image formats

Yocto Project developers often struggle when integrating a vendor layer into a bootable image, said Cetola. “In the past it’s been hard to add multiple partitions or try to do a layered architecture where you’re layering read-only and read-write on top of each other.”

A new tool called wic (OpenEmbedded Image Creator) can help out. Wic “reads from a kickstart WKS file that allows you to generate custom partitions and media that you can burn to,” explained Cetola. “For example, your manufacturer may expect to get an SD card, but you may also want to boot from NAND or NOR. Wic lets you cleanly separate these concepts, and then reuse them.”

Wic lets you copy files into or remove files from a Wic image, as well as ls a directory inside an image for greater introspection. Wic also supports bmap-tools, “which is an order of magnitude faster than using dd,” said Cetola. “Bmap-tools realizes that you’re going to copy useless data, so it skips that by doing a sparse copy. Once you use bmap you’ll never use dd again.”
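The sparse-copy idea Cetola describes can be illustrated with a small Python sketch. This is a toy in-memory model, not how bmap-tools is implemented: the 4,096-byte block size and the function names `build_block_map` and `sparse_copy` are assumptions made for illustration. It records which blocks of an image hold data and copies only those, leaving the all-zero blocks untouched.

```python
BLOCK_SIZE = 4096  # assumed block size for this toy example


def build_block_map(image, block_size=BLOCK_SIZE):
    """Return inclusive (first, last) ranges of blocks that contain non-zero data."""
    ranges = []
    start = None
    total_blocks = (len(image) + block_size - 1) // block_size
    for index in range(total_blocks):
        block = image[index * block_size:(index + 1) * block_size]
        has_data = any(block)  # True if at least one byte is non-zero
        if has_data and start is None:
            start = index  # a run of mapped blocks begins here
        elif not has_data and start is not None:
            ranges.append((start, index - 1))  # the run ended on the previous block
            start = None
    if start is not None:
        ranges.append((start, total_blocks - 1))
    return ranges


def sparse_copy(image, target, block_map, block_size=BLOCK_SIZE):
    """Copy only the mapped blocks into target; return the number of bytes written."""
    written = 0
    for first, last in block_map:
        begin = first * block_size
        end = min((last + 1) * block_size, len(image))
        target[begin:end] = image[begin:end]
        written += end - begin
    return written
```

For an image that is mostly empty space, the number of bytes written is a small fraction of the image size, which is where the speedup over a plain block-for-block copy comes from.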

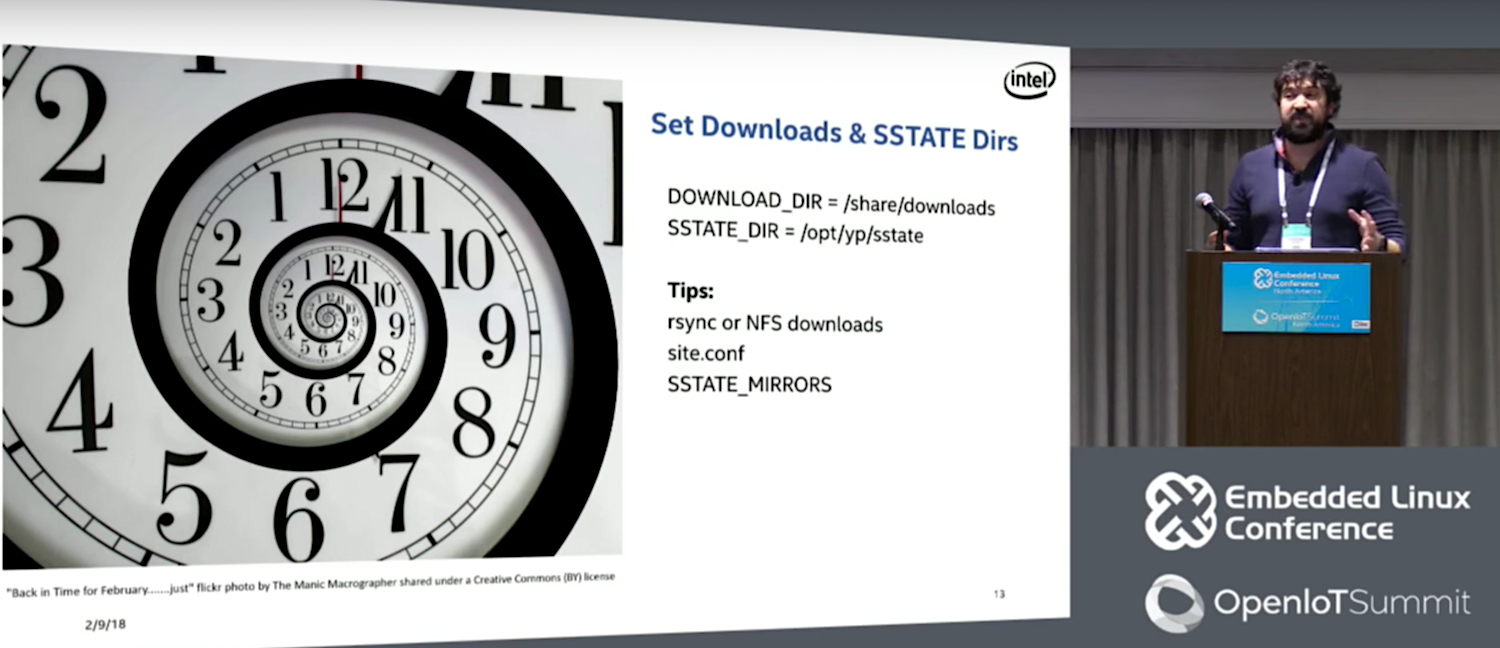

SSTATE is your friend

One of the blessings (in flexibility and code purity) and curses (drudgery) of Yocto development is that everything is built from scratch. That means it must be rebuilt from scratch over and over again. Fortunately, the platform offers a workaround in the form of shared state cache (SSTATE), which is sort of a snapshot of an unaltered Yocto recipe deployed as a set of packaged data generating a cache. BitBake checks SSTATE to see whether a recipe’s existing output can be reused instead of rebuilt, thereby saving time.
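As a rough mental model of that check, the sketch below hashes a task’s inputs into a key and reuses a stored result when the key has been seen before. It is a simplified illustration only, not BitBake’s actual signature or cache format; the class `ToyStateCache`, the function `task_signature`, and the input fields are all hypothetical.

```python
import hashlib
import json


def task_signature(inputs):
    """Hash a task's inputs (hypothetical fields) into a stable cache key."""
    canonical = json.dumps(inputs, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


class ToyStateCache:
    """Toy cache: rebuild only when the input signature has not been seen."""

    def __init__(self):
        self._store = {}
        self.builds = 0  # counts how many real builds were needed

    def run(self, inputs, build):
        key = task_signature(inputs)
        if key in self._store:
            return self._store[key]  # cache hit: reuse the earlier output
        self.builds += 1
        result = build(inputs)  # cache miss: do the expensive work
        self._store[key] = result
        return result
```

Running the same inputs twice triggers one build, while changing any input produces a new key and forces a rebuild.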

“One of the biggest complaints about Yocto is that it takes a long time to build, and it does — Buildroot wins on speed every time,” says Cetola. “SSTATE cache, which is meant to be used circumstantially, can speed the process up, but a lot of people don’t take advantage of it.” Cetola said there were numerous examples in which building from scratch “isn’t ideal,” for example when a team is doing a build on underpowered laptops.

Cetola recommended using site.conf, which he described as “a configuration file that BitBake looks for when it starts up inside the conf directory.” He continued: “I have a script that starts my build directory and copies in my site.conf, which sets the download and SSTATE directories.”

Cetola also suggested that developers make greater use of the related SSTATE mirror technology, which he said is “handy for sharing with other machines.” These can also be managed by site.conf. “People think they won’t benefit from SSTATE mirrors, but it’s extremely useful.”
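A setup script along the lines Cetola describes might look something like the following Python sketch. The talk does not show his script, so the function names, directory paths, and mirror URL here are assumptions; `DL_DIR`, `SSTATE_DIR`, and `SSTATE_MIRRORS` are the standard variables for the download directory, the shared state directory, and shared state mirrors.

```python
from pathlib import Path


def render_site_conf(dl_dir, sstate_dir, mirror_url=""):
    """Build the text of a site.conf that sets shared download and SSTATE paths."""
    lines = [
        f'DL_DIR = "{dl_dir}"',
        f'SSTATE_DIR = "{sstate_dir}"',
    ]
    if mirror_url:
        # PATH is a literal placeholder that BitBake substitutes at fetch time.
        lines.append(
            f'SSTATE_MIRRORS ?= "file://.* {mirror_url}/PATH;downloadfilename=PATH"'
        )
    return "\n".join(lines) + "\n"


def install_site_conf(build_dir, content):
    """Write site.conf into <build_dir>/conf, creating the directory if needed."""
    conf_dir = Path(build_dir) / "conf"
    conf_dir.mkdir(parents=True, exist_ok=True)
    target = conf_dir / "site.conf"
    target.write_text(content)
    return target
```

Calling `install_site_conf("build", render_site_conf("/srv/yocto/downloads", "/srv/yocto/sstate-cache"))` would point a fresh build directory at shared download and SSTATE locations; both paths are placeholders.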

Package feeds

Another way to accelerate the build process is to use package feeds, which “can not only save an immense amount of time during your personal development, but also for anybody who needs to quickly install the software,” said Cetola. He described a scenario in which “you use dd to burn something to a card, and you load it on a board and it doesn’t work. So you change your software, boot it again, burn it, load it, and it’s missing a library.”

The missing library is “probably sitting in an rpm folder,” said Cetola. “Yocto can run a BitBake package index, which indexes the folder so rpm can look for it, so you essentially have a repo. By creating a package feed, and sharing that folder on a webserver, and running BitBake package index, you’ve saved yourself the trouble of pulling the SD card and copying something onto it. Instead you just say ‘rpm install’.”

Yocto grows introspective

Sometimes the problem is not a missing file, but rather an unexpected one. “Customers always say to me: ‘I just built my file system and booted my board, and there’s a file there — where did it come from and why is it there?’”

To figure out what the hell is going on (a process known as introspection), Cetola starts with oe-pkgdata-util to find the path. “It should output the name of the recipe that caused that file to populate on the board,” he explained. “You can point it at any file and it will do its best to introspect the file and figure it out.” If that’s not enough, he turns to git grep, as well as DNF, “which gives you a lot of introspection onto what’s on your board and why.”

Weird files show up from time to time because “when we do a build and run a file system a lot of stuff is done dynamically,” explained Cetola. In this case, the above tools aren’t likely to help. For example, “inside the guts of Yocto there are rootfs post process commands that can slip something on to the board. For that I use IRC. One of the things I love about the Yocto Project is that it’s very friendly. The IRC channel is a great place to ask questions. People respond.”

Other tools include the “recipetool” and its appendfile sub-command, which “will generate the recipe for you” if you need to change a file, said Cetola. Developers can also use a dependency tree, which can be generated within BitBake using the -g option. “Yocto 2.5 will have an oe-depends-dot tool, which will save you from having to look at that gigantic dependency tree by letting you introspect specific parts.”

Cetola is surprised that developers don’t make greater use of BitBake options. “Whenever I go out to lunch and am running a substantial build, I use the -k option, which keeps the build from stopping when it encounters an error.” Other options include -e, which outputs the BitBake environment, and -C, which invalidates the stamp for a specific task so that the task runs again (a targeted clean).

BitBake scripts

Cetola also gave a shout out to some of his favorite BitBake scripts, starting with devtool, which the Yocto Project wiki describes as a way “to ‘mix’ customization into” a Yocto image. “If you’re not a full-time kernel developer but you need to do some edits on the kernel, devtool can be a lifesaver,” said Cetola. “Using `devtool modify` and `devtool build` you can modify and build the kernel without building an SDK or rolling your own cross compilation environment. It’s also a handy tool for generating recipes. Once you’ve finished making a small edit to the kernel, `devtool finish` can make the patch for you.”

Another useful script is bitbake-layers, “which is great when you’re building layers or searching for them.” Cetola also recommended bitbake-dumpsig and bitbake-diffsigs. “Say you changed one thing in your recipe and BitBake recompiled 25 things,” he said. “What happened was that the change caused different SSTATE hashes to invalidate. To find out why, you can use bitbake-dumpsig/diffsigs. Dumpsig will dump all the information (stored in the ‘stamps’ directory) into a format where you can see the things it is basing its hash on, and then use the diff tool to compare them to work out whether there was a dependency change.”
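The comparison step can be pictured with a short Python sketch. It does not read BitBake’s real signature files; it assumes the inputs behind two hashes have already been dumped into plain dictionaries, and the function name `diff_signature_inputs` and the sample keys are hypothetical.

```python
def diff_signature_inputs(before, after):
    """Report which hash inputs were added, removed, or changed between two dumps."""
    before_keys = set(before)
    after_keys = set(after)
    return {
        "added": sorted(after_keys - before_keys),
        "removed": sorted(before_keys - after_keys),
        "changed": sorted(
            key for key in before_keys & after_keys if before[key] != after[key]
        ),
    }
```

Given two dumps that differ only in a compiler flag, the result lists that one variable under `changed`, which is the kind of answer you want when a small edit triggers a large rebuild.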

Cetola concluded with a call for community involvement. “If you’re brave enough to look at Bugzilla please do, but if that’s a bit much, just find a part of the system you’re interested in working on and send us an email — we’re always willing to take contributions and willing to help.”
