Windows 10 accounts for roughly 42 percent of market share, according to the latest figures from StatCounter. It’s also the most commonly used platform among developers, followed by Linux. That’s a huge market.

On the other side of the spectrum are data centers, servers, and private and public clouds, which are dominated by Linux. In fact, more than 50 percent of Microsoft Azure machines run Linux. Windows dominates the developer desktop, while Linux dominates the cloud and the data center: two different worlds. Now imagine the life of a developer who uses a Windows 10 machine to manage Linux workloads in the cloud.

In the past, these developers, especially web developers working on Linux servers, had a few choices: run a Linux distro inside a VM on their Windows 10 machines, or use workarounds like Cygwin. Both approaches had limitations, and neither was ideal. They also created extra headaches for the IT teams that manage developer systems across organizations.

Microsoft needed a native solution and turned to Linux vendor Canonical to bring Linux command-line capabilities to Windows 10. In 2016, Microsoft announced a beta project called Bash on Windows, which aimed to bring Ubuntu’s Bash to Windows. The project let developers run many Linux command-line tools on Windows. By the end of 2017, the project was out of beta and had been renamed Windows Subsystem for Linux (WSL).

Tara Raj of Microsoft explained the reason for the name change, saying that WSL referred to “the Microsoft-side of the technology stack, including the kernel and Windows tools that enable Linux binaries to run on Windows. The distros will simply be known by their own names – Ubuntu, openSUSE, SUSE Linux Enterprise Server, etc.”

With the arrival of the Windows 10 Fall Creators Update (FCU), Microsoft brought Linux command-line tools and utilities to the mass market. Anyone running Windows 10 with the FCU or later can now enable WSL on their system.

“I think it will actually increase the reach of Linux as now those users who would have never installed Linux will be able to use these tools. Windows has a much larger market share than Linux and these users will now have access to Linux tools,” said openSUSE Board Chairman Richard Brown in an interview. Ubuntu founder and Canonical CEO Mark Shuttleworth expressed similar sentiments, saying that WSL will expose Linux to an even wider audience.

Who is the audience?

Anyone who needs Linux tools and utilities on Windows is WSL’s target audience. The primary audience, however, is web developers and IT professionals (sysadmins) who run Linux on their production systems.

“This is primarily a tool for developers — especially web developers and those who work on or with open source projects. This allows those who want/need to use Bash, common Linux tools (sed, awk, etc.) and many Linux-first tools (Ruby, Python, etc.) to use their toolchain on Windows,” according to a community-maintained FAQ from Microsoft.

Many tools and libraries are Linux-first: some are available only on Linux, and others have been ported to Windows 10 but lack some features and functionality. WSL lets you use languages like Ruby or Node.js natively, with their Linux toolchains, without making those compromises.

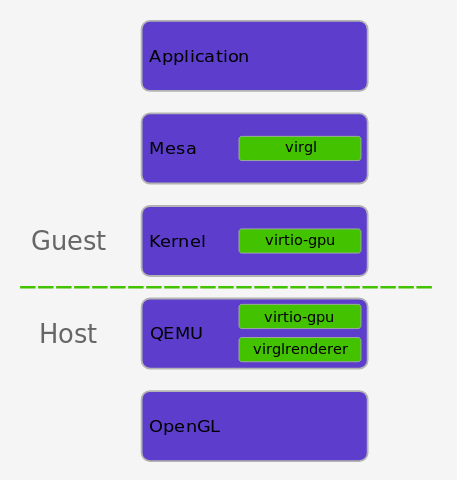

To be clear, WSL doesn’t use the Linux kernel; Linux binaries still run on the Windows kernel. You can think of it as a command-line Linux distro without the Linux kernel.
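
You can see this from inside a WSL distro: tools that read the kernel version (from /proc/version, or via uname) get a Microsoft-branded string rather than the one a stock Linux kernel would report. The Python sketch below uses that as a heuristic for detecting WSL. The function names are hypothetical, and matching on “microsoft” is a widely used convention, not a documented API.

```python
from pathlib import Path


def looks_like_wsl(version_text: str) -> bool:
    """Heuristic: WSL reports a Microsoft-branded kernel version string."""
    return "microsoft" in version_text.lower()


def running_under_wsl(proc_version: Path = Path("/proc/version")) -> bool:
    """Read the kernel version file and apply the heuristic above."""
    try:
        return looks_like_wsl(proc_version.read_text())
    except OSError:
        # No /proc/version (e.g. native Windows or macOS): assume not WSL.
        return False


if __name__ == "__main__":
    print("Running under WSL:", running_under_wsl())
```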

Multiple distributions

With the Fall Creators Update, Microsoft made it easier to run multiple Linux distros inside Windows 10. All you need to do is enable WSL through the “Turn Windows features on or off” dialog, then install any supported distribution from the Windows Store. You can run multiple distributions simultaneously, isolated from each other.

Why are all these distributions needed? Each Linux distribution has its own set of commands and utilities; you can’t run CentOS- or openSUSE-specific commands on Ubuntu. If you run any of these distributions in the cloud, having the same distribution on your Windows machine lets you use the same native commands in both places.
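
Package management is a familiar example of distribution-specific commands: Ubuntu uses apt, openSUSE and SLES use zypper, and CentOS uses yum. The Python sketch below reads the ID field from /etc/os-release, a standard file on these distributions, and picks the matching tool. The mapping table and function names are hypothetical and deliberately incomplete.

```python
from typing import Dict, Optional

# Hypothetical, deliberately partial mapping from the os-release ID field
# to the base package-management command each distribution ships with.
PACKAGE_MANAGERS = {
    "ubuntu": "apt",
    "debian": "apt",
    "opensuse": "zypper",
    "opensuse-leap": "zypper",
    "sles": "zypper",
    "centos": "yum",
}


def parse_os_release(text: str) -> Dict[str, str]:
    """Parse KEY=value lines from /etc/os-release, skipping comments and blanks."""
    fields: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Values may be quoted, e.g. ID="opensuse-leap".
        fields[key.strip()] = value.strip().strip("\"'")
    return fields


def package_manager_for(os_release_text: str) -> Optional[str]:
    """Return the package manager for the distro, or None if it is not in the table."""
    distro_id = parse_os_release(os_release_text).get("ID", "").lower()
    return PACKAGE_MANAGERS.get(distro_id)
```

Inside each installed distribution, you would pass it the contents of that distribution’s own /etc/os-release; the same script then answers differently under Ubuntu and openSUSE.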

Microsoft continues to add capabilities to WSL. You can now even mount drives and run commands like rsync locally, so you can manage your local systems with the same great Linux command-line tools.
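
By default, WSL mounts Windows drives under /mnt, with C: at /mnt/c, and recent WSL builds include a wslpath utility for translating paths between the two worlds. The Python sketch below is a hypothetical re-implementation of the common case only, assuming the default /mnt mount root; it is not wslpath itself and ignores UNC paths and custom mount settings.

```python
import re
from pathlib import PurePosixPath, PureWindowsPath


def windows_to_wsl_path(win_path: str, mount_root: str = "/mnt") -> str:
    """Map an absolute drive-letter path such as C:\\Users\\x to /mnt/c/Users/x."""
    p = PureWindowsPath(win_path)
    match = re.fullmatch(r"([A-Za-z]):", p.drive)
    if not match or not p.root:
        # UNC shares and drive-relative paths (e.g. "C:foo") are out of scope.
        raise ValueError(f"expected an absolute drive-letter path, got {win_path!r}")
    # parts[0] is the anchor ("C:\\"); keep the remaining components as-is.
    return str(PurePosixPath(mount_root, match.group(1).lower(), *p.parts[1:]))
```

Under that assumption, backing up a Linux-side project to a second Windows drive could then be a single command such as `rsync -av ~/project/ /mnt/d/backups/project/`.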

Does that mean you can also run Linux desktop apps on Windows 10? In theory, yes: there are workarounds that let you run GUI Linux apps in Windows 10 via WSL, but that’s not the intended goal of the project. The primary goal is to let developers run the command-line tools they need.

If you develop for both Windows and Linux, you no longer have to resort to virtual machines, Cygwin, or dual-booting; you get the best of both worlds. PowerShell and Bash can be used at the same time, on the same machine. If you manage Linux machines in the Azure cloud, WSL is a huge improvement. Microsoft has succeeded in building a fine bridge between the two platforms and creating a level playing field for developers. It’s a win-win situation.

In the next article in this series, I will show how to get started with WSL on Windows 10 systems.