There are times when, despite your best efforts, you have little choice but to put a quick workaround in place. Reconfiguring network-border firewalls or moving services between machines is simply not an option because the network’s topology is long established and definitely shouldn’t be messed about with.

Picture the scene. You’ve lost an inbound mail server because of an unusual application issue that will probably take more than the few minutes you have to fix. In their wisdom, the architects of your mail infrastructure didn’t separate the web-based interface from the backend daemons that listen for incoming email. As a result, both services reside on the same server as the failed IMAP (Internet Message Access Protocol) server, which gives your many temperamental users access to their inbound mail.

This leaves you in a tricky position. Fundamentally, you need both services up and available. Thankfully, there’s a cold-swap IMAP server with up-to-date user configuration available, but sadly you can’t move the IP address from the email web interface over to that box without breaking the interface’s connectivity with other services.

To save the day, you ultimately rely on a smattering of lateral thinking. After all, it’s only a TCP port receiving the inbound email, and luckily the web interface can refer to other servers so that users can access their emails. Step forward the excellent “redir” daemon.

This clever little daemon can listen for inbound traffic on a particular port on a host and then forward that traffic onwards somewhere else. I should warn you in advance that it might struggle with some forms of encryption which require certificates to be presented to it, but otherwise I’ve had some excellent results from the redir utility. In this article, I’ll look at how redirecting traffic might be able to help you out of a tight spot and examine some possible alternatives to the minuscule redir utility.

Installation

You probably won’t be entirely surprised to read that it’s as easy as running this command on Debian derivatives:

# apt-get install redir

On Red Hat derivatives, you will likely need to download it from here: http://pkgs.repoforge.org/redir/

Then you simply run “rpm -i <version>”, where “<version>” is the package file you downloaded. For example, you could do something like this:

# wget http://pkgs.repoforge.org/redir/redir-2.2.1-1.2.el6.rf.x86_64.rpm

# rpm -i redir-2.2.1-1.2.el6.rf.x86_64.rpm

Now that we have a working binary, let’s look at how the useful redir utility works; thankfully it’s very straightforward indeed. Let’s begin by considering the non-encrypted version of IMAP (simply because I don’t want to promise too much with services encrypted by SSL or TLS).

First, consider the inbound email server listening on TCP port 143 and what would be needed should you wish to forward traffic from that port to another IP address. This is how you could achieve that with the excellent redir utility:

# redir --laddr=10.10.10.1 --lport=143 --caddr=10.10.10.2 --cport=143

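In that example, our broken IMAP server (which has the IP address “10.10.10.1”, set with “--laddr=”) has its local port 143 (set with “--lport=”) forwarded to our backup IMAP server (with the IP address “10.10.10.2”, set with “--caddr=”) on the same TCP port number (set with “--cport=”). Bear in mind that redir can only bind to port 143 once the failed IMAP daemon has stopped listening on it.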
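To make the mechanism concrete, here’s a minimal Python sketch of what a redirector like redir does conceptually: listen on a local address and port, open a fresh connection to the target for each client, and copy bytes blindly in both directions. It’s an illustration under my own assumptions, not redir’s source; the function names open_listener and relay_forever are hypothetical, and none of redir’s extra options are modelled.

```python
# Illustrative sketch only: NOT redir's implementation. Names are hypothetical.
import socket
import threading


def _pipe(src: socket.socket, dst: socket.socket) -> None:
    """Copy bytes from src to dst until src reaches EOF, then half-close dst."""
    try:
        while True:
            data = src.recv(4096)
            if not data:
                break
            dst.sendall(data)
    except OSError:
        pass  # one side vanished; stop copying
    finally:
        try:
            dst.shutdown(socket.SHUT_WR)  # pass the EOF along
        except OSError:
            pass


def _handle(client: socket.socket, caddr: str, cport: int) -> None:
    """Open a fresh upstream connection for one client and relay both ways."""
    try:
        upstream = socket.create_connection((caddr, cport))
    except OSError:
        client.close()  # backend unreachable: drop the client
        return
    with client, upstream:
        back = threading.Thread(target=_pipe, args=(upstream, client), daemon=True)
        back.start()             # upstream -> client in a second thread
        _pipe(client, upstream)  # client -> upstream in this thread
        back.join()


def open_listener(laddr: str, lport: int) -> socket.socket:
    """Bind and listen on laddr:lport, roughly what --laddr/--lport set up."""
    srv = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    srv.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    srv.bind((laddr, lport))
    srv.listen()
    return srv


def relay_forever(listener: socket.socket, caddr: str, cport: int) -> None:
    """Accept clients forever and relay each one to caddr:cport (--caddr/--cport)."""
    while True:
        client, _addr = listener.accept()
        threading.Thread(target=_handle, args=(client, caddr, cport),
                         daemon=True).start()
```

Calling relay_forever(open_listener("10.10.10.1", 143), "10.10.10.2", 143) would mirror the redir command above in spirit. Because the relay never inspects what it copies, plain-text IMAP passes through untouched.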

To run redir as a daemon in the background, you’re possibly safest to add an ampersand as we do in this example. Here, instead of forwarding traffic to a remote server, we simply adjust the port numbers on our local box.

# redir --laddr=10.10.10.1 --lport=143 --caddr=10.10.10.1 --cport=1234 &

You might also explore the “daemonize” command to assist. I should say that I have had mixed results from this in the past, however. If you want to experiment then there’s a man page here: http://linux.die.net/man/1/daemonize

You can also use the “screen” command to open up a session and leave the command running in the background. There’s a nicely written doc on the excellent “screen” utility on the slick Arch Linux wiki: https://wiki.archlinux.org/index.php/GNU_Screen

The port-change approach above is also an excellent way of catching visitors to a service whose clients aren’t aware of a port number change. Say, for example, you have a clever daemon that can listen for both encrypted traffic (for the sake of argument, IMAP usually uses TCP port 993 for this) and unencrypted traffic (usually TCP port 143). You could redirect traffic destined for TCP port 143 to TCP port 993 for a short period of time while you tell your users to update their software. That way, you might eventually be able to close another port on your firewall and keep things simpler.

Handling IP address changes

Another life-saving use of the magical redir utility is when DNS or IP address changes take place. Consider that you have a busy website listening on TCP ports 80 and 443. All hell breaks loose with your ISP, and you’re told that you have 10 days to migrate to a new set of IP addresses. Usually this wouldn’t be too bad, but the ISP in question has set your DNS TTL (Time To Live) to a whopping seven days. This means that resolvers may keep handing out your old IP address for up to seven days after you update your DNS records, so you need to make the move quickly. Thankfully, the very slick redir tool can come to the rescue.

Having bound a new IP address to a machine, you simply use redir on your HTTP and HTTPS ports to point back at the old server’s IP address.

Then, you change your DNS to reflect the new IP address as soon as possible. With 10 days’ notice and a seven-day TTL, the remaining three days of grace should be enough to catch the majority of out-of-date DNS answers. Even if they aren’t, you could simply use the superb redir in the opposite direction if your ISP lets you run something on the old IP address. That way, any visitors holding stale DNS answers who arrive at your old server are simply forwarded to your new server. In theory (I’ve managed this in the past with a government website), you should have zero downtime throughout, and even if a few connections go astray during the switchover, the percentage will be so negligible that your users probably won’t be affected.

In case you’re not aware, DNS caching would only affect users whose resolvers had looked up your site in the seven days prior to the change of IP address. In other words, any new visitors to the website would simply be served the new IP address by DNS servers, without any issue whatsoever.
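The timing in this scenario boils down to simple date arithmetic, which the following Python sketch spells out. The dates are purely hypothetical (notice arriving on 1 March, old addresses withdrawn ten days later), and the worst case assumed is a resolver that cached the old record just before you changed it.

```python
# Illustrative arithmetic only; the dates below are hypothetical.
from datetime import date, timedelta


def stale_answers_until(dns_changed: date, ttl: timedelta) -> date:
    """Worst case: a resolver cached the old record just before the change,
    so it may keep handing out the old IP for a full TTL afterwards."""
    return dns_changed + ttl


def grace_period(dns_changed: date, old_ip_gone: date, ttl: timedelta) -> timedelta:
    """Slack between the last stale answer expiring and the old IP vanishing.
    A negative result means some visitors still hold the old address after
    it has gone."""
    return old_ip_gone - stale_answers_until(dns_changed, ttl)


notice = date(2024, 3, 1)                     # hypothetical notice date
old_ip_gone = notice + timedelta(days=10)     # ISP's 10-day deadline
ttl = timedelta(days=7)                       # the whopping seven-day TTL

print(grace_period(notice, old_ip_gone, ttl).days)                       # 3
print(grace_period(notice + timedelta(days=4), old_ip_gone, ttl).days)   # -1
```

Changing DNS on the day the notice arrives leaves the three days of grace; waiting four days leaves a one-day window in which stale answers point at an address that no longer exists, which is exactly the gap that running redir on the old IP would cover.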

Voting by Proxy

I would be remiss not to mention that, of course, iptables also has a powerful grip on traffic hitting your boxes. We can deploy the mighty iptables so that a client unwittingly pushes its traffic through a conduit, allowing a large network to filter which websites its users are allowed to access, for example.

There’s a slightly outdated document on the excellent Linux Documentation Project (TLDP) website.

With the super-natty redir tool, we can create a transparent proxy as follows (incidentally, transparent proxies are also known as intercepting proxies or inline proxies):

# redir --transproxy 10.10.10.10 80 4567

In this example, we are simply forwarding all traffic destined for TCP port 80 to TCP port 4567 so that the proxy server can filter using its rules.

There’s also a potentially useful option called “--connect”, which lets redir push connections through an HTTP proxy that supports the CONNECT method.

To use this option, give the IP address and port of the proxy with the “--caddr” and “--cport” options respectively, and pass “--connect” the host and port which you want the proxy to connect onwards to.
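Under the hood, CONNECT is a small handshake: the client asks the proxy to open a TCP connection to a host and port, waits for a 200 status line, and from then on simply relays bytes. Here’s a hedged Python sketch of that handshake; open_connect_tunnel is a hypothetical helper of my own rather than anything from redir, and it skips proxy authentication entirely.

```python
# Illustrative sketch of an HTTP CONNECT handshake; not redir's code.
import socket


def open_connect_tunnel(proxy_host: str, proxy_port: int,
                        target_host: str, target_port: int,
                        timeout: float = 10.0) -> socket.socket:
    """Ask an HTTP proxy to open a TCP tunnel to target_host:target_port via
    CONNECT; return the socket once the proxy answers with status 200."""
    sock = socket.create_connection((proxy_host, proxy_port), timeout=timeout)
    target = f"{target_host}:{target_port}"
    request = f"CONNECT {target} HTTP/1.1\r\nHost: {target}\r\n\r\n"
    sock.sendall(request.encode("ascii"))

    # Read the response headers one byte at a time so we never swallow
    # tunnelled data that might follow the blank line.
    response = b""
    while not response.endswith(b"\r\n\r\n"):
        byte = sock.recv(1)
        if not byte:
            sock.close()
            raise ConnectionError("proxy closed the connection during CONNECT")
        response += byte

    status_line = response.split(b"\r\n", 1)[0].decode("latin-1")
    parts = status_line.split()
    if len(parts) < 2 or parts[1] != "200":
        sock.close()
        raise ConnectionError(f"proxy refused CONNECT: {status_line}")
    return sock  # from here on, bytes flow straight through to the target
```

Once the socket comes back, it can be treated exactly like a direct connection to the target, which is why a redirector only has to perform this exchange once per client before it starts copying traffic.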

Shaping

I’ve expressed my reservations about redir handling encrypted traffic because of certificates sometimes messing things up. The same applies to some other two-way communication protocols, or those which open up another port, such as FTP (File Transfer Protocol) with its separate data connection. However, if you’re concerned with how much bandwidth might be forwarded, then with some experimentation redir can help. Again, you might have mixed results.

You can cap how much bandwidth is allowed through your redirection with this option, which takes a ceiling in bits per second:

--max_bandwidth

The redir manual page does warn that the algorithm employed is a basic one and can’t be expected to be entirely accurate all the time. Think of such algorithms as looking at a period of a few seconds and comparing the recorded throughput with the ceiling which you’ve set. When it comes to throttling and shaping bandwidth, it’s not easy to get 100 percent accuracy. Even shaping with the powerful “tc” Linux tool, combined with a suitable “qdisc” for the job in hand, is prone to errors, especially at very low throughput rates, despite the fact that it works on an industrial scale.
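To give a flavour of what a “basic” algorithm means here, the following Python sketch throttles by comparing the bits sent so far with what the ceiling allows since the start, then working out how long to pause. It’s my own illustration assuming a bits-per-second ceiling, not redir’s actual code; SimpleThrottle and send_throttled are hypothetical names.

```python
# Illustrative sketch of a basic rate limiter; not redir's implementation.
import time
from typing import Callable, Iterable


class SimpleThrottle:
    """Compare bits sent so far with what the ceiling allows since start,
    and report how long to pause before sending more."""

    def __init__(self, max_bps: int, clock: Callable[[], float] = time.monotonic):
        if max_bps <= 0:
            raise ValueError("max_bps must be positive")
        self.max_bps = max_bps  # ceiling in bits per second
        self.clock = clock
        self.start = clock()
        self.bits_sent = 0

    def delay_for(self, nbytes: int) -> float:
        """Account for nbytes just read; return seconds to sleep before
        sending them so the average since start stays at or below max_bps."""
        self.bits_sent += nbytes * 8
        allowed_at = self.start + self.bits_sent / self.max_bps
        return max(0.0, allowed_at - self.clock())


def send_throttled(chunks: Iterable[bytes], send: Callable[[bytes], None],
                   throttle: SimpleThrottle) -> None:
    """Forward each chunk via send(), sleeping as the throttle dictates."""
    for chunk in chunks:
        time.sleep(throttle.delay_for(len(chunk)))
        send(chunk)
```

Because it only tracks the running average since the start, an idle spell lets later data burst through unchecked until the average catches up, which is one reason simple limiters like this aren’t 100 percent accurate over short periods.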

My Network Is Down

The Traffic Control tool, “tc”, which I’ve just mentioned, is also capable of simulating somewhat unusual network conditions. For example, if you wanted to simulate packets being delayed in transit (you might want to test this with pings), then you can use this “tc” command:

# tc qdisc add dev eth0 root netem delay 250ms

Append another value to the end of that command (e.g., “50ms”) and you then get a plus or minus variation in the delay.

Once that netem qdisc is in place, you can alter it to simulate packet loss like this:

# tc qdisc change dev eth0 root netem loss 10%

With all going well, this should drop 10 percent of packets at random. When you’ve finished testing, “tc qdisc del dev eth0 root” removes the netem settings again. If it doesn’t work, then the manual can be found here: http://linux.die.net/man/8/tc and real-life examples here: http://www.admin-magazine.com/Archive/2012/10
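If it helps to picture what those settings do to a stream of pings, here’s a toy Python model combining both, roughly as a command such as “netem delay 250ms 50ms loss 10%” would. It’s a hedged illustration, not how netem is implemented: simulate_netem is a hypothetical name, the jitter is assumed to be uniform, and real netem also supports correlation values and other delay distributions.

```python
# Toy model of netem-style delay, jitter and loss; not netem's implementation.
import random
from typing import List, Optional


def simulate_netem(n_packets: int, delay_ms: float, jitter_ms: float = 0.0,
                   loss: float = 0.0,
                   rng: Optional[random.Random] = None) -> List[Optional[float]]:
    """Each packet is dropped with probability `loss`; otherwise it is delayed
    by delay_ms plus a uniform offset in [-jitter_ms, +jitter_ms] (assumed
    distribution). None marks a dropped packet."""
    if rng is None:
        rng = random.Random()
    results: List[Optional[float]] = []
    for _ in range(n_packets):
        if rng.random() < loss:
            results.append(None)  # packet lost
        else:
            results.append(delay_ms + rng.uniform(-jitter_ms, jitter_ms))
    return results


# 1,000 hypothetical pings through "delay 250ms 50ms loss 10%".
sample = simulate_netem(1000, 250, 50, loss=0.10, rng=random.Random(42))
delivered = [d for d in sample if d is not None]
print(f"lost {sample.count(None)} of {len(sample)}; "
      f"delays {min(delivered):.0f}-{max(delivered):.0f} ms")
```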

I mention the fantastic “tc” at this juncture, because you might want to deploy similar settings using the versatile redir utility. It won’t offer you the packet loss functionality; however, it will add a random delay, which might be enough to make users look at their settings and then fix their client-side config without removing all access to their service.

Note that redir also supports the “--random_wait” option. Going by the manual, redir will pause for a random period of between zero and two times the value you put after that option, in milliseconds, before sending each “packet” out. This option can also be used together with another (the “--bufsize” option). The manual explains that it doesn’t deal directly with network packets for its random delays but instead defines them this way:

“A “packet” is a bloc of data read in one time by redir. A “packet” size is always less than the bufsize (see also --bufsize).”

By default, the buffer size is 4,096 bytes; experiment as you wish if you want to alter the throughput speeds experienced by your redirected traffic. In the next article, we’ll look at using iptables to alter how your traffic is manipulated.
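The interplay between those two options is easier to see in a short Python sketch. It splits data into reads of at most bufsize bytes, redir’s notion of a “packet”, and pairs each with a random pause of up to twice the wait value. This is my own illustration: random_wait_schedule is a hypothetical name, the pause is assumed to be uniformly distributed (the manual doesn’t say), and real socket reads don’t always fill the buffer the way this sketch does.

```python
# Illustrative sketch of --random_wait/--bufsize interplay; not redir's code.
import random
from typing import List, Optional, Tuple


def random_wait_schedule(data: bytes, bufsize: int = 4096, wait_ms: int = 0,
                         rng: Optional[random.Random] = None) -> List[Tuple[int, float]]:
    """Split data into reads of at most bufsize bytes and pair each with a
    random pause of 0 to 2*wait_ms milliseconds (uniform, by assumption)."""
    if bufsize <= 0:
        raise ValueError("bufsize must be positive")
    if rng is None:
        rng = random.Random()
    schedule = []
    for offset in range(0, len(data), bufsize):
        chunk = data[offset:offset + bufsize]
        pause = rng.uniform(0, 2 * wait_ms) if wait_ms > 0 else 0.0
        schedule.append((len(chunk), pause))
    return schedule
```

With the default 4,096-byte buffer, 10,000 bytes become three “packets” and so three pauses. Shrink the buffer to 512 bytes and the same data needs 20 pauses, averaging roughly 100 ms each with a wait value of 100 under the uniform assumption, which is why a smaller bufsize makes a random wait bite much harder on throughput.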

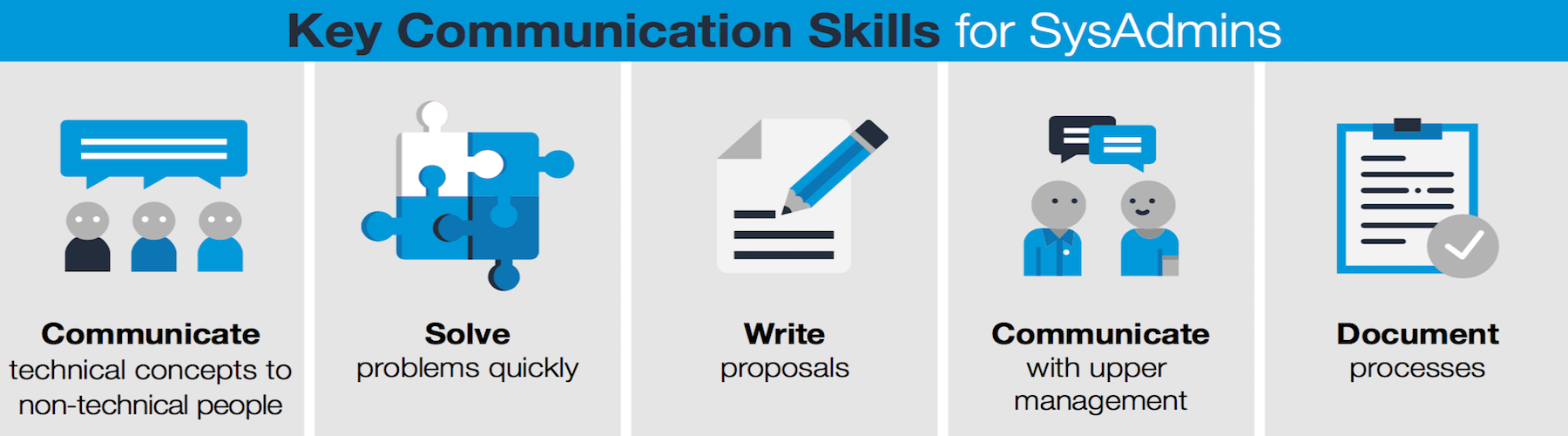


Chris Binnie’s latest book, Linux Server Security: Hack and Defend, shows how hackers launch sophisticated attacks to compromise servers, steal data, and crack complex passwords, so you can learn how to defend against these attacks. In the book, he also talks you through making your servers invisible, performing penetration testing, and mitigating unwelcome attacks. You can find out more about DevSecOps and Linux security via his website (http://www.devsecops.cc).