AspenCore has released the results of an embedded technology survey of its EETimes and Embedded readers. The survey indicates that open source operating systems like Linux and FreeRTOS continue to dominate, while Microsoft’s embedded platforms and other proprietary OSes are declining.

Dozens of market studies are happy to tell you how many IoT gizmos are expected to ship by 2020, but few research firms regularly dig into embedded development trends. That’s where reader surveys come in handy. Our own joint survey with LinuxGizmos readers on hacker board trends offers insights into users of community-backed Linux and Android SBCs. The AspenCore survey has a smaller sample (1,234 vs. 1,705 respondents), but it is broader and more in-depth, asking many more questions and spanning developers who use a range of OSes on both MCUs and application processors.

The survey, which was taken in March and April of this year, does not perfectly represent global trends. The respondents are predominantly located in the U.S. and Canada (56 percent), followed by Europe/EMEA (25 percent) and Asia (11 percent). They also tend to be older, with an average of 24 years out of college, and they work at larger, established companies averaging 3,452 employees, on teams averaging 15 engineers.

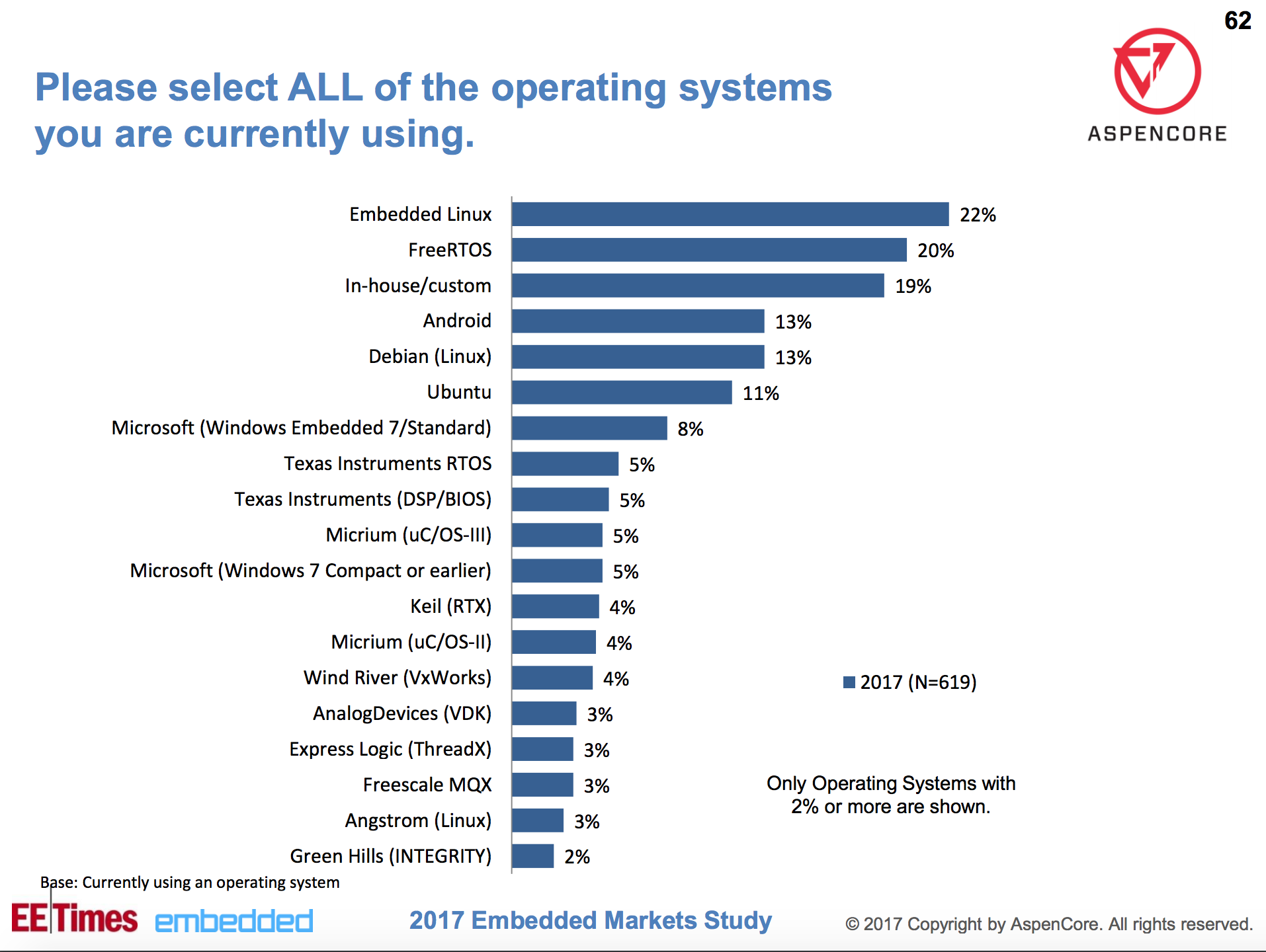

As shown by the chart above, Linux was dominant when readers were asked to list all the embedded OSes they used. Some 22 percent chose “Embedded Linux,” compared to 20 percent selecting the open source FreeRTOS. Linux’s real share may be considerably higher, since the 22 percent figure may only partially overlap with Debian and Android (13 percent each), Ubuntu (11 percent), and Angstrom (3 percent).

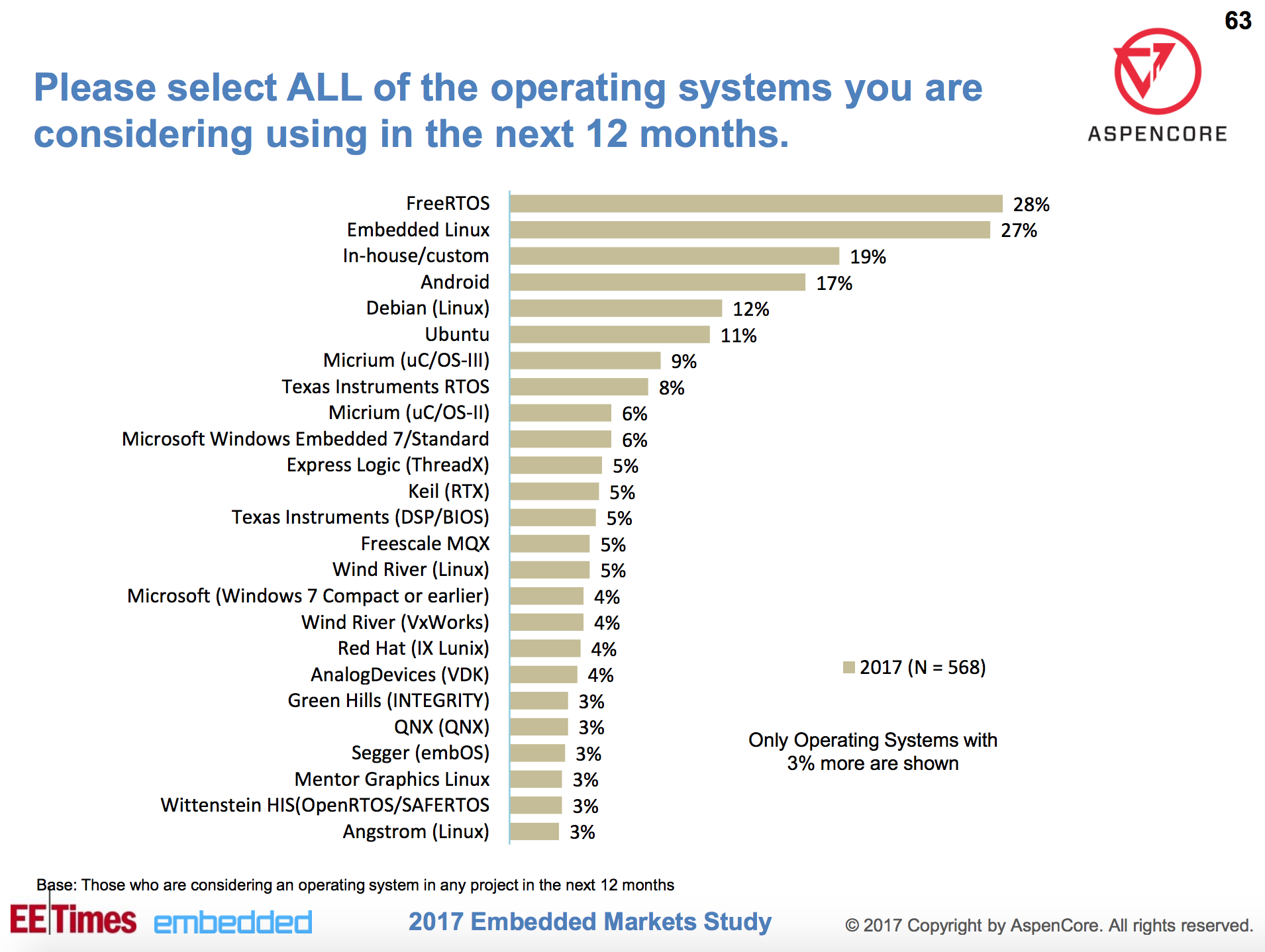

Looking at plans for next year, FreeRTOS and Embedded Linux jump to 28 percent and 27 percent, respectively. Android also saw a sizable boost to 17 percent, while Debian dropped to 12 percent and Ubuntu and Angstrom held steady. The chief losers here are Microsoft Windows Embedded and Windows Compact, which currently stand at 8 percent and 5 percent, respectively, and drop to 6 percent and 4 percent in future plans. Windows 10 IoT did not make the list at all.

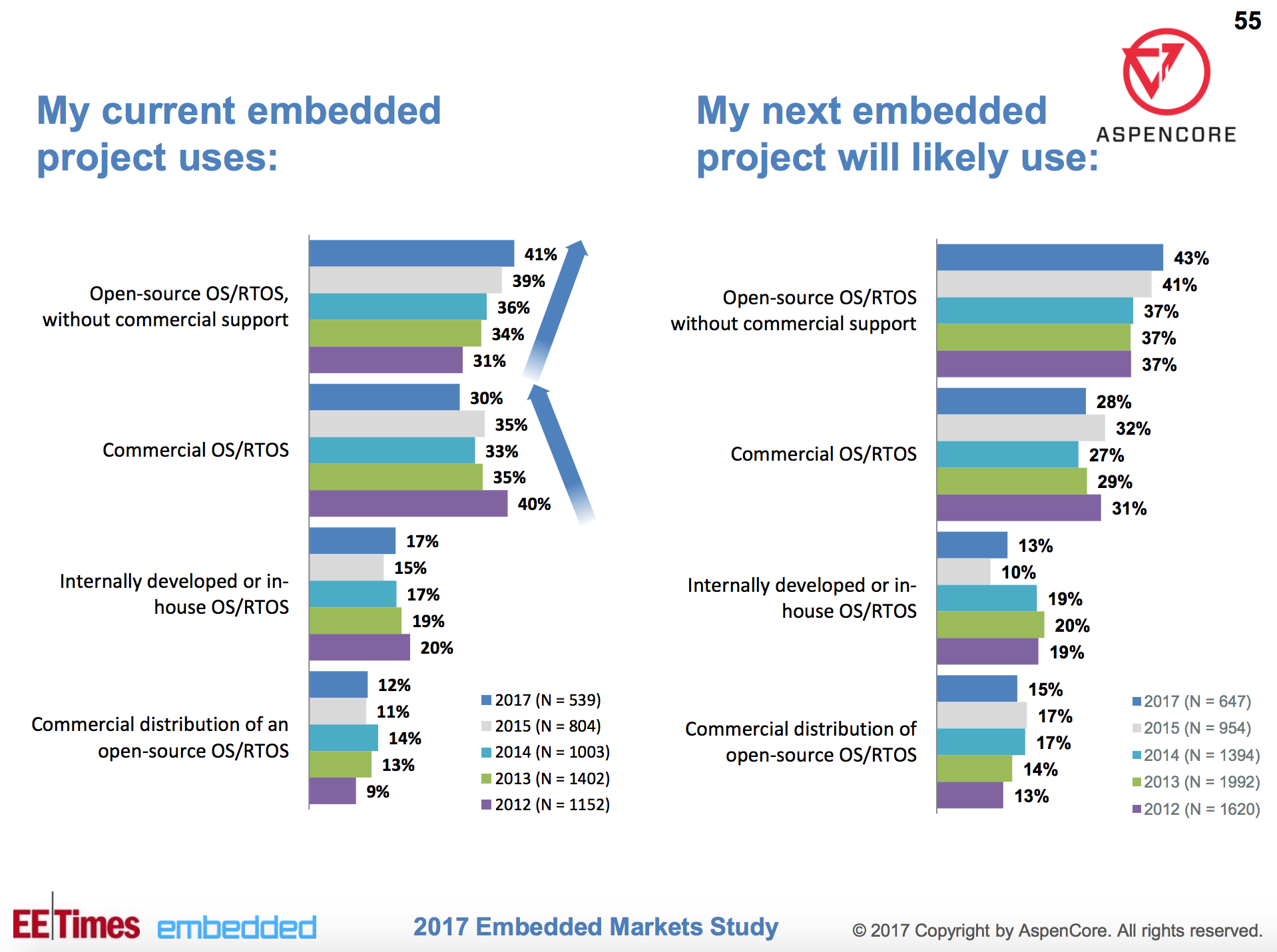

As seen in the above graph, open source operating systems offered without commercial support beat commercial OSes by 41 percent to 30 percent. The trend toward open source has been consistent for the last five years of the survey, and plans for future projects suggest it will continue, with 43 percent planning to use open source vs. 28 percent for commercial.

In-house OSes appear to be in gradual decline, while commercial distros based on open source, such as the Yocto-based Wind River Linux and Mentor Embedded Linux, are growing slightly. Respondents cited better real-time capabilities (45 percent) as the top advantage of commercial offerings, followed by hardware compatibility, code size/memory usage, tech support, and maintenance, all in the mid-30-percent range. Drawbacks included expense and vendor lock-in.

When respondents were asked why they chose an OS in general, availability of full source code led at 39 percent, followed by “no royalties” (30 percent) and by tech support and HW/SW compatibility, both at 27 percent. Next came “freedom to customize or modify” and open source availability, both at 25 percent.

Increased interest in Linux and Android was also reflected in responses to a question about which industry conferences readers attended last year and expected to attend this year. The Linux Foundation’s Embedded Linux Conferences saw one of the larger proportional increases, from 5.2 to 8.0 percent, while the Android Builders Summit jumped from 2.7 to 4.5 percent.

Only 19 percent of respondents purchase off-the-shelf development boards rather than building their own boards or subcontracting them. Among that 19 percent, unspecified boards from STMicroelectronics and TI are tied for most popular at 10.7 percent, followed by similarly unspecified boards from Xilinx, NXP, and Microchip. The sixth- through eighth-ranked entries are more familiar: Arduino (5.6 percent), Raspberry Pi (4.2 percent), and BeagleBone Black (3.4 percent).

When the question was asked slightly differently (“What form factor do you work with?”), these same three boards were listed alongside categories like 3.5-inch and ATX. Here, the Arduino (17 percent), RPi (16 percent), and BB Black (10 percent) trailed custom designs (26 percent) and proprietary form factors (23 percent). When asked which form factors they planned to use this year, readers pushed the Raspberry Pi to 23 percent. The only proportionally larger increase was for ARM’s Mbed development platform, which doubled from 3 percent to 6 percent.

OS Findings Jibe with VDC Report

The key findings on OS and open source in the 2017 Embedded Market Survey are largely echoed by the most recent VDC Research study on embedded tech, published last November. (We covered the 2015 report here.) VDC’s Global Market for IoT & Embedded Operating Systems 2016 projected a compound annual growth rate (CAGR) of only 2 percent for the IoT/embedded OS market through 2020, in large part due to the open source phenomenon.

“Free and/or publicly available, open source operating systems such as Debian-based Linux, FreeRTOS, and Yocto-based Linux continue to lead new stack wins, with nearly half of surveyed embedded engineers expecting to use some type of free, open source OS on their next project,” said VDC Research.

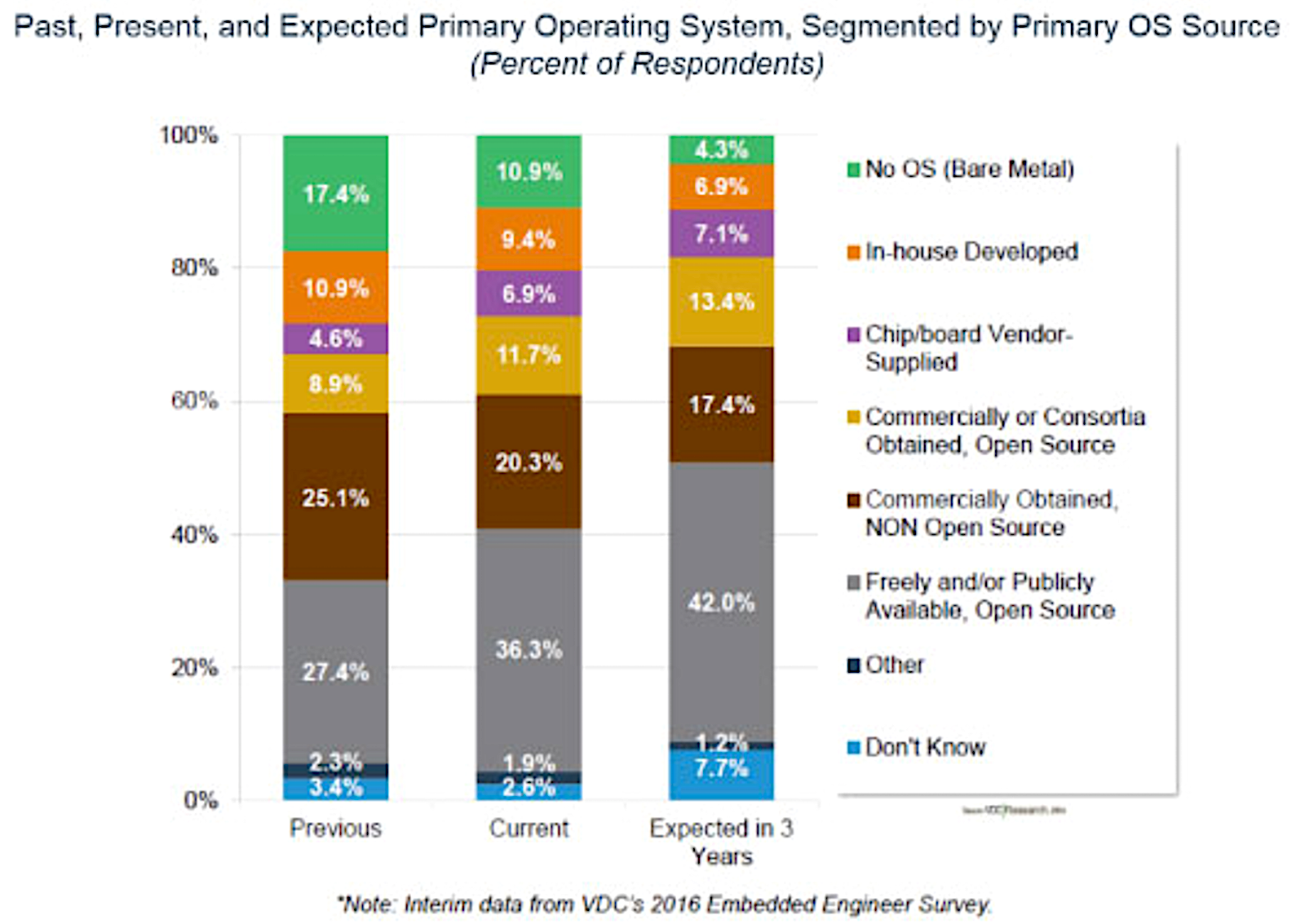

As the VDC chart above indicates, bare metal, in-house, and commercial OSes are on the decline, while open source platforms, and especially free open source platforms, are on the rise. VDC cited the decline of Microsoft’s embedded platforms and noted market uncertainty stemming from major chip vendor acquisitions, as well as questions about the future of Wind River’s platforms as the company is fully integrated into Intel.

Other Survey Findings in Chips, Wireless, and More

More than a third of the AspenCore 2017 Embedded Market Survey respondents work on industrial automation, followed by a quarter each for consumer electronics and IoT. Half the respondents said that IoT will be important to their companies in 2017.

Some 13 percent of respondents said they use 64-bit chips, up from 8 percent in 2015. The report ranks processor vendors by reader usage but does not break out the processors themselves. Most of the listed vendors appear to be MCU vendors, but many also make higher-end SoCs, and the picture is further muddied by rampant acquisitions.

The processor leaders are Texas Instruments (31 percent), followed by Freescale (NXP/Qualcomm) and Atmel (Microchip), both at 26 percent, and Microchip on its own at 25 percent. Next come STMicro (23 percent), NXP (Qualcomm) at 17 percent, and Intel at 16 percent. In future plans, TI and Freescale extend their lead, while STMicro jumps past Microchip to number three. Xilinx edges past Intel, 21 percent to 18 percent, with Intel’s Altera unit at 17 percent.

When asked which 32-bit chips they plan to use, readers ranked the Linux-capable Xilinx Zynq and NXP i.MX6 tied for third at 17 percent, behind the STM32 and Microchip’s PIC32. The Atmel SAMxx and TI Sitara families are tied for fifth at 14 percent, and the 32-bit models among Intel’s Atom and Core chips come next at 13 percent. Intel’s Linux-ready Altera FPGA SoCs follow at 12 percent, tied with Arduino. Despite the popularity of the Xilinx and Altera hybrid FPGA/ARM SoCs, use of FPGA chips overall has declined slightly to 30 percent.

C and C++ are by far the most popular programming languages, at 56 percent and 22 percent, respectively. C has lost 10 percentage points since 2015, however, while C++ has gained three points. Python saw the largest boost in future plans, rising from 3 percent to 5 percent expected usage. Git is the top version control software at 38 percent, up from 31 percent two years ago.

The most widely implemented wireless technologies were Wi-Fi (65 percent), Bluetooth (49 percent), cellular (25 percent), and 802.15.4 (ZigBee, etc.) at 14 percent. Interestingly, use of virtualization and hypervisors has dropped to 15 percent, with only 7 percent saying they plan to use the technologies in 2017.

Debugging and “meeting schedules” were the two greatest development challenges cited by readers, both at 23 percent. The leading future challenge was “managing increases in code size and complexity,” at 19 percent.

Half the readers said they were working on embedded vision technology, but slightly fewer planned to do so this year. Other advanced technologies, including machine learning (25 percent), speech (22 percent), VR (14 percent), and AR (11 percent), all saw big jumps in expected use in 2017, especially machine learning, which almost doubled to 47 percent.
