ODPi recently hosted a webinar with John Mertic, Director of ODPi at the Linux Foundation, and Tamara Dull, Director of Emerging Technologies at SAS Institute. They discussed ODPi’s recent whitepaper, 2017 Preview: The Year of Enterprise-wide Production Hadoop, and explored DataOps at Scale: the considerations businesses need to make as they move Apache Hadoop and Big Data out of proofs of concept (POCs) and into enterprise-wide, hybrid production deployments.



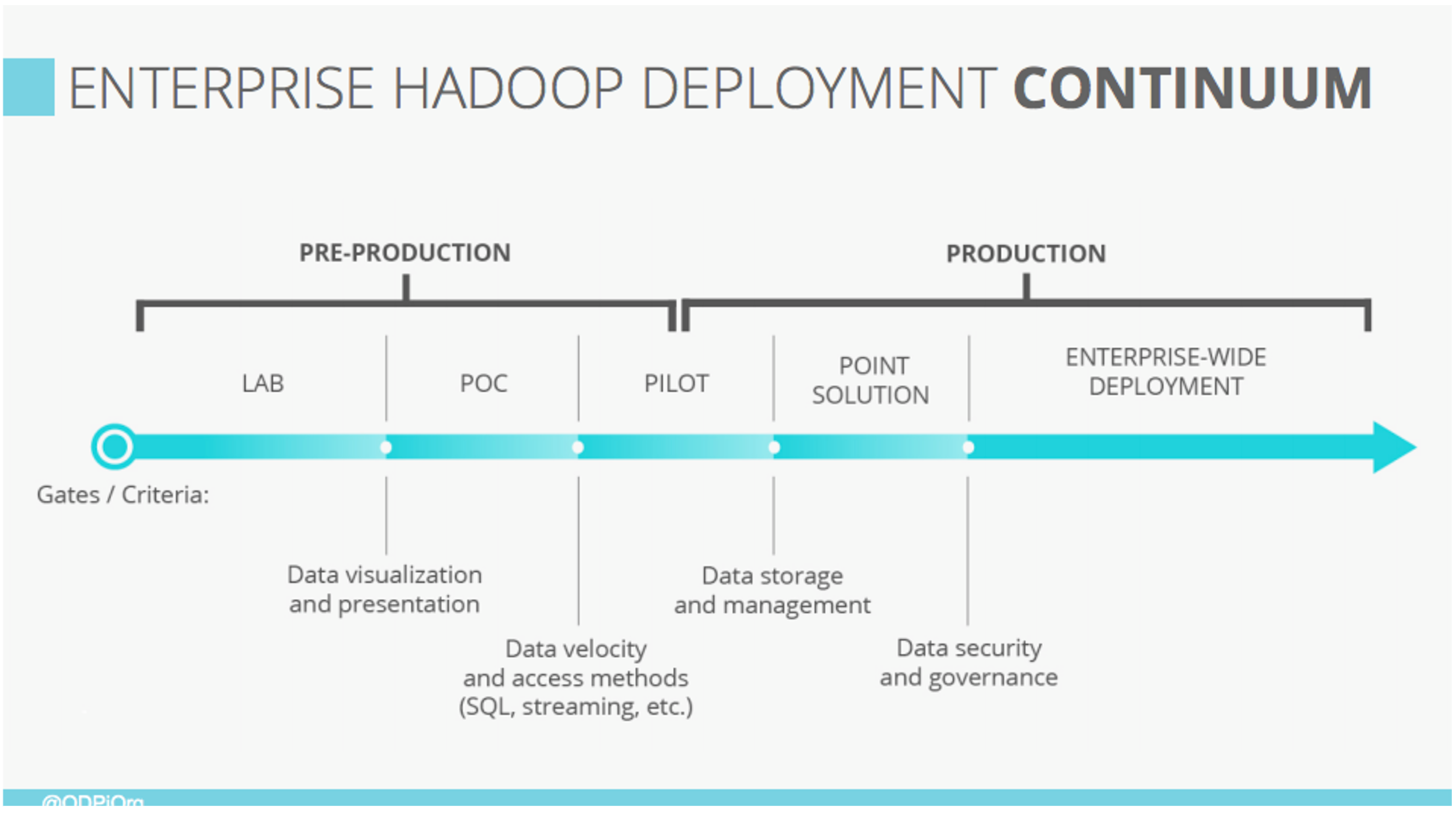

The webinar dove into why hybrid deployments are the norm for businesses moving Apache Hadoop and Big Data out of POCs and into enterprise-wide production. Mertic and Dull walked through several important considerations these businesses must address and described the step change in DataOps requirements that comes with taking Hadoop into enterprise-wide production. They also discussed why deployment and management techniques that work in limited production may not scale enterprise-wide.

The webinar was so interactive, with polls and questions, that we unfortunately did not get to every question. So we sat down with Dull and Mertic to answer the remaining ones now.

What are the top 3 considerations for data projects?

TD: I have four, not three, considerations if you want your big data project to be successful:

- Strategy: Does this project address or solve a real business problem? Does it tie back to a corporate strategy? If the answer to either question is “no,” try again.

- Integration: At the infrastructure level, how are your big data technologies going to fit into your current environment? This is where ODPi comes in. At the data level, how are you going to integrate your structured, semi-structured, and unstructured data? You must figure this out before you go into production with Hadoop.

- Privacy/Security: This is part of the data governance discussion. I highlight it because, as we move into this newer Internet of Things era, if privacy and security are not included in the design phase of whatever you’re building (product or service), you can count on this one biting you in the butt later on.

- People/Skills: Do you have the right people on your data team? Today’s big data team is an evolution of your existing BI or COE team, one that requires new, additional skills.

Why is Hadoop a good data strategy solution?

TD: Hadoop allows you to collect, store, and process any and all kinds of data at a fraction of the cost and time of more traditional solutions. If you want to give all your data a voice at the table, then Hadoop makes it possible.

Why are some companies looking to de-emphasize Hadoop?

TD: See the “top 3 considerations” question. If any of these considerations are missed, your Hadoop project will be at risk. No company wants to emphasize risky projects.

How will a stable, standardized Hadoop benefit other big data projects like Spark?

JM: By helping innovation grow in those projects. A commonly seen side effect of stabilizing the commodity core of a technology stack (Linux is a great example) is that it lets R&D efforts focus on higher levels of the stack.

How do enterprise Hadoop challenges differ across verticals (healthcare, telco, banking, etc.)?

JM: Each vertical has very specific industry regulations and practices in how data is used and classified. This makes efforts around data governance that much more crucial – sharable templates and guidelines streamline data usage and enable focus on insight and discovery.

Is Hadoop adoption different around the world (i.e., EU, APAC, South America, etc.)?

JM: Each region definitely has unique adoption patterns, depending on local business culture, the maturity of its technology sector, and how technology is adopted and implemented there. For example, we see China as a huge area of Hadoop growth, looking to adopt more full-stack solutions as the next step beyond the EDW days. The EU tends to lag somewhat behind in data analytics in general because companies there take a more deliberate approach to implementing technology, while North American companies tend to implement technologies first and then look at how to connect them to business problems.

What recent movements/impact in this space are you most excited about?

TD: We’ve been talking about “data-driven decision making” for decades. We now have the technologies to make it a reality – much quicker and without breaking the bank.

Where do you see the state of big data environments two years from now?

TD: Big data environments will be more stable and standardized. There will be less technical discussion about the infrastructure – i.e., the data plumbing – and more business discussion about analyzing the data and figuring out how to make or save money with it.

What impact does AR have on IoT and Big Data?

TD: I see this the other way around: Big data and IoT are fueling AR. Because of big data and IoT, AR can provide us with more context and a richer experience no matter where we are.

Can you recommend a resource that explains the Hadoop ecosystem? People in this space seem to assume knowledge of the different open source project names and what they do, and they explain what one component does in terms of the others. For me, it has been very difficult to figure out, e.g., “Spark is like Storm except in memory and less focused on streaming.”

TD: This is a very good question. What makes it more challenging is that the Hadoop ecosystem is growing and evolving, so you can count on today’s popular projects getting bumped to the side as newer projects come into play. I often refer to The Hadoop Ecosystem Table to understand the bigger picture and then drill down from there if I want to understand more.

We invite you to get involved with ODPi and learn more by visiting the ODPi website at https://www.odpi.org/.

We hope to see you again at an upcoming Linux Foundation webinar. Visit Linux.com to view the upcoming webinar schedule: https://www.linux.com/blog/upcoming-free-webinars-linux-foundation