Last year and at the beginning of this year, we asked you, Node.js users, to help us understand where, how and why you are using Node.js. We wanted to see what technologies you are using alongside Node.js, how you are learning Node.js, what Node.js versions you are using, and how these answers differ across the globe.

Thank you to all who participated.

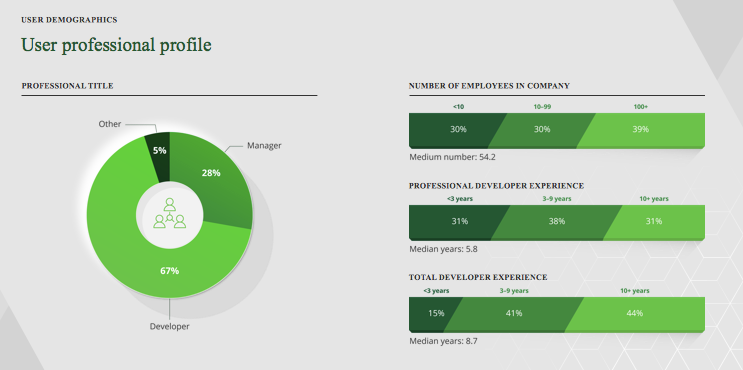

1,405 people from around the world (85+ countries) completed the survey, which was available in English and Mandarin. 67% of respondents were developers, 28% held managerial titles, and 5% listed their position as “other.” By region, respondents broke down as follows: 35% United States and Canada, 41% EMEA, 19% APAC, and 6% Latin and South America.

The survey collected a wealth of data, which revealed the following:

- Users span a broad mix of development focus, ways of using Node.js, and deployment locations.

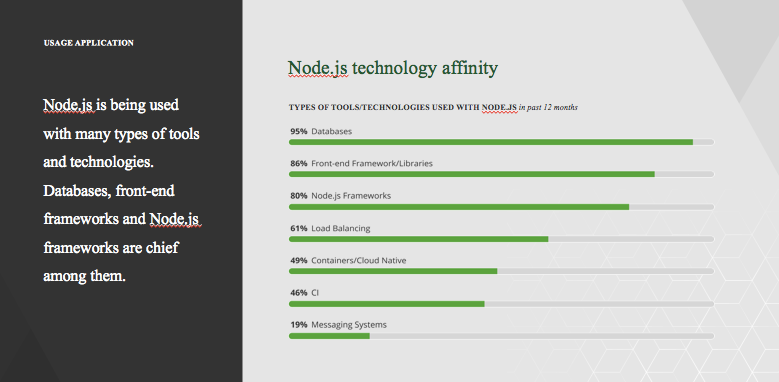

- There is a large mix of tools and technologies used with Node.js.

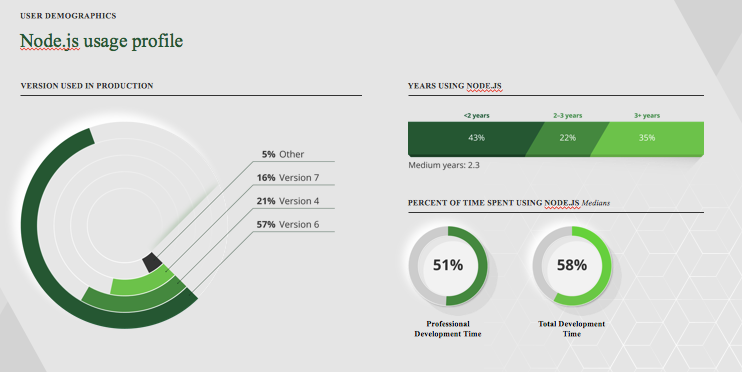

- Experience with Node.js also varies, although many respondents have been using Node.js for less than 2 years.

- Node.js is a key part of respondents’ toolkits, used for at least half of their development time.

The report also painted a detailed picture of the types of technologies being used with Node.js, language preferences alongside Node.js, and preferred production and development environments for the technology.

“Given developers’ important role in influencing the direction and pace of technology adoption, surveys of large developer communities are always interesting,” said Rachel Stephens, RedMonk Analyst. “This is particularly true when the community surveyed is strategically important like Node.js.”

In September, we will be releasing the interactive infographic of the results, which will allow you to dive deeper into your areas of interest. For the time being, check out our blog on the report below and download the executive summary here.

The Benefits of Node.js Grow with Time No Matter the Environment

Node.js is emerging as a universal development framework for digital transformation with a broad diversity of applications.

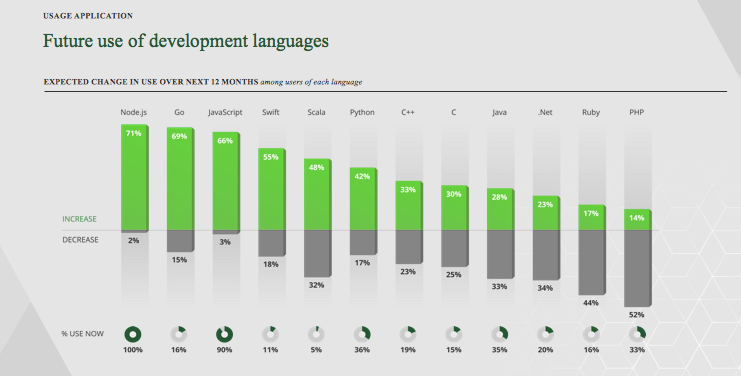

With more than 8 million Node.js instances online, three in four users are planning to increase their use of Node.js in the next 12 months. Many are learning Node.js in a foreign language, and China is the second-largest population of Node.js users outside of the United States.

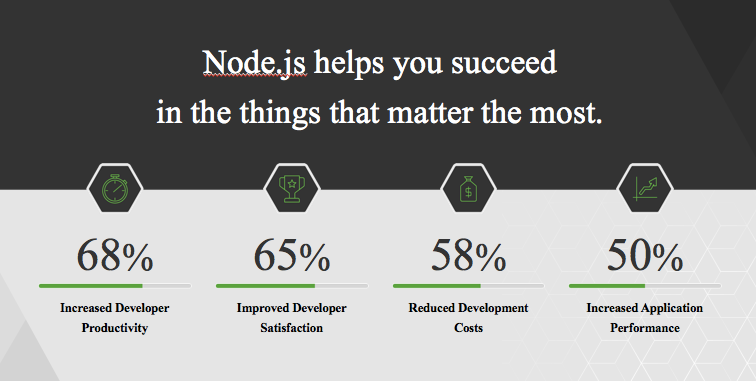

Those who have continued to use Node.js over time were more likely to note the increased business impact of the application platform. Key data includes:

Most Node.js users found that the application platform helped improve developer satisfaction and productivity, and benefited from cost savings and increased application performance.

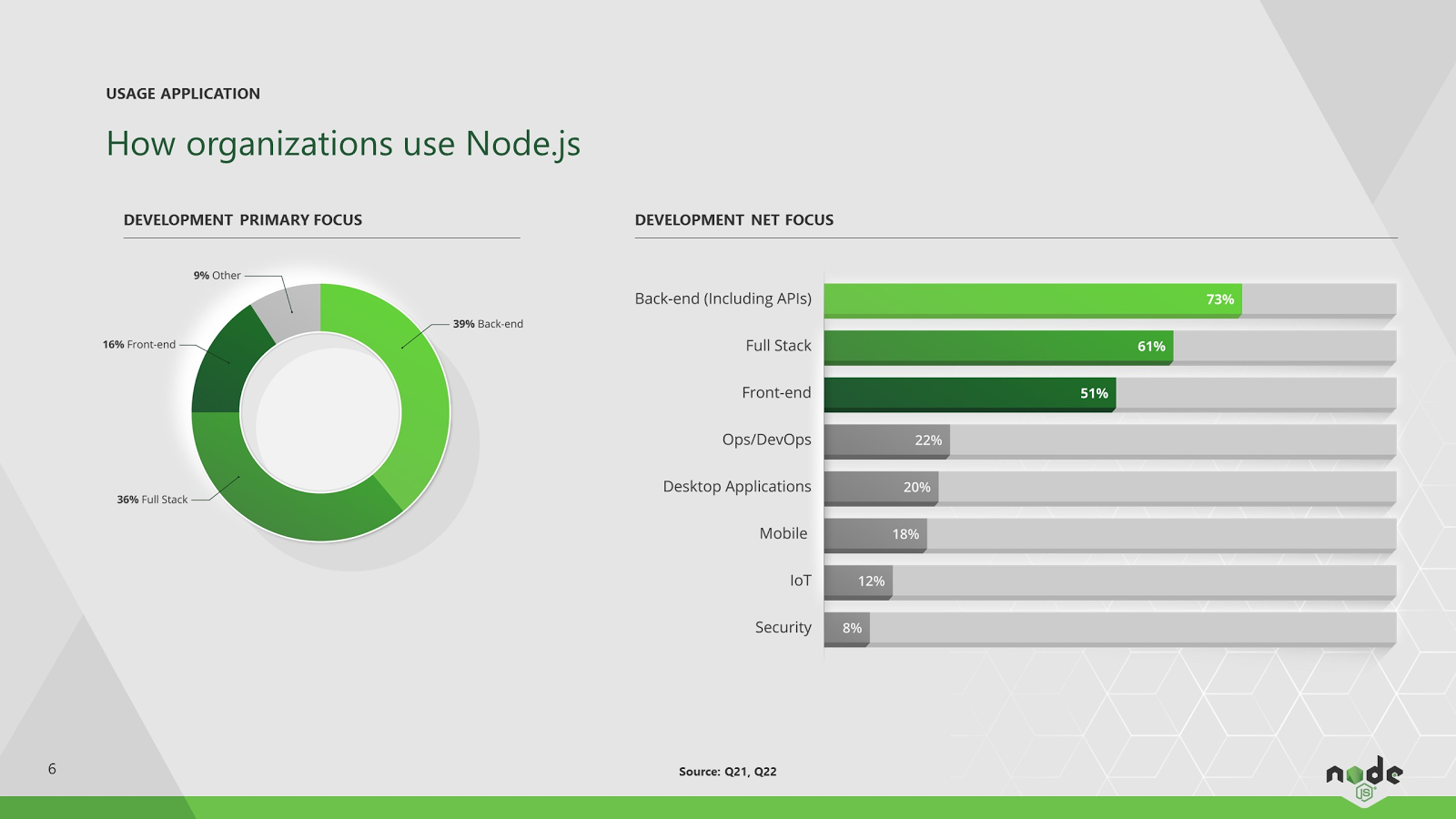

The growth of Node.js within companies is a testament to the platform’s versatility. It is moving beyond being simply an application platform, and is beginning to be used for rapid experimentation with corporate data, application modernization and IoT solutions. It is often the primary focus for developers, the majority of whom spend their time with Node.js on back-end, full-stack, and front-end work, although Node.js use is beginning to rise in Ops/DevOps and mobile as well.

Node.js Used Less Than 2 Years, but Growing Rapidly In Businesses

Experience with Node.js varied, although many respondents have been using Node.js for less than 2 years. Given the rapid pace of Node.js adoption, with a growth rate of about 100% year-over-year, this isn’t surprising.

Companies that were surveyed noted that they were planning to expand their use of Node.js for web applications, enterprise, IoT, embedded systems and big data analytics. Conversely, they are looking to decrease the use of Java, PHP and Ruby.

Modernizing systems and processes is a top priority across businesses and verticals. Node.js’ light footprint and componentized nature make it a strong fit for microservices (both container and serverless based) and for lean software development, without the need to gut legacy infrastructure.

The survey revealed that 47% of respondents are using Node.js for container and serverless-based architectures across development areas:

- 50% of respondents are using containers for back-end development.

- 52% of respondents are using containers for full-stack development.

- 39% of respondents are using containers for front-end development.

- 48% of respondents are using containers for another area of development with Node.js.

The use of Node.js extends well beyond containers and cloud-native apps to touch development with databases, front-end frameworks/libraries, load balancing, message systems and more.

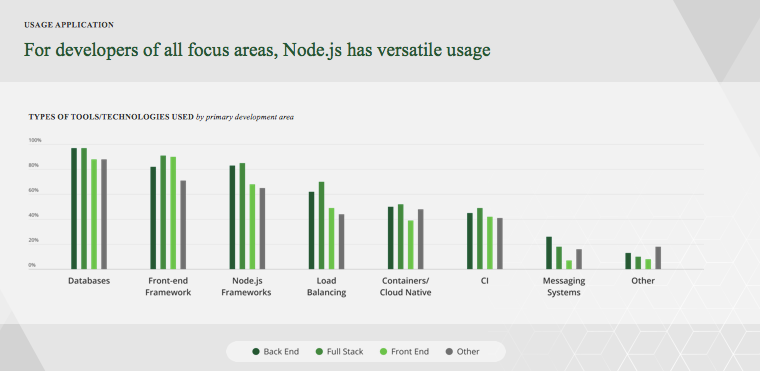

Developers across all focus areas put Node.js to versatile use.

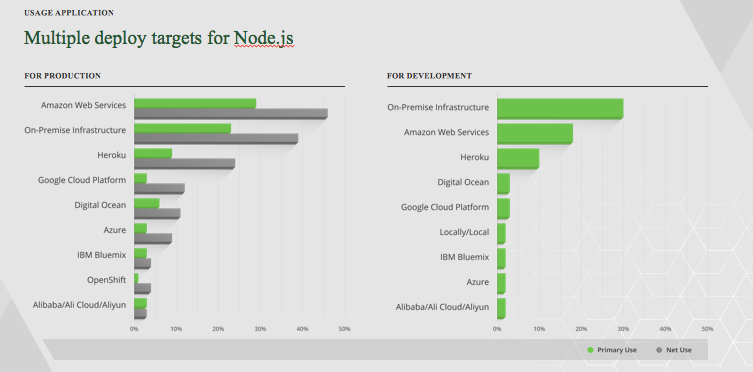

68% of survey respondents who are using Node.js for serverless are using Amazon Web Services for production. 47% of survey respondents using Node.js and serverless are using Amazon Web Services for development.

Developers who use Node.js for serverless use it across several development areas, the most popular being back-end, full stack, front-end and DevOps.

Revenues for big data and business analytics are set to grow to more than $203 billion in 2020. Vendors in this market require distributed systems within their products and rely on Node.js for data analysis.

The survey revealed that big data/business analytics developers and managers are more likely to see major business impacts after integrating Node.js into their infrastructure, with key benefits being productivity, satisfaction, cost containment, and increased application performance.

Node.js Grows in the Enterprise

Since the creation of the Long-Term Support (LTS) plan in 2015, enterprise development work with Node.js has increased. The LTS plan provides a stable LTS release line for enterprises that prioritize security and stability, and a Current release line for developers who are looking for the latest updates and experimental features. The survey revealed:

- 39% of respondents with the developer title are using Node.js for enterprise.

- 59% of respondents with a manager title are using Node.js for enterprise.

- 69% of enterprise users plan to increase their use of Node.js over the next 12 months.

- 47% of enterprise users have been using Node.js for 3+ years and 58% of developers using Node.js have 10+ years in total development experience.

The Long-Term Support versions of Node.js tend to be the most sought after for development.

*Node.js 4 and 6 are the LTS versions and are best suited for enterprises or those that favor stability and security over new features.
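If you are curious which release line your own runtime is on, here is a minimal sketch. It assumes the `process.release.lts` property, which Node.js sets to the LTS codename on LTS release lines and leaves undefined otherwise. The helper name `describeReleaseLine` is hypothetical and used only for illustration.

```javascript
// Hypothetical helper (not part of Node.js): summarizes a runtime's release line.
// `version` is a string shaped like process.version (e.g. "v6.11.2").
// `release` is an object shaped like process.release; its `lts` property
// is assumed to be present only on LTS release lines.
function describeReleaseLine(version, release) {
  // Strip the leading "v" and keep the major version number.
  const major = Number(version.replace(/^v/, '').split('.')[0]);
  // Treat a string-valued `lts` as the LTS codename; anything else means "not LTS".
  const codename = release && typeof release.lts === 'string' ? release.lts : null;
  return { major, isLts: codename !== null, codename };
}

// Report on the runtime that is executing this script.
const info = describeReleaseLine(process.version, process.release);
console.log(
  `Node.js ${info.major}.x:`,
  info.isLts ? `LTS release line (${info.codename})` : 'not an LTS release'
);
```

A check like this could sit in a startup script or CI job for teams that want to confirm they are deploying on an LTS line rather than a Current release.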

If you want to continue to learn more about Node.js, sign up for our monthly community newsletter, which will continue to pepper you with data for months to come, and also provide you with information on cool new projects using Node.js. The Node.js Foundation will also hold its annual conference from October 4–6 in Vancouver, Canada. Come join us at Node.js Interactive.

*Mark Hinkle, the Node.js Foundation’s Executive Director, will be talking about this data and providing an update on the Foundation during his keynote at NodeSummit.

This article originally appeared on HackerNoon.