Application development methodologies have seen a lot of change in recent years. With the rise and adoption of microservice architectures, cloud computing, single-page applications, and responsive design, to name a few, developers have many decisions to make, all while keeping project timelines, user experience, and performance in mind. Nowhere is this more true than in front-end development and JavaScript.

To help catch everyone up, we’ll take a brief look at the revolution in JavaScript development over the last few years. Next, we’ll look at some of the challenges and opportunities facing the front-end development community. To wrap things up, and to help lead into the next parts of this series, we’ll preview the components of a fully modern front-end stack.

The JavaScript Renaissance

When Node.js came out in 2009, it was more than just JavaScript on the command line or a web server running in JavaScript. Node.js rallied software development around something that was desperately needed: a mature and stable ecosystem focused on the front-end developer. Thanks to Node and its default package manager, npm, JavaScript saw a renaissance in how applications could be architected (e.g., Angular leveraging Observables or the functional paradigms of React) as well as how they were developed. The ecosystem thrived, but because it was young, it also churned constantly.

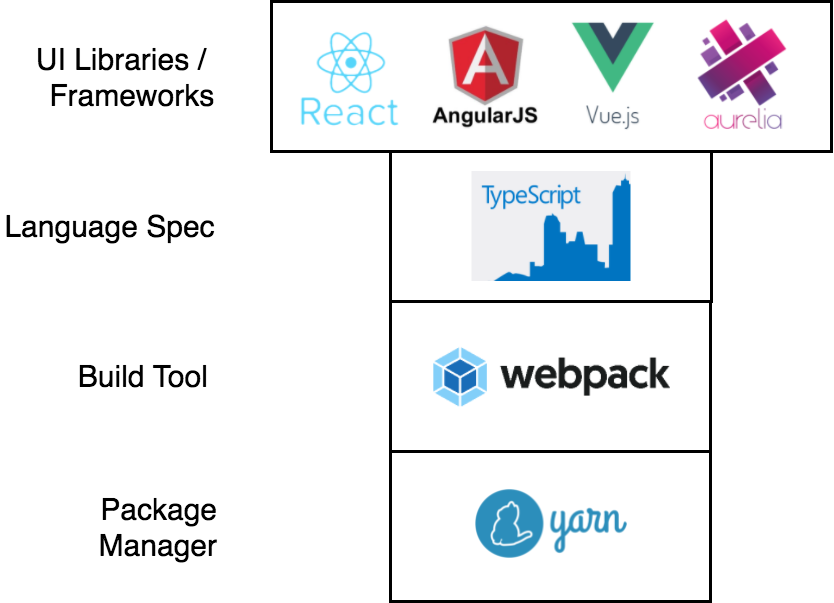

Happily, the past few years have allowed certain patterns and conventions to rise to the top. In 2015, the JavaScript community saw the release of a new spec, ES2015, along with an even greater explosion in the ecosystem. The illustration below shows just some of the most popular JavaScript ecosystem elements.

State of the JavaScript ecosystem in 2017

At Kenzan, we’ve been developing JavaScript applications for more than 10 years on a variety of platforms, from browsers to set-top boxes. We’ve watched the front-end ecosystem grow and evolve, embracing all the great work done by the community along the way. From Grunt™ to Gulp, from jQuery® to AngularJS, from copying scripts to using Bower for managing our front-end dependencies, we’ve lived it.

As JavaScript matured, so did our approach to our development processes. Building off our passion for developing well-designed, maintainable, and mature software applications for our clients, we realized that success always starts with a strong local development workflow and stack. The desire for dependability, maturity, and efficiency in the development process led us to the conclusion that the development environment could be more than just a set of tools working together. Rather, it could contribute to the success of the end product itself.

Challenges and Opportunities

With so many choices, and such a robust and blossoming ecosystem at present, where does that leave the community? While having choices is a good thing, it can be difficult for organizations to know where to start, what they need to be successful, and why they need it. As user expectations grow for how an application should perform and behave (load faster, run more smoothly, be responsive, feel native, and so on), it gets ever more challenging to find the right balance between the productivity needs of the development team and the project’s ability to launch and succeed in its intended market. There is even a term for this: analysis paralysis, the difficulty of arriving at a decision because of overthinking and needlessly complicating a problem.

Chasing the latest tools and technologies can inhibit velocity and the achievement of significant milestones in a project’s development cycle, risking time to market and customer retention. At a certain point an organization needs to define its problems and needs, and then make a decision from the available options, understanding the pros and cons so that it can better anticipate the long-term viability and maintainability of the product.

At Kenzan, our experience has led us to define and coalesce around some key concepts and philosophies that ensure our decisions will help solve the challenges we’ve come to expect from developing software for the front end:

- Leverage the latest features available in the JavaScript language to support more elegant, consistent, and maintainable source code (like import/export (modules), class, and async/await).
- Provide a stable and mature local development environment with low-to-no maintenance (that is, no global development dependencies for developers to install or maintain, and intuitive workflows/tasks).
- Adopt a single package manager to manage front-end and build dependencies.
- Deploy optimized, feature-based bundles (packaged HTML, CSS, and JS) for smarter, faster distribution and downloads for users. Combined with HTTP/2, large gains can be made here for little investment to greatly improve user experience and performance.
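
To make the first principle concrete, here is a minimal sketch of how modules, class, and async/await can look together in one file. The names (User, UserService, fetchUser) are hypothetical and exist only for illustration; the stand-in fetchUser function takes the place of a real network call.

```typescript
// Hypothetical module: user-service.ts (all names are illustrative assumptions).

export interface User {
  id: number;
  name: string;
}

// `class` gives stateful objects a familiar, declarative structure.
export class UserService {
  private cache = new Map<number, User>();

  // `async/await` lets asynchronous code read from top to bottom.
  async getUser(id: number): Promise<User> {
    const cached = this.cache.get(id);
    if (cached) {
      return cached;
    }
    const user = await fetchUser(id);
    this.cache.set(id, user);
    return user;
  }
}

// Stand-in for a network request; a real application would call an API here.
function fetchUser(id: number): Promise<User> {
  return Promise.resolve({ id, name: `user-${id}` });
}

// Another module would consume this with:
//   import { UserService } from "./user-service";
```

Because the module exports only what other files need, the cache and the fetch helper stay private to this file.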

A New Stack

In this series, our focus is on three core components of a front-end development stack. For each component, we’ll look at the tool that we think brings the best balance of dependability, productivity, and maintainability to modern JavaScript application development, and that best aligns with our desired principles.

Package Management: Yarn

The challenge of how to manage and install external vendor or internal packages in a dependable and consistently reproducible way is critical to the workflow of a developer. It’s also critical for maintaining a CI/CD (continuous integration/continuous delivery) pipeline. But which package manager do you choose given all the great options available to evaluate? npm? jspm? Bower? CDN? Or do you just copy and paste from the web and commit to version control?

Our first article will look at Yarn and how it focuses on being fast and providing stable builds. Yarn accomplishes this by recording resolved versions in a lockfile (yarn.lock), ensuring the version of a vendor dependency installed today will be the exact same version installed by a developer next week. It is imperative that this process is frictionless and reliable, distributed and at scale, because any downtime prevents developers from being able to code or deploy their applications. Yarn aims to address these concerns by providing a fast, reliable alternative to the npm CLI for managing dependencies, while continuing to leverage the npm registry as the host for public Node packages. Plus it’s backed by Facebook, an organization that has scale in mind when developing its tooling.
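
The idea of "same version today and next week" can be illustrated with a small conceptual sketch. This is not Yarn's actual resolution algorithm; it only contrasts a simplified caret range, which can drift as new versions are published, with a recorded lockfile entry, which does not. The package name some-lib and all function names are hypothetical.

```typescript
// Conceptual sketch only: NOT Yarn's real algorithm, just the pinning idea.

type Version = [number, number, number];

function parse(v: string): Version {
  const [major, minor, patch] = v.split(".").map(Number);
  return [major, minor, patch];
}

function compare(a: Version, b: Version): number {
  for (let i = 0; i < 3; i++) {
    if (a[i] !== b[i]) return a[i] - b[i];
  }
  return 0;
}

// Simplified caret rule (majors >= 1): same major, not lower than the base version.
function satisfiesCaret(base: string, candidate: string): boolean {
  const b = parse(base);
  const c = parse(candidate);
  return c[0] === b[0] && compare(c, b) >= 0;
}

// Without a lockfile: pick the newest published version that satisfies the range.
function resolveRange(base: string, published: string[]): string {
  const matches = published.filter((v) => satisfiesCaret(base, v));
  if (matches.length === 0) {
    throw new Error(`no published version satisfies ^${base}`);
  }
  matches.sort((x, y) => compare(parse(x), parse(y)));
  return matches[matches.length - 1];
}

// With a lockfile: reuse the recorded version, or resolve once and record it.
function resolveLocked(
  name: string,
  base: string,
  published: string[],
  lockfile: Map<string, string>
): string {
  const key = `${name}@^${base}`;
  const locked = lockfile.get(key);
  if (locked !== undefined) return locked;
  const resolved = resolveRange(base, published);
  lockfile.set(key, resolved);
  return resolved;
}

const lockfile = new Map<string, string>();
const today = ["1.2.0", "1.2.1"];
const nextWeek = today.concat("1.3.0"); // a new release appears

resolveLocked("some-lib", "1.2.0", today, lockfile); // records 1.2.1
const floating = resolveRange("1.2.0", nextWeek); // "1.3.0"
const pinned = resolveLocked("some-lib", "1.2.0", nextWeek, lockfile); // "1.2.1"
```

The range alone picks up the new release, while the lockfile keeps returning the version that was recorded on the first install.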

Application Bundling: webpack™

The orchestration of building a front-end application, which is typically composed of a mix of HTML, CSS, and JS, as well as binary formats like images and fonts, can be tricky to maintain and even more challenging to orchestrate. So how does one turn a code base into an optimized, deployable artifact? Gulp? Grunt? Browserify? Rollup? SystemJS? All of these are great options with their own strengths and weaknesses, but we need to make sure the choice reflects the principles we discussed above.

webpack is a build tool specifically designed to package and deploy web applications composed of any kind of asset (HTML, CSS, JS, images, fonts, and so on) into an optimized payload to deliver to users. We want to take advantage of the latest language features like import/export and class to make our code future-facing and clean, while letting the tooling orchestrate the bundling of our code such that it is optimized for both the browser and the user. webpack can do just that, and more!
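
As a rough sketch of what this looks like in practice, the object below shows the general shape of a webpack configuration with one entry per feature. The entry paths and the choice of ts-loader, style-loader, and css-loader are assumptions for illustration, and settings such as the output directory are left out for brevity.

```typescript
// Sketch of a webpack configuration object. In a real project this would be the
// export of webpack.config.js; the entry paths and loader choices are assumptions.
const config = {
  // One entry per feature yields one bundle per feature.
  entry: {
    home: "./src/home/index.ts",
    checkout: "./src/checkout/index.ts",
  },
  output: {
    // [name] is replaced with the entry key, e.g. home.bundle.js
    filename: "[name].bundle.js",
  },
  resolve: {
    // Lets imports omit these file extensions.
    extensions: [".ts", ".js"],
  },
  module: {
    rules: [
      // Each rule maps a file pattern to the loader(s) that process it.
      { test: /\.ts$/, use: "ts-loader" },
      { test: /\.css$/, use: ["style-loader", "css-loader"] },
    ],
  },
};
```

Each rule tells webpack how to treat a type of file it encounters while following import statements, which is how non-JavaScript assets end up in the same dependency graph.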

Language Specification: TypeScript

Writing clean code in and of itself is always a challenge. JavaScript, which is a dynamic and loosely typed language, has afforded developers a medium to implement a wide range of design patterns and conventions. Now, with the latest JavaScript specification, we see more solid patterns from the programming community making their way into the language. Support for features like import/export and class has brought a fundamental paradigm shift to how a JavaScript application can be developed, and can help ensure that code is easier to write, read, and maintain. However, there is still a gap in the language that generally begins to impact applications as they grow: maintainability and integrity of the source code, and predictability of the system (the application state at runtime).

TypeScript is a superset of JavaScript that adds type safety, access modifiers (private and public), and newer features from the next JavaScript specification. The safety of a more strictly typed language can help promote and then enforce architectural design patterns by using a transpiler to validate code before it even gets to the browser, which helps to reduce developer cycle time while also making the code self-documenting. This is particularly advantageous because, as applications grow and the codebase changes, TypeScript can help keep regressions in check while adding clarity and confidence to the codebase. IDE integration is also a huge win here.
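
A small sketch can show what type safety and access modifiers buy in practice. The CartItem and Cart names below are hypothetical; the commented-out lines at the end are examples of code the TypeScript compiler would reject before anything reaches the browser.

```typescript
// Hypothetical shopping cart, used only to illustrate types and access modifiers.
interface CartItem {
  sku: string;
  price: number;
  quantity: number;
}

class Cart {
  // `private` keeps callers from mutating the list directly.
  private items: CartItem[] = [];

  public add(item: CartItem): void {
    this.items.push(item);
  }

  public total(): number {
    return this.items.reduce(
      (sum, item) => sum + item.price * item.quantity,
      0
    );
  }
}

const cart = new Cart();
cart.add({ sku: "A-100", price: 5, quantity: 2 });
cart.add({ sku: "B-200", price: 3, quantity: 1 });
const total = cart.total(); // 13

// Each line below, if uncommented, fails at compile time:
// cart.add({ sku: "C-300", price: "4", quantity: 1 }); // price must be a number
// cart.items.length;                                   // items is private
```

The interface doubles as documentation: anyone calling add can see exactly what shape of data the cart expects.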

What About Front-End Frameworks?

As you may have noticed, so far we’ve intentionally avoided recommending a front-end framework or library like Angular or React, so let’s address that now.

Different applications call for different approaches to their development based on many factors like team experience, scope and size, organizational preference, and familiarity with concepts like reactive or functional programming. At Kenzan, we believe evaluating and choosing any ES2015/TypeScript-compatible library or framework, be it Angular 2 or React, should be based on characteristics specific to the given situation.

If we revisit our illustration from earlier, we can see a new stack take form that provides flexibility in choosing front-end frameworks.

A modern stack that offers flexibility in front-end frameworks

Below this upper “view” layer is a common ground that can be built upon by leveraging tools that embrace our key principles. At Kenzan, we feel that this stack converges on a space that captures the needs of both user and developer experience. This yields results that can benefit any team or application, large or small. It is important to remember that the tools presented here are intended for a specific type of project development (front-end UI application), and that this is not intended to be a one-size-fits-all endorsement. Discretion, judgement, and the needs of the team should be the prominent decision-making factors.

What’s Next

So far, we’ve looked back at how the JavaScript renaissance of the last few years has led to a rapidly maturing JavaScript ecosystem. We laid out the core philosophies that have helped us to meet the challenges and opportunities of developing software for the front end. And we outlined three main components of a modern front-end development stack. Throughout the rest of this series, we’ll dive deeper into each of these components. Our hope is that, by the end, you’ll be in a better position to evaluate the infrastructure you need for your front-end applications.

We also hope that you’ll recognize the value of the tools we present as being guided by a set of core principles, paradigms, and philosophies. Writing this series has certainly caused us to put our own experience and process under the microscope, and to solidify our rationale when it comes to front-end tooling. Hopefully, you’ll enjoy what we’ve discovered, and we welcome any thoughts, questions, or feedback you may have.

Next up in our blog series, we’ll take a closer look at the first core component of our front-end stack—package management with Yarn.


Grunt, jQuery, and webpack are trademarks of the JS Foundation.