As cloud computing continues to evolve, it’s clear that the OpenStack platform is providing a strong open source foundation for the cloud ecosystem. At the recent OpenStack Days conference in Melbourne, OpenStack Foundation Executive Director Jonathan Bryce noted that although the early stages of cloud technology emphasized public platforms such as AWS, Azure and Google, the latest stage is much more focused on private clouds.

According to the OpenStack Foundation User Survey, organizations everywhere have moved beyond just kicking the tires and evaluating OpenStack to deploying the platform. In fact, the survey found that OpenStack deployments have grown 44 percent year-over-year. More than 50 percent of Fortune 100 companies are running the platform, and OpenStack is a global phenomenon. According to survey findings, five million cores of compute power, distributed across 80 countries, are powered by OpenStack.

The typical size of an OpenStack cloud increased over the past year as well. Thirty-seven percent of clouds have 1,000 or more cores, compared to 29 percent a year ago, and 3 percent of clouds have more than 100,000 cores. You can see the survey findings, which are based on responses from 2,561 users, in this video overview.

The fact that OpenStack is built on open source is not lost on organizations deploying it. The OpenStack Foundation User Survey shows that avoiding vendor lock-in and accelerating the ability to innovate are the top reasons cited for OpenStack deployment. According to the survey, the highest number of OpenStack deployments fall within the Information Technology industry (56 percent), followed by telecommunications, academic/research, finance, retail/e-commerce, manufacturing/industrial, and government/defense.

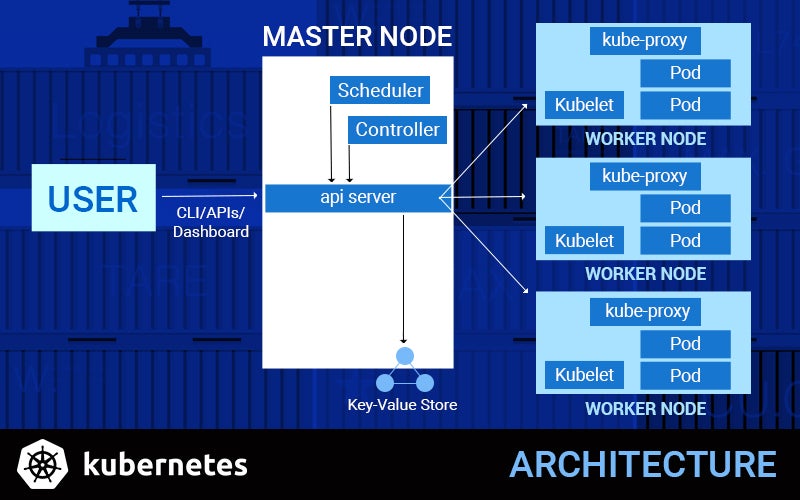

The survey also found that most OpenStack deployments consist of on-premises private clouds (70 percent), with public cloud deployments at 12 percent. Interestingly, containers remain the top emerging technology of interest to OpenStack users. Among organizations running OpenStack services inside containers, 65 percent use the Docker runtime, while nearly 50 percent of those using containers to orchestrate apps on OpenStack use Kubernetes.

Organizations are building infrastructure around OpenStack, too. Survey results show that the median user runs 61–80 percent of their overall cloud infrastructure on OpenStack, while the typical large user (deployment with 1,000+ cores) reports running 81–100 percent of their total infrastructure on OpenStack.

OpenStack skills are in high demand in the job market, and if you are seeking training and certification, opportunities abound. The OpenStack Foundation offers a Certified OpenStack Administrator (COA) exam. Developed in partnership with The Linux Foundation, the exam is performance-based and available anytime, anywhere. It allows professionals to demonstrate their OpenStack skills and helps employers gain confidence that new hires are ready to work.

The Linux Foundation also offers an OpenStack Administration Fundamentals course, which serves as preparation for the certification, along with comprehensive Linux training and other classes. You can explore options here. Red Hat and Mirantis offer popular OpenStack training options as well.

For a comprehensive look at trends in the open cloud, The Linux Foundation’s Guide to the Open Cloud report is a good place to start. The report covers not only OpenStack, but also well-known projects like Docker and Xen Project, and up-and-comers such as Apache Mesos, CoreOS and Kubernetes.
