Introduction to Rebuild

Building modern software in a predictable and repeatable way isn’t easy. The overwhelming number of software dependencies and the need to isolate conflicting components present numerous challenges when managing build environments.

While many tools aim to mitigate these challenges, they generally take one of two approaches: they either rely on package managers to preserve and replicate package sets, or they use virtual or physical machines with preconfigured environments.

Both of these approaches are flawed. Package managers fail to provide a single environment for components with conflicting build dependencies, and separate machines are heavy and fail to provide a seamless user experience. Rebuild solves these issues by using modern container technologies that offer both isolated environments and ease of use.

Rebuild is perfect for establishing build infrastructures for source code. It allows the user to create and share fast, isolated and immutable build environments. These environments may be used both locally and as a part of continuous integration systems.

The distinctive feature of Rebuild environments is a seamless user experience. When you work with Rebuild, you feel like you’re running “make” on a local machine.

Client Installation

The Rebuild client requires Docker engine 1.9.1 or newer and Ruby 2.0.0 or newer.
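Before installing, it can help to confirm that both prerequisites are met. The following is a minimal sketch rather than part of Rebuild: the helper names `version_ge` and `check_tool` are made up for illustration, and the script assumes a `sort` that supports version ordering (`-V`) and a running Docker daemon for the engine version query.

```shell
#!/bin/sh
# Illustrative prerequisite check; not shipped with Rebuild.

# version_ge A B: succeeds when version A is greater than or equal to B.
# Assumes `sort -V` (version sort) is available on this host.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# check_tool NAME FOUND REQUIRED: report whether FOUND satisfies REQUIRED.
check_tool() {
    name="$1"; found="$2"; required="$3"
    if [ -z "$found" ]; then
        echo "$name: not found"
        return 1
    fi
    if version_ge "$found" "$required"; then
        echo "$name $found: OK"
    else
        echo "$name $found: older than $required"
        return 1
    fi
}

# Query the installed versions; an empty string means the tool is missing
# (or, for Docker, that the daemon could not be reached).
docker_version=$(docker version --format '{{.Server.Version}}' 2>/dev/null || true)
ruby_version=$(ruby -e 'print RUBY_VERSION' 2>/dev/null || true)

status=0
check_tool docker "$docker_version" 1.9.1 || status=1
check_tool ruby "$ruby_version" 2.0.0 || status=1

if [ "$status" -eq 0 ]; then
    echo "Prerequisites satisfied"
else
    echo "Please install or upgrade the tools listed above"
fi
```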

Rebuild is available at rubygems.org:

gem install rbld

Testing installation

Run:

rbld help

Quick start

Search for existing environments

Let’s see how we can simplify the usage of embedded toolchains with Rebuild. By default, Rebuild is configured to work with DockerHub as an environment repository and we already have ready-made environments there.

The Rebuild workflow is as follows: a) search for the needed environment in the environment repository, b) deploy the environment locally (this needs to be done only once for a specific environment version), c) run your build command in the environment with rbld run. If needed, you can modify, commit and publish modified environments to the registry while keeping track of different environment versions.

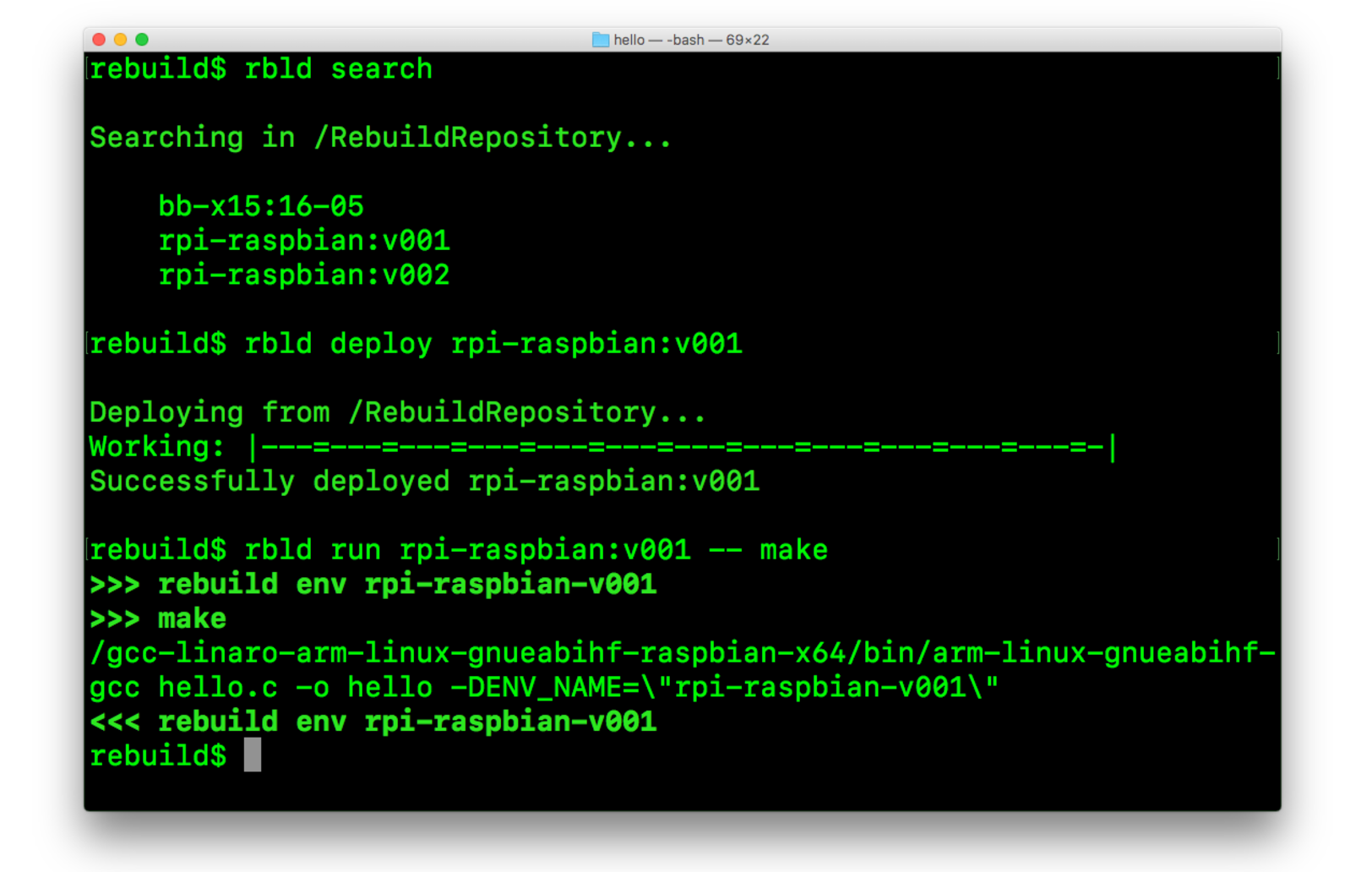

First, we search for environments by executing this command:

$ rbld search

Searching in /RebuildRepository...

bb-x15:16-05

rpi-raspbian:v001

Next, we deploy the environment to our local machine. To do this, enter the following command:

$ rbld deploy rpi-raspbian:v001

Deploying from /RebuildRepository...

Working: |---=---=---=---=---=---=---=---=---=---=---=---=-|

Successfully deployed rpi-raspbian:v001

And now it is time to use Rebuild to compile your code. Enter the directory with your code and run:

$ rbld run rpi-raspbian:v001 -- make

Of course, you can also use any other command that you use to build your code.

You can also use your environment in interactive mode. Just run the following:

$ rbld run rpi-raspbian:v001

Then, simply execute the necessary commands from within the environment. Rebuild takes care of file permissions and ownership for the build results, which are placed on your local file system.
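For scripted use, for example in a continuous integration job, the non-interactive form shown above can be wrapped in a small helper. This is only a sketch: `build_in_env` and the `RBLD` variable are hypothetical names introduced here, and the helper relies on nothing beyond the `rbld run ENV -- COMMAND` form used earlier in this section.

```shell
#!/bin/sh
# Hypothetical wrapper; RBLD and build_in_env are illustrative names,
# not part of Rebuild itself.

# Allow the rbld executable to be overridden, e.g. for dry runs.
RBLD="${RBLD:-rbld}"

# build_in_env ENV CMD [ARGS...]: run CMD inside the given Rebuild environment.
build_in_env() {
    if [ "$#" -lt 2 ]; then
        echo "usage: build_in_env ENV CMD [ARGS...]" >&2
        return 2
    fi
    env_name="$1"
    shift
    # Everything after "--" is passed to the environment as the build command.
    "$RBLD" run "$env_name" -- "$@"
}
```

With the environment from this section already deployed, a call such as `build_in_env rpi-raspbian:v001 make` would be equivalent to typing the `rbld run` command by hand. Setting `RBLD=echo` prints the command line instead of executing it, which is handy when checking a CI script.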

Explore Rebuild

To learn more about Rebuild, run rbld help or read our GitHub documentation.

Rebuild commands can be grouped by functionality:

- Creation and modification of the environments:
  - rbld create – Create a new environment using the base environment or from an archive with a file system
  - rbld modify – Modify the environment
  - rbld commit – Commit modifications to the environment
  - rbld status – Check the status of existing environments
  - rbld checkout – Revert changes made to the environment
- Managing local environments:
  - rbld list – List local environments
  - rbld rm – Delete local environment
  - rbld save – Save local environment to file
  - rbld load – Load environment from file
- Working with environment registries:
  - rbld search – Search registry for environments
  - rbld deploy – Deploy environment from registry
  - rbld publish – Publish local environment to registry
- Running an environment either with a set of commands or in interactive mode:
  - rbld run – Run a command in the environment, or start an interactive session when no command is given

Additional information