At the recent Embedded Linux Conference, IBM IoT/Mobile software engineer Kalonji Bankole and IBM Cloud & Watson developer Prashant Khanal detailed Big Blue’s spin on serverless, called IBM Bluemix OpenWhisk.

This week in OSS & Linux news, Jack Wallen shares a rundown of Google Fuchsia features and how they affect Android, Microsoft can no longer ignore Linux in the data center, & more! Read on to stay open-source-informed!

1) Jack Wallen shares pros and cons of Google Fuchsia

What Fuchsia Could Mean For Android – TechRepublic

2) “Microsoft is bridging the gap with Linux by baking it into its own products.”

How Microsoft is Becoming a Linux Vendor – CIO

3) Sprint’s CP30 “is designed to streamline mobile core architecture by collapsing multiple components into as few network nodes as possible.”

Sprint Debuts Open Source NFV/SDN Platform Developed with Intel Labs – Wireless Week

4) Move over, Siri! Open source Mycroft is here to assist us.

This Open-Source AI Voice Assistant Is Challenging Siri and Alexa for Market Superiority – Forbes

5) Heterogeneous memory management is being added to the Linux kernel. Here’s what that will mean for machine learning hardware:

Faster Machine Learning is Coming to the Linux Kernel – InfoWorld

In a cloud native world, where workloads and infrastructure are all geared towards applications that spend their entire life cycle in a cloud environment, one of the first shifts was towards lightning-fast release cycles. No longer would dev and ops negotiate 6-month chunks of time to ensure safe deployment in production of major application upgrades. No, in a cloud native world, you deploy incremental changes in production whenever needed. And because the dev and test environments have been automated to the extreme, the pipeline for application delivery in production is much shorter and can be triggered by the development team, without needing to wait for a team of ops specialists to clear away obstacles and build out infrastructure – that’s already done.

Read more at Open Source Entrepreneur Network

At the company’s annual developer conference today, CEO Sundar Pichai announced a new computer processor designed to perform the kind of machine learning that has taken the industry by storm in recent years.

The announcement reflects how rapidly artificial intelligence is transforming Google itself, and it is the surest sign yet that the company plans to lead the development of every relevant aspect of software and hardware.

Perhaps most importantly, for those working in machine learning at least, the new processor not only executes at blistering speed, it can also be trained incredibly efficiently. Called the Cloud Tensor Processing Unit, the chip is named after Google’s open-source TensorFlow machine-learning framework.

Read more at Technology Review

Just as organizations can build up technical debt, they can also build up something called “security debt” if they don’t plan accordingly, attendees learned at the WomenWhoCode Connect event at Twitter headquarters in San Francisco last month.

Security has got to be integral to every step of the software development process, stressed Mary Ann Davidson, Oracle’s Chief Security Officer, in a keynote talk about security for developers with Zassmin Montes de Oca of WomenWhoCode.

AirBnB’s Keziah Plattner echoed that sentiment in her breakout session. “Most developers don’t see security as their job,” she said, “but this has to change.” She shared four basic security principles for engineers. First, security debt is expensive. There’s a lot of talk about technical debt and she thinks security debt should be included in those conversations.

Read more at The New Stack

Functions as a Service or FaaS (by Alex Ellis) is a really neat way of implementing serverless functions with Docker. You can build out functions in any programming language and then deploy them to your existing Docker Swarm.

In this post we’ll look at an experimental CLI for making this process even easier.

The diagram below gives an overview of how the FaaS function package, the Docker image, and the faas-cli deploy command fit together.

Read more at Dev.to

Linux containers are gaining significant ground in the enterprise, which is not surprising, since they make so much sense in today’s business environment. With that said, container technology as we know it today is relatively new, and companies are still in the process of understanding the different ways in which containers can be leveraged.

In a nutshell, Linux containers enable companies to package up and isolate applications with all of the files necessary for each to run. This makes it easy to move containerized applications among environments while retaining their full functionality.

Read more at NetworkWorld

Virtualization has been a blessing for data centers – thanks to the humble hypervisor, we can create, move and rearrange computers on a whim, without thinking about the physical infrastructure.

The simplicity and efficiency of VMs has prompted network engineers to envision a programmable, flexible network based on open protocols and REST APIs that could be managed from a single interface, without worrying about each router and switch.

The idea came to be known as software defined networking (SDN), a term that originally emerged more than a decade ago. SDN also promised faster network deployments, lower costs and a high degree of automation. There was just one problem – the lack of software tools to make SDN a reality.

Read more at Datacenter Dynamics

With the Internet of Things, the realms of embedded Linux and enterprise computing are increasingly intertwined, and serverless computing is the latest enterprise development paradigm that device developers should tune into. This event-driven variation on Platform-as-a-Service (PaaS) can ease application development using ephemeral Docker containers, auto-scaling, and pay-per-execution pricing in the cloud. Serverless is seeing growing traction in enterprise applications that need fast deployment and don’t require extremely high performance or low latency, including many cloud-connected IoT applications.

At the recent Embedded Linux Conference, IBM IoT/Mobile software engineer Kalonji Bankole and IBM Cloud & Watson developer Prashant Khanal detailed Big Blue’s spin on serverless, called IBM Bluemix OpenWhisk. Their presentation — built around a demo of a DIY, voice-enabled Raspberry Pi home automation gizmo that activates a WeMo smart light switch — shows how OpenWhisk integrates with IBM Watson, and discusses Watson interactions with MQTT and IFTTT (see video below).

Like commercial serverless frameworks such as Amazon’s AWS Lambda, Microsoft’s Azure Functions, and Google Cloud Functions, the open source OpenWhisk provides a “function as a service” approach to app development. The most immediate benefit of serverless is that it frees developers from the hassles of managing a server.

The term serverless is something of a misnomer, as there are still servers processing the code. However, the developer doesn’t need to worry about them.

“Serverless saves you from spending all your time firefighting and fixing a lot of DevOps issues like dealing with crashes, scaling, updates, and networking issues,” said Bankole, who covered the serverless part of the presentation. “Instead you can just focus on your code.”

PaaS platforms such as Cloud Foundry and Heroku promise something similar, but with a key difference. “PaaS platforms can also handle all the dependencies, scaling, and hosting, but once the application is deployed, it’s always up and waiting for requests — and charging your account for that uptime,” said Bankole. “By contrast, serverless allows us to spin up portions of an application on demand in an ephemeral Docker container, and the contents are deleted when you’re done. This supports a microservices approach where you only get charged based on when the code is running.”

With serverless platforms, the developer writes a series of stateless decoupled functions and uploads them to a serverless engine. “The function can then be called by an HTTP request or a change in a service such as a database or social networking service,” said Bankole.

OpenWhisk, which is also available as Apache OpenWhisk, is currently the only open source serverless platform, said Bankole. “That means you can run it at home or in your own data center,” he added. The modular, event-driven framework makes it easier for teams to work on different pieces of code simultaneously and to dynamically respond to the rapid scaling that is typical of many mobile end-user scenarios.

OpenWhisk comprises triggers, actions, rules, and packages, which are combined as services. Developers associate actions to handle events via rules, and packages are used to bundle and distribute sets of actions. All these components can be published publicly or privately.

Triggers “define which events OpenWhisk should pay attention to,” said Bankole. “They can be a web hook, changes to a database, incoming tweets, or a change of hash tags to social media account. Triggers can be data coming in from IoT devices or messages coming in to specific MQTT channels.”

The logic that responds to the triggers is called an action. These snippets of code, which can also be considered as “functions,” are “uploaded to the OpenWhisk action pool,” explained Bankole. OpenWhisk currently supports Node.js, Python, and Apple’s Swift, which Bankole singled out for praise. Other platforms will be added in the future.
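As a minimal sketch of what such an action can look like in Python: OpenWhisk Python actions expose a `main` function that receives a dictionary of parameters and returns a dictionary. The `device` and `command` parameter names below are illustrative assumptions and are not taken from the presenters' demo.

```python
def main(args):
    """Entry point that OpenWhisk calls with the invocation parameters."""
    # 'device' and 'command' are hypothetical parameter names for this sketch.
    device = args.get("device", "light")
    command = args.get("command", "on")
    # An action returns a JSON-serializable dictionary as its result.
    return {"message": f"Turning {device} {command}"}
```

Because the function keeps no state between calls, it can be tested locally by calling `main` with a plain dictionary before uploading it.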

Actions are executed in Docker containers, and the results are returned to the user. They can also be forwarded to other actions in a process called chaining. “This lets you reuse pieces of code and combine them in different sequences,” said Bankole.
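The idea behind chaining can be sketched locally in plain Python, without OpenWhisk itself: each action takes a dictionary and returns a dictionary, and the result of one becomes the input of the next. The two actions and the 30-degree threshold here are hypothetical examples.

```python
from functools import reduce

def parse_reading(args):
    # Hypothetical first action: convert a raw sensor string to a number.
    return {"temperature": float(args["raw"])}

def check_threshold(args):
    # Hypothetical second action: flag readings above an assumed limit.
    temperature = args["temperature"]
    return {"temperature": temperature, "alert": temperature > 30.0}

def run_sequence(actions, params):
    """Feed each action's result dictionary to the next action in order."""
    return reduce(lambda result, action: action(result), actions, params)

result = run_sequence([parse_reading, check_threshold], {"raw": "31.5"})
print(result)  # {'temperature': 31.5, 'alert': True}
```

Reordering or swapping the functions in the list gives a different sequence from the same pieces, which is the reuse Bankole describes.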

Rules define a relationship between triggers and actions. A single trigger can set off multiple actions, or a single action can be triggered by multiple rules. This flexibility is well suited to IoT applications, such as home automation and security. For example, a rule can be set up so that when a trigger goes off based on a sensor, the action sends out several alert texts. The trigger could also be set up to kick off multiple actions in parallel, such as locking doors, flashing lights, and activating a siren.
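The relationship between triggers, rules, and actions can be modeled with a toy in-memory engine. This is only a sketch of the concept, not OpenWhisk's implementation: the class, the trigger name, and the three actions are made up for illustration, and the actions run one after another here rather than in parallel.

```python
from collections import defaultdict

class RuleEngine:
    """Toy model: rules map a trigger name to one or more actions."""

    def __init__(self):
        self._rules = defaultdict(list)

    def add_rule(self, trigger, action):
        self._rules[trigger].append(action)

    def fire(self, trigger, event):
        # Run every action bound to this trigger and collect the results.
        return [action(event) for action in self._rules[trigger]]

def lock_doors(event):
    return {"action": "lock_doors", "zone": event["zone"]}

def flash_lights(event):
    return {"action": "flash_lights", "zone": event["zone"]}

def sound_siren(event):
    return {"action": "sound_siren", "zone": event["zone"]}

engine = RuleEngine()
for action in (lock_doors, flash_lights, sound_siren):
    engine.add_rule("motion_detected", action)

results = engine.fire("motion_detected", {"zone": "garage"})
```

Firing a trigger that has no rules simply returns an empty list, so unbound events are ignored.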

Each action uploaded to the system is exposed as its own REST endpoint. It can then be invoked with an HTTP request from any device with Internet connectivity.
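As a rough sketch of what such an HTTP request can look like, the following builds (but does not send) a blocking invocation against the OpenWhisk REST API using only the Python standard library. The host name, namespace, action name, and key are placeholders, and the URL layout should be checked against the API documentation for your deployment.

```python
import base64
import json
import urllib.request

def build_invoke_request(api_host, namespace, action, auth_key, params):
    """Build (but do not send) a blocking invocation request for an action."""
    url = (f"https://{api_host}/api/v1/namespaces/{namespace}"
           f"/actions/{action}?blocking=true&result=true")
    # The auth key has the form "user:password" and is sent as HTTP Basic auth.
    token = base64.b64encode(auth_key.encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=json.dumps(params).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Basic {token}"},
        method="POST",
    )

# Placeholder host, namespace, action name, and key; substitute your own.
request = build_invoke_request("openwhisk.example.com", "guest", "lights",
                               "user:password", {"command": "on"})
```

Passing the request to `urllib.request.urlopen` would send it; with `blocking=true&result=true` the response body is expected to be the action's result.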

As an alternative to HTTP/REST requests, you can use “feeds,” which monitor services such as a database or message bus like MQTT. “If a message comes in to a certain topic on a MQTT broker or a new record is added to a database, the action can be triggered in response,” said Bankole.

The OpenWhisk IoT demo integrated the IBM Watson cognitive SaaS platform. For the demo, Bankole and Khanal specifically tapped Watson’s speech-to-text and natural language classifier services. Joined together, these provide a voice agent technology much like that of Alexa or Google Assistant.

In the IoT demo, Watson’s natural language classifier interpreted the speech-to-text output to find the intent. “Watson can tell us what device the request is trying to control, and what kind of control command is being sent,” said Khanal. The speech-to-text service, which supports eight languages, uses either HTTP or WebSocket interfaces to transcribe speech.

Like other Watson cognitive services such as machine learning and visual recognition, these voice services are configurable to run on a customer’s training model. For example, once you train the natural language classifier, it can identify the classes from text, from which you can then determine intent. You can automate the training process with REST and CLI.
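To illustrate the step from identified classes to intent, here is a small sketch that picks the highest-confidence class and splits it into a device and a command. The response shape (a `classes` list with `class_name` and `confidence` fields), the `<device>_<command>` label convention, and the 0.6 cutoff are all assumptions made for this example, not details from the demo.

```python
def extract_intent(classification, min_confidence=0.6):
    """Return (device, command) from the highest-confidence class, or None."""
    classes = classification.get("classes", [])
    if not classes:
        return None
    best = max(classes, key=lambda c: c["confidence"])
    if best["confidence"] < min_confidence:
        return None
    # Assumed label convention: "<device>_<command>", e.g. "light_on".
    device, _, command = best["class_name"].partition("_")
    return device, command

# Hypothetical classifier output for a transcribed voice request.
sample = {
    "text": "please turn on the light",
    "classes": [
        {"class_name": "light_on", "confidence": 0.93},
        {"class_name": "fan_off", "confidence": 0.04},
    ],
}
print(extract_intent(sample))  # ('light', 'on')
```

Returning `None` for low-confidence results lets the caller ask the user to repeat the request instead of guessing.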

To communicate with various devices, Bankole and Khanal used the Watson IoT Platform, which is an MQTT broker for Watson. Watson can integrate with other MQTT brokers, as well.

“Watson IoT provides REST and real-time APIs, mostly to communicate with devices,” said Khanal. “But Watson IoT can also be extended to read and store the state and device events so you can add analytics.”

Khanal also explained how you could connect the serverless/Watson based application to other home automation and smart appliance devices using IFTTT (If This Then That). IFTTT “makes it easier to connect to the many IFTTT-registered services and devices already out there,” said Khanal.

IFTTT combines triggers and actions to control devices or web services. “If you receive a tweet that says shut down the fan, you can use that trigger to connect to vendor devices registered in IFTTT cloud,” said Khanal. “It’s easy to use IFTTT to extend your architecture to connect to devices like smart dishwashers and refrigerators.”

You can watch the complete video below.

So, you want to stuff your Linux laptop or PC full of virtual machines and perform all manner of mad experiments. And so you shall, and a fine time you will have. Come with me and learn how to do this with KVM.

KVM, the kernel-based virtual machine, was originally developed by Qumranet and was merged into the mainline Linux kernel in early 2007. Red Hat bought Qumranet in 2008. KVM is often described as a type 2 hypervisor, which means it runs on a host operating system, as VirtualBox does, although the classification is debatable because KVM is part of the Linux kernel itself. In contrast, type 1 hypervisors such as Xen, Hyper-V, and VMware ESX run on the bare metal and don’t need host operating systems.

“Hypervisor” is an old term from the early days of computing. It has taken various meanings over the decades; I’m satisfied with thinking of it as a virtual machine manager that has control over hardware, hardware emulation, and the virtual machines.

KVM runs unmodified guest operating systems, including Linux, Unix, Mac OS X, and Windows. You need a CPU with virtualization support, and while it is unlikely that your CPU does not have this, it takes just a second to check.

    $ egrep -o '(vmx|svm)' /proc/cpuinfo
    vmx
    vmx
    vmx
    vmx
    vmx
    vmx
    vmx
    vmx

vmx means Intel, and svm is AMD. That is a quad-core Intel CPU with eight logical cores, and it is ready to do the virtualization rock. (Intel Core i7-4770K 3.50GHz, a most satisfying little powerhouse that handles everything I throw at it, including running great thundering herds of VMs.)
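If you would rather script this check, the same test can be sketched in Python. The function names are my own, and the regular expression matches whole flag names only, so it is slightly stricter than the egrep above.

```python
import re

def virtualization_flags(cpuinfo_text):
    """Return the hardware virtualization flags found in cpuinfo text."""
    # Match whole flag names only; slightly stricter than the egrep pattern.
    return set(re.findall(r"\b(?:vmx|svm)\b", cpuinfo_text))

def describe(flags):
    """Translate the flags into the vendor's name for the feature."""
    if "vmx" in flags:
        return "Intel VT-x"
    if "svm" in flags:
        return "AMD-V"
    return "no hardware virtualization support found"

def check_host(path="/proc/cpuinfo"):
    """Read the host's cpuinfo file and describe its virtualization support."""
    with open(path) as cpuinfo:
        return describe(virtualization_flags(cpuinfo.read()))
```

On a Linux host, `print(check_host())` reports which of the two extensions the CPU advertises, if any.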

Download a few Linux .isos for creating virtual machines.

Create two new directories, one to hold your .isos, and one for your storage pools. You want a lot of disk space, so put these in your home directory to make testing easier, or any directory with a few hundred gigabytes of free space. In the following examples, my directories are ~/kvm-isos and ~/kvm-pool.

Remember back in the olden days, when how-tos like this were bogged down with multiple installation instructions? We had to tell how to install from source code, from dependency-resolving package managers like apt and yum, and non-dependency-resolving package managers like RPM and dpkg. If we wanted to be thorough we included pkgtool, pacman, and various graphical installers.

Happy I am to not have to do that anymore. KVM on Ubuntu and CentOS 7 consists of qemu-kvm, libvirt-bin, virt-manager, and bridge-utils. openSUSE includes patterns-openSUSE_KVM_server, which installs everything, and on Fedora install virt-manager, libvirt, libvirt-python, and python-virtinst. You probably want to review the instructions for your particular flavor of Linux in case there are quirks or special steps to follow.

After installation, add yourself to the libvirt or libvirtd group, whichever one you have, and then log out and log back in. This allows you to run commands without root privileges. Then run this virsh command to check that the installation is successful:

    $ virsh -c qemu:///system list
     Id   Name   State
    --------------------------------

When you see this it’s ready to go to work.
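Once you have a few VMs, that table is easy to consume from a script. The following is a sketch, assuming the usual three-column layout of `virsh list` (a header line, a dashed rule, then one row per VM); the function names are my own.

```python
import subprocess

def parse_virsh_list(output):
    """Parse the table printed by `virsh list` into a list of dicts."""
    domains = []
    for line in output.splitlines()[2:]:  # skip the header and dashed rule
        if not line.strip():
            continue
        # The state can contain spaces ("shut off"), so split at most twice.
        dom_id, name, state = line.split(None, 2)
        domains.append({"id": dom_id, "name": name, "state": state.strip()})
    return domains

def list_domains(uri="qemu:///system"):
    """Run `virsh list --all` against the given URI and parse the result."""
    completed = subprocess.run(
        ["virsh", "-c", uri, "list", "--all"],
        capture_output=True, text=True, check=True,
    )
    return parse_virsh_list(completed.stdout)
```

With no VMs defined, `list_domains()` returns an empty list, which matches the empty table shown above.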

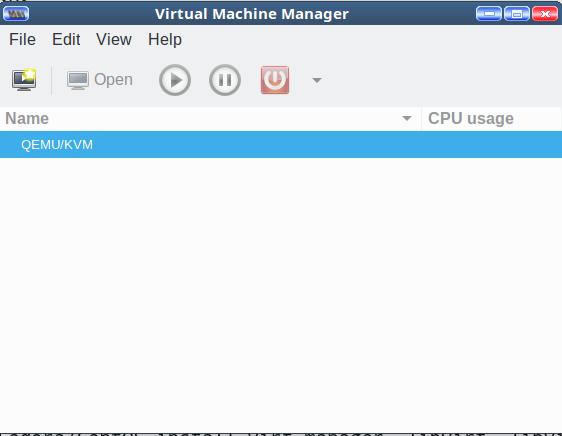

Look in your applications menu for Virtual Machine Manager and open it. In Ubuntu and openSUSE it’s under System. If you can’t find it then run the virt-manager command with no options. You will see something like Figure 1.

It’s not much to look at yet. Cruise through the menus, and double-click QEMU/KVM to start it, and to see the Connection Details window. Again, not much to see, just idle status monitors and various configuration tabs.

Now create a new virtual machine with one of those .isos you downloaded. I’ll use Fedora 25.

Go to File > New Virtual Machine. You get a nice dialog that offers several choices for your source medium. Select Local Install Media (ISO image or CDROM), then click Forward.

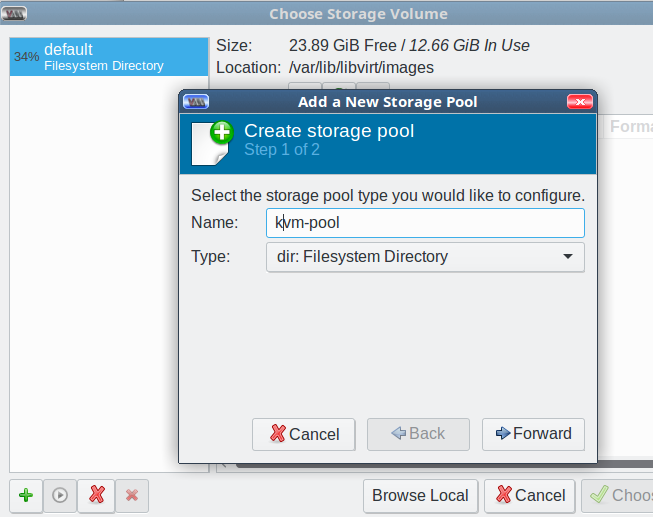

On the next screen check Use ISO Image:, and click the Browse button. This opens the Choose Storage Volume screen. The default storage pool of around 24GB in /var/lib/libvirt/images is too small, so you want to use your nice new kvm-pool directory. The interface is a little confusing; first, you create your nice large kvm-pool in the left pane, and then create individual storage volumes for your VMs in the right pane each time you create a new VM.

Start by clicking the green add button at the bottom left to add your new large storage pool. This opens the Add a New Storage Pool screen. Select the dir: Filesystem Directory type, type a name for your storage pool, and click Forward (Figure 2).

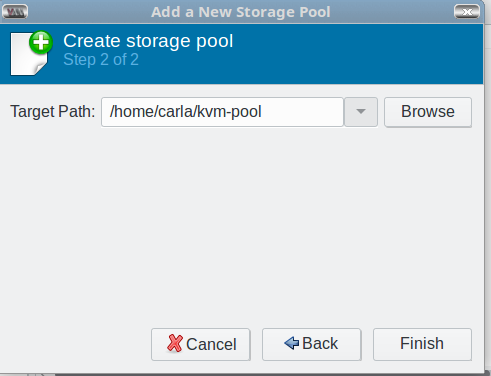

In the next screen, click the Browse button to browse to the pool directory you created back in the Prerequisites section; this becomes the Target Path, and then click Finish (Figure 3).

Now you’re back at the Choose Storage Volume screen. You should see the default and your new storage pool in the left pane.
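The same directory-backed pool can also be set up from the command line with virsh. The sketch below only builds the three commands (define, start, autostart) so you can inspect them before running anything; the pool name `kvm-pool` and the helper function are my own choices, and the target directory must already exist.

```python
import os

def pool_commands(name, target_dir, uri="qemu:///system"):
    """Return the virsh commands that define and start a directory pool."""
    target = os.path.abspath(os.path.expanduser(target_dir))
    base = ["virsh", "-c", uri]
    return [
        base + ["pool-define-as", name, "dir", "--target", target],
        base + ["pool-start", name],
        base + ["pool-autostart", name],
    ]

# Print the commands for review; nothing is executed here.
for command in pool_commands("kvm-pool", "~/kvm-pool"):
    print(" ".join(command))
```

To apply them, pass each list to `subprocess.run(command, check=True)` in order.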

Click the Browse Local button at the bottom of the right pane to find the .iso you want to use. Select one and click Forward. This automatically adds your .iso directory to the left pane.

In the next screen, set your CPU and memory allocations, then click Forward. For Fedora I want two CPUs and 8096MB RAM.

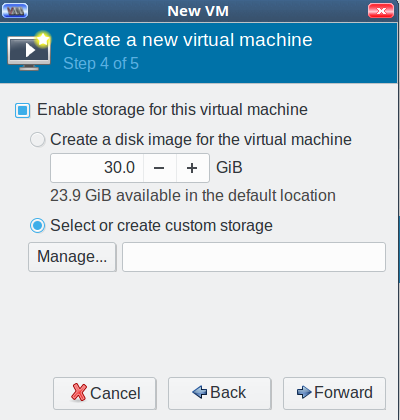

Click Forward, and enable storage for your new VM. Check Select or create custom storage, and click the Manage button (Figure 4).

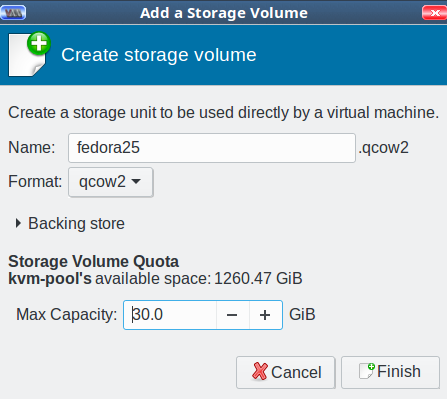

Now you’re back at the Choose Storage Volume screen. Click the green create new volume button next to Volumes in the right pane. Give your new storage volume a name and size, then click Finish (Figure 5). (We’ll get into the various format types later; for now go with qcow2.)

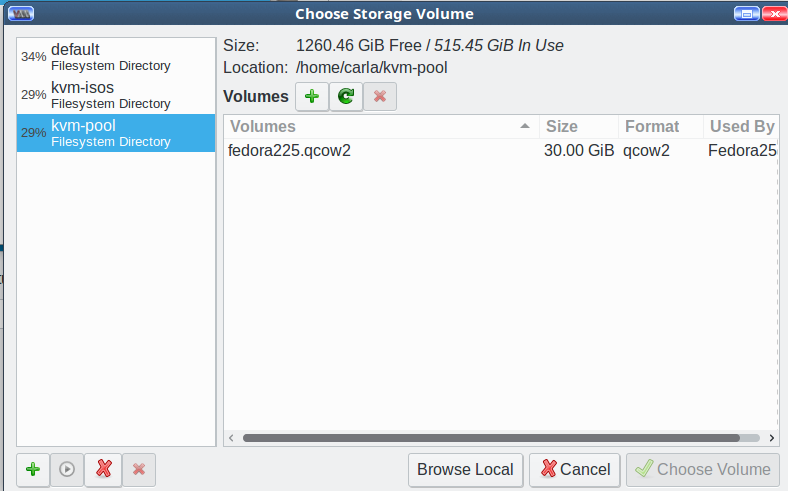

This brings you back to Choose Storage Volume. It should look like Figure 6.

Select your new storage pool, highlight your new storage volume, and click Choose Volume. Now you’re back at Step 4 of 5, Create a new virtual machine. Click Forward. In Step 5, type the name of your new VM, then click Finish and watch your new virtual machine start. As this is an installation .iso, the final step is to go through the usual installation steps to finish creating your VM.
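For comparison, the wizard's choices map roughly onto a single virt-install command, if you have that tool installed. This sketch just assembles the argument list; the VM name, file paths, and 20GB disk size are placeholders I picked, while the two CPUs and 8096MB of RAM follow the Fedora example above.

```python
def virt_install_command(name, iso_path, disk_path,
                         memory_mb=8096, vcpus=2, disk_gb=20):
    """Assemble a virt-install argument list mirroring the wizard steps."""
    return [
        "virt-install",
        "--connect", "qemu:///system",
        "--name", name,
        "--memory", str(memory_mb),
        "--vcpus", str(vcpus),
        "--cdrom", iso_path,
        # Creates a new qcow2 volume of disk_gb gigabytes at disk_path.
        "--disk", f"path={disk_path},size={disk_gb},format=qcow2",
    ]

# Placeholder name and paths; adjust them to your own directories.
command = virt_install_command(
    "fedora25",
    "/home/me/kvm-isos/fedora25.iso",
    "/home/me/kvm-pool/fedora25.qcow2",
)
print(" ".join(command))
```

Running the printed command starts the installer much as clicking Finish does in Virtual Machine Manager.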

When you create more VMs, the process will be more streamlined because you will use the .iso and storage pools you created on the first run. Don’t worry about getting things exactly right because you can delete everything and start over as many times as you want.

Come back next week to learn about networking and configurations.
