By Arpit Joshipura, General Manager, Networking and Orchestration, The Linux Foundation

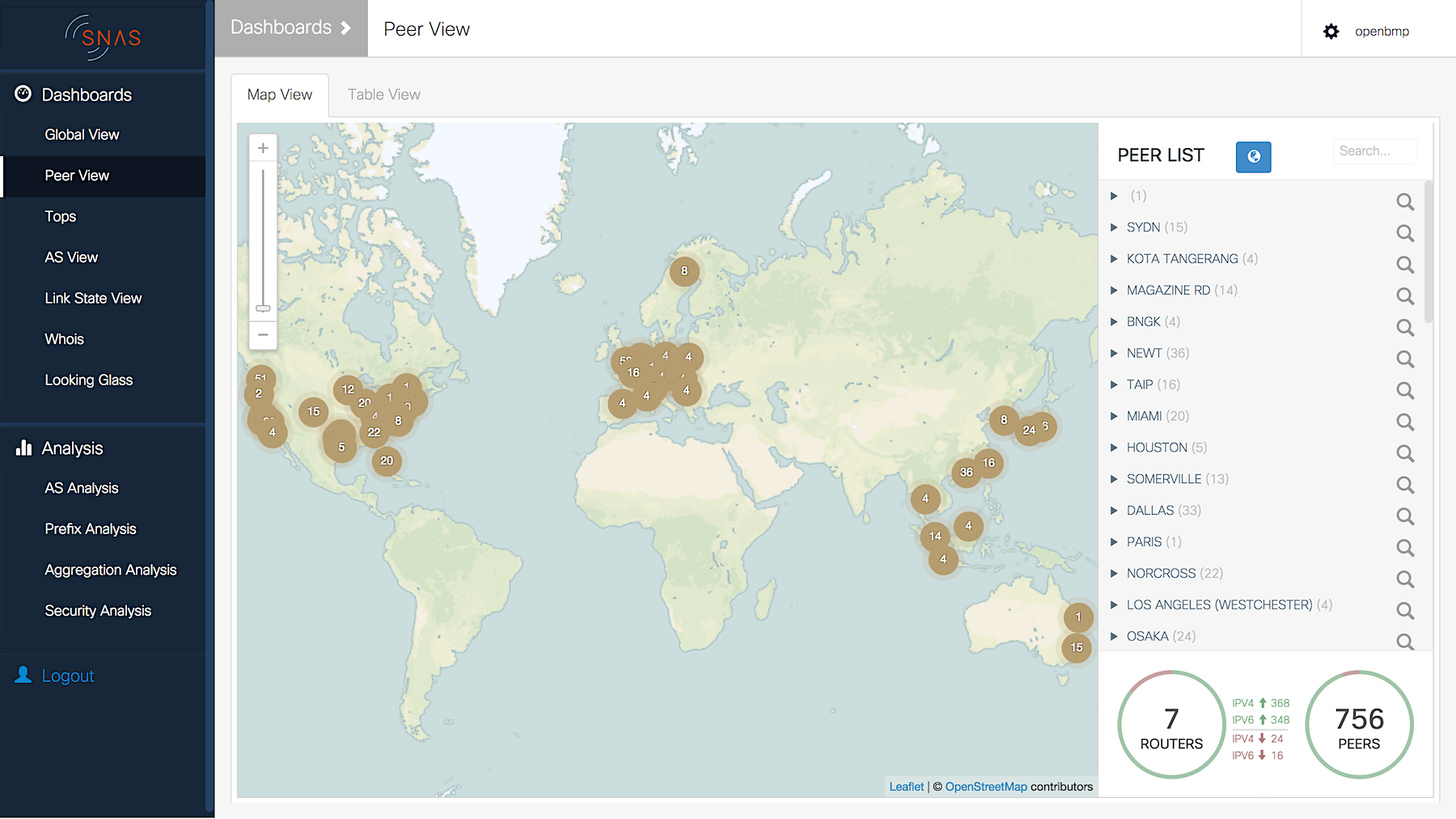

We are excited to announce that SNAS.io, a project that provides network routing topologies for software-defined applications, is joining The Linux Foundation’s Networking and Orchestration umbrella. SNAS.io tackles the challenging problem of tracking and analyzing network routing topology data in real time for those using BGP as a control protocol, including internet service providers, large enterprises, and enterprise data center networks using EVPN.

The topology data collected comes from both layer 3 and layer 2 of the network and includes IP information, quality of service requests, and physical and device specifics. Collecting and analyzing this data in real time allows DevOps, NetOps, and network application developers who design and run networks to work efficiently with large volumes of topology data and to better automate the management of their infrastructure.

Contributors to the project include Cisco, Internet Initiative Japan (IIJ), Liberty Global, pmacct, RouteViews, and the University of California, San Diego.

Originally called OpenBMP, the project focused on providing a BGP Monitoring Protocol (BMP) collector. Since it launched two years ago, it has expanded to include other software components that make real-time streaming of millions of routing objects viable. The name change reflects the project’s growing scope.

The SNAS.io collector not only streams topology data but also parses it, separating the networking protocol headers and then organizing the data based on these headers. Parsed data is then sent to Kafka, a high-performance message bus, in a well-documented and customizable topic structure.
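
To make the parse-then-route idea concrete, here is a minimal Python sketch. It is not the SNAS.io collector's code. It assumes the headers in question are BMP common headers as laid out in RFC 7854 (a 1-byte version, a 4-byte message length, and a 1-byte message type), and the `example.bmp` topic prefix and the `topic_for` helper are hypothetical names chosen for illustration, not the project's documented topic structure.

```python
import struct
from typing import NamedTuple, Tuple

# BMP common header (RFC 7854): version (1 byte), total message
# length including this header (4 bytes), message type (1 byte).
BMP_HEADER = struct.Struct("!BIB")

# Message type codes defined in RFC 7854.
BMP_MESSAGE_TYPES = {
    0: "route_monitoring",
    1: "statistics_report",
    2: "peer_down",
    3: "peer_up",
    4: "initiation",
    5: "termination",
    6: "route_mirroring",
}


class BmpMessage(NamedTuple):
    version: int
    msg_type: str
    body: bytes


def split_bmp_message(data: bytes) -> Tuple[BmpMessage, bytes]:
    """Split one BMP message off the front of `data`.

    Returns the parsed message and whatever bytes follow it.
    """
    if len(data) < BMP_HEADER.size:
        raise ValueError("need at least 6 bytes for the BMP common header")
    version, length, type_code = BMP_HEADER.unpack_from(data)
    if length < BMP_HEADER.size or length > len(data):
        raise ValueError("incomplete or malformed BMP message")
    msg_type = BMP_MESSAGE_TYPES.get(type_code, f"unknown_{type_code}")
    body = data[BMP_HEADER.size:length]
    return BmpMessage(version, msg_type, body), data[length:]


def topic_for(message: BmpMessage, prefix: str = "example.bmp") -> str:
    """Map a message to a topic name.

    Hypothetical naming scheme for illustration only; it is not the
    topic structure that SNAS.io documents.
    """
    return f"{prefix}.{message.msg_type}"
```

Under these assumptions, a collector loop would call `split_bmp_message` repeatedly on the bytes received from a router and publish each body to the topic returned by `topic_for`. Parsing of the per-peer header and the BGP payload inside the body is left out of the sketch.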

SNAS.io comes with an application that stores the data in a MySQL database. Other users of SNAS.io can access the data either at the message bus layer using Kafka APIs or through the project’s RESTful database API service.

The SNAS.io Project is complementary to several Linux Foundation projects, including PNDA and FD.io, and is a part of the next phase of networking growth: the automation of networking infrastructure made possible through open source collaboration.

Industry Support for the SNAS.io Project and Its Use Cases

Cisco

“SNAS.io addresses the network operational problem of real-time analytics of the routing topology and load on the network. Any NetDev or Operator working to understand the dynamics of the topology in any IP network can benefit from SNAS.io’s capability to access real-time routing topology and streaming analytics,” said David Ward, SVP, CTO of Engineering and Chief Architect, Cisco. “There is a lot of potential linking SNAS.io and other Linux Foundation projects such as PNDA, FD.io, Cloud Foundry, OPNFV, ODL and ONAP that we are integrating to evolve open networking. We look forward to working with The Linux Foundation and the NetDev community to deploy and extend SNAS.io.”

Internet Initiative Japan (IIJ)

“If successful, the SNAS.io Project will provide a great tool for both operators and researchers,” said Randy Bush, Research Fellow, Internet Initiative Japan. “It is starting with usable visualization tools, which should accelerate adoption and make more of the Internet’s hidden data accessible.”

Liberty Global

“The SNAS.io Project’s technology provides our huge organization with an accurate network topology,” said Nikos Skalis, Network Automation Engineer, Liberty Global. “Together with its BGP forensics and analytics, it is well suited to our toolchain.”

pmacct

“The BGP protocol is one of the very few protocols running on the Internet that has a standardized, clean and separate monitoring plane, BMP,” said Paolo Lucente, Founder and Author of the pmacct project. “The SNAS.io Project is key in providing the community a much needed full-stack solution for collecting, storing, distributing and visualizing BMP data, and more.”

RouteViews

“The SNAS.io Project greatly enhances the set of tools that are available for monitoring Internet routing,” said John Kemp, Network Engineer, RouteViews. “SNAS.io supports the use of the IETF BGP Monitoring Protocol on Internet routers. Using these tools, Internet Service Providers and university researchers can monitor routing updates in near real-time. This is a monitoring capability that is long overdue, and should see wide adoption throughout these communities.”

University of California, San Diego

“The Border Gateway Protocol (BGP) is the backbone of the Internet. A protocol for efficient and flexible monitoring of BGP sessions has been long awaited and finally standardized by the IETF last year as the BGP Monitoring Protocol (BMP). The SNAS.io Project makes it possible to leverage this new capability, already implemented in routers from many vendors, by providing efficient and easy ways to collect BGP messages, monitor topology changes, track convergence times, etc.,” said Alberto Dainotti, Research Scientist, Center for Applied Internet Data Analysis, University of California, San Diego. “SNAS.io will not only have a large impact in network management and engineering, but by multiplying opportunities to observe BGP phenomena and collecting empirical data, it has already demonstrated its utility to science and education.”

You can learn more about the project and how to get involved at https://www.SNAS.io.