At last year’s Embedded Linux Conference Europe, Sony’s Tim Bird warned that the stalled progress in reducing Linux kernel size meant that Linux was ceding the huge market in IoT edge nodes to real-time operating systems (RTOSes). At this February’s ELC North America event, another figure who has long been at the center of the ELC scene — Free Electrons’ Michael Opdenacker — summed up the latest kernel shrinkage schemes as well as future possibilities. Due perhaps to Tim Bird’s exhortations, ELC 2017 had several presentations on reducing footprint, including Rob Landley’s Tutorial: Building the Simplest Possible Linux System.

Like Bird, Opdenacker bemoaned the lack of progress, but said there are plenty of ways for embedded Linux developers to reduce footprint. These range from using newer technologies such as musl, toybox, and Clang to revisiting other approaches that developers sometimes overlook.

In his talk, Opdenacker explained that the traditional motivators for shrinking the kernel were to speed up boot time or to copy a Linux image from low-capacity storage. In today’s IoT world, these have been joined by the need to fit very small endpoints with limited resources. These aren’t the only reasons, however. “Some want to run Linux as a bootloader so they don’t have to re-create bootloader drivers, and some want to run the whole system in internal RAM or cache,” said Opdenacker. “A small kernel can also reduce the attack surface to improve security.”

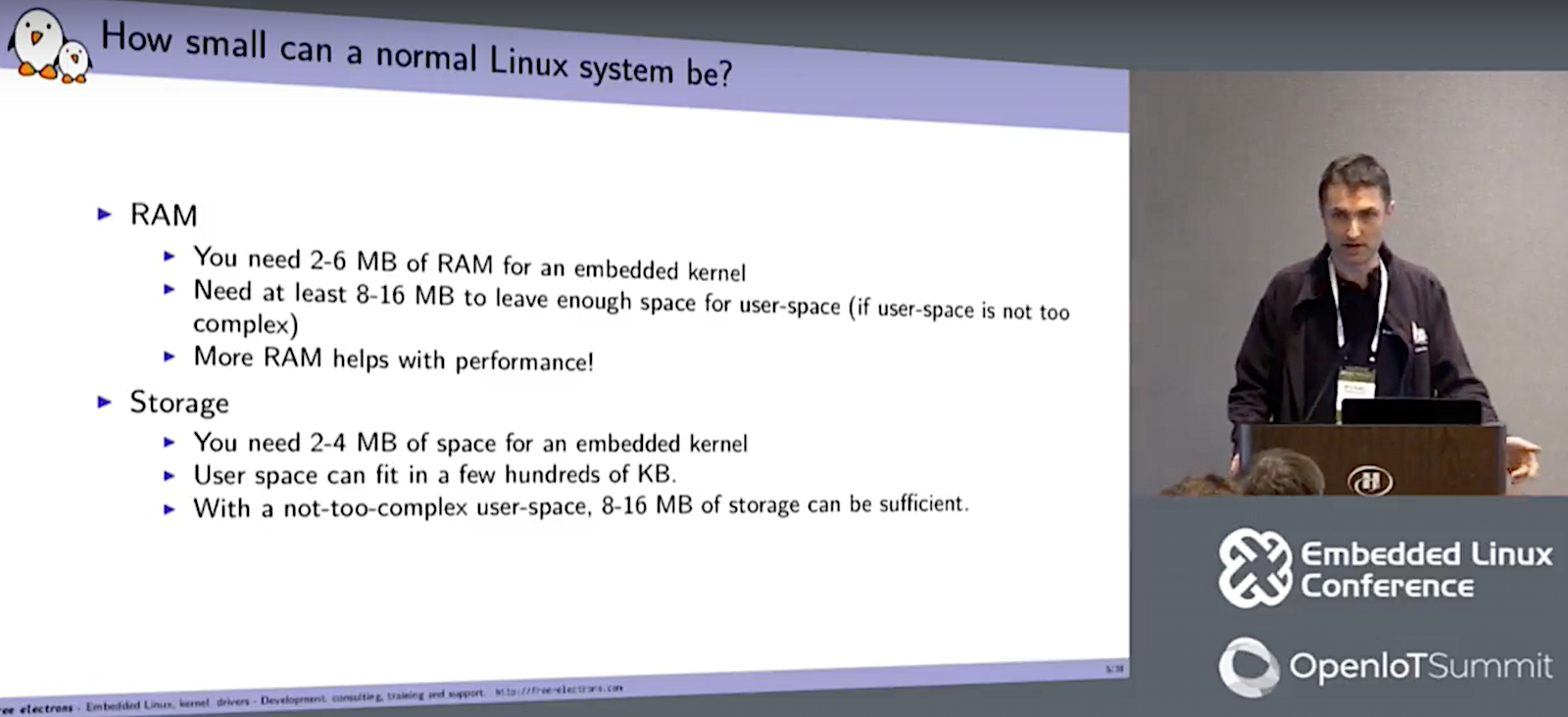

Stalled efforts such as the Linux Kernel Tinification project have largely done their job, said Opdenacker. Although size has edged up slightly over the years, you can still call upon a variety of techniques to run your kernel in as little as 4MB of RAM.

“You’d think that since the Tinification project is not that active that the kernel would grow exponentially, but it’s still under control, so maybe we could reverse the trend,” said Opdenacker. “With more aggressive work, 2-3MB may be achievable. Still, there has not been much new in this area since ELC Europe 2015.”

Although Josh Triplett’s Tinification patches, which remove functionality via configuration settings, have themselves been removed from the linux-next tree, they are still available for experimentation. The main reason: Kernel developers are hesitant to rip out too much plumbing due to the potential for bugs.

“Removing functionality may no longer be the way to go, as the complexity of kernel configuration parameters is already difficult to manage,” said Opdenacker. “Kernel developers don’t like to remove features. In the future, we may see new approaches that automatically detect and remove unused features like system calls, command-line options, /proc contents, and kernel command-line parameters. You would trace your system and see what you use at runtime and then remove the code you don’t need.”
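The trace-and-remove idea can be illustrated with a rough sketch. It assumes the trace is a text log in the familiar strace style, where each line begins with `name(args) = result`. The function names and the list of "available" system calls are hypothetical, chosen only for illustration. A single trace shows only what that run exercised, so the result is a list of candidates to investigate, not a list of code that is safe to remove.

```python
import re

# Matches the syscall name at the start of an strace-style line,
# optionally preceded by a "[pid 1234]" prefix.
_SYSCALL = re.compile(r"^(?:\[pid\s+\d+\]\s+)?([a-z_][a-z0-9_]*)\(")


def used_syscalls(trace_text):
    """Return the set of syscall names that appear in the trace."""
    used = set()
    for line in trace_text.splitlines():
        match = _SYSCALL.match(line.strip())
        if match:
            used.add(match.group(1))
    return used


def removal_candidates(available, trace_text):
    """Syscalls in `available` that the trace never exercised, sorted by name."""
    return sorted(set(available) - used_syscalls(trace_text))


# Hypothetical trace and syscall list, for illustration only.
SAMPLE_TRACE = """\
openat(AT_FDCWD, "/etc/passwd", O_RDONLY) = 3
read(3, "root", 4) = 4
close(3) = 0
+++ exited with 0 +++
"""

if __name__ == "__main__":
    available = ["openat", "read", "close", "ptrace", "mount"]
    print(removal_candidates(available, SAMPLE_TRACE))
```

In practice the trace would need to cover every workload the device runs, including error paths, before any candidate could be dropped.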

Shrinking the kernel

Meanwhile, there are still plenty of ways to reduce footprint. One of the easiest is to shrink kernel size during compile. First, use a recent compiler, said Opdenacker. For example, gcc 6.2 gives you almost a half percentage point reduction over gcc 4.7 with ARM versatile Linux 4.10. That may not be much, but, “every byte can count,” he added.

Then there are compiler optimizations. With gcc, for example, you can use the -Os option to reduce size. Since gcc 4.7, users have also been able to enable optional Link Time Optimization (LTO), which can eliminate unused code when applied at the end of the build, “when linking all the object files together to optimize things like inlining across various objects,” said Opdenacker. In one test, building with gcc 6.2 and LTO reduced the size of the stripped binary by 2.6 percent (x86_64) to 2.8 percent (32-bit ARM).
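A comparison of this kind is easy to reproduce on a small program. The sketch below is not Opdenacker’s test setup: the flag sets, the tiny C source, and the helper names are assumptions made for illustration. It builds the same file with `-O2`, `-Os`, and `-Os -flto` (all stripped with `-s`) and reports the size change relative to the first build.

```python
import os
import shutil
import subprocess
import tempfile

# Flag sets to compare. -O2, -Os, -flto and -s are standard gcc options;
# which combinations to test is an illustrative choice.
FLAG_SETS = {
    "baseline": ["-O2", "-s"],
    "size": ["-Os", "-s"],
    "size_lto": ["-Os", "-flto", "-s"],
}

# Hypothetical stand-in for a real test program.
C_SOURCE = '#include <stdio.h>\nint main(void) { puts("hello"); return 0; }\n'


def build_command(compiler, flags, source, output):
    """Return the argv list for one compile-and-link step."""
    return [compiler, *flags, "-o", output, source]


def percent_reduction(before, after):
    """Size reduction of `after` relative to `before`, in percent."""
    if before <= 0:
        raise ValueError("before must be positive")
    return 100.0 * (before - after) / before


def measure(compiler="gcc"):
    """Build C_SOURCE with each flag set and return {name: size in bytes}."""
    sizes = {}
    with tempfile.TemporaryDirectory() as tmp:
        src = os.path.join(tmp, "hello.c")
        with open(src, "w") as f:
            f.write(C_SOURCE)
        for name, flags in FLAG_SETS.items():
            out = os.path.join(tmp, name)
            subprocess.run(build_command(compiler, flags, src, out), check=True)
            sizes[name] = os.path.getsize(out)
    return sizes


if __name__ == "__main__":
    if shutil.which("gcc") is None:
        print("gcc not found; skipping the build")
    else:
        try:
            sizes = measure()
        except (OSError, subprocess.CalledProcessError) as err:
            print(f"build failed: {err}")
        else:
            base = sizes["baseline"]
            for name, size in sizes.items():
                print(f"{name:10s} {size:8d} bytes  {percent_reduction(base, size):+.1f}%")
```

On a program this small the differences may be negligible or even go the other way; the savings reported in the talk came from larger code bases.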

A few years ago, there was keen interest in an LLVM Linux project that used the Clang front end to the LLVM compiler to compile the Linux kernel for performance and size optimizations. “It is possibly better than what you can get with gcc LTO today, but the project has been stalled since 2015,” said Opdenacker. In response, an audience member suggested the project was still alive.

Using the Clang front end for the LLVM compiler brings even more footprint savings than gcc LTO. Opdenacker ran some tests using a program called OggEnc that consists of a single C source file. He compared Clang 3.8.1 with gcc 6.2 on x86_64, and saw a 5 percent reduction “out of the box without doing anything.” gcc, however, can offer greater reductions when compiling very small programs, he added.

Opdenacker also mentioned some patches proposed by Andi Kleen in 2012 built around gcc LTO. They promised performance improvements and a reduction of as much as 6 percent of unused code on ARM systems. “Unfortunately, the patches caused some new problems so it wasn’t accepted,” he added. “The kernel developers were afraid of creating new bugs that were hard to track down. But maybe it’s worth trying again.”

Another compiler technique available to ARM users is to compile for the Thumb instruction set (-mthumb), which offers a mix of 16- and 32-bit instructions, instead of the all-32-bit ARM instruction set (-marm). Some compilers, such as Ubuntu’s, compile to Thumb by default, said Opdenacker. Using OggEnc, the Thumb build was 6.8 percent smaller than the ARM build. He conceded, however, that this was not a definitive test, as his compiler also compiled parts of the program using the ARM set.

Since Linux 3.18, developers have been able to reduce kernel size by using the “make tinyconfig” command, which combines “make allnoconfig” with a few additional settings that reduce size. “It uses gcc optimize for size, so the code may be slower but it’s smaller,” said Opdenacker. “You turn on kernel XZ compression, and you save about 6 to 10KB.”
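One way to check whether an existing configuration already carries such settings is to inspect its .config file. The sketch below assumes that the two settings in the quote correspond to the mainline Kconfig options `CONFIG_CC_OPTIMIZE_FOR_SIZE` and `CONFIG_KERNEL_XZ`; the helper names and the sample configuration are hypothetical.

```python
import re

# Assumed mapping of the settings described above to Kconfig option names.
SIZE_OPTIONS = ["CONFIG_CC_OPTIMIZE_FOR_SIZE", "CONFIG_KERNEL_XZ"]

_SET = re.compile(r"^(CONFIG_\w+)=(.*)$")
_UNSET = re.compile(r"^# (CONFIG_\w+) is not set$")


def parse_config(text):
    """Map each option in a kernel .config to its value (None if unset)."""
    options = {}
    for line in text.splitlines():
        line = line.strip()
        match = _SET.match(line)
        if match:
            options[match.group(1)] = match.group(2)
            continue
        match = _UNSET.match(line)
        if match:
            options[match.group(1)] = None
    return options


def missing_size_options(text, wanted=SIZE_OPTIONS):
    """Return the wanted options that are not set to 'y' in the config."""
    options = parse_config(text)
    return [name for name in wanted if options.get(name) != "y"]


# Hypothetical .config excerpt, for illustration only.
SAMPLE_CONFIG = """\
CONFIG_CC_OPTIMIZE_FOR_SIZE=y
# CONFIG_KERNEL_XZ is not set
CONFIG_KERNEL_GZIP=y
"""

if __name__ == "__main__":
    print(missing_size_options(SAMPLE_CONFIG))
```

Here the sample configuration optimizes for size but still uses gzip compression, so only the XZ option is reported as missing.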

You can find several tinification opportunities in the Linux kernel by looking for obj-y in kernel Makefiles, corresponding to code that is always included in the kernel binary. “For example, you may be able to compile the kernel without ptrace support, which on ARM takes up 14KB,” said Opdenacker.

It’s a good idea to “study your compile logs and see if everything is really needed,” said Opdenacker. “You can decide how useful it is and how difficult it is to remove. You can also look for size regressions using the bloat-o-meter command, which compares two vmlinux files to see what has increased in size between versions.”
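The idea behind such a comparison can be shown with a simplified sketch. This is not the kernel’s bloat-o-meter script. It assumes each build’s symbol sizes are available as text lines of the form `<hex size> <type> <name>`, the layout printed by `nm --size-sort`, and the function names and sample data are hypothetical.

```python
def parse_nm_sizes(text):
    """Parse '<hex size> <type> <name>' lines into {name: size in bytes}."""
    sizes = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) != 3:
            continue  # skip blank or unexpected lines
        size_hex, _type, name = parts
        # Sum sizes if a name appears more than once (e.g. static duplicates).
        sizes[name] = sizes.get(name, 0) + int(size_hex, 16)
    return sizes


def size_deltas(old, new):
    """Return [(name, delta)] for changed symbols, largest growth first."""
    deltas = []
    for name in set(old) | set(new):
        delta = new.get(name, 0) - old.get(name, 0)
        if delta:
            deltas.append((name, delta))
    return sorted(deltas, key=lambda item: (-item[1], item[0]))


# Hypothetical symbol listings for two builds, for illustration only.
OLD_BUILD = """\
00000010 T foo
00000020 T bar
00000008 t gone
"""
NEW_BUILD = """\
00000018 T foo
00000020 T bar
00000040 T added
"""

if __name__ == "__main__":
    old, new = parse_nm_sizes(OLD_BUILD), parse_nm_sizes(NEW_BUILD)
    for name, delta in size_deltas(old, new):
        print(f"{name:10s} {delta:+d}")
    print(f"total      {sum(new.values()) - sum(old.values()):+d}")
```

Sorting by growth puts the worst regressions at the top, which is usually where a size investigation starts.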

User space reductions

To reduce user space footprint on simpler programs, Opdenacker suggests that instead of busybox, developers should try the toybox set of Linux command line utilities, which is now baked into Android. “Toybox has the same applications and mostly the same features as busybox, but uses only 84KB instead of 100KB,” he added. “If you just want a shell with just a few command line utilities, toybox could save you a few thousand bytes, though it’s less configurable.”

Another technique is to switch C/POSIX standard library implementations. The newer musl libc uses less space than uclibc and glibc. Opdenacker described one test on the hello.c program under gcc 6.3 and busybox in which musl used only 7.3KB vs. 67KB for uclibc-ng 1.0.22 vs. 49KB using glibc with gcc 6.2.

To reduce file system size, Opdenacker recommends booting on initramfs for small file systems. “It lets you boot earlier because you don’t have to initialize file-system and storage drivers.” For bigger RAM sizes, he suggests using compressed file systems such as SquashFS or JFFS2, or a compressed RAM block device such as ZRAM.

“There’s still significant room for improvement in user and kernel space reduction,” concluded Opdenacker. However, when he asked if the community should resurrect the Kernel Tinification project, he was met with a somewhat tepid response.

“These days you can’t even buy an 8MB RAM card,” said one attendee. “It’s an interesting exercise, but I don’t know that there’s a whole lot of payback.” Another developer noted that one problem with the Tinification project was that it “removed things that weren’t needed in really small memory configurations, but the minute you go to the cloud all that stuff is required.”

If Linux has indeed run into some practical limits to reducing footprint, there will be new opportunities for simpler RTOSes, including several open source platforms like Zephyr and FreeRTOS, to operate small footprint endpoints on microcontrollers. Yet that does not mean Linux is only useful for IoT gateways. With the growth of AI- and multimedia-related IoT nodes, Linux may be the only game in town. Meanwhile, it’s good to know there are some new tricks available to create the minimalist embedded masterpiece of your dreams.

