With DevOps taking center stage in the software industry, the job title ‘DevOps Engineer’ is generating a lot of buzz. Here are some thoughts that can guide you toward becoming a great DevOps engineer.

What is DevOps?

The term DevOps was coined as a combination of DEVelopment and OPerationS. According to Wikipedia:

DevOps is a term used to refer to a set of practices that emphasize the collaboration and communication of both software developers and information technology (IT) professionals while automating the process of software delivery and infrastructure changes. It aims at establishing a culture and environment where building, testing, and releasing software can happen rapidly, frequently, and more reliably.

Digital transformation is happening in every sector today, and businesses that don’t adapt to changing technology risk falling behind in the coming years. Automation is the key: companies want to eliminate repetitive tasks and automate as much as possible to increase productivity. This is where DevOps comes into the picture. Although it is derived from agile and lean practices, it is still relatively new to the software industry.

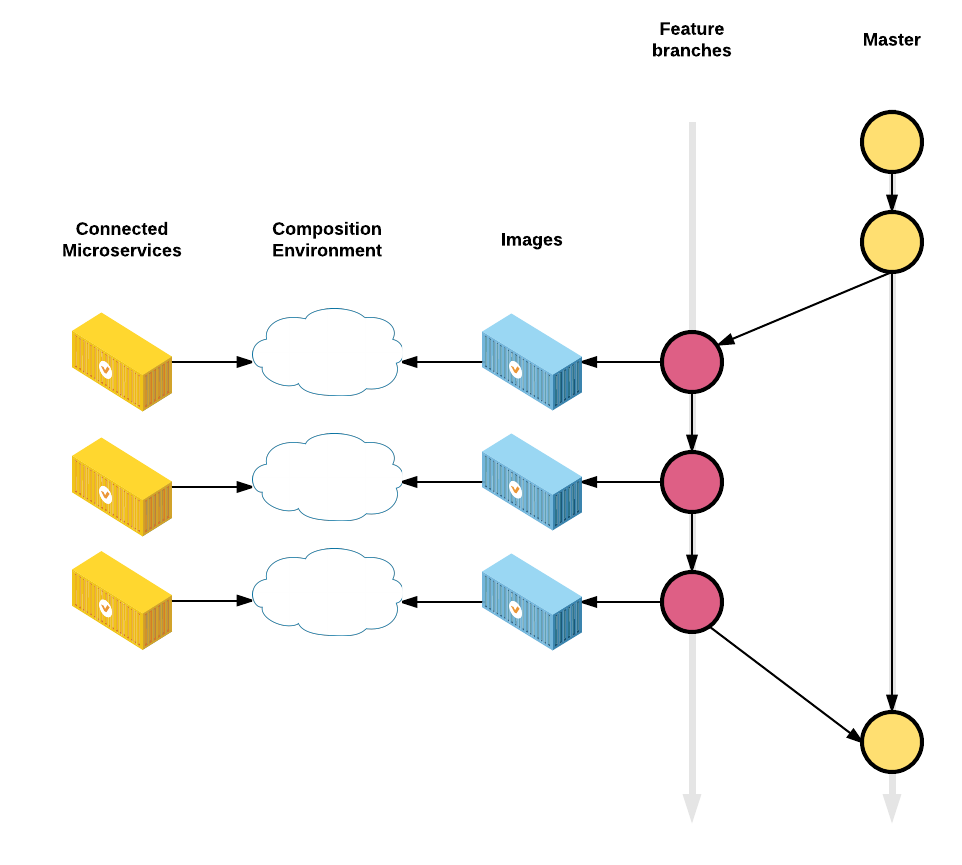

With the increasing use of tools and platforms like Docker, AWS, Puppet, and GitHub, companies can adopt automation more easily and put it to work.

What is a DevOps Engineer?

A major part of adopting DevOps is creating a better working relationship between development and operations teams. Suggestions for doing this include seating the teams together, involving them in each other’s processes and workflows, and even creating one cross-functional team that does everything. In all these methods, Dev is still Dev and Ops is still Ops.

The term DevOps Engineer tries to blur this divide between Dev and Ops altogether and suggests that the best approach is to hire engineers who can be excellent coders as well as handle all the Ops functions. In short, a DevOps engineer can be a developer who can think with an Operations mindset and has the following skillset:

- Familiarity and experience with a variety of Ops and Automation tools

- Great at writing scripts

- Comfortable with frequent testing and incremental releases

- Understanding of Ops challenges and how they can be addressed during design and development

- Soft skills for better collaboration across the team

How can you be a great DevOps Engineer?

The key to being a great DevOps Engineer is to focus on the following:

- Know the basic concepts of DevOps and get into the mindset of automating almost everything

- Know about the different DevOps tools like AWS, GitHub, Puppet, Docker, Chef, New Relic, Ansible, Shippable, JIRA, Slack, etc.

- Org-wide Ops mindset:

  There are many common Ops pitfalls that developers need to consider while designing software. Reminding developers of these during design and development goes a long way toward avoiding them altogether, rather than running into issues and then fixing them.

  Try to standardize this process by creating a checklist that is part of a template for design reviews.

- End-to-end collaboration and helping others solve issues

- You should be a scripting guru: Bash, PowerShell, Perl, Ruby, JavaScript, Python – you name it. You must be able to write code to automate repeatable processes.
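
To make "automating a repeatable process" concrete, here is a minimal Python sketch of one such chore: clearing out files that have not been modified for a number of days. The task itself, the function names, and the `dry_run` flag are all assumptions chosen for illustration, not something a particular tool prescribes.

```python
import time
from pathlib import Path

SECONDS_PER_DAY = 86400


def find_stale_files(directory, max_age_days, now=None):
    """Return files under `directory` last modified more than `max_age_days` ago."""
    now = time.time() if now is None else now
    cutoff = now - max_age_days * SECONDS_PER_DAY
    return sorted(
        path
        for path in Path(directory).rglob("*")
        if path.is_file() and path.stat().st_mtime < cutoff
    )


def clean_stale_files(directory, max_age_days, dry_run=True):
    """Delete stale files and return them.

    With dry_run=True (the default) nothing is deleted; the function only
    reports what would be removed.
    """
    stale = find_stale_files(directory, max_age_days)
    for path in stale:
        if not dry_run:
            path.unlink()
    return stale
```

Defaulting to a dry run is a deliberate choice here: a script that deletes things should show what it would do before it is trusted to run unattended.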

Factors to measure DevOps success

- Deployment frequency

- Lead time for code changes

- Rollback rate

- Usage of automation tools for CI/CD

- Test automation

- Meeting business goals

- Faster time to market

- Customer satisfaction %
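
The first three factors in this list can be computed directly from deployment records. The sketch below shows one way to do it in Python. The `Deployment` record, its field names, and the sample data are hypothetical, and the definitions used (deployments per day, median commit-to-production time, share of deployments rolled back) are one reasonable reading of these metrics rather than the only one.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass
class Deployment:
    committed_at: datetime  # when the change was committed
    deployed_at: datetime   # when the change reached production
    rolled_back: bool       # whether the release was later rolled back


def deployment_frequency(deploys, period_days):
    """Average number of deployments per day over the period."""
    return len(deploys) / period_days


def median_lead_time_hours(deploys):
    """Median time from commit to production, in hours."""
    return median(
        (d.deployed_at - d.committed_at).total_seconds() / 3600 for d in deploys
    )


def rollback_rate(deploys):
    """Fraction of deployments that were rolled back."""
    return sum(d.rolled_back for d in deploys) / len(deploys)


# Made-up sample data covering one week.
deploys = [
    Deployment(datetime(2024, 1, 1, 9), datetime(2024, 1, 1, 15), False),
    Deployment(datetime(2024, 1, 2, 10), datetime(2024, 1, 3, 10), True),
    Deployment(datetime(2024, 1, 4, 8), datetime(2024, 1, 4, 20), False),
    Deployment(datetime(2024, 1, 5, 9), datetime(2024, 1, 5, 11), False),
]

print(f"Deployments per day: {deployment_frequency(deploys, 7):.2f}")
print(f"Median lead time (hours): {median_lead_time_hours(deploys):.1f}")
print(f"Rollback rate: {rollback_rate(deploys):.0%}")
```

In practice these records would come from your CI/CD system or deployment logs; tracking the numbers over time matters more than any single reading.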

That covers what DevOps is and how to be good at it.